Center for Excellence in Teaching


Short essay question rubric

Sample grading rubric an instructor can use to assess students’ work on short essay questions.

Download this file

Download this file [62.00 KB]

Back to Resources Page

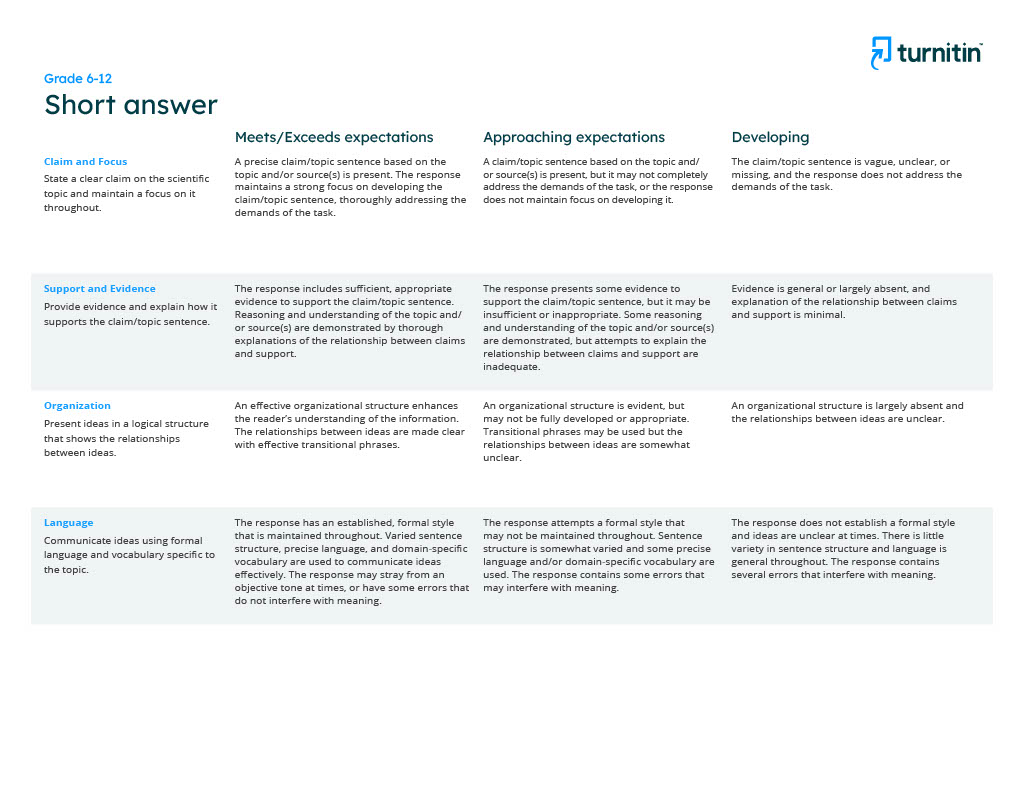

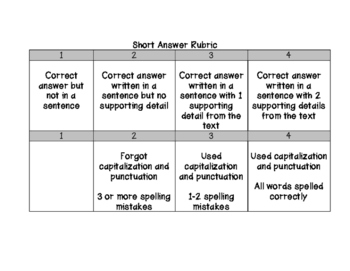

Short answer rubric (US English)

Rubric for short-answer writing on a topic.

Rubric suitable for short-answer, formative assignments that address a task on a topic. Use this rubric when asking students to directly, but briefly, defend a claim about or explain a topic.

Consider using the Short Answer QuickMark Set with this rubric. These drag-and-drop comments were tailor-made by veteran educators to give actionable, formative feedback directly to students. While they were explicitly aligned to this particular rubric, you can edit or add your own content to any QuickMark.

This rubric is available and ready to use in your Feedback Studio account. However, if you would like to customize its criteria, you can "Duplicate this rubric" in your Feedback Studio account and then edit the rubric as needed. Or, you can download this .rbc file and then import it into your account to begin editing the content.


Short Answer Questions: A Great Middle Ground

- January 12, 2011

- Susan Codone PhD

Stronger than multiple choice, yet not quite as revealing (or time consuming to grade) as the essay question, the short answer question offers a great middle ground – the chance to measure a student’s brief composition of facts, concepts, and attitudes in a paragraph or less.

The University of Wisconsin Teaching Academy calls short answer questions “constructed response”, or “open-ended questions that require students to create an answer.” The Center for Teaching Excellence at the University of Illinois at Urbana-Champaign says that short answer questions allow students to present an original answer.

Like all assessment items, a short answer question should clearly assess a specific learning objective. It should ask students to select relevant facts and concepts and integrate them into a coherent written response. Question 1, below, is a typical example of a short answer question requiring such a constructed response.

This question sets up a scenario with an expert role, a community history, and an environmental problem and asks the students to use a specific problem-solving strategy — the 4 A’s — to frame a response, which can most likely be completed in one paragraph.

Question 2 is slightly more problematic because of a very common error in constructing short answer questions.

This question, while well-intended, actually asks two questions. This likely will leave the student confused as to which question is more important. Additionally, the student will have to write a longer response to answer both questions, leading this particular test question more toward an essay response than a short answer. Short answer questions should always ask one clear question, rather than confusing the issue with multiple queries.

Finally, one strategy professors use is to post a rubric in the test so that students will know how points will be distributed. Question 3, below, both shows such a rubric and demonstrates another common problem in short answer question development.

Note that in this question a scoring distribution is provided to the students: not only do they know the question is worth six points, but they also know immediately that three points will be awarded for fully answering the question, two points for legibility, and the final point for spelling and grammar. Question 3 also demonstrates another common error: writing questions that close off a student’s potential answer. A better question would ask “How might two accidents be an acceptable level of risk…”, in order to promote a more meaningful answer.
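The point distribution in Question 3 (3 + 2 + 1 = 6) can be written down as data and checked mechanically. The sketch below is a minimal illustration, assuming each component is scored as a whole number between zero and its maximum; the criterion labels and the `score_response` helper are hypothetical names, not part of the original question.

```python
# Hypothetical encoding of the Question 3 point distribution (6 points total).
QUESTION_3_RUBRIC: dict[str, int] = {
    "fully answers the question": 3,
    "legibility": 2,
    "spelling and grammar": 1,
}


def score_response(awarded: dict[str, int], rubric: dict[str, int]) -> int:
    """Return the total score, rejecting unknown criteria or out-of-range points."""
    total = 0
    for criterion, points in awarded.items():
        if criterion not in rubric:
            raise KeyError(f"unknown criterion: {criterion}")
        if not 0 <= points <= rubric[criterion]:
            raise ValueError(f"{criterion}: {points} is outside 0..{rubric[criterion]}")
        total += points
    return total


if __name__ == "__main__":
    # The components add up to the six points announced to students.
    print(sum(QUESTION_3_RUBRIC.values()))  # 6
    print(score_response(
        {"fully answers the question": 2, "legibility": 2, "spelling and grammar": 1},
        QUESTION_3_RUBRIC,
    ))  # 5
```

Publishing the distribution this way makes it explicit that content carries half the points, which is exactly the information the rubric in Question 3 gives students up front.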

Short answer questions are a great middle ground for professors. They are easier to develop than multiple choice and generate a more in-depth answer. Because of their brevity, they are easier to grade and they encourage students to integrate information into a coherent written answer. They can measure many types of knowledge when phrased correctly — even divergent thinking and subjective and imaginative thought. Best of all, they can provide professors with an open window into student learning — the real purpose of assessment.

Susan Codone, PhD is an associate professor in the Department of Technical Communication, School of Engineering at Mercer University.


- Schoology Learning

Short Answer/Essay: Standard Question Type

Use the Short Answer/Essay question type to ask questions requiring students to provide a written response. Students can also insert images, links, and other supporting materials in their responses.

Question Setup

Write your question or writing prompt in the Compose question field.

The Rich Text Editor appears when you click your cursor into the Compose question field. Hover your mouse over the individual icons to view the tooltip explaining the function of each button.

You may copy and paste images into this area from a browser only. If you wish to insert an image directly from your device, use the Image tool in the Rich Text Editor.

Enable rich text options in the Text formatting options for students area. By default, students are permitted to include bolded, italicized, underlined, bulleted, or numbered text in their responses.

Click the highlighted rich text option to remove that option for students.

Enter a word limit for student responses in the Word limit field and determine whether to allow submissions over the word limit or not.

Enter instructions into the Placeholder text for students field to display a text string that will be the default in the answer box when the student sees the question. For example, "Type your answer here."

Scoring Instructions

If you are creating a Short Answer/Essay question in AMP, you will have access to a Scoring Instructions field. As an instructor, you can access this field while scoring a student’s submission.

Align Learning Objectives or Rubrics

Click Learning Objectives to open the Learning Objectives browser window.

Click Browse/Search to find and add specific objectives.

Click Manage for objectives already aligned to the question.

Click Confirm to save the learning objectives.

Click Align Rubric to create a new rubric, or to add one from your Resources.

Once a rubric has been aligned, you can click the rubric icon (four squares) to preview the rubric, or click X to remove the rubric from the question.

Check the Show to students box to display the rubric to students when they are taking the test.

Preview and Edit Question

To see how the question will appear to a student taking it in an assessment, click Preview Question.

Once you are finished editing the question, click Save.


Short answer questions

Short answer questions (or SAQs) can be used in examinations or as part of assessment tasks.

They are generally questions that require students to construct a response. Short answer questions require a concise and focused response that may be factual, interpretive or a combination of the two.

SAQs can also be used in a non-examination situation. A series of SAQs can comprise a larger assessment task that is completed over time.

Advantages and limitations

Advantages

- Questions can reveal a student’s ability to describe, explain, reason, create, analyse, synthesise, and evaluate.

- Gives opportunities for students to show higher level skills and knowledge.

- Allows students to elaborate on responses in a limited way.

- Provides an opportunity to assess a student’s writing ability.

- Can be less time consuming to prepare than other item types.

- Can be structured in a variety of ways that elicit a range of responses, from a few words to a paragraph.

Limitations

- Can limit the range of content that can be assessed.

- Favours students who have good writing skills.

- Can potentially be difficult to moderate.

- Can be time consuming to assess.

- Questions need to be well written so that the standard of answers can be differentiated in terms of assessment.

Guidelines for constructing short answer questions

- Effective short answer questions should provide students with a focus (types of thinking and content) to use in their response.

- Avoid indeterminate, vague questions that are open to numerous and/or subjective interpretations.

- Select verbs that match the intended learning outcome and direct students in their thinking.

- If you use ‘discuss’ or ‘explain’, give specific instructions as to what points should be discussed/explained.

- Delimit the scope of the task to avoid students going off on an unrelated tangent.

- Know what a good response would look like and what it might include reference to.

- Practice writing a good response yourself so you have an exemplar and so you are aware of how long it may take to answer.

- Provide students with practice questions so they are familiar with question types and understand time limitations.

- Distribute marks based on the time required to answer.

Checklist for reviewing a question

- Does the question align with the learning outcome/s?

- Is the focus of the question clear?

- Is the scope specific and clear enough for students to be able to answer in the time allocated?

- Is there enough direction to guide the student to the expected response?

Examples of short answer questions

Your questions can draw on a range of cognitive skills and action verbs.

List/identify

This SAQ simply requires students to identify or list. The question may indicate the scope of requirements, e.g. “List three…” or “List the most important…”.

For example:

- “List the typical and atypical neuroleptics (anti-psychotics) used to treat schizophrenia.”

Define

This question asks students to define a term or idea.

- “What is the capital gains tax?”

- “Define soundness as an element of reasoning”.

Explain

This is a question where students are asked to provide an explanation. The explanation may address what, how or why.

- “Why does the demand for luxury goods increase as the price increases?”

- “What are the important elements of a well-presented communication strategy?”

- “Why does an autoantibody binding to a post-synaptic receptor stop neuron communication?”

- “Explain the purpose of scaffolding as a teaching strategy”.

Justify/support

A question that includes a requirement to justify or support can ask students to provide an example of one or several specific occurrences of an idea or concept.

- “Use 2 examples to show how scaffolding can be used to improve the efficacy of teaching and learning”.

Relate

This kind of question asks students to discuss how two or more concepts or objects are related. Is one different from the other? If so, how? Are they perfectly alike? Does one represent the other in some way?

- “Why would a rise in the price of sugar lead to an increase in the sales of honey?”

Combination

Types of questions can be combined.

- “List the three subphyla of the Phylum Chordata. What features permit us to place them all within the same phylum?”

- “What benefits does territorial behaviour provide? Why do many animals display territorial behaviour?”

- “Will you include short answer questions on your next exam? Justify your decision with two to three sentences explaining the factors that have influenced your decision.”


AP® US History

How to Improve AP® US History Student Success on Short Answer Responses

- The Albert Team

- Last Updated On: March 1, 2022

The APUSH Redesign (and the Re-Redesign that followed immediately this year) has brought a great deal of uncertainty, angst and confusion to many teachers. This is my eighth year teaching the course, and while I had certainly reached a comfort level with the traditional multiple-choice and free-response questions, I have come around to almost all of the aspects of the redesign.

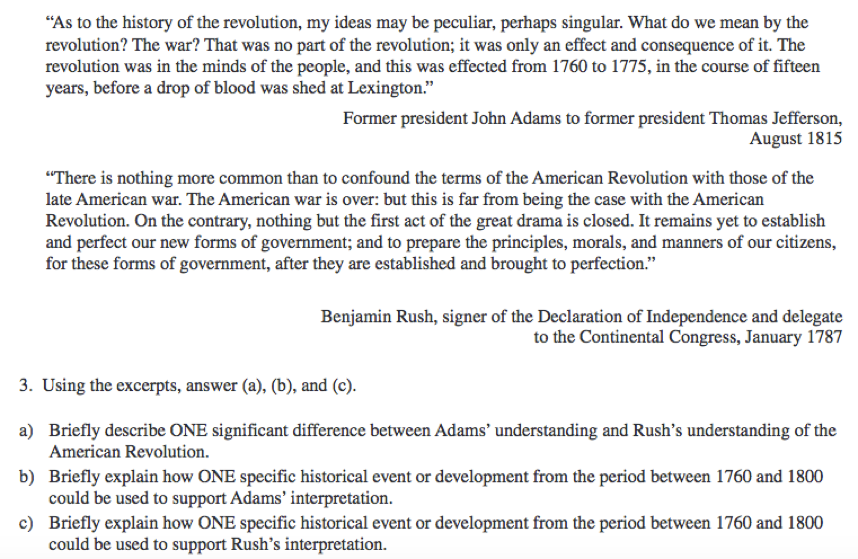

In my opinion, the best addition to the test is the new short answer section, found in Section 1 of the exam (along with the stimulus multiple-choice questions). Last June, I was fortunate enough to attend the AP® US History Reading in Louisville, Kentucky. For one week, I graded the same Short Answer Question over 3,500 times (for the record, I never want to read about John Adams or Benjamin Rush and their interpretation of the American Revolution again). While I certainly do not claim to be an expert, or to have any “insider information” on the inner workings of the College Board, I am happy to share my insights and advice based on my experiences and conversations with colleagues.

Short Answer Format

Each short answer prompt has three parts (A, B, and C). A prompt may be built around any of the following:

- Two different secondary sources written by historians with varying perspectives on an event or time period.

- Primary sources (quotations, cartoons, maps, etc.)

- A simple prompt or identification question with no stimulus

Within a prompt:

- The parts vary in difficulty, meaning that certain points may be more challenging to earn (for example, one part might ask for simple fact recall, while another part might require higher-level analysis).

- Different parts of the same question can build off of or reference each other (for example, Part A may ask students to explain a quotation, and Part B might ask them to provide an example of something related to that same quotation).

- Some of the prompts will have “internal choice.” This means that students have options within the question. For example, a prompt may ask students to “explain why ONE of the following was the most significant cause of the Civil War: The Dred Scott Decision, Bleeding Kansas, or publication of Uncle Tom’s Cabin.” Students can choose any of the options, describing WHAT it is and WHY it is the most significant.

Scoring Short Answer Questions

There is not really a rubric for these types of questions, like there is for the Document-Based Question (DBQ) or Long Essay Question (LEQ). Students are simply scored on whether or not they answered the prompt correctly. Students either receive one point or zero points for each part of the question (A, B, and C), for a maximum total of three points per prompt.

Each letter is scored separately, meaning that students completely missing the point on Part A does not necessarily mean they are doomed for Parts B and C.
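The scoring rule described here (one point or zero per part, parts scored independently, three points maximum) is simple enough to state as a function. This is a minimal sketch, assuming a reader's judgment on whether a part "meets the threshold" can be recorded as a yes/no value; the function name and input format are illustrative assumptions, not College Board tooling.

```python
PARTS = ("A", "B", "C")


def score_saq(meets_threshold: dict[str, bool]) -> int:
    """Score one short answer prompt: 1 point per part that meets the threshold.

    Parts are scored independently, so missing Part A does not affect B or C.
    A part absent from the dict (e.g. left blank) earns 0.
    """
    unknown = set(meets_threshold) - set(PARTS)
    if unknown:
        raise ValueError(f"unexpected parts: {sorted(unknown)}")
    return sum(1 for part in PARTS if meets_threshold.get(part, False))


if __name__ == "__main__":
    print(score_saq({"A": False, "B": True, "C": True}))  # 2
    print(score_saq({"A": True, "B": True, "C": True}))   # 3
```

Because there is no partial credit within a part, the only lever a student controls is whether each part clears the threshold, which is why writing something for every part matters.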

Readers are instructed that students receive credit as long as they “meet the threshold,” meaning they have completed the minimal amount needed to answer the question. While what constitutes the threshold depends on the question, this essentially means that some students may earn all points by going into incredible depth, giving detailed and intricate examples, and taking up the entire page, while others may simply answer in a sentence or two for each portion, barely meet the requirements, and still receive all three points. While I would never encourage my students to do the bare minimum, I do let them know that if they are short of time or unsure, it is better to write something than nothing.

If the amounts of historically accurate and inaccurate information are roughly equal, the reader has discretion over whether or not to award the point.

One thing I was encouraged by at the reading is that in general, readers were told to give students the benefit of the doubt when scoring responses. The goal was to award them points whenever merited, not to penalize or deduct points based on minor mistakes or misunderstandings.

Ten Tips for Student Success

To help illustrate my advice, I will refer to the prompt that still haunts me in my dreams, Short Answer Question #3 from the 2015 AP® US History Exam:

Source: 2015 AP® US History Exam, Short Answer Section from AP® Central (College Board)

1. Put it in Own Words

To receive full credit for responses, students must fully answer the question using their own words. For the above prompt, many students parroted the prompts or excessively quoted them for Part A rather than describing the differences in their own words. For example, students would regularly say a difference between Adams and Rush was that:

“Adams thought the revolution was in the minds of the people, while Rush said it would not be complete until principles, morals and manners of citizens were established.”

Students would not receive the point for this, as it is simply paraphrasing what is said, and does not demonstrate genuine understanding.

An example of a more successful response would be:

“Adams believed the American Revolution was not the actual War for Independence, but rather the psychological change in mindset of Patriot colonists leading up to the conflict. Rush agrees with Adams that true revolution was not the war, but argues that the revolution is incomplete until stable federal government is established.”

2. Provide Specific Examples: HOW and WHY?

Parts B and C of the prompt ask the student to provide evidence that would support the claims of both Adams and Rush. When doing so, students should provide specific examples AND explain WHY they are relevant. For example, students might use the U.S. Constitution as an example that supports Rush’s interpretation of the revolution, as this document officially established the structure of the federal government and provided a Bill of Rights that defined people’s basic rights.

3. Get Right to the Point

No introductions are needed, as space and time are limited and these are not essays. Nor is a thesis required or terribly helpful. Students should dive right in and start directly answering the question.

4. What is Acceptable?

Complete sentences are required. Sentence fragments or bullet points will not be scored. Readers were very strict in enforcing this.

Use of common abbreviations is acceptable (for example, FDR, WPA, FBI, etc.).

With limited time and space, it is better to go into depth and explain ONE example rather than superficially list multiple.

5. Stay in the Time Period

One of the most common mistakes is that students do not stay in the time period. For example, if the students use the Great Awakening as evidence that supports Adams’ quote, they would not receive the point because the religious movement preceded the period of 1760-1775.

6. Stay in the Boxes

Students need to be careful to leave themselves enough room to address all three parts on the 23-line page. Students are NOT permitted to write onto a second page or even outside the boxed area. Anything written outside the box will not be scored.

7. Make Sure Evidence and Examples are ESSENTIAL

If a question asks for ONE similarity or difference, the readers are actually looking for the MAIN or ESSENTIAL similarity or difference. For example, students could not simply say:

“Adams thought the Revolution occurred between 1760-1776, while Rush thought the Revolution was after the war.”

This would not count because it is too superficial and simplistic. It is not the MAIN difference described in the text.

8. Watch for Categories of Analysis or Historical Themes

Students should watch for categories of analysis (political, economic, cultural, social, intellectual). Often students give examples that do not match the category they are being asked to identify.

Students should assume the reader has no background knowledge and fully explain their examples and evidence.

9. Minor Errors will NOT Kill your Score

Minor errors do not necessarily mean students will not be awarded points. For example, for Part C, many students used Bacon’s Rebellion as an example that a stable federal government was needed to prevent uprisings or create a fairer and more equal society. They are mistaking Bacon’s Rebellion for Shays’ Rebellion , but since their description of the events is correct and they simply switched the names, they still would be awarded the point for their example. I have not shared this with my students per se, as I hold them to high expectations and want them to focus on knowing their content and striving for accuracy, but I do stress to them that even if you don’t know the law or person, describe them as best you can, as this is better than leaving it blank.

10. The Debate Over How to Organize Writing

There was a lot of debate at the reading as to which is better: writing responses in paragraph form without letter labels, or writing separate sets of complete sentences labeled by the specific letter being addressed. The benefit of writing in paragraph form without labels was that students were free to address the prompt in whatever order they preferred, and for good writers, it often had a more natural feel. Additionally, if students failed to answer Part A at the beginning, where they initially tried to, but eventually answered it later in the response, readers could still award the point when there were no labeled letters. If students labeled their sentences with the corresponding letters, however, they could not get credit for an answer given in a different section (for example, some students failed to fully answer A in the section so labeled but eventually got to it in Part C, yet they could only receive the point in the labeled section). On the other hand, a benefit of labeling was that it ensured students actually fully addressed the specific questions for A, B, and C. Students who wrote in unlabeled paragraph form often forgot to answer parts or gave incomplete responses as they jumped from one part to another.

I advise my students to use a hybrid of these two approaches, as I believe it gives them the best of both worlds. I suggest my students label their sections so they do not forget any portions, but when they are done writing, they cross out the letters so that they can still be awarded points if they inadvertently address a part elsewhere in their response.

How to Teach the AP® US History Short Answer Question

1. Work with Students on Answering the Question

Students sometimes tend to have a tough time with these types of questions initially. Some jot down fragments of vague partial answers that do not go far enough; overachievers want to turn them into complex essays with introductions and transitions. It really is a skill that needs to be practiced and perfected. Student answers should be concise (hence the SHORT answer), yet thorough with specific examples.

In the beginning, our class worked on short answers together and as partners, and walked through and discussed good responses. I also pulled student samples from the College Board’s website and had students assess them and score them. This was a great activity in helping students see the difference between incomplete, borderline and exceptional responses.

Students should be in the practice of putting their responses in their own words, not paraphrasing, parroting, or quoting the sources’ language. Parroting does not demonstrate understanding, which is what the College Board is looking for. Working with students on putting responses in their own words is definitely worthwhile.

2. Expose Students to a Wide Variety of Historical Sources

Exposing your students to a wide variety of sources is great preparation for the Short Answer section (as well as the multiple choice and essays for that matter).

Looking at historians that differ in their ideological or other interpretations of history and discussing or debating in class helps students gain an understanding and appreciation of nuance and different points of view. Using Howard Zinn’s People’s History of the United States and Larry Schweikart’s Patriot’s Guide to American History gives students both liberal and conservative perspectives on key events in American history.

I also like to do Socratic Seminars or debates using secondary texts that take a compelling or unorthodox perspective and allow students to discuss whether they agree or disagree with the historian’s argument.

Additionally, primary source exposure can be beneficial in preparing students to read and comprehend texts that they could see in the prompts for any part of the exam, including short answers.

3. Timing is everything.

The short answer portion is part of Section 1, and students have 50 minutes specifically for these four questions after the multiple-choice section is completed. This gives students less than 13 minutes per question. Students need practice in this time crunch. Many students will want to spend more time planning and writing than they will have on exam day. I typically start out more lax early in the year, but by October or November, students need to be in the habit of reading the prompt quickly and thoroughly and moving into writing their responses under a time crunch.

Why I Have Learned to Love the Short Answer Question

The short answer section of the exam is a brand new addition to the AP® exam, but I actually believe it might be the most beneficial in many ways. Students used to be forced to memorize “everything” and were at the mercy of whatever random factoid the College Board would ask them on the multiple-choice section. With short answers, students can bring in relevant examples that they learned and recall. They don’t need to know “everything”; they just need to know some key things about each period. This can be reassuring to students and liberating for teachers who are trying to cram everything into their classes in the few short months before the AP® exam.

Additionally, what I like best about the Short Answer Question is that unlike the other types of questions, it is very obvious when students know their stuff (and conversely, when they have no clue what is going on). Multiple-choice can be “multiple guess,” and students can rationally narrow the distractors down and make an educated selection. Essay pages can be filled with fluff, and a simple thesis and analyzing a couple of documents may get them a couple of points. With the short answers, there is really nowhere for students to hide. They either know what the author is arguing, or they don’t. They either can provide an illustrative example, or they can’t. As a teacher, I love the pureness and authenticity of this type of assessment.


Ben Hubing is an educator at Greendale High School in Greendale, Wisconsin. Ben has taught AP® U.S. History and AP® U.S. Government and Politics for the last eight years and was a reader last year for the AP® U.S. History Short Answer. Ben earned his bachelor’s degree at The University of Wisconsin-Madison and his master’s degree at Cardinal Stritch University in Milwaukee, Wisconsin.


2 thoughts on “how to improve ap® us history student success on short answer responses”.

It was my understanding that even if students label A, B, and C, any content which could apply to any part of the question would be fair game. For example, a student might label A, B, and C in their answer but then provide a piece of evidence for B in the area marked A. This was confirmed for me as recently as Tuesday by some in-the-know people with regard to AP® World. Is it not the same for APUSH? Or is the information provided in the article incorrect?

Hi Liz, thanks for the question. We are not privy to the exact instructions that the College Board gives to its readers, so we cannot answer your question with certainty. We recommend erring on the side of caution and developing the habit of fully answering a question in the space for which it is labelled. This will guarantee that the readers count your response to the appropriate question.

Comments are closed.

Popular Posts

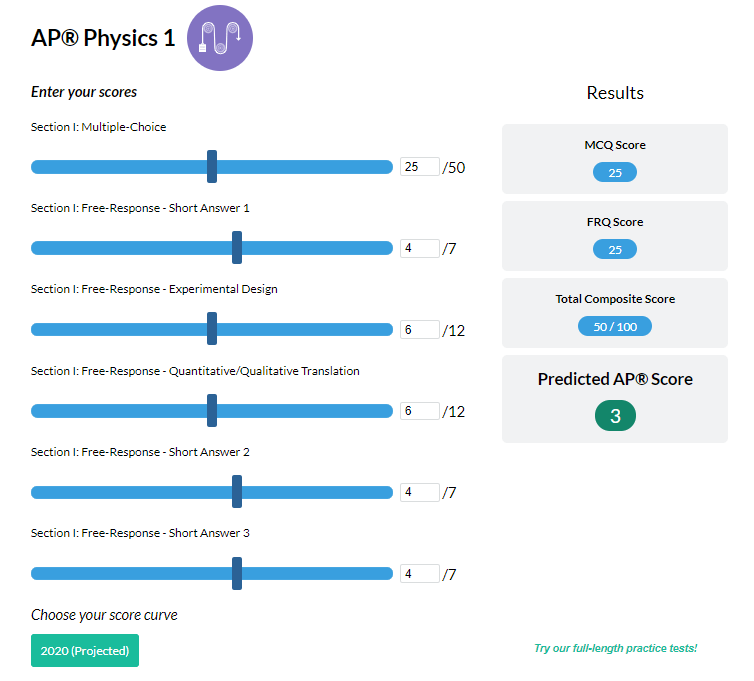

AP® Score Calculators

Simulate how different MCQ and FRQ scores translate into AP® scores

AP® Review Guides

The ultimate review guides for AP® subjects to help you plan and structure your prep.

Core Subject Review Guides

Review the most important topics in Physics and Algebra 1 .

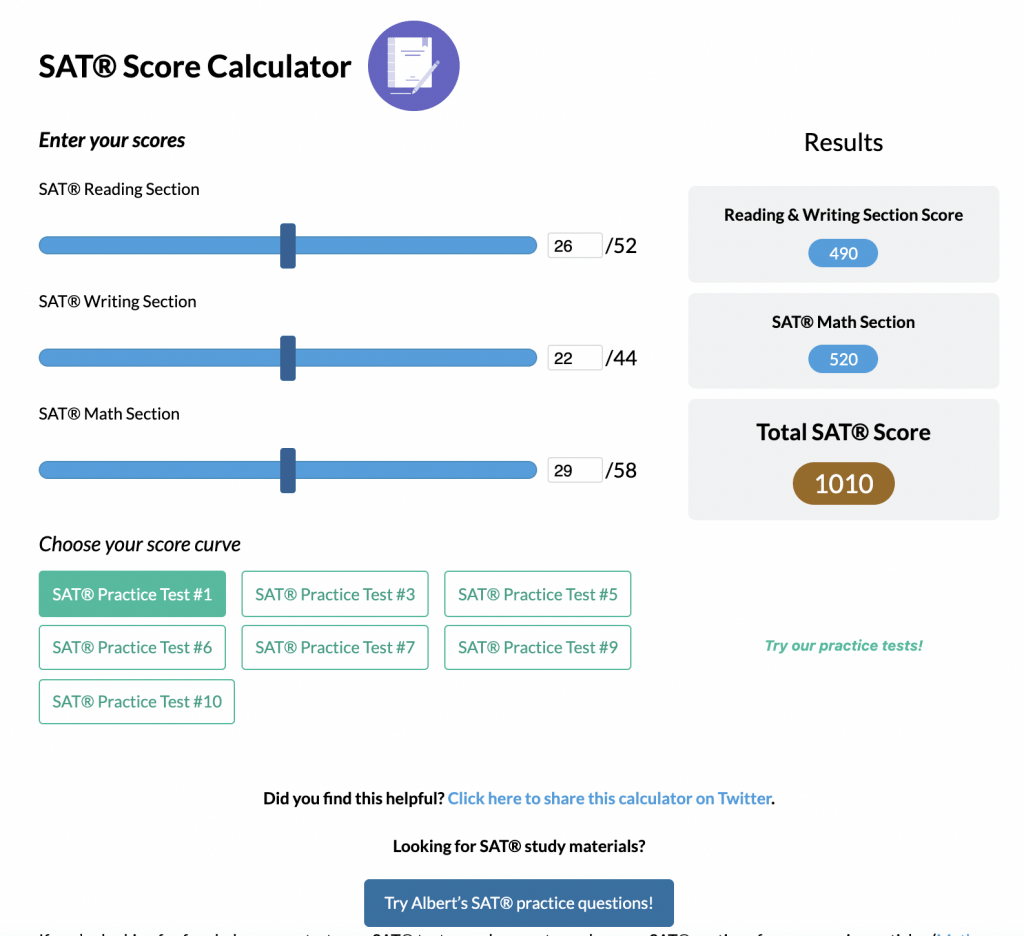

SAT® Score Calculator

See how scores on each section impacts your overall SAT® score

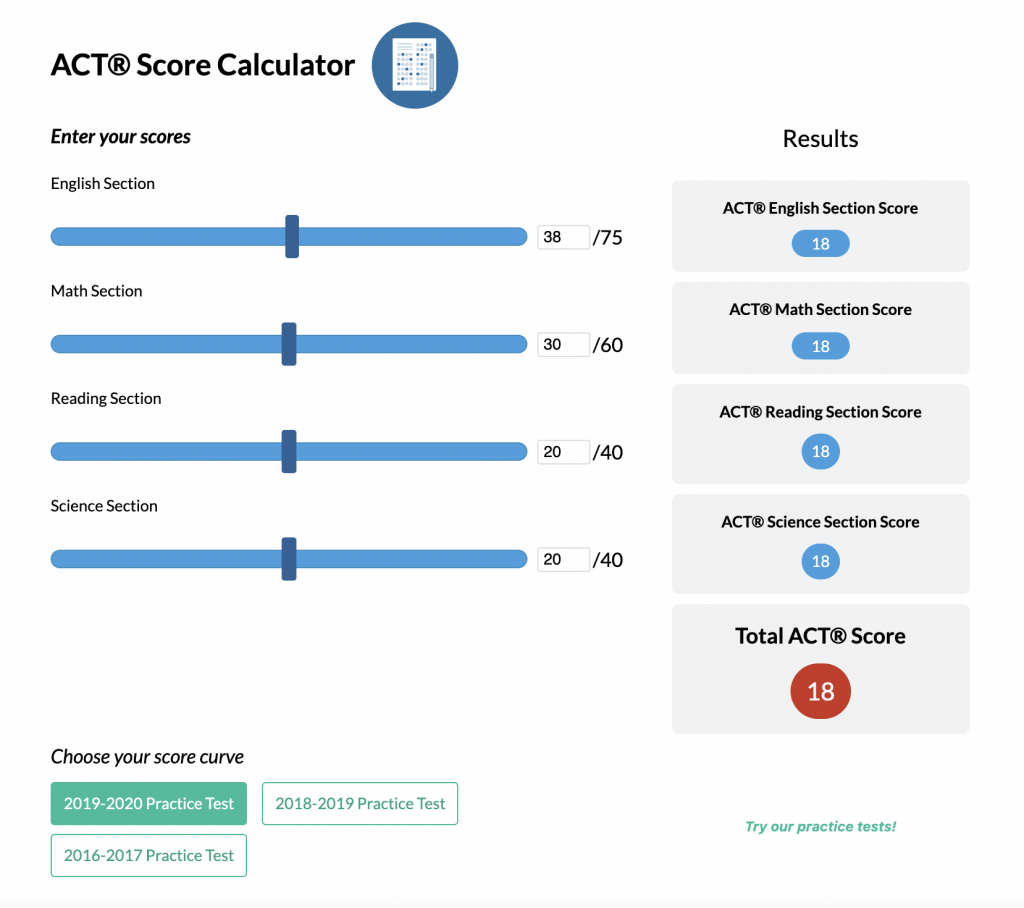

ACT® Score Calculator

See how scores on each section impacts your overall ACT® score

Grammar Review Hub

Comprehensive review of grammar skills

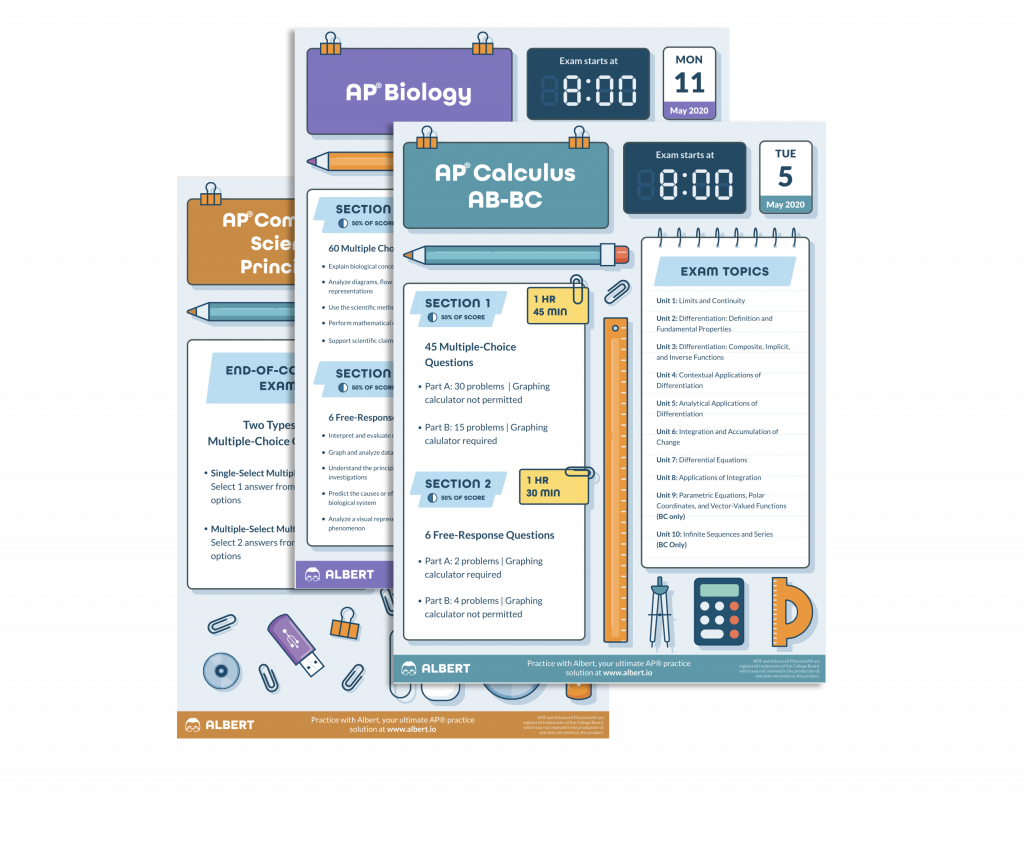

AP® Posters

Download updated posters summarizing the main topics and structure for each AP® exam.

Interested in a school license?

Bring Albert to your school and empower all teachers with the world's best question bank for: ➜ SAT® & ACT® ➜ AP® ➜ ELA, Math, Science, & Social Studies aligned to state standards ➜ State assessments Options for teachers, schools, and districts.

- Grades 6-12

- School Leaders

FREE Poetry Worksheet Bundle! Perfect for National Poetry Month.

15 Helpful Scoring Rubric Examples for All Grades and Subjects

In the end, they actually make grading easier.

When it comes to student assessment and evaluation, there are a lot of methods to consider. In some cases, testing is the best way to assess a student’s knowledge, and the answers are either right or wrong. But often, assessing a student’s performance is much less clear-cut. In these situations, a scoring rubric is often the way to go, especially if you’re using standards-based grading. Here’s what you need to know about this useful tool, along with lots of rubric examples to get you started.

What is a scoring rubric?

In the United States, a rubric is a guide that lays out the performance expectations for an assignment. It helps students understand what’s required of them, and guides teachers through the evaluation process. (Note that in other countries, the term “rubric” may instead refer to the set of instructions at the beginning of an exam. To avoid confusion, some people use the term “scoring rubric” instead.)

A rubric generally has three parts:

- Performance criteria: These are the various aspects on which the assignment will be evaluated. They should align with the desired learning outcomes for the assignment.

- Rating scale: This could be a number system (often 1 to 4) or words like “exceeds expectations, meets expectations, below expectations,” etc.

- Indicators: These describe the qualities needed to earn a specific rating for each of the performance criteria. The level of detail may vary depending on the assignment and the purpose of the rubric itself.

Rubrics take more time to develop up front, but they help ensure more consistent assessment, especially when the skills being assessed are more subjective. A well-developed rubric can actually save teachers a lot of time when it comes to grading. What’s more, sharing your scoring rubric with students in advance often helps improve performance. This way, students have a clear picture of what’s expected of them and what they need to do to achieve a specific grade or performance rating.

Learn more about why and how to use a rubric here.

Types of Rubrics

There are three basic rubric categories, each with its own purpose.

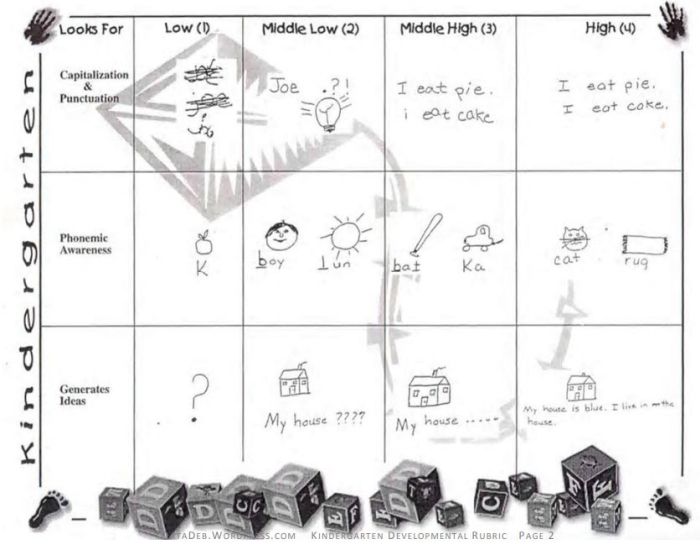

Holistic Rubric

Source: Cambrian College

Holistic rubrics combine all the scoring criteria into a single scale. They’re quick to create and use, but they have drawbacks. If a student’s work spans different levels, it can be difficult to decide which score to assign. They also make it harder to provide feedback on specific aspects of the work.

Traditional letter grades are a type of holistic rubric. So are the popular “hamburger rubric” and “cupcake rubric” examples. Learn more about holistic rubrics here.

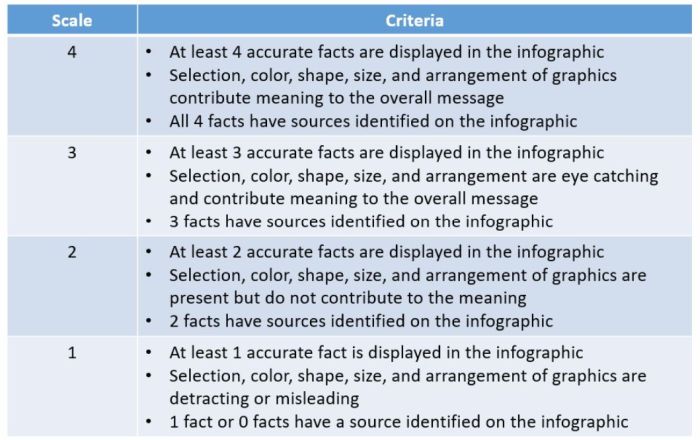

Analytic Rubric

Source: University of Nebraska

Analytic rubrics are much more complex and generally take a great deal more time up front to design. They break the expected learning outcomes into separate criteria and describe what is required to reach each performance rating on each criterion. Each rating is assigned a point value, and the total number of points earned determines the overall grade for the assignment.
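As a rough illustration of that arithmetic, here is a minimal Python sketch assuming a four-level verbal scale in which each rating is worth the same number of points on every criterion. The `POINTS` table, the criterion names, and `analytic_score` are hypothetical; real analytic rubrics often weight criteria differently.

```python
# Hypothetical rating scale: each verbal rating is assigned a point value.
POINTS = {"exceeds": 4, "meets": 3, "approaching": 2, "below": 1}


def analytic_score(ratings: dict[str, str]) -> tuple[int, int]:
    """Sum the points for each criterion's rating.

    Returns (points earned, points possible).
    """
    earned = 0
    for criterion, rating in ratings.items():
        if rating not in POINTS:
            raise ValueError(f"unknown rating {rating!r} for {criterion!r}")
        earned += POINTS[rating]
    # Every criterion can earn at most the top rating's points.
    possible = max(POINTS.values()) * len(ratings)
    return earned, possible


earned, possible = analytic_score(
    {"thesis": "exceeds", "evidence": "meets", "organization": "meets", "conventions": "approaching"}
)
print(f"{earned}/{possible}")  # 12/16
```

To weight a criterion more heavily, one option is to multiply its points by a per-criterion weight before summing.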

Though they’re more time-intensive to create, analytic rubrics actually save time while grading. Teachers can simply circle or highlight any relevant phrases in each rating, and add a comment or two if needed. They also help ensure consistency in grading, and make it much easier for students to understand what’s expected of them.

Learn more about analytic rubrics here.

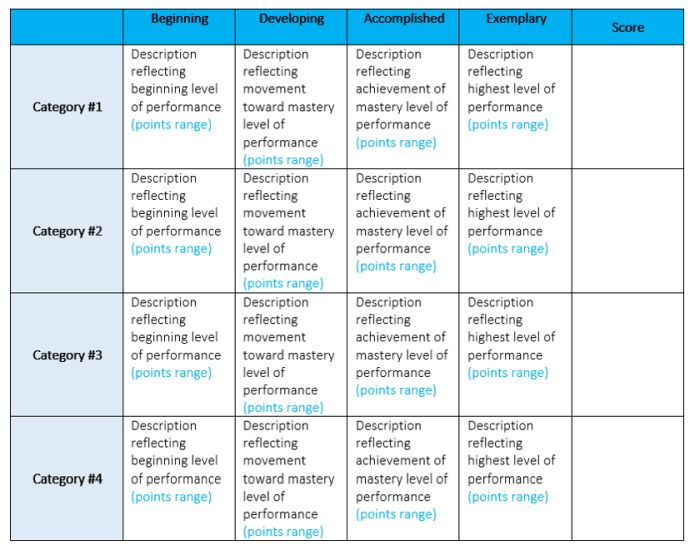

Developmental Rubric

Source: Deb’s Data Digest

A developmental rubric is a subset of analytic rubrics, but it’s used to assess progress along the way rather than to determine a final score on an assignment. It leaves off the point values and focuses instead on giving feedback using the criteria and indicators of performance. These details help students understand their achievements and highlight the specific skills they still need to improve.
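A minimal Python sketch of the same idea without point values, assuming four ordered levels. The level names, the fluency indicators, and `developmental_feedback` are hypothetical examples. Instead of a score, it reports the student's current indicator and the indicator for the next level up.

```python
# Hypothetical developmental rubric: ordered levels per criterion, no point values.
LEVELS = ["beginning", "developing", "proficient", "advanced"]

INDICATORS = {
    "fluency": {
        "beginning": "Reads word by word",
        "developing": "Reads in short phrases",
        "proficient": "Reads in longer, meaningful phrases",
        "advanced": "Reads smoothly with expression",
    },
}


def developmental_feedback(criterion: str, level: str) -> str:
    """Describe where the student is now and what the next level looks like."""
    descriptors = INDICATORS[criterion]
    idx = LEVELS.index(level)  # raises ValueError for an unknown level
    now = descriptors[level]
    if idx == len(LEVELS) - 1:
        return f"{criterion}: {now}. Top level reached."
    next_level = LEVELS[idx + 1]
    return f"{criterion}: {now}. Next step: {descriptors[next_level].lower()}."


print(developmental_feedback("fluency", "developing"))
# fluency: Reads in short phrases. Next step: reads in longer, meaningful phrases.
```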

Learn how to use developmental rubrics here.

Ready to create your own rubrics? Find general tips on designing rubrics here. Then, check out these examples across all grades and subjects to inspire you.

Elementary School Rubric Examples

These elementary school rubric examples come from real teachers who use them with their students. Adapt them to fit your needs and grade level.

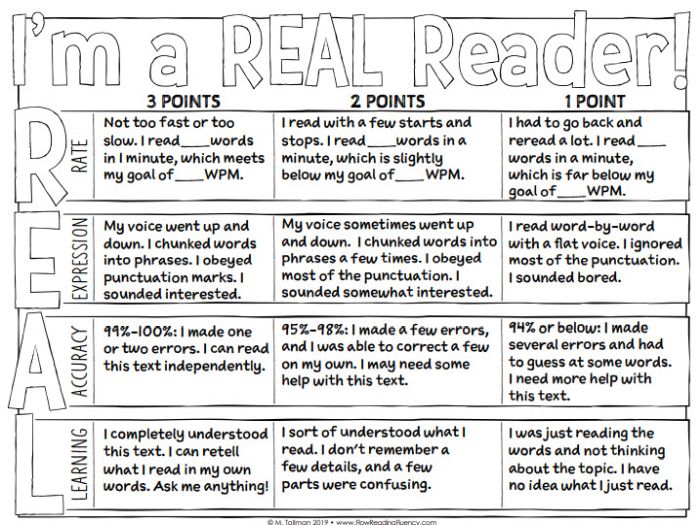

Reading Fluency Rubric

You can use this one as an analytic rubric by counting up points to earn a final score, or just to provide developmental feedback. There’s a second rubric page available specifically to assess prosody (reading with expression).

Learn more: Teacher Thrive

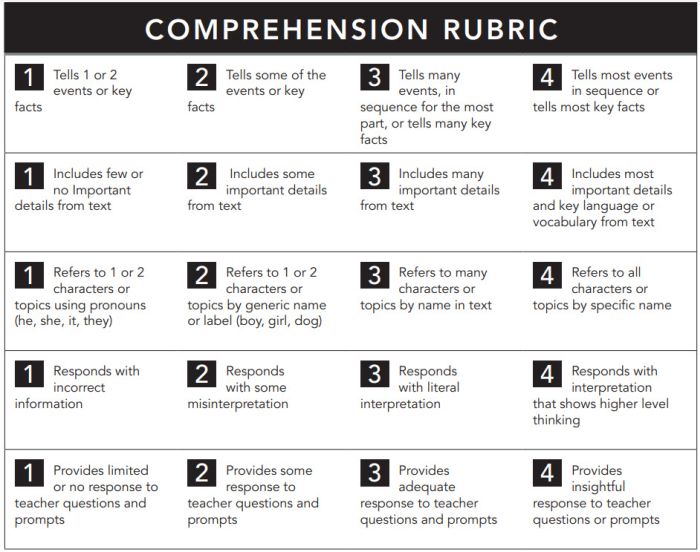

Reading Comprehension Rubric

The nice thing about this rubric is that you can use it at any grade level, for any text. If you like this style, you can get a reading fluency rubric here too.

Learn more: Pawprints Resource Center

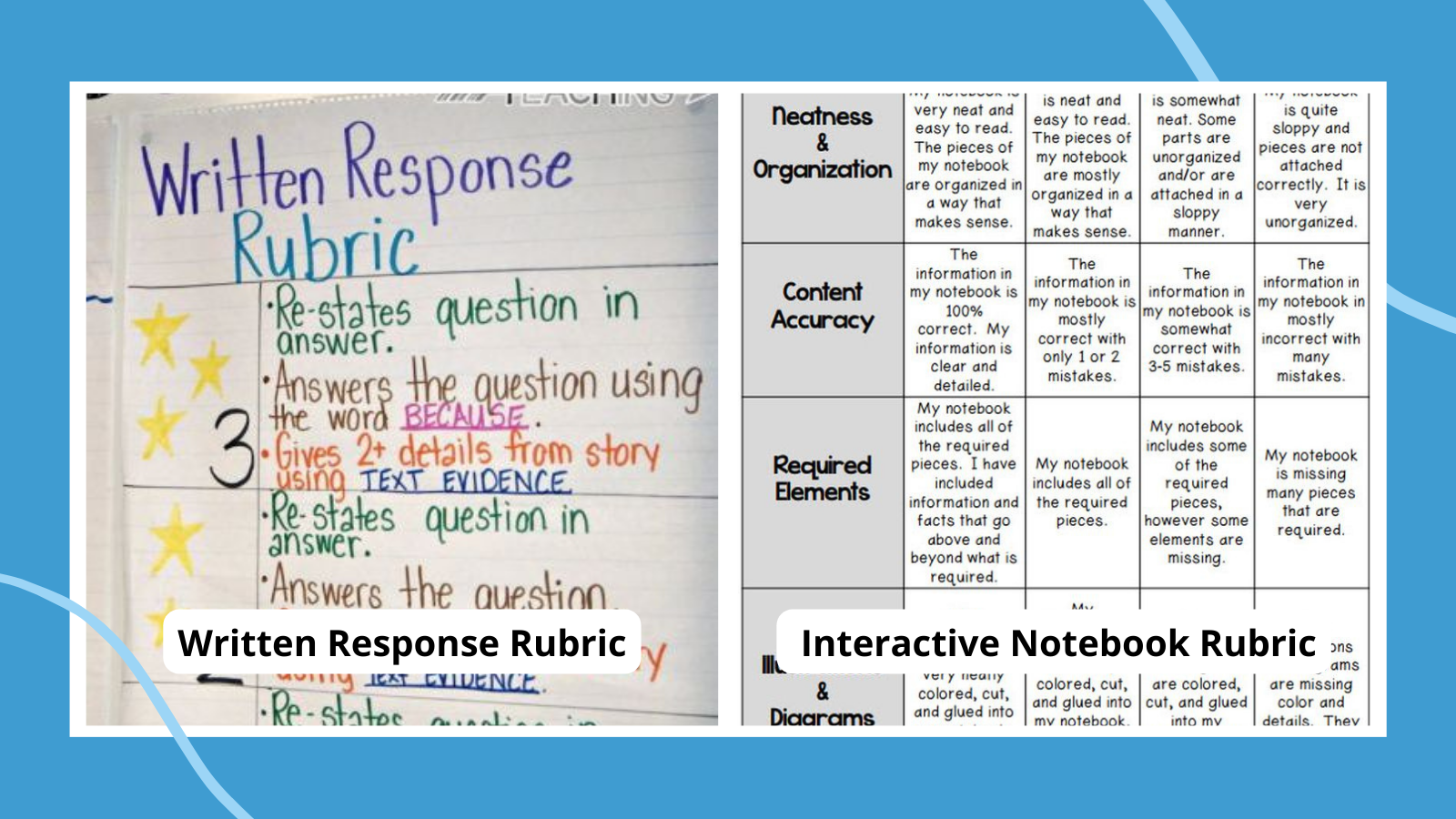

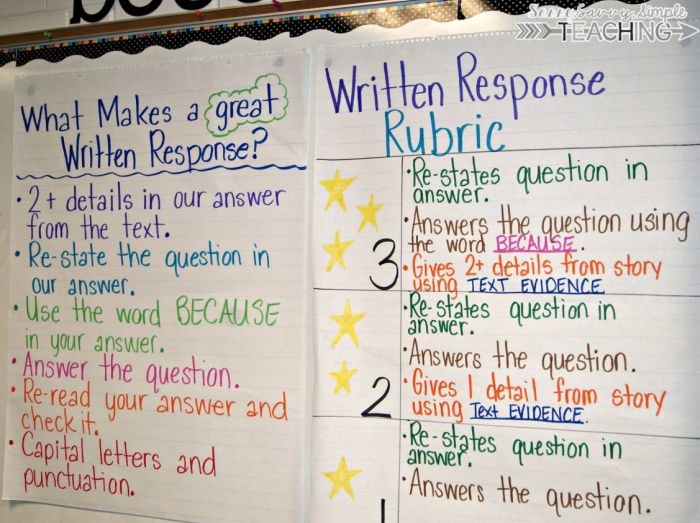

Written Response Rubric

Rubrics aren’t just for huge projects. They can also help kids work on very specific skills, like this one for improving written responses on assessments.

Learn more: Dianna Radcliffe: Teaching Upper Elementary and More

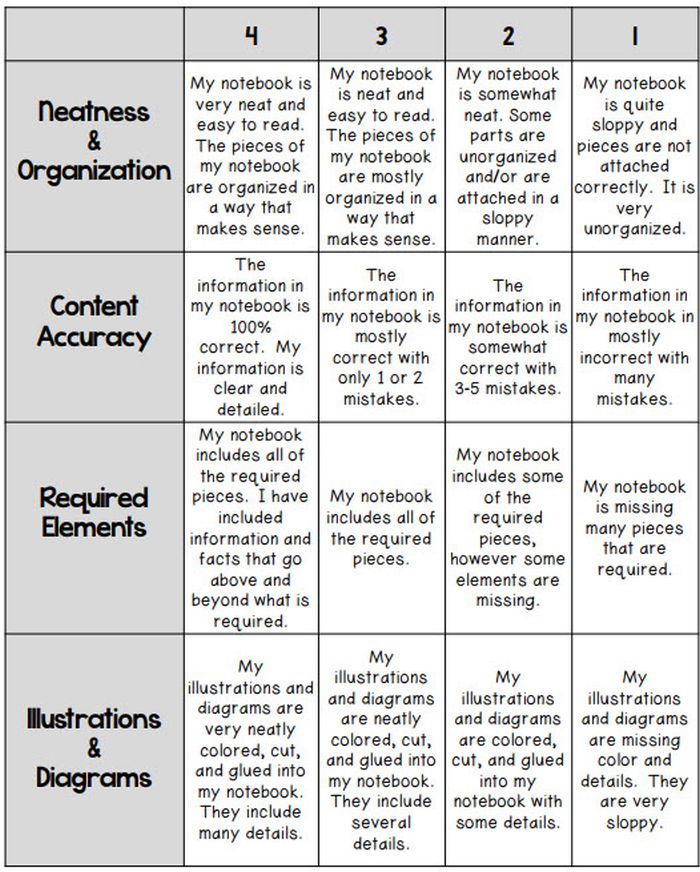

Interactive Notebook Rubric

If you use interactive notebooks as a learning tool, this rubric can help kids stay on track and meet your expectations.

Learn more: Classroom Nook

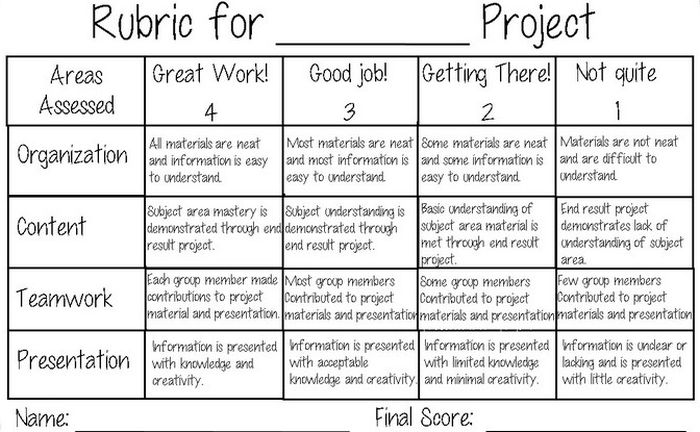

Project Rubric

Use this simple rubric as it is, or tweak it to include more specific indicators for the project you have in mind.

Learn more: Tales of a Title One Teacher

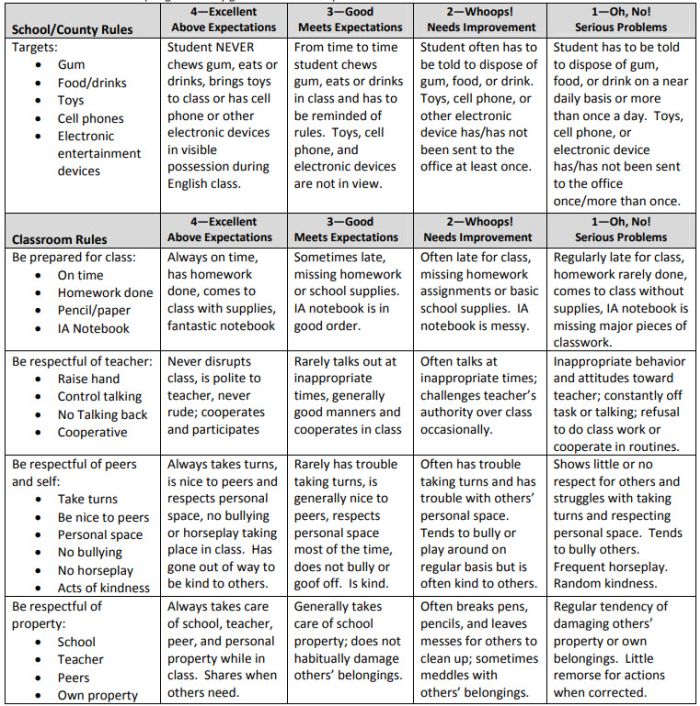

Behavior Rubric

Developmental rubrics are perfect for assessing behavior and helping students identify opportunities for improvement. Send these home regularly to keep parents in the loop.

Learn more: Teachers.net Gazette

Middle School Rubric Examples

In middle school, use rubrics to offer detailed feedback on projects, presentations, and more. Be sure to share them with students in advance, and encourage them to use them as they work so they’ll know if they’re meeting expectations.

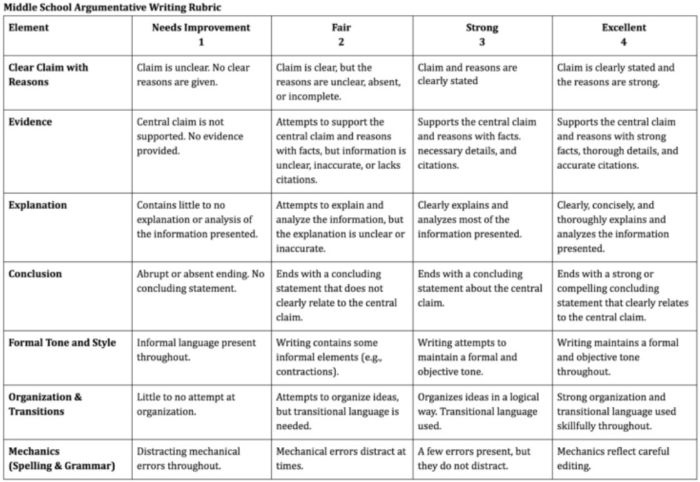

Argumentative Writing Rubric

Argumentative writing is a part of language arts, social studies, science, and more. That makes this rubric especially useful.

Learn more: Dr. Catlin Tucker

Role-Play Rubric

Role-plays can be really useful when teaching social and critical thinking skills, but they’re hard to assess. Try a rubric like this one to evaluate them and provide useful feedback.

Learn more: A Question of Influence

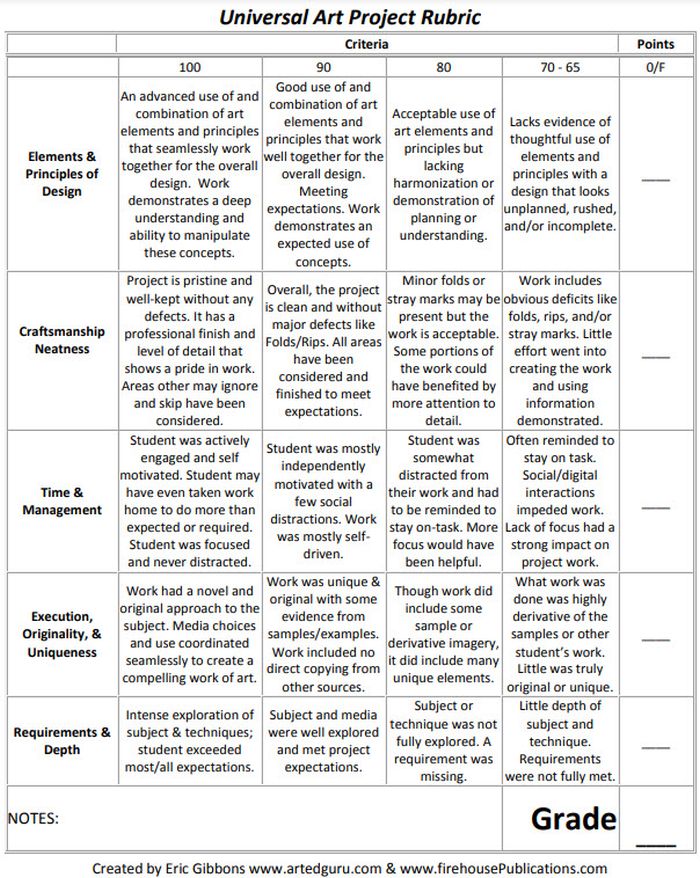

Art Project Rubric

Art is one of those subjects where grading can feel very subjective. Bring some objectivity to the process with a rubric like this.

Source: Art Ed Guru

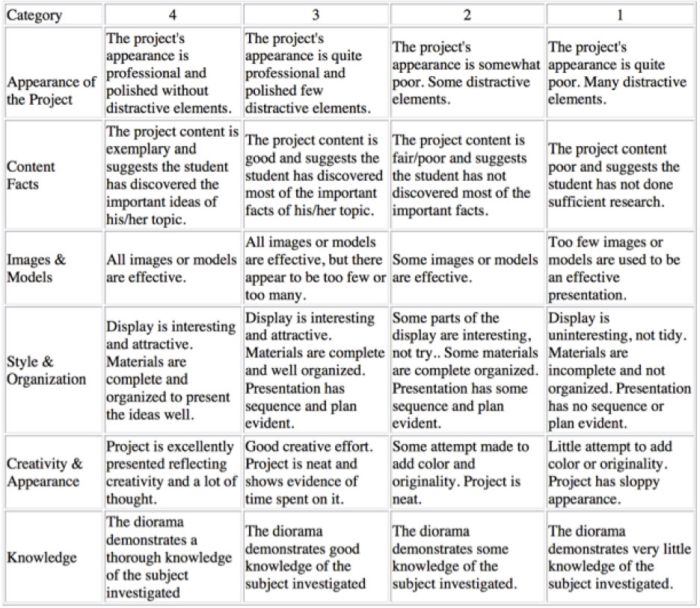

Diorama Project Rubric

You can use diorama projects in almost any subject, and they’re a great chance to encourage creativity. Simplify the grading process and help kids know how to make their projects shine with this scoring rubric.

Learn more: Historyourstory.com

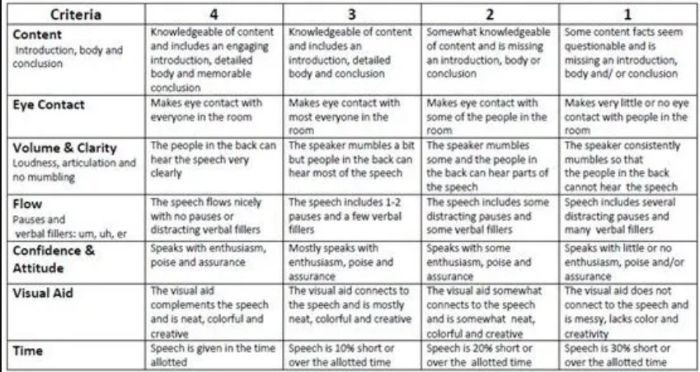

Oral Presentation Rubric

Rubrics are terrific for grading presentations, since you can include a variety of skills and other criteria. Consider letting students use a rubric like this to offer peer feedback too.

Learn more: Bright Hub Education

High School Rubric Examples

In high school, it’s important to include your grading rubrics when you give assignments like presentations, research projects, or essays. Students who go on to college are likely to encounter rubrics there, so becoming familiar with them now will pay off later.

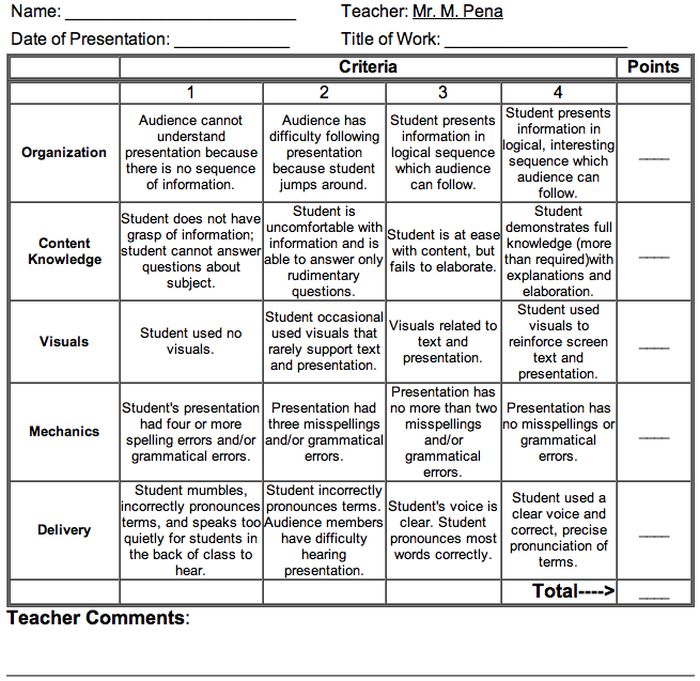

Presentation Rubric

Analyze a student’s presentation both for content and communication skills with a rubric like this one. If needed, create a separate one for content knowledge with even more criteria and indicators.

Learn more: Michael A. Pena Jr.

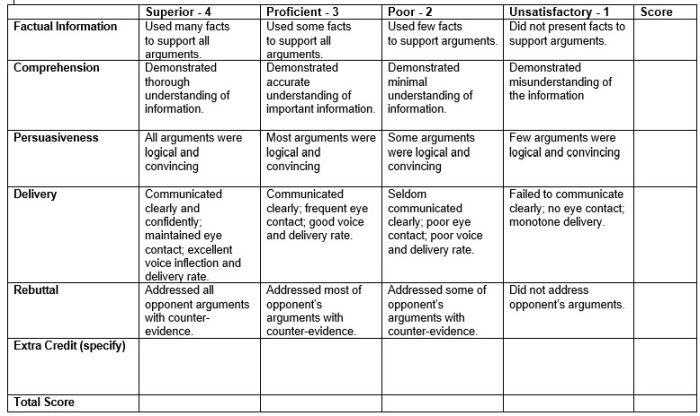

Debate Rubric

Debate is a valuable learning tool that encourages critical thinking and oral communication skills. This rubric can help you assess those skills objectively.

Learn more: Education World

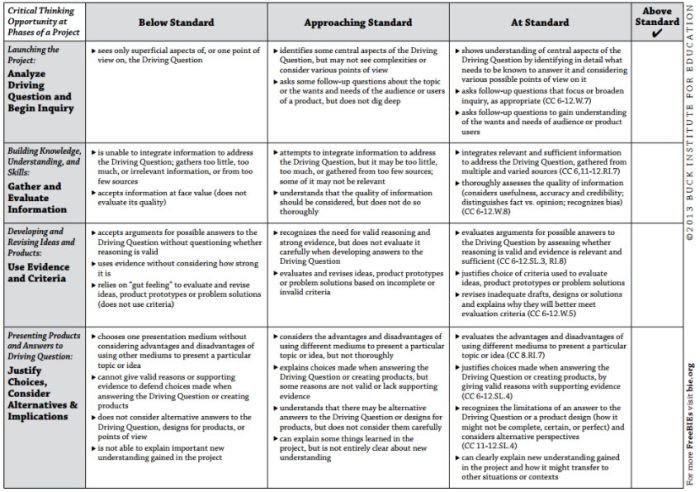

Project-Based Learning Rubric

Implementing project-based learning can be time-intensive, but the payoffs are worth it. Try this rubric to make student expectations clear and end-of-project assessment easier.

Learn more: Free Technology for Teachers

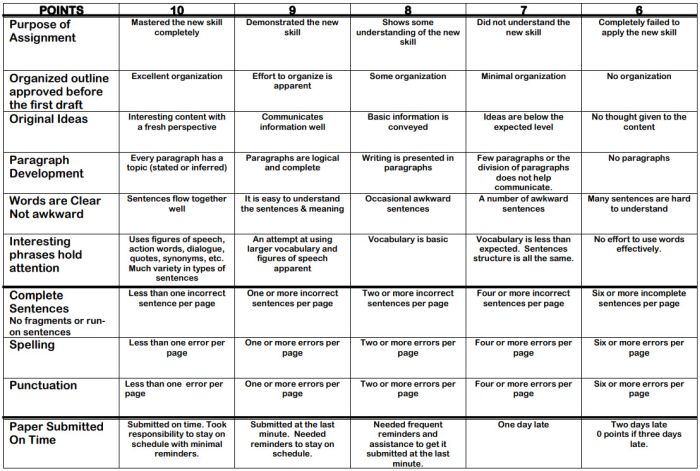

100-Point Essay Rubric

Need an easy way to convert a scoring rubric to a letter grade? This essay-writing example gives students a final score out of 100 points.
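A sketch of that conversion in Python, assuming the rubric's raw points are first scaled to 100 and then mapped with common 90/80/70/60 cutoffs. The cutoffs and the function names `to_percent` and `letter_grade` are assumptions; substitute your school's own grading scale.

```python
# Assumed cutoffs (a common US scale); replace with your school's policy.
CUTOFFS = [(90, "A"), (80, "B"), (70, "C"), (60, "D")]


def to_percent(earned: float, possible: float) -> float:
    """Scale raw rubric points to a 0-100 score."""
    if possible <= 0:
        raise ValueError("possible points must be positive")
    return 100 * earned / possible


def letter_grade(score: float) -> str:
    """Return the first letter whose cutoff the score meets, else F."""
    for cutoff, letter in CUTOFFS:
        if score >= cutoff:
            return letter
    return "F"


score = to_percent(21, 25)  # 84.0
print(letter_grade(score))  # B
```

If the rubric already totals 100 points, `to_percent` simply returns the raw score unchanged.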

Learn more: Learn for Your Life

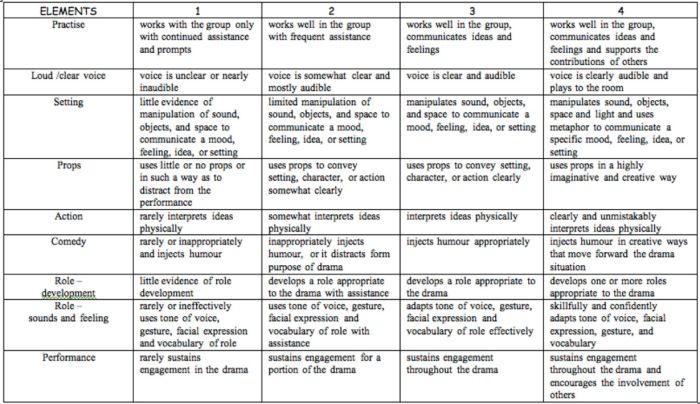

Drama Performance Rubric

If you’re unsure how to grade a student’s participation and performance in drama class, consider this example. It offers lots of objective criteria and indicators to evaluate.

Learn more: Chase March

How do you use rubrics in your classroom? Come share your thoughts and exchange ideas in the WeAreTeachers HELPLINE group on Facebook.


Copyright © 2023. All rights reserved. 5335 Gate Parkway, Jacksonville, FL 32256

European Conference on Technology Enhanced Learning

EC-TEL 2022: Educating for a New Future: Making Sense of Technology-Enhanced Learning Adoption, pp. 243–257

Assessing the Quality of Student-Generated Short Answer Questions Using GPT-3

- Steven Moore (ORCID: orcid.org/0000-0002-5256-0339)

- Huy A. Nguyen (ORCID: orcid.org/0000-0002-1227-6173)

- Norman Bier

- Tanvi Domadia

- John Stamper

- Conference paper

- First Online: 05 September 2022


Part of the book series: Lecture Notes in Computer Science (LNCS, volume 13450)

Generating short answer questions is a popular form of learnersourcing with benefits for both the students’ higher-order thinking and the instructors’ collection of assessment items. However, assessing the quality of the student-generated questions can involve significant efforts from instructors and domain experts. In this work, we investigate the feasibility of leveraging students to generate short answer questions with minimal scaffolding and machine learning models to evaluate the student-generated questions. We had 143 students across 7 online college-level chemistry courses participate in an activity where they were prompted to generate a short answer question regarding the content they were presently learning. Using both human and automatic evaluation methods, we investigated the linguistic and pedagogical quality of these student-generated questions. Our results showed that 32% of the student-generated questions were evaluated by experts as high quality, indicating that they could be added and used in the course in their present condition. Additional expert evaluation identified that 23% of the student-generated questions assessed higher cognitive processes according to Bloom’s Taxonomy. We also identified the strengths and weaknesses of using a state-of-the-art language model, GPT-3, to automatically evaluate the student-generated questions. Our findings suggest that students are relatively capable of generating short answer questions that can be leveraged in their online courses. Based on the evaluation methods, recommendations for leveraging experts and automatic methods are discussed.

- Question generation

- Question quality

- Question evaluation


We used the default hyperparameters as suggested in https://beta.openai.com/docs/guides/fine-tuning .

Aflalo, E.: Students generating questions as a way of learning. Act. Learn. High. Educ. 1469787418769120 (2018)


Amidei, J., Piwek, P., Willis, A.: Evaluation methodologies in automatic question generation 2013–2018. In: Proceedings of the 11th International Conference on Natural Language Generation, pp. 307–317 (2018)

Amidei, J., Piwek, P., Willis, A.: Rethinking the agreement in human evaluation tasks. In: Proceedings of the 27th International Conference on Computational Linguistics, pp. 3318–3329 (2018)

Bates, S.P., Galloway, R.K., Riise, J., Homer, D.: Assessing the quality of a student-generated question repository. Phys. Rev. Spec. Top.-Phys. Educ. Res. 10 (2), 020105 (2014)

Bier, N., Moore, S., Van Velsen, M.: Instrumenting courseware and leveraging data with the open learning initiative. In: Companion Proceedings 9th International Conference on Learning Analytics & Knowledge, pp. 990–1001 (2019)

Brown, T., et al.: Language models are few-shot learners. In: Advances in Neural Information Processing Systems, vol. 33, pp. 1877–1901 (2020)

Chan, A.: GPT-3 and InstructGPT: technological dystopianism, utopianism, and “Contextual” perspectives in AI ethics and industry. AI Ethics 1–12 (2022)

Chen, G., Yang, J., Hauff, C., Houben, G.-J.: LearningQ: a large-scale dataset for educational question generation. In: Twelfth International AAAI Conference on Web and Social Media (2018)

Chin, C., Brown, D.E.: Student-generated questions: a meaningful aspect of learning in science. Int. J. Sci. Educ. 24 (5), 521–549 (2002)


Clifton, S.L., Schriner, C.L.: Assessing the quality of multiple-choice test items. Nurse Educ. 35 (1), 12–16 (2010)

Clinciu, M.-A., Eshghi, A., Hastie, H.: A study of automatic metrics for the evaluation of natural language explanations. In: Proceedings of the 16th Conference of the European Chapter of the Association for Computational Linguistics: Main, pp. 2376–2387 (2021)

Das, S., Mandal, S.K.D., Basu, A.: Identification of cognitive learning complexity of assessment questions using multi-class text classification. Contemp. Educ. Technol. 12 (2), ep275 (2020)

Denny, P.: Generating practice questions as a preparation strategy for introductory programming exams. In: Proceedings of the 46th ACM Technical Symposium on Computer Science Education, pp. 278–283 (2015)

Denny, P., Hamer, J., Luxton-Reilly, A., Purchase, H.: PeerWise: students sharing their multiple choice questions. In: Proceedings of the Fourth International Workshop on Computing Education Research, New York, NY, USA, pp. 51–58 (2008)

Denny, P., Tempero, E., Garbett, D., Petersen, A.: Examining a student-generated question activity using random topic assignment. In: Proceedings of the 2017 ACM Conference on Innovation and Technology in Computer Science Education, pp. 146–151 (2017)

Horbach, A., Aldabe, I., Bexte, M., de Lacalle, O.L., Maritxalar, M.: Linguistic appropriateness and pedagogic usefulness of reading comprehension questions. In: Proceedings of the 12th Language Resources and Evaluation Conference, pp. 1753–1762 (2020)

Huang, J., et al.: Automatic classroom question classification based on Bloom’s taxonomy. In: 2021 13th International Conference on Education Technology and Computers, pp. 33–39 (2021)

Khosravi, H., Demartini, G., Sadiq, S., Gasevic, D.: Charting the design and analytics agenda of learnersourcing systems. In: LAK21: 11th International Learning Analytics and Knowledge Conference, pp. 32–42 (2021)

Khosravi, H., Kitto, K., Williams, J.J.: RiPPLE: a crowdsourced adaptive platform for recommendation of learning activities. J. Learn. Anal. 6 (3), 91–105 (2019)

Kim, J.: Learnersourcing: improving learning with collective learner activity. Massachusetts Institute of Technology (2015)

Krathwohl, D.R.: A revision of Bloom’s taxonomy: an overview. Theory Pract. 41 (4), 212–218 (2002)

Kurdi, G., Leo, J., Parsia, B., Sattler, U., Al-Emari, S.: A systematic review of automatic question generation for educational purposes. Int. J. Artif. Intell. Educ. 30 (1), 121–204 (2020)

van der Lee, C., Gatt, A., van Miltenburg, E., Krahmer, E.: Human evaluation of automatically generated text: Current trends and best practice guidelines. Comput. Speech Lang. 67 (2021), 101151 (2021)

Lu, O.H., Huang, A.Y., Tsai, D.C., Yang, S.J.: Expert-authored and machine-generated short-answer questions for assessing students learning performance. Educ. Technol. Soc. 24 (3), 159–173 (2021)

McHugh, M.L.: Interrater reliability: the kappa statistic. Biochemia Medica 22 (3), 276–282 (2012)


Moore, S., Nguyen, H.A., Stamper, J.: Examining the effects of student participation and performance on the quality of learnersourcing multiple-choice questions. In: Proceedings of the Eighth ACM Conference on Learning@ Scale, pp. 209–220 (2021)

Papinczak, T., Peterson, R., Babri, A.S., Ward, K., Kippers, V., Wilkinson, D.: Using student-generated questions for student-centred assessment. Assess. Eval. High. Educ. 37 (4), 439–452 (2012)

Ruseti, S., et al.: Predicting question quality using recurrent neural networks. In: Penstein Rosé, C., et al. (eds.) AIED 2018. LNCS (LNAI), vol. 10947, pp. 491–502. Springer, Cham (2018). https://doi.org/10.1007/978-3-319-93843-1_36


Scialom, T., Staiano, J.: Ask to learn: a study on curiosity-driven question generation. In: Proceedings of the 28th International Conference on Computational Linguistics, pp. 2224–2235 (2020)

Shaikh, S., Daudpotta, S.M., Imran, A.S.: Bloom’s learning outcomes’ automatic classification using LSTM and pretrained word embeddings. IEEE Access 9 , 117887–117909 (2021)

Steuer, T., Bongard, L., Uhlig, J., Zimmer, G.: On the linguistic and pedagogical quality of automatic question generation via neural machine translation. In: De Laet, T., Klemke, R., Alario-Hoyos, C., Hilliger, I., Ortega-Arranz, A. (eds.) EC-TEL 2021. LNCS, vol. 12884, pp. 289–294. Springer, Cham (2021). https://doi.org/10.1007/978-3-030-86436-1_22

Thiergart, J., Huber, S., Übellacker, T.: Understanding emails and drafting responses–an approach using GPT-3. arXiv e-prints (2021)

Wang, Z., Manning, K., Mallick, D.B., Baraniuk, R.G.: Towards blooms taxonomy classification without labels. In: Roll, I., McNamara, D., Sosnovsky, S., Luckin, R., Dimitrova, V. (eds.) AIED 2021. LNCS (LNAI), vol. 12748, pp. 433–445. Springer, Cham (2021). https://doi.org/10.1007/978-3-030-78292-4_35

Yahya, A.A., Toukal, Z., Osman, A.: Bloom’s Taxonomy–based classification for item bank questions using support vector machines. In: Ding, W., Jiang, H., Ali, M., Li, M. (eds.) Modern Advances in Intelligent Systems and Tools, pp. 135–140. Springer, Heidelberg (2012). https://doi.org/10.1007/978-3-642-30732-4_17

Yu, F.Y., Cheng, W.W.: Effects of academic achievement and group composition on quality of student-generated questions and use patterns of online procedural prompts. In: 28th International Conference on Computers in Education, ICCE 2020, pp. 573–581 (2020)

Yu, F.-Y., Liu, Y.-H.: Creating a psychologically safe online space for a student-generated questions learning activity via different identity revelation modes. Br. J. Educ. Technol. 40 (6), 1109–1123 (2009)

Zhang, J., Wong, C., Giacaman, N., Luxton-Reilly, A.: Automated classification of computing education questions using Bloom’s taxonomy. In: Australasian Computing Education Conference, pp. 58–65 (2021)


Author information

Authors and affiliations.

Carnegie Mellon University, Pittsburgh, PA, 15213, USA

Steven Moore, Huy A. Nguyen, Norman Bier, Tanvi Domadia & John Stamper


Corresponding author

Correspondence to Steven Moore .

Editor information

Editors and affiliations.

Pontificia Universidad Católica de Chile, Santiago, Chile

Isabel Hilliger

Universidad Carlos III de Madrid, Madrid, Spain

Pedro J. Muñoz-Merino

KU Leuven, Leuven, Belgium

Tinne De Laet

Universidad de Valladolid, Valladolid, Spain

Alejandro Ortega-Arranz

The Open University, Milton Keynes, UK

Tracie Farrell


Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper.

Moore, S., Nguyen, H.A., Bier, N., Domadia, T., Stamper, J. (2022). Assessing the Quality of Student-Generated Short Answer Questions Using GPT-3. In: Hilliger, I., Muñoz-Merino, P.J., De Laet, T., Ortega-Arranz, A., Farrell, T. (eds) Educating for a New Future: Making Sense of Technology-Enhanced Learning Adoption. EC-TEL 2022. Lecture Notes in Computer Science, vol 13450. Springer, Cham. https://doi.org/10.1007/978-3-031-16290-9_18


DOI: https://doi.org/10.1007/978-3-031-16290-9_18

Published: 05 September 2022

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-16289-3

Online ISBN: 978-3-031-16290-9

eBook Packages: Computer Science, Computer Science (R0)


Short Answer Grading Rubric. Grading factors:

1. Completeness (5 points): Does your response directly answer each part of the assignment question(s)? Excellent: 5; Very Good: 3-4; Good: 2; Needs Improvement: 0-1.

2. Knowledge (10 points): Does your response clearly show you have read and understood
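The completeness bands in this rubric can be looked up mechanically. A minimal Python sketch; `completeness_label` is a hypothetical helper, and only the completeness factor is modeled because the knowledge bands are not given here.

```python
# Completeness bands (out of 5 points), listed from the highest floor down.
COMPLETENESS_BANDS = [(5, "Excellent"), (3, "Very Good"), (2, "Good"), (0, "Needs Improvement")]


def completeness_label(points: int) -> str:
    """Map a 0-5 completeness score to its band label."""
    if not 0 <= points <= 5:
        raise ValueError("completeness is scored 0-5")
    for floor, label in COMPLETENESS_BANDS:
        if points >= floor:
            return label
    raise AssertionError("unreachable: the 0 floor always matches")


print(completeness_label(4))  # Very Good
```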


A rubric for short answer questions is an essential tool for assessing student responses in a consistent and fair manner. It provides a clear set of criteria and standards that can be used to evaluate the quality of the answers. When creating a rubric for short answer questions, several key components should be included to ensure its ...

University at Buffalo Department of Philosophy, Grading Rubric for Essay and Short Answer Exam Questions, Quizzes, and Homework Assignments (levels: Unsatisfactory, Competent, Exemplary).

- Unsatisfactory: Fails to address the question or demonstrates an inadequate or partial grasp of the question.

- Competent: Demonstrates an adequate understanding of the question.

Yes! A great way to maintain consistency in your grading is to weigh student answers against a rubric. This ensures that "meeting expectations" means the same thing each time you score student responses. CommonLit has created a short answer rubric for you to use while grading student answers to short answer questions on Library Lessons.

Answering Short Answer Questions. Answering Short Answer Test Questions. Students will provide concise answers to content-based questions about the lesson. They will follow the format provided and use examples for support. Rubric Code: U66W43.

Scoring Rubric for Short Answer Questions (Short Answer Question Rubric, Rubric Code: PXB23W3), by mrsnich. Ready-to-use public rubric. Subject: Communication. Type: Reading. Grade levels: K-5, 9-12, Undergraduate.


Short Answer Questions Grading Rubric. For evaluating short answer question 6, the following rubric will be used. The rubrics were adapted from the Problem Solving Rubric created by the National Center for Research on Evaluation, Standards, and Student Testing.

Short Response Rubric (2-point, 1-point, and 0-point responses). Assessed standard(s):

- 2-point response: Includes valid inferences or claims from the text. Fully and directly responds to the prompt.

- 1-point response: Includes inferences or claims that are loosely based on the text. Responds partially to the prompt or does not address all elements of the prompt.

A better question would ask "How might two accidents be an acceptable level of risk…", in order to promote a more meaningful answer. Short answer questions are a great middle ground for professors. They are easier to develop than multiple choice and generate a more in-depth answer. Because of their brevity, they are easier to grade and ...


To see how the question will appear to a student taking it in an assessment, click Preview Question. To exit the preview screen and return to the question editor, click Edit Question. Once you are finished editing the question, click Save. Use the Short Answer/Essay question type to ask questions requiring students to provide a written response.

Scoring rubrics - general scoring criteria for the document-based and long essay questions, regardless of specific question prompt - are available in the course and exam description (CED). ... Students choose between 2 options for the final required short-answer question, each one focusing on a different time period:

Short Answer Rubric. Answering Short Answer Questions. Students will provide concise answers to content-based questions about the lesson. They will follow the format provided and use examples for support. Rubric Code: E2XA644. By alexiebasileyo. Ready to use.

Short answer questions (or SAQs) can be used in examinations or as part of assessment tasks. They are generally questions that require students to construct a response. Short answer questions require a concise and focused response that may be factual, interpretive or a combination of the two. SAQs can also be used in a non-examination situation.

Teacher Note: I was noticing my students with IEPs were scoring 0s and 1s out of 2 points on short answer questions on our state testing. I created this to assist and assess my students with answering short answer questions in complete sentences with appropriate writing conventions and ...

Abstract. This study presents an investigation of the validity of a practical scoring procedure for short answer (sentence length) reading comprehension questions, using a holistic rubric for sets of responses rather than scoring individual responses, on a university-based English proficiency test. Thirty-three previously scored response sets ...

Scoring Short Answer Questions. There is not really a rubric for these types of questions, like there is for the Document-Based Question (DBQ) or Long Essay Question (LEQ). Students are simply scored on whether or not they answered the prompt correctly. Students either receive one point or zero points for each part of the question (A, B, and C ...
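Because each part is worth either one point or zero, a short-answer score is just a count of the parts answered correctly. A minimal Python sketch, assuming a question with exactly three parts labeled A, B, and C; `score_saq` is a hypothetical helper.

```python
def score_saq(parts: dict[str, bool]) -> int:
    """Score one short-answer question: 1 point per correctly answered part."""
    expected = {"A", "B", "C"}  # assumed three-part structure
    if set(parts) != expected:
        raise ValueError(f"expected parts {sorted(expected)}, got {sorted(parts)}")
    return sum(1 for correct in parts.values() if correct)


print(score_saq({"A": True, "B": False, "C": True}))  # 2
```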


Short-Answer Questions Section I, Part B of the AP U.S. History Exam consists of four short-answer questions. Students are required to answer the first and second questions, and then answer either the third or the fourth question. The first question primarily assesses the practice of analyzing secondary sources, asking

Short Answer Questions Using GPT-3. Steven Moore, Huy A. Nguyen, Norman Bier, Tanvi Domadia, and John Stamper ... studies for assessing the linguistic and pedagogical quality of questions [16, 31]. This rubric contains 9 hierarchical criteria, shown in Table 1. These criteria are asked to the two experts in the order, from top to bottom ...