How to Interpret Your Bar Exam Score Report

Here, we tell you how to interpret your bar exam score report and how to avoid some of the common mistakes that students make when they attempt to interpret their bar exam score reports! If you passed the bar exam, you may be curious about how well you did. If you failed the bar exam, figuring out what your score means is the first step to making sure you pass the next exam!

A bar exam score report will typically have a few things you will want to pay attention to: your overall score, your MBE scaled score, and your written score. States vary in the level of detail they provide. Some states (like Illinois) won’t tell you your score at all if you pass. Others will only tell you your overall MBE and essay scores. Some (like New York and Washington) break down exactly how you did on each essay, each MPT, and each subject on the MBE! We will try to give you a general idea of how to figure out what your score report means, assuming your state provides you with some detail!

Note: if you are in New York, please check out this post on how to dissect your New York Bar Exam score report .

Your overall score

The first thing you will probably look at is your overall score, as this will tell you if you passed or failed the bar exam. (Your bar exam score report should indicate this pretty clearly.)

Uniform Bar Exam states require a score between 260 and 280 to pass the Uniform Bar Exam. So, if your score was 280 or above, you received a score that is considered passing in every Uniform Bar Exam state. Congratulations if that is the case.

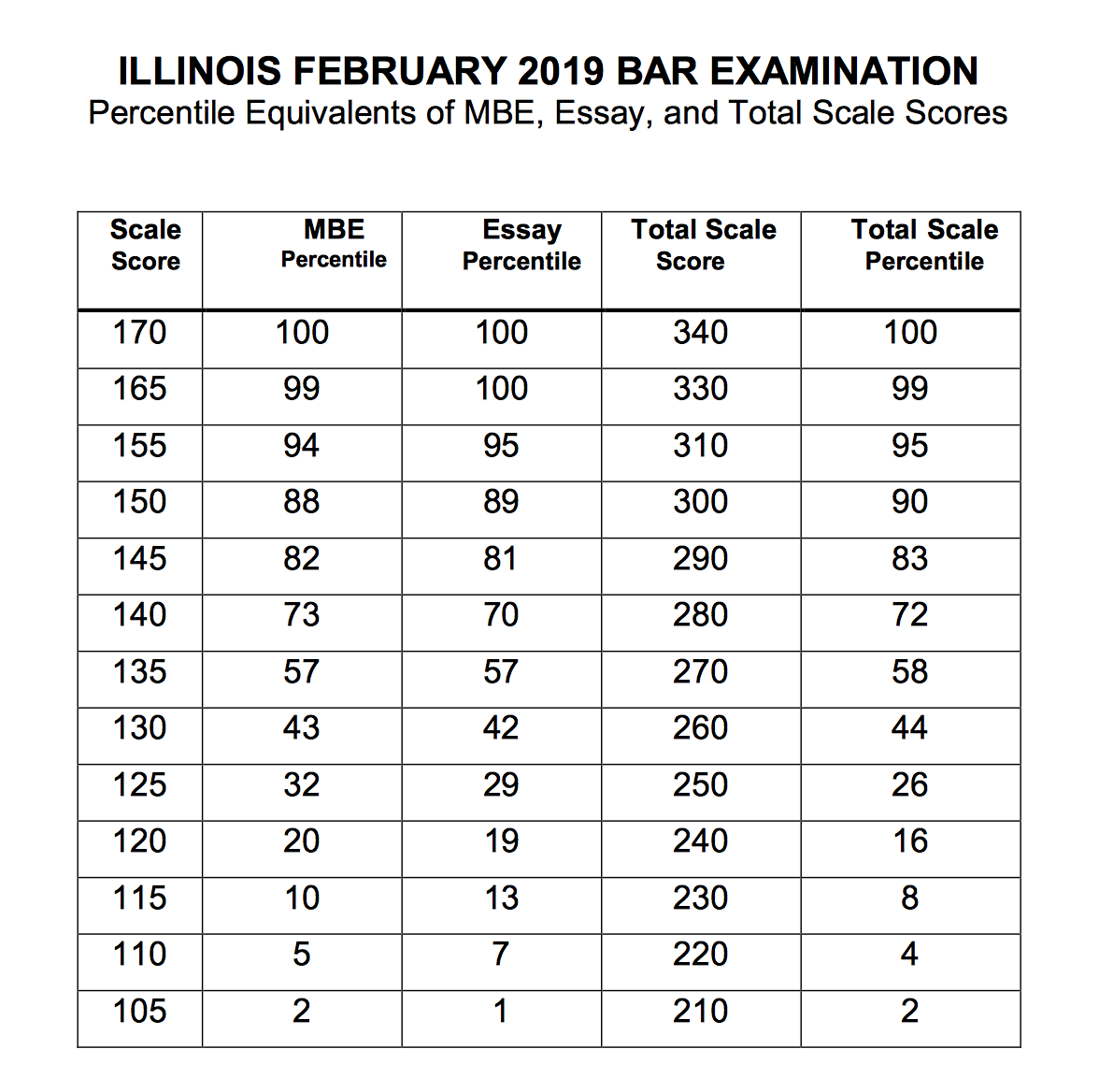

One thing you may wonder about when examining your overall score is what percentile you scored in (that is, how did you score in relation to other test takers?). If you read this post on UBE percentiles, you can figure out your approximate percentile. Here are a few numbers to guide you if you are in a Uniform Bar Exam jurisdiction. (Note: these numbers change every administration, but not significantly. The numbers below reflect the February 2019 bar exam. You can also see these in the accompanying chart.)

- A 330 is the top percentile (99th percentile for the February 2019 Uniform Bar Exam)

- A 300 is approximately the 90th percentile

- A 280 is approximately the 72nd percentile

- A 270 is approximately the 58th percentile

- A 260 is approximately the 44th percentile

- A 250 is approximately the 26th percentile

- A 240 is approximately the 16th percentile

- A 230 is approximately the 8th percentile

- A 220 is approximately the 4th percentile

- A 210 is approximately the 2nd percentile

Your percentile tells you the percentage of examinees you scored higher than. So, if you are in the 40th percentile, you scored higher than 40% of examinees (and lower than about 60% of examinees). If you failed the bar exam, your percentile can tell you how much work you need to do to pass! For example, if you are in the 2nd percentile, you have a lot more work to do compared to someone who is in the 44th percentile.
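If your score falls between two of the numbers listed above, you can estimate your percentile yourself. Here is a minimal Python sketch that does so. It uses only the February 2019 figures from the list above, and it assumes a straight line between neighboring points, which is our simplification for illustration and not how the percentiles are actually published. The function name is hypothetical.

```python
from bisect import bisect_right

# (UBE score, approximate percentile) pairs copied from the list above
# (February 2019 administration). They shift a little every administration.
UBE_PERCENTILES = [
    (210, 2), (220, 4), (230, 8), (240, 16), (250, 26),
    (260, 44), (270, 58), (280, 72), (300, 90), (330, 99),
]


def approximate_percentile(score: float) -> float:
    """Estimate a percentile by linear interpolation between listed points.

    Assumption: percentiles change linearly between neighboring entries.
    Scores outside the listed range are clamped to the nearest entry.
    """
    scores = [s for s, _ in UBE_PERCENTILES]
    if score <= scores[0]:
        return float(UBE_PERCENTILES[0][1])
    if score >= scores[-1]:
        return float(UBE_PERCENTILES[-1][1])
    i = bisect_right(scores, score)  # index of the first listed score above `score`
    (s0, p0), (s1, p1) = UBE_PERCENTILES[i - 1], UBE_PERCENTILES[i]
    return p0 + (p1 - p0) * (score - s0) / (s1 - s0)


if __name__ == "__main__":
    for s in (255, 265, 290):
        print(s, "is roughly percentile", approximate_percentile(s))
```

Treat the result as a rough guide only, since the underlying numbers change from one exam to the next.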

If you are not in a Uniform Bar Exam (UBE) jurisdiction, see if your state bar releases any information about percentiles. Many states do not. If that is the case, look at your MBE scaled score because you can figure out your percentile on your own for at least the MBE portion!

Your MBE scaled score

You will not get your MBE “raw” score (i.e., the exact number of questions you answered correctly). Rather, you will get a scaled or “converted” score. The scaled score is converted from the raw score, but the National Conference of Bar Examiners does not reveal the formula it uses to do this. So you will not know the exact percentage of questions you answered correctly.

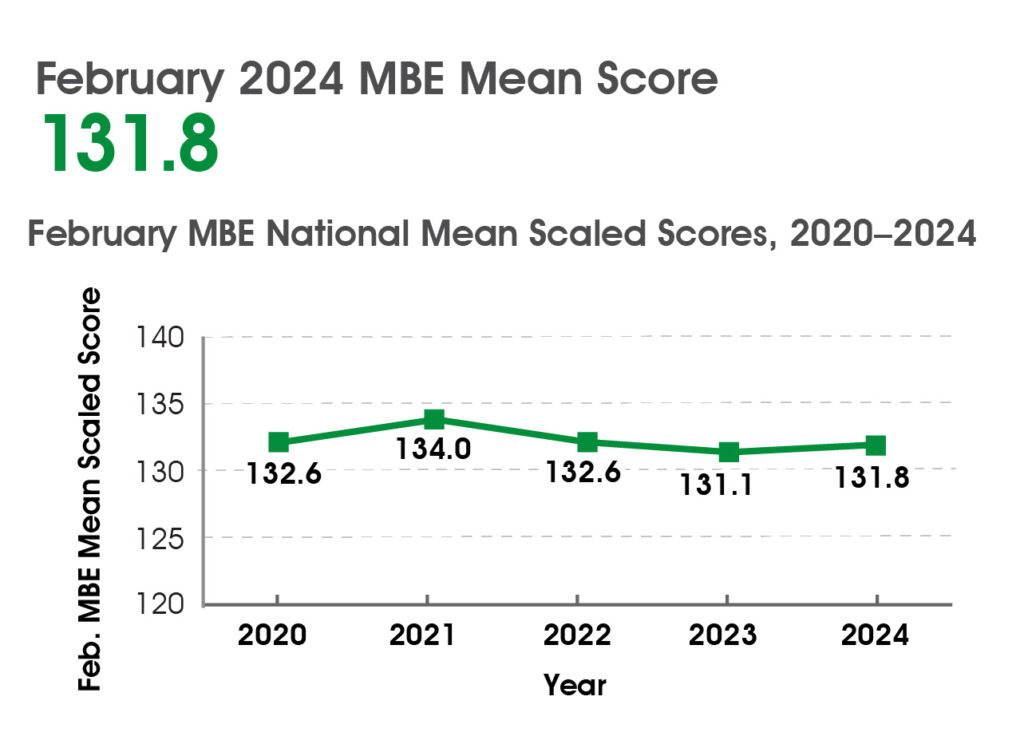

It is very important to dissect your MBE scaled score to see how well you did. For example, review the chart from the February 2019 bar exam. (This was published by Illinois.) On the far left side, find the column titled “Scale Score,” then see “MBE Percentile” right next to it. Find your scaled score and read off the corresponding MBE percentile. This will tell you roughly how you did, even if you did not take the Illinois bar exam.

If you scored a 105 on the MBE, you are in the 2nd percentile, meaning you have a lot of work to do. If you scored a 140, however, you are in the 73rd percentile! So, that is quite good. (Note: percentiles change from administration to administration, but this is a decent guide as to where you stand.)

The MBE is curved, so just because you scored “close” to passing doesn’t mean you are as close as you think. For example, a 125 is in the 32nd percentile, and a 135 is in the 57th percentile. That is only a 10-point difference in score, but it is a 25-point difference in percentile! So, if you are in the 120s on the MBE, you may still have a lot of work to do to move your score up.

In most states, you want to aim for a score between 130 and 140 to “pass” the MBE. If you are not sure what score you should aim for, and you are in a Uniform Bar Exam state, just take the overall score needed and divide it by two. So, for example, in New York, you need a 266 to pass the bar exam. If you divide 266 by two, that is 133. So you should aim for at least a 133 on the MBE. (You also can check out this post on passing MBE scores by state if you don’t want to do the math!)
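If you would rather let a few lines of Python do the division, here is a small sketch of the rule of thumb above. The function name is our own, and the passing scores in the loop are simply the ones mentioned in this post.

```python
def target_mbe_score(ube_passing_score: float) -> float:
    """Rule of thumb from the text: aim for half of the overall UBE passing score."""
    return ube_passing_score / 2


if __name__ == "__main__":
    # Overall passing scores mentioned in this post.
    for passing_score in (260, 266, 273, 280):
        print(passing_score, "->", target_mbe_score(passing_score))
```

For New York's 266, this gives the same 133 worked out above.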

Note: Some jurisdictions also tell you how you scored in each MBE subject. This is worth paying attention to as it can reveal where your weaknesses are! If your jurisdiction tells you that you scored in the 70th percentile in Evidence and in only the 5th percentile in Torts—that’s a sign you likely need to work on Torts!

Your written score

In a Uniform Bar Exam score report, you may also see six scores for your Multistate Essay Exam (MEE) answers, and two scores for your Multistate Performance Test (MPT) answers. Not all states release this information, but most do. The vast majority of states grade on a 1–6 scale. (Some states grade on a 1–10 scale, and other states, like New York, do their own thing, which you can read about here .) Your score report may look something like this:

The first six scores generally are your MEE scores, and the last two scores are your MPT scores. Remember that these are not weighted equally! The six MEE essays are worth 60% of your written score, or 10% each. The two MPTs are worth 40% of your written score, or 20% each! So, each MPT is worth twice as much as each MEE essay.
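To see how the 60/40 split plays out, here is a minimal Python sketch that combines six MEE grades and two MPT grades into one weighted average. It works on the raw grades from your score report and ignores any scaling your jurisdiction applies afterward, so treat it as an approximation. The function name and the sample grades are made up for illustration.

```python
MEE_SHARE = 0.60  # six MEE essays together: 60% of the written score
MPT_SHARE = 0.40  # two MPTs together: 40% of the written score


def weighted_written_average(mee_grades, mpt_grades):
    """Weighted average of raw written grades (for example, on a 1-6 scale).

    Assumption: this is a raw-grade approximation; jurisdictions scale the
    written score before combining it with the MBE.
    """
    if len(mee_grades) != 6 or len(mpt_grades) != 2:
        raise ValueError("expected six MEE grades and two MPT grades")
    mee_average = sum(mee_grades) / len(mee_grades)
    mpt_average = sum(mpt_grades) / len(mpt_grades)
    return MEE_SHARE * mee_average + MPT_SHARE * mpt_average


if __name__ == "__main__":
    # Hypothetical report: solid essays, weak MPTs.
    print(weighted_written_average([4, 4, 4, 4, 4, 4], [3, 3]))
    # Same total points, but the weak grades fall on two essays instead.
    print(weighted_written_average([3, 3, 4, 4, 4, 4], [4, 4]))
```

The second report comes out higher even though both add up to the same number of raw points, because a low MPT grade costs twice as much as a low essay grade.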

In most states that grade on a 1–6 scale, a 4 is considered a passing score. Here is the exact passing score for each essay, depending on your jurisdiction:

- A 3.9 is considered passing in Uniform Bar Exam jurisdictions that require a 260 to pass.

- A 4.0 is considered passing in Uniform Bar Exam jurisdictions that require a 266 to pass.

- A 4.1 is considered passing in Uniform Bar Exam jurisdictions that require a 273 to pass.

- A 4.2 is considered passing in Uniform Bar Exam jurisdictions that require a score of 280 to pass.
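The list above can be turned into a quick lookup. This Python sketch checks a written grade (on the 1–6 scale) against the figure listed for your jurisdiction's passing score. It only knows the four passing scores listed above, and the function name is hypothetical.

```python
# Passing grade on the 1-6 scale for each overall UBE passing score,
# copied from the list above.
PASSING_GRADE_BY_UBE_SCORE = {260: 3.9, 266: 4.0, 273: 4.1, 280: 4.2}


def written_grade_passes(grade: float, ube_passing_score: int) -> bool:
    """Return True if `grade` meets the passing grade for that jurisdiction."""
    if ube_passing_score not in PASSING_GRADE_BY_UBE_SCORE:
        raise ValueError(f"no figure listed for a passing score of {ube_passing_score}")
    return grade >= PASSING_GRADE_BY_UBE_SCORE[ube_passing_score]


if __name__ == "__main__":
    print(written_grade_passes(4.0, 266))  # meets the 4.0 listed for a 266 state
    print(written_grade_passes(4.0, 280))  # falls short of the 4.2 listed for a 280 state
```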

Most students make the mistake of just assuming they passed the MPT and MEE portion of the exam when in reality, they have work to do! Make sure to carefully examine your score report to see if you actually passed either portion of the exam!

If you are not in a Uniform Bar Exam state, please consult with your jurisdiction for its grading scale and what a passing score is.

Final thoughts

If you did not pass the bar exam, we recommend you read this detailed post on what to do if you failed the bar exam . The last thing you want to do is make the same mistakes and fail it again! We tell you how to avoid that and how to study better!

If you have questions about how to interpret your bar exam score report, feel free to post in the comments below or contact us .

Bar Exam Scoring: Everything You Need to Know About Bar Exam Scores

It’s important to know how the bar exam is scored so that you can understand what’s required to pass. The following sections will provide an overview of how the bar exam is scored, including information on essay grading, the MBE scale, and converted scores.

Understanding How the Bar Exam Is Scored

First, we’ll look at the UBE (Uniform Bar Exam). This is the bar exam used in a majority of states, and it’s what we’ll be focusing on in this article.

The UBE is broken down into three sections: the MPT (Multistate Performance Test), the MBE (Multistate Bar Exam), and the MEE (Multistate Essay Exam).

The MBE is a 200-question, multiple-choice exam covering seven subject areas: civil procedure, constitutional law, contracts, criminal law and procedure, evidence, real property, and torts.

Each state sets its own passing score, so bar exam scoring in Pennsylvania, Georgia, New York, California, Minnesota, and Nevada differs from state to state. In UBE states, the overall passing score is between 260 and 280, which works out to roughly 130 to 140 on the MBE.

The MEE consists of six 30-minute essay questions. They can cover the MBE subjects as well as additional areas such as business associations, family law, and trusts and estates.

Some states’ bar exams also include additional, state-specific essay questions.

Most jurisdictions grade each MEE answer on a 1–6 scale, where a 4 is generally considered passing. Across six essays, that works out to a raw total of about 24.

The MPT is a skills-based exam that consists of two 90-minute tasks. It’s designed to test your ability to complete common legal tasks, such as writing a memo or a persuasive brief.

The MPT is not graded on a pass/fail basis. Like the essays, each MPT answer receives a numeric grade that counts toward your written score, and there is no separate passing score for the MPT.

Now that we’ve covered the basics of the UBE bar exam score range, let’s take a more in-depth look at how each section is graded.

Essay Grading

The MEE essays are graded by graders in the jurisdiction where you take the exam, not by the National Conference of Bar Examiners (NCBE), which writes the questions and supplies grading materials.

Each essay is read and graded by human graders, not by a computer program.

Rereading practices vary by jurisdiction. California, for example, has a different set of graders read the answers a second time when an applicant’s total score falls just below the passing score.

Most jurisdictions grade each essay on a scale of 1–6, with 4 generally considered a passing grade.

To determine your score, the graders will look at the overall quality of your answer, as well as your ability to apply legal principles, analyze fact patterns, and communicate effectively.

The MBE Scale

The MBE is reported on a scale of roughly 0-200. In UBE jurisdictions, 130 is half of the lowest overall passing score (260), so it is a reasonable minimum target.

To determine your score, the NCBE will first convert your raw score (the number of questions you answered correctly) into a scaled score.

This is done to account for differences in difficulty between administrations of the exam.

After your raw score gets converted to a scaled score, it’s then added to your scaled written score (MEE and MPT combined) to determine your overall UBE score.

Scaled Scores

The total score that an examinee receives on the bar exam is typically a scaled score.

A scaled score is a number that has been adjusted so that it can be compared to the scores of other examinees who have taken the same test.

The purpose of scaling is to ensure that the scores are accurate and reliable and to account for any differences in difficulty between different versions of the test.

For example, suppose that the average raw score on one version of the MBE is 100 points and the average raw score on a harder version is noticeably lower.

If the two versions used the same raw passing score, then it would be much easier to pass the bar exam with the first version than with the second.

Scaling ensures that this is not the case by adjusting the scores so that they are comparable.

It is important to note that a higher raw score does not always mean a higher scaled score.

In some cases, an examinee who receives a scaled score of 140 points might have answered more questions correctly than another examinee who received a scaled score of 150 points on a different version of the exam.

However, this does not mean that the first examinee performed better on the exam overall. It could simply be that the first examinee’s version of the exam was easier than the second examinee’s.

What’s important is that, thanks to scaling, the two examinees are held to the same standard for passing the bar exam.

Another factor that can affect an examinee’s total score is weighting.

Weighting is the process of giving certain sections of the exam more importance than others.

For example, in UBE jurisdictions, the MBE counts for 50% of the total score, while the MEE counts for 30%, and the MPT counts for 20%.

In other states, however, the weights may be different. For example, the MBE might count for 60%, while the MEE and MPT count for 20% each.

The weights are determined by each state’s bar examiners and can be changed at any time.

It is important to note that weighting does not affect an examinee’s score on individual sections of the exam. It only affects the overall total score.
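To make the weighting arithmetic concrete, here is a minimal Python sketch. It assumes the 50/30/20 split mentioned above and a 400-point total scale, which is the UBE maximum. The function names are our own, and the sketch says nothing about how a jurisdiction scales the written portion before adding it in.

```python
UBE_MAX_SCORE = 400

# Section weights in whole percentages (the 50/30/20 split described above).
WEIGHT_PERCENT = {"MBE": 50, "MEE": 30, "MPT": 20}


def max_points_by_section(total: int = UBE_MAX_SCORE) -> dict:
    """Split the total scale into the points each section can contribute."""
    return {name: total * pct / 100 for name, pct in WEIGHT_PERCENT.items()}


def overall_score(mbe_scaled: float, written_scaled: float) -> float:
    """Overall score as the sum of the two scaled halves.

    Assumption: `written_scaled` is already on the same 200-point scale as
    the MBE (MEE and MPT combined).
    """
    return mbe_scaled + written_scaled


if __name__ == "__main__":
    print(max_points_by_section())
    print(overall_score(135, 131))
```

On these assumptions the MBE accounts for 200 of the 400 points, the MEE for 120, and the MPT for 80.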

What Is a Passing UBE Score in Every State?

In order to pass the Uniform Bar Exam, examinees must earn a total score of at least 260, combining the MBE and the written portion (MEE and MPT); some jurisdictions require more. A score of 280 is generally considered to be a good score, and a score of 300 to 330 is considered to be excellent (the maximum possible score is 400).

Bar Exam Passing Score by State

It’s worth noting that a 280 will ensure a passing score in all states. It will also put you at about the 73rd percentile of bar exam scores.

Scores on the baby bar exam range between 40 and 100, and the average score is between 70 and 80.

Scoring high on bar exam essays is extremely important to your overall score. For many students, essay writing is the most difficult and stressful part of the bar exam. But with a little practice and guidance, you can learn how to score high on bar exam essays so you can pass the first time to keep your bar exam costs down.

FAQs on Bar Exam Scoring

How is the bar exam scored? The Uniform Bar Exam is scored by a point system, with 400 being the highest possible score.

What is the highest possible score on the bar exam? The highest possible score on the Uniform Bar Exam is 400.

What score do you need to pass the bar exam? The passing score depends on the jurisdiction but is typically between 260 and 280.

How does scoring for the bar exam work? Scoring for the bar exam works by awarding points for each correct answer. The number of points awarded varies depending on the question and the jurisdiction.

Kenneth W. Boyd

Kenneth W. Boyd is a former Certified Public Accountant (CPA) and the author of several of the popular "For Dummies" books published by John Wiley & Sons including 'CPA Exam for Dummies' and 'Cost Accounting for Dummies'.

Ken has gained a wealth of business experience through his previous employment as a CPA, Auditor, Tax Preparer and College Professor. Today, Ken continues to use those finely tuned skills to educate students as a professional writer and teacher.

California Bar Exam Grading

The California Bar Examination consists of the General Bar Examination and the Attorneys’ Examination.

The General Bar Exam consists of three parts: five essay questions, the Multistate Bar Exam (MBE), and one performance test (PT). The parts of the exam may not be taken separately, and California does not accept the transfer of MBE scores from other jurisdictions. The Attorneys’ Exam consists of the essay questions and performance test.

Essay Questions

The essay questions are designed to be answered in one hour each and to test legal analysis under a given fact situation. Answers are expected to demonstrate the applicant’s ability to analyze the facts in the question, to tell the difference between material facts and immaterial facts, and to discern the points of law and fact upon which the case turns. The answer must show knowledge and understanding of the pertinent principles and theories of law, their qualifications and limitations, and their relationships to each other. The answer should evidence the applicant’s ability to apply the law to the given facts and to reason in a logical, lawyer-like manner from the premises adopted to a sound conclusion. An applicant should not merely show that they remember the legal principles but should demonstrate their proficiency in using and applying them.

Performance Test

PT questions are designed to be completed in 90 minutes and to test an applicant’s ability to handle a select number of legal authorities in the context of a factual problem involving a client. A PT question consists of two separate sets of materials: a file and a library, with instructions advising the applicant on the task(s) to be performed. In addition to measuring an applicant’s ability to analyze legal issues, PT questions require applicants to:

- Sift through detailed factual material and separate relevant from irrelevant facts, assess the consistency and reliability of facts, and determine the need for and source of additional facts;

- Analyze the legal rules and principles applicable to a problem and formulate legal theories from facts that may be only partly known and are being developed;

- Recognize and resolve ethical issues arising in practical situations;

- Apply problem-solving skills to diagnose a problem, generate alternative solutions, and develop a plan of action; and

- Communicate effectively, whether advocating, advising a client, eliciting information, or effectuating a legal transaction.

An applicant’s performance test response is graded on its compliance with instructions and on its content, thoroughness, and organization.

Multistate Bar Examination (MBE)

The MBE is developed and typically graded by the National Conference of Bar Examiners.

Grader Calibration

The Committee of Bar Examiners maintains a diverse pool of approximately 150 experienced attorneys from which graders are selected for each grading cycle. A majority of the graders have been grading bar exams for at least five years, and many of them have participated for well over 10 years.

Six groups, each consisting of experienced graders and up to four apprentice graders, are selected to grade the essay and PT answers. The groups convene three times early in the grading cycle for the purpose of calibration. A member of the Examination Development and Grading Team and a member of the Committee supervise each group of graders. At the first calibration session, the graders discuss a set of sample answers they received prior to the session. These books are copies of answers written by a sample of the applicant pool. After this discussion, the graders receive a set of 15 copies of answers submitted for the current exam, and they begin by reading and assigning a grade to the first answer in the set. The group then discusses the grades assigned before arriving at a consensus, and the process is repeated for each answer in the set. After reading and reaching a consensus on the set of 15 books, the graders independently read a new set of 25 answers without further group discussion and submit grades for analysis and review at the second calibration session.

At the second calibration session, graders discuss the results of the first meeting, re-read and discuss any of the answers where significant disagreement was seen, and resolve the differences through further discussion. An additional 10 answer books are read, graded, and discussed before a consensus grade is assigned to each. The groups are then given their first grading assignments.

During the third calibration session, the grading standards are reviewed, and the graders read 15 additional answer books as a group to ensure that they are still grading to the same standards.

Graders evaluate answers and assign grades solely on the content of the response. The quality of handwriting and the accuracy of spelling and grammar are not considered in assigning a grade to an applicant’s answer. Answers are identified by code number only; nothing about an individual applicant is known to the graders. Based on the panel discussions and using the agreed-upon standards, graders assign raw scores to essay and performance test answers in five-point increments, on a scale of 40 to 100. To earn a grade of 40, the applicant must at least identify the subject of the question and attempt to apply the law to the facts of the question. If these criteria are not met, the answer is assigned a zero.
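The raw-score rule described above (five-point increments from 40 to 100, or a zero when the minimum criteria are not met) can be written as a short check. This is an illustrative Python sketch only; the function name is hypothetical and nothing here comes from the State Bar's own systems.

```python
def is_valid_raw_grade(grade: int) -> bool:
    """True if `grade` is a possible raw score for one written answer.

    Per the description above: 0, or a multiple of 5 from 40 through 100.
    """
    if grade == 0:
        return True
    return 40 <= grade <= 100 and grade % 5 == 0


if __name__ == "__main__":
    for g in (0, 35, 40, 62, 65, 100, 105):
        print(g, is_valid_raw_grade(g))
```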

Phased Grading

All written answers submitted by applicants who completed the exam in its entirety are read at least once before pass/fail decisions are made. Based on the results of empirical studies relative to reliability, scores have been established for passing and failing after one reading of the exam. For applicants whose scores after the first read are near the required passing score, all answer books are read a second time, and scores of the first and second readings are averaged. The total averaged score after two readings is then used to make a second set of pass/fail decisions.

To pass the exam in the first phase of grading, an applicant must have a total scale score (after one reading) of at least 1390 out of 2000 possible points. Those with total scale scores after one reading below 1350 fail the exam. If the applicant’s total scale score is at least 1350 but less than 1390 after one reading, their answers are read a second time by a different set of graders. If the applicant’s averaged total scale score after two readings is 1390 or higher, the applicant passes the exam. Applicants with averaged total scale scores of less than 1390 fail the exam.
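The two-phase decision described above can be sketched in a few lines of Python. The thresholds (1390 and 1350) come from the text; the function names and the sample scores are hypothetical, and this is an illustration of the rule as written, not the State Bar's actual process.

```python
from typing import Optional

PASSING_SCORE = 1390  # total scale score needed to pass (out of 2000)
REREAD_FLOOR = 1350   # first-read scores below this fail without a second read


def first_phase(first_read_total: float) -> str:
    """Outcome after one reading: 'pass', 'fail', or 'second read'."""
    if first_read_total >= PASSING_SCORE:
        return "pass"
    if first_read_total < REREAD_FLOOR:
        return "fail"
    return "second read"


def final_result(first_read_total: float,
                 second_read_total: Optional[float] = None) -> str:
    """Final pass/fail decision, averaging the two readings when required."""
    outcome = first_phase(first_read_total)
    if outcome != "second read":
        return outcome
    if second_read_total is None:
        raise ValueError("a second-read score is needed in the reread range")
    averaged = (first_read_total + second_read_total) / 2
    return "pass" if averaged >= PASSING_SCORE else "fail"


if __name__ == "__main__":
    print(final_result(1400))        # passes after one reading
    print(final_result(1340))        # fails after one reading
    print(final_result(1370, 1420))  # averaged 1395
    print(final_result(1370, 1400))  # averaged 1385
```

Note that a second reading only helps if it pulls the average up to 1390; a strong second read on its own is not enough.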

Results from the February administration of the exam are traditionally released in mid-May, and results from the July administration are released in mid-November.

Results are posted on the Applicant Portal. At 6:00 p.m. on the day results are posted, applicants can also access the pass list on the State Bar website.

Applicants who have failed the exam receive the grades assigned to their written answers as well as their MBE scaled score. The answer files of unsuccessful applicants will be posted in the Applicant Portal with the release of results.

Successful applicants do not receive their grades or their MBE scores and will not have their answers returned.

Reconsideration of grade

The Committee of Bar Examiners believes that its grading and administrative systems afford each applicant a full and fair opportunity to take the exam and a fair and careful consideration of all their exam answers on the bar exam, and that no useful purpose would be served by further consideration by the Committee.

All scores have been automatically checked for mathematical errors. All answers with scores within the reread range after one reading have been regraded by a different set of graders and double-checked for any mathematical errors before grades were released. For these reasons, the Committee will consider requests for reconsideration only when an applicant establishes with documented evidence that a clerical error resulted in failure or prevented the exam from being properly graded.

The Committee will not extend reconsideration based on challenges to its grading system or the judgments of its professional graders. Requests for reconsideration submitted by or on behalf of an unsuccessful applicant must be in writing and meet the criteria noted above. Requests not meeting those criteria may be summarily denied on that basis, without further explanation. All requests for reconsideration of grades must be received by the Office of Admissions no later than two weeks after the release of exam results.

Bar Exam Toolbox®

Get the tools you need for bar exam success

Advice from a Bar Grader: Tips to Maximize Your Essay Score

January 5, 2017 By Avenne McBride

Raw score, scaled score, what does it all mean? It means get all the points you can.

Both the written and MBE parts of the bar exam are scaled, which means your score may be subject to an adjustment in order to standardize the results of the exam. The scaling method is somewhat complicated and also depends on your fellow bar takers. On the MBE, some questions are weighted differently, and that may depend on how many applicants answered correctly. A question that 98% of applicants answer correctly may be given less weight than a question that only 40% answered correctly.

So don’t spend a lot of time trying to game the scaling, just think of yourself as a game show contestant trying to grab as many prizes as you can in the time allowed.

On the written portion of the exam, there are some easy techniques to pick up points that you may accidentally be leaving behind.

Make sure you answer the call of the questions.

If there are multiple parts of the question, then answer them all. If a question asks for causes of action and defenses, then make sure to cover defenses. If a question asks for the likelihood of success, then make a statement as to the likelihood. Answer the question even if the answer is “maybe.”

If a question asks for a discussion about various people or properties, make sure to discuss each and every part of the question, even if you think that there isn’t much to say on a particular point. Resist the temptation to skip a section that seems unimportant. Clearly state your thoughts briefly and then move on. For example, a question might ask for causes of action against three defendants and possible defenses. The bulk of the question focuses on Defendants #1 and #3. But don’t skip Defendant #2. If there are no causes of action and/or viable defenses for that defendant, make sure to point it out. There are points there, so make sure to pick them up.

DON’T discuss causes of action or parties that are not called for in the question. You don’t have time and they aren’t worth any points no matter how interesting or brilliant your insights may be.

The Bar exam is not the place to demonstrate your superior creative writing abilities. Leave your ego at the door and stick to a simple IRAC approach. Remember your audience. Your audience is the person grading your exam.

Use headings.

Using a heading lets the grader know that you have spotted an issue, already getting you some points. This will also help keep you on track. If you have typed for more than a page under one heading, it’s probably time to move on. You have either spent too much time on one issue or you haven’t broken out the sub-issues.

Write a conclusion without being conclusory.

Don’t forget to clearly state your conclusion at the end of each section so that the grader has easy access to the destination of your analysis. You may think that your discussion speaks for itself and you don’t want to be repetitive. A one-sentence conclusion can wrap it up and demonstrate that you understand the issue, even if you don’t have a rock-solid outcome. Try something like: “In conclusion, after considering all the factors discussed above, the Court may grant injunctive relief.” This shows that you understand the law and have drawn a conclusion. That is worth points, and those are points that the grader wants to give to you. Let them.

Outline it!

If you run out of time, use an outline format to cover what you can. Listing an issue with a short rule in incomplete sentences will be given points. Don’t worry about being perfect as the clock ticks down; just get your knowledge down on the paper. You can’t get points without demonstrating your understanding, and sometimes you can get the majority of points by using a quick outline format.

DON’T stop suddenly. An incomplete essay that ends mid-sentence, with sections unfinished and issues unaddressed, is a red flag for the grader that you are not in control of your time or this essay subject.

Clean up oversights or mistakes quickly.

If you have missed a section or gotten disorganized in your essay, just add a clean up section with a clear heading. Points are not deducted for the order in which subjects are discussed. If the call of the question asked for defenses and you realize that you have skipped the defenses for each cause of action, then add a section and label it “defenses to all causes of action.” This may not be the most elegant organization but it immediately lets the grader know that you didn’t forget the discussion of defenses and you just got points.

DON’T start going back through your essay and trying to add to each section. This will take up twice as much time for the same number of points.

Writing high scoring essays for the bar exam is a game of skill that anyone can master. Follow the rules and make it easy for the grader to give you that score that you deserve!

About Avenne McBride

Avenne McBride is a Bar Exam Tutor for Bar Exam Toolbox. After graduating from University of San Francisco Law School and passing the California Bar, Avenne jumped right into a litigation job practicing plaintiff’s personal injury law and was thrilled to get right into the courtroom. But after a few years of trial work, Avenne decided to get involved with the Bar Exam process and became a grader, eventually transitioning to work in the non-profit sector.

Along the way Avenne worked for Legal Aid in Oakland and practiced family law specializing in child custody cases. The best part of the work was interacting with clients and helping regular people negotiate the legal system. Based on this experience, Avenne decided to spend more time teaching and educating.

So after many years of grading and working on the development of the California Bar Exam, Avenne is taking her experience to the other side of the table to work directly with students preparing for the exam.

Copyright 2024 Bar Exam Toolbox®™

Test Prep Insight is reader-supported. When you buy through links on our site, we may earn an affiliate commission. Learn more

What Is A Good Bar Exam Score?

Whether you just received your official score or you're still preparing, many students wonder what is a good score on the bar exam.

Like any major professional exam, you’ll likely want to know what is considered a good bar exam score. This is true whether you’re just starting to prepare or you already received your official score and now want to see how you stack up against your peers. In this detailed guide, we cover how the bar exam is scored, as well as what scores and percentiles are deemed “good” in the legal world.

How Is The Bar Exam Scored?

The UBE has a possible score of 400. It consists of three components: the Multistate Performance Test (MPT), the Multistate Essay Exam (MEE), and the Multistate Bar Exam (MBE).

The MPT and MEE together make up a total of 200 points and are scored by jurisdiction. However, the MBE is scored by the National Conference of Bar Examiners, and is worth 200 points itself.

Thus, the MBE is worth 50% of your score, and the MPT/MEE together make up the other 50%. Specifically, the MEE is worth 30% and the MPT 20%.
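
As a rough illustration of this weighting, the short Python sketch below converts the percentages above into points on the 400-point scale. The names `UBE_TOTAL_POINTS`, `WEIGHT_PERCENT`, and `component_points` are made up for this example and are not NCBE terminology.

```python
# Illustrative only: the weights and the 400-point total come from the text above.
UBE_TOTAL_POINTS = 400
WEIGHT_PERCENT = {"MBE": 50, "MEE": 30, "MPT": 20}


def component_points(total=UBE_TOTAL_POINTS, weights=WEIGHT_PERCENT):
    """Return the number of points each component contributes to the total."""
    return {name: total * pct // 100 for name, pct in weights.items()}


points = component_points()
written_points = points["MEE"] + points["MPT"]
print(points)
print("Written portion:", written_points)
```

Under these assumptions, the MEE and MPT account for 120 and 80 points, which together match the 200 points assigned to the written portion.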

An important point to keep in mind is that you will not be given what is referred to as a “raw score”, which is the number of questions you answered correctly.

What you will be given is what is termed a “scaled score.” While your raw score is used to determine your scaled score, this is done by the NCBE, and they do not release or publish the formula they use to calculate this.

This can be frustrating since you will not know the exact number of correct answers you gave, and therefore some feel you cannot adequately prepare for a retest if you were not satisfied with your initial score.

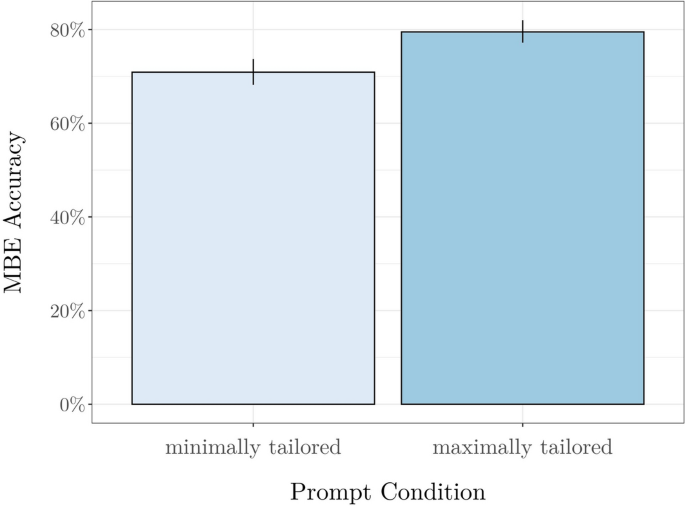

Another caveat is that the MBE, the portion explicitly scored by the NCBE, is graded on a curve. So if you think you might have scored well, once you see the results you may have to reconsider. A 10-15 point difference in raw score can mean a 30%+ difference in scaled score.

What Is Considered A Good Bar Exam Score?

While your UBE report should clearly state if you passed or failed, a score of 280 is a passing score in every state. The lowest possible passing score of 266 will suffice in states like South Carolina, Montana, and some others.

Depending on how many people have taken the UBE, a score of 280 is approximately the 73rd percentile. A 300 is in about the 90th percentile, and 330 is in the top 1% of all scores.

Keep in mind that out of the 200 questions on the MBE, only 175 are actually scored. The remaining 25 are ‘pretest’ questions that do not count towards your overall score.

Tips For Scoring High On The Bar Exam

Outlines are key.

Break down each outline into manageable pieces. For example, if you start with Torts, chop that up into pieces like “negligence”, which has its own subsections of duty, breach, causation, and harm. Then do the same for “intentional torts”, “intentional tort defenses”, and so on.

Once you have your chunks, memorize them. Cover your outline and see if you can recite it. Change up the order and do it again. This will ensure that the information gets deeply embedded.

Once you can recite one portion verbatim, move on to the next after a brief bit of downtime. Make sure you are taking breaks, or you will get burnt out. Dealing with information in volumes like this means that breaks are an absolute necessity or fatigue will set in and your capacity to retain the information will be severely diminished.

Review everything after your current active review period. Then put it away, and get some rest. Studies have shown time and again that sleep increases retention and promotes memory formation. Do not deprive yourself of these benefits.

Memorization

The first thing, and arguably one of the more effective ways to raise your score by 20 points or more, is to memorize the law. Memorize it verbatim. Do not mistake your excellent overall understanding of the law for having it memorized.

The National Conference of Bar Examiners grades the MBE portion of the bar based on nuances and intricacies of the law. So it follows that the easiest way to squeeze more points from that portion is to memorize the nuances and intricacies of the law.

Understanding

Once you have the memorization down, you need to make sure you understand all the things you have memorized. Ensure that you can explain the principles and functions of the laws and codes you now know by memory.

Explain the concepts out loud, and explain them to laypeople. You should have created outlines that help you both memorize and understand the material you will be tested on. Use them and refine them.

An ideal length for your outlines is about 40-50 pages: long enough to cover the material without being overly detailed.

Methodical Application

Lots of law students try to race through the MBE portion without giving much thought to the actual substantive content of the answers. Focus on quality over quantity, but pace yourself.

Use an hour or two daily to completely dissect and examine MBE questions and the fact patterns they describe. Note dates or specific events that help illuminate the issue and the rule that is being tested in that question. Bar Prep Hero is an affordable prep solution that offers official MBE questions for practice purposes.

Evaluate each answer option and determine why it is either right or wrong. Even once you have the correct answer, determine why the other options are not correct. While this may lead to spending 15-20 minutes on a given question, you will gain more from it.

Use Your Resources

You have countless resources at your disposal, such as study groups, tutoring, and actual MBE questions. The NCBE even releases actual past questions for study purposes, and if you are using study aids or bar review courses that do not advertise that they use official NCBE questions, they generally do not.

What is a passing bar exam score?

What score is deemed “passing” totally depends on your state. For some states, a UBE score as low as 266 (out of 400) is considered passing. In other states, you need to score 280 or above. It just depends on your jurisdiction.

What is a good bar exam score?

Given that the bar exam is a pass/fail test, a “good” score is one that gets you a passing score report. That means a good score will turn on what the cut-off threshold is for passing scores in your individual state.

- Sep 24, 2019

Breaking down Essay Grading by the California Bar Exam

If you are gearing up to take the February 2020 California Bar Exam, you may be wondering how the California bar essay portion is graded. California recently made some changes to its bar exam, going from a three-day examination period to two days. Day one is the written portion of the exam and consists of five one-hour essays and one 90-minute Performance Test.

California divides the graders into six groups, each consisting of 12 experienced graders and up to 4 apprentice graders. Both groups are supervised. The graders assign a raw score to each essay on a scale from 40 – 100. The State Bar of California has explained, “in order to earn a 40, the applicant must at least identify the subject of the question and attempt to apply the law to the facts of the question. If these criteria are not met, the answer is assigned a zero.” We’re going to go out on a limb here and assume you want to hit a score of 65 and above. That’s exactly what our inexpensive materials are geared to accomplish.

A score of 55 is designated as a below passing paper. The applicant missed or incompletely discussed two or more major issues. The applicant had a weak or incomplete analysis of the issues addressed and the overall organization was poor.

A score of 60 is a slightly below passing paper. The applicant may have missed or incompletely discussed one major issue. Discussion of all issues was incomplete and organization of the issues was poor.

A score of 65 is an average passing paper. Applicant had a lawyer-like discussion of all major issues and missed some minor issues. Overall paper could have been better.

A score of 70 is a slightly above average paper. Applicant had a lawyer-like discussion of all major issues and missed some minor issues. Paper could have been better, but analysis and reasoning warrants more than a 65. Well organized paper.

A score of 75 is a distinctly above average paper. Applicant discussed all major issues in a lawyer-like fashion and discussed the ancillary minor issues. Overall, a well organized paper.

A score of 80-85 is an unusually complete and thorough paper. Applicant discussed all major and minor issues in a lawyer-like fashion and the paper was very well organized.
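
The bands above can be summarized as a simple lookup. The Python sketch below is only an illustration: the function names are hypothetical, the descriptions paraphrase the list above, and the passing line of 65 is taken from this post rather than from any official State Bar tool.

```python
# Raw essay score bands as described in the post (illustrative paraphrase).
SCORE_BANDS = {
    55: "below passing",
    60: "slightly below passing",
    65: "average passing",
    70: "slightly above average",
    75: "distinctly above average",
    80: "unusually complete and thorough",
    85: "unusually complete and thorough",
}

PASSING_RAW_SCORE = 65  # assumption from the post: 65 and above is a passing paper


def describe_score(raw_score):
    """Return the band description for a raw score, or None if it is not listed."""
    return SCORE_BANDS.get(raw_score)


def is_passing(raw_score):
    """True when the raw essay score is at or above the passing line."""
    return raw_score >= PASSING_RAW_SCORE


print(describe_score(70), is_passing(70))
```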

Components used for grading include: organization/format, issue spotting, rule statement, and analysis. A passing paper will have use of headings and IRAC used to organize issues discussed. Issues are generally discussed in a logical order. A passing paper will discuss all the main issues, but may fail to discuss some of the minor issues. A passing paper will have clear rule statements that may be stated verbatim or in your own words that blend some of the concepts into one statement. Rules are correctly applied to the facts of the case and there is infrequent discussion of both sides of an issue, but paper still discusses major issues raised from the fact pattern.

We hope this gives you a better idea of what a passing (65 and above) paper looks like. Feel free to head to our sample page to see a CBB sample of Civil Procedure. All subjects are organized in the same fashion and are specifically geared towards a passing score of 65 and above.

How to Tackle Essay Writing on the Bar Exam

One skill that is expected to be cultivated and refined during law school is the ability to write well. This makes sense, since good writing will be essential for many legal careers. You will likely need to write memos, client letters, motions, petitions, briefs and other legal documents— so good writing is important! Consequently, the bar exam takes note of this and makes writing an essential component of it.

Whether you’re taking the Multistate Essay Exam or a state-specific bar exam, you will be writing lots of essays during the bar exam and in your preparation for it. So here’s what you need to know about essay writing on the bar exam and strategies you can implement to improve your score.

Check out the most important bar exam essay writing tips below!

Multistate Essay Exam (MEE) Jurisdictions

Most states use the Multistate Essay Exam. If you’ll be testing in one of these states, here are the basics you need to know:

There are 6 essay questions in total. This part of the test is 3 hours, so you have 30 minutes per question. Also, the subjects for this portion of the test cover:

- Partnerships

- Corporations and limited liability companies

- Civil procedure

- Conflict of laws

- Constitutional law

- Criminal law and procedure

- Real property

- Secured transactions

- Trusts and future interests

- Wills and estates

While any of these topics are fair game, these particular topics make up the majority of the MEE:

- Corporations and LLCs

- Family law and trusts

- Future interests

Consequently, you may want to spend extra time preparing for these areas of the law while also studying for the other subjects.

The good news is that there are guides you can use to determine the most highly tested essay rules. These bar exam study resources will identify these rules and teach you additional rules of law.

Here’s another important tip: focus your time on these major rules instead of wasting too much energy on nuanced rules that are less likely to be tested.

Keep reading for more important study tips to help you pass the MEE:

Bar Essays Studying Tips

The first part of learning how to tackle the essay writing portion of the bar exam is to develop a solid study plan. Your plan should incorporate the following:

Learn More About the IRAC Method and Format

You may have used a variety of writing styles in law school, such as IRAC, CRAC or CREAC. However, the IRAC structure is the most commonly used one on the bar exam, and is what bar examiners will expect. Hence, you need to be familiar with this writing system:

- I – Issue

- R – Rule

- A – Analysis

- C – Conclusion

This system ensures that you write concisely and only include the necessary information. It’s not flowery and won’t contain a lot of excess content— which is a good thing, since you’re on such a constrained time limit!

As you practice, read through your answers and label each sentence with an I, R, A, or C. If a sentence cannot be labeled with one of these letters, it probably does not belong.
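
If you type your practice answers, this labeling check can be sketched in a few lines of Python. This is a hypothetical self-review helper, not part of any bar prep tool: you still assign the labels by hand, and the code only flags sentences left without an I, R, A, or C. The sample sentences are made up and simplified.

```python
IRAC_LABELS = {"I", "R", "A", "C"}


def sentences_to_cut(labeled_sentences):
    """Return the sentences whose label is not one of I, R, A, or C."""
    return [text for label, text in labeled_sentences if label not in IRAC_LABELS]


# Hand-labeled practice answer (made-up example text).
practice_answer = [
    ("I", "The issue is whether the court may grant injunctive relief."),
    ("R", "State the governing rule for injunctive relief here."),
    ("", "Injunctions have a long and fascinating history."),
    ("A", "Here, the facts show harm that money damages cannot repair."),
    ("C", "Therefore, the court may grant injunctive relief."),
]

for sentence in sentences_to_cut(practice_answer):
    print("Consider cutting:", sentence)
```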

Practice Essay Writing Each Week

When you spend so much time studying for the bar exam , it may feel tempting to skip practicing the lengthy essay portion of the test. However, this is one of the biggest mistakes made by most test takers.

Bar essays are an essential component of the test; they can often help leverage a higher score if you don’t do as well on some of the other test portions. Furthermore, while reviewing the rules of law is important, writing about them can show you understand them and know how they apply.

Basically, don’t leave practicing these essays until the end of your preparation. Instead, make practicing essays part of your weekly study plan!

Practice Under Timed Conditions

When you first begin practicing the essay portion of the bar exam, you may not want to time yourself so that you can be sure you are spotting all the issues and honing your writing style. However, toward the middle of your study time, you will want to start practicing under timed conditions.

It is not enough to know how to write a good essay. You need to know how to write a good essay quickly. You need to be able to quickly discuss the most important issues and know when not to elaborate on others.

The best way to study for these questions is to find previous MEE questions and practice them under timed conditions. Then, review the analysis to determine how you did.

Review Rules the Last Two Weeks of Your Study

Focus on memorizing as many rules of law as possible during your last two weeks of studying. You’ll need to be able to recall these basic rules as part of your essay writing without hesitation, so be sure that you can recite rules of law without even thinking about them.

Tips for the Day of the Bar Exam

Okay, so now it’s the day of the bar exam— you need to know how to truly tackle these questions in the moment of truth. Here’s what you need to do:

Plan The Time You Have for Writing Essays

Before beginning this portion of the test, you should have a plan on how you will manage your time, such as:

- First 10 minutes: Read the essay prompt. Maybe read it multiple times. Don’t rush this part; your ability to recall this information will be essential to answering the question. Also, outline your answer as you read through the prompt.

- Next 15 – 17 minutes: Write your answer.

- Last 3 to 5 minutes: Review your answer to check for completion and to make necessary edits.

Stick to this timeline for every question. If you start going over by 5 minutes on every question, you won’t have enough time to tackle the last question. Ultimately, it’s far better to get out an analysis of all the questions than to answer one question perfectly and not even address another.
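
To see why small overruns add up, here is a minimal Python sketch of the arithmetic, assuming the MEE format described earlier (6 questions in 180 minutes). The constant and function names are hypothetical.

```python
# Assumed MEE format from earlier in this article: 6 questions in 3 hours.
TOTAL_MINUTES = 180
NUM_QUESTIONS = 6


def minutes_left_for_last(overrun_per_question):
    """Minutes remaining for the final question if each earlier one runs over."""
    per_question = TOTAL_MINUTES // NUM_QUESTIONS  # 30 minutes each
    used = (NUM_QUESTIONS - 1) * (per_question + overrun_per_question)
    return max(TOTAL_MINUTES - used, 0)


for overrun in (0, 2, 5):
    print(overrun, "min over ->", minutes_left_for_last(overrun), "min left")
```

Under these assumptions, running 5 minutes over on each of the first five questions leaves only 5 minutes for the sixth.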

Make an Outline

Making an outline can help you organize your thoughts and create a plan on what you will be writing about. Mark up the prompt as you go— you may want to highlight or underline certain information to help your recall later.

Try to make this outline clear, such as making a bullet list of items related to the prompt. If you run low on time, you can always copy and paste this information to provide a semi-answer to the prompt. Write your rule statement and list the relevant facts that will support your analysis. Also, consider how much time you will need to discuss each subpart of the answer.

Apply the IRAC Structure

Now it’s time for you to apply what you’ve learned. Use IRAC to fully answer the question.

Briefly state the issue in a bolded heading. Issues are usually clearly stated on bar exam essay questions rather than hidden in a fact pattern, so this should be an easy way to pick up points. Restate the issue and move on to the next part of your answer.

State the rules that apply to the case. This is where rote memorization comes into play, since you need to be able to state the proper rule that applies to the question. Bold key terms to show that you know what rules and terms apply. This will get you the points you need on this section.

The summary of rules should be clear and concise and should demonstrate that you understand what is involved. Only address those rules that actually apply to this case and address the specific question.

Show how the rule applies, given the particular fact pattern. This will be the longest portion of your answer. However, your analysis should still be shorter than your analysis in your legal writing class. You can pick up (or lose) a lot of points in this portion of the answer! You need to demonstrate that you know how to apply the law to the facts. Generally speaking, the more facts you’re able to explain, the higher your score will be.

Most of the facts in the fact pattern will be there for a reason— and you need to explain why these facts matter in your analysis. Provide a step-by-step analysis of how the facts support your conclusion. You may be able to score extra points by identifying counter-arguments or a majority and minority view.

Conclusion

End with a brief conclusion. One sentence is fine here. Perhaps unlike law school exams, there is usually a “right” conclusion. Some writing structures will use a conclusion first and then end with a conclusion, but this is not recommended on the bar exam. If you start with the wrong conclusion, the grader will look for ways to prove why you are wrong while grading your answer; therefore, save your conclusion for the end!

Organize Your Content

Make your essay simple to read by taking advantage of all the tools at your disposal. Use paragraph breaks to organize your content, creating clear I, R, A, and C sections. Additionally, bold and underline key words and principles of law. Many essay graders will be scanning your work, so make it easy to identify that you understood the legal issues involved by drawing their attention to these key terms.

Also, use transitional words to qualify certain statements and to explain where you are going with your answer. This makes it easier for the grader to follow your analysis, as well as helps you to stay on track.

Answer the Question

Seems obvious, right? Listen:

While it seems simple to just answer the question you are asked, many bar exam essay questions include numerous fact patterns, potential rules of law that apply, and even some red herrings. Be sure that you only answer the question that is asked; don’t go off on a tangent that will not score you any extra points!

Read over the instructions to the question and follow these instructions, even if that means ignoring something or assuming certain facts are true. Any time you devote to issues that are not relevant to the instructions takes away from time that can score you more points.

Manage Your Time

Now that you’re in the middle of your answers, keep a close eye on time. It can be tempting to take just a few more minutes to feel you completed a question, but this can come back to haunt you by taking away necessary time from another question. Set alarms if you need to — and are permitted to — so that you know when time is up for each section. Also, you may want to set a reminder a few minutes before your allotted time so that you can quickly wrap up the question before moving on to the next one.

With that being said, avoid writing a partial essay and then moving on to another one. It can take several minutes to regain your bearings and remember what the essay was about when you switch back and forth. Instead, finish each question in the allotted time and then move on to the next.

Quick Tips for Essay Writing

Here’s a quick round-up of tips to keep you on track when preparing your bar exam essays:

- Read the facts more than once. Don’t rush this part!

- Don’t write a lengthy, historical background of the law. Instead, make it concise.

- Don’t write a long analysis regarding policy if the question does not ask for it.

- Present counter-arguments, but spend less time on them than on your main arguments.

- Provide a clear and decisive conclusion.

- Pace yourself. The two-day bar exam is a marathon, not a sprint. Approach each question with patience and don’t try to rush it.

- Don’t talk to anyone about your answers. This will undoubtedly make you doubt yourself; you don’t need a hit to your self-confidence at this time!

- Have a fun plan for what to do after the bar exam to have something to look forward to.

So, there you have it— a plan to help you tackle the essay portion of the bar exam. Use these strategies to help boost your score and you will soon be a licensed attorney!

Thanks for reading and good luck on your exam!

Frequently Asked Questions About Bar Essays

How do you write an essay for the bar exam?

There’s a specific structure that bar examiners expect when you write answers to essay questions. This structure is called IRAC, which is short for “Issue, Rule, Analysis, and Conclusion.” When writing a bar essay, try and structure all of your sentences around these four subjects in a way that makes sense.

How many essays are on the bar exam?

The essay portion of the bar exam is called the Multistate Essay Exam, or MEE for short. It is made up of six different essay questions that you must write answers to over the course of three hours. The subjects can vary depending on what test you take, but all are related to the legal field and will require excellent logical reasoning and critical thinking to earn a high score.

How long should bar exam essays be?

Although there may not be a set word limit for your bar exam essay, a good rule of thumb is to write at least 1,000 words for each answer. However, you should avoid padding out your essay’s word count with excessively detailed descriptions of legal concepts; stick to the IRAC format and ensure each word in each sentence has a purpose.

Is it better to write or type the bar exam?

There’s no universal answer to this question, since some students will prefer to write by hand and others will prefer typing. There are significant benefits to typing your bar exam essay answers over using a pen and paper, such as easy erasing and the ability to copy and paste. However, typing carries its own risks: on rare occasions, power issues have forced essay writers to resort to pen and paper, whereas handwriting makes it impossible to lose progress due to a software error.

Valerie Keene is an experienced lawyer and legal writer. Valerie’s litigation successes have included wins for cases involving contract disputes, real property disputes, and consumer issues. She has also assisted countless families with estate planning, guardianship issues, divorce and other family law matters. She provides clients with solid legal advice and representation.

An Essential California Bar Exam Supplement

REAL GRADED ESSAYS:

BarEssays.com has spent years and countless hours constructing our database of California Bar Exam essays . You will have access to thousands of authentic essays that have been graded by the California Bar Examiners.

BAR GRADER REVIEWS:

With each premium subscription to BarEssays.com, you gain access to hundreds of Professional Bar Grader Model Answers and Professional Bar Grader Reviews of selected essays in the BarEssays.com database, written by official former graders of the California Bar Exam.

OUTLINES, CHECKLISTS, TEMPLATES

With each premium subscription to BarEssays.com, you receive a complete set of California Bar Exam Short Review Outlines, Checklists, and Essay Attack Templates.

EASY SEARCH:

The BarEssays.com search engine allows you to browse essays by subject, essay score, handwritten/typed essays or examination year and includes links to officially released answers. The BarEssays.com search engine will dramatically increase the efficiency of your California Bar Exam studies.

A Database of Over Three Thousand Authentic Graded California Bar Exam Essays

BarEssays.com is a unique and invaluable study tool for the essay portion of the California Bar Exam. We are, by far, the most comprehensive service that provides REAL examples of REAL essays and performance exams by REAL students that were actually taken during the California Bar Exam and graded by the California Bar Examiners. Since launching in 2007, thousands have successfully used the BarEssays.com essay database to prepare for the essay portion of the California Bar Exam, with several entire law schools, review courses, and tutors providing access to all of their students.

Mastering the Virginia Bar Exam Essay Section: A Comprehensive Guide

Tackling the essay section of the Virginia Bar Exam requires a unique strategy. This guide aims to provide a comprehensive understanding of how to effectively navigate this challenging segment of the test, given its different grading standards and essay expectations compared to other states.

Understanding the Unique Nature of the Virginia Bar Exam

Historically, each state had its own unique bar exam. Major bar prep programs like Barbri, Kaplan, and Themis used to customize their materials for every state. However, as most states adopted the Uniform Bar Exam (UBE), these prep programs adjusted their materials to suit a larger audience. They still offer customized outlines and lectures for Virginia, but much of their general essay-writing advice is not well-tailored for Virginia essays and is more accurate for students taking the Multi-State Essay Exam as part of the UBE.

The issue lies in the fact that the essay section of the Virginia Bar exam differs significantly from the UBE’s multi-state essay exam in terms of what it assesses and how it is graded. Consequently, passing the Virginia essay exam calls for specific, tailored advice, which often diverges from the general essay writing advice provided by these prep courses.

The Essentials of Navigating the Virginia Bar Exam

More than just a test of legal knowledge, the Virginia Bar exam gauges your ability to distinguish between relevant and irrelevant information. The examiners will supply specific questions related to the fact pattern, unlike in UBE states or on law school exams, where you may have been asked to find all legal issues within the pattern.

Furthermore, the examiners grade partially based on relevance and scope, meaning you could lose points for including legally correct rules that don’t apply to the question. This practice contrasts with the multi-state essay exam, where the goal is often to find and discuss all legal issues in each fact pattern.

There is almost no overlap between the MBE and the Virginia Essay Exam

Unlike UBE states, where examinees take the MBE and the MEE, in Virginia, you must approach the Multistate Bar Exam (MBE) and the Virginia essay exam as two completely separate tests. Besides federal jurisdiction and the Uniform Commercial Code, there’s hardly any overlap between the two exams, necessitating customized study strategies. What might be a correct response on the MBE could be incorrect on the Virginia essay exam, and vice versa.

How to Prioritize Study Topics

A common yet misguided practice is to predict the frequency of topics that might appear on the exam by using a “subject frequency chart”. But the Virginia exam is no more or less likely to test a particular subject based on past frequency. Instead, the emphasis should be on the weight each topic carries when it does appear, as that significantly influences your overall score and determines how to spend your valuable study time.

The unique grading practices and essay expectations of the Virginia Bar exam present a distinct challenge. To succeed, it’s critical to understand the workings of the exam, adapt your study strategies accordingly, and prioritize topics based on their weight rather than frequency.

As you embark on the journey of preparing for the essay section of the Virginia Bar Exam, there are a few strategies and concepts that will be key to your success. With a focus on understanding the weight of different topics, applying a solid framework for answering questions, and a thoughtful approach to studying, you will increase your chances of achieving a positive outcome.

Prioritizing Subjects in Your Study

Knowing where to focus your attention is a crucial part of preparing for the bar exam. Virginia Civil Procedure is the most important subject, sometimes accounting for an estimated 20% of the exam points. Therefore, a solid grasp of this subject will set you miles ahead of the competition. Virginia procedure is often tested with its own fact pattern, and elements of it are also tested within other subject areas. Wills and Federal Jurisdiction also rank high, and when tested, they often form the core of entire fact patterns.

Less important are the second-tier and lower subjects. Subject charts within the LexBar course sort subjects into groups, or “tiers,” according to how much of your study time they deserve.

The third-tier subjects, although they may come up relatively often, are less important. Spending significant time on these at the expense of the higher-tier subjects can lead to sub-optimal outcomes: you shortchange the higher-weight subjects in exchange for trying to learn every detail of a very low-weight subject.

Structuring Your Essay: The Rule-Analysis-Conclusion (RAC) Method

A useful strategy for structuring your essay responses is the Rule-Analysis-Conclusion (RAC) format. This method involves grouping all your rules together, followed by the analysis, and then concluding with a succinct answer for each sub-question under a given fact pattern.

Avoid intermingling your rules and analysis, as clarity is key. Ensure your answer is precise and direct, mirroring the examiners’ labeling. No other subheadings are needed.

Understanding and Answering Essay Questions

When it comes to the actual answering of essay questions, a methodical approach is recommended. Start by reading the question, then preview what your conclusion might sound like. Next, read the fact pattern to figure out what facts are relevant to the rules. Your conclusion should mirror the question, forming a declarative sentence that directly answers the question. This approach keeps you focused on the scope of the question and prevents you from veering off into irrelevant facts or rules.

Practicing with Past Virginia Bar Exams

Past exams can be a powerful tool in your preparation. They allow you to get a feel for the style of questions and how they are structured. Look out for multi-part questions, and practice turning the question into a declarative sentence to create your conclusion at the end of your answer.

Remember, the goal is to understand the question, decide on your conclusion, determine which legal rules apply, and finally use the facts to provide relevant analysis under those rules. This meticulous approach to essay answering will help you master the Virginia Bar Exam essay section.

The Virginia Bar Exam essay section is not merely about producing a good analysis; it’s about demonstrating your knowledge of the specific legal rules that apply within Virginia. In other words, a beautiful, logically constructed analysis can fall flat if it doesn’t include the correct legal rules. Therefore, the first step in succeeding in the essay section is to learn the law. But how should one go about this?

Learning Through Testing

One effective method of learning and remembering legal rules is through testing. This might involve writing full tests or, more practically, using a tool like flashcards. Creating flashcards on the specific legal rules you need to remember, and going through them repeatedly until you’ve memorized them, can be a very effective strategy. For instance, a flashcard could simply state “federal diversity jurisdiction” on one side, and on the other side, the associated legal rule: “The parties are completely diverse, and the amount in controversy is greater than $75,000 exclusive of costs and interest. Complete diversity means that no plaintiff is a citizen of the same state as any defendant.”

This method of learning leverages the power of spaced-repetition testing, which research has shown to enhance memory retention. Even getting the answer wrong can help, as it primes your brain to correct and remember the right answer the next time you see it. Testing yourself could also take the form of practicing essays and self-grading, then making a note of every legal rule you miss or state incorrectly and committing those to memory.

Where to Get Material for Study

Deciding where to source your study material depends on the importance of the subject matter. For heavily tested subjects like Virginia procedure, an outline from a major bar prep program, such as Barbri, Themis, or Kaplan, could be invaluable. These detailed outlines can be transformed into flashcards for repeated study and memorization.

However, for less significant subjects like the Uniform Commercial Code (UCC), past exam questions and sample answers may suffice. Going through these past exams and extracting the legal rules will equip you with most of the legal rules that may come up on your exam. Virginia tends to test the same rules repeatedly, so familiarizing yourself with these can give you an edge.

The number of flashcards you make should reflect the importance of the subject matter. For instance, Virginia procedure, being the most important subject, should yield close to 300 flashcards. On the other hand, less significant subjects can have fewer flashcards.

Preparing for Certain Questions on the Virginia Bar Exam

Certain questions appear so often that you should have “rule scripts” memorized verbatim, ready to recite at a moment’s notice with virtually no forethought. For instance, questions involving Dillon’s rule, subject matter jurisdiction by diversity, and perfecting state court civil appeals come up so often that you should be able to write the rules without having to think. Having these rules hard-coded in your mind can save a significant amount of time during the exam.

Preparing for the essay section of the Virginia Bar Exam can be a daunting task. With the proper strategies and a clear understanding of how to approach legal analysis, you can significantly improve your chances of success. Let’s look at essential aspects of legal analysis, discuss key elements, and provide you with valuable tips to effectively tackle essay questions.

Understanding Legal Analysis

Legal analysis forms a crucial part of the “RAC” (Rule, Analysis, Conclusion) organization format that you should use for the Virginia exam. In the analysis section, you show your ability to connect the facts of the case to the relevant rules and draw logical conclusions. By effectively marrying the facts with the rules, you highlight your understanding of the law and its application.

Identifying Relevant Rules and Elements

To perform a strong legal analysis, you must start by clearly identifying the rules that apply to the given essay question. Acronyms and keywords can help you recall these rules, such as the “OCEAN” acronym for the elements of adverse possession: open, continuous, exclusive, adverse (hostile), and notorious. When encountering an adverse possession question under the real property rubric, you know that all these elements must be met for a period of 15 years in Virginia to establish title by adverse possession.

Crafting an Effective Analysis

In your analysis section, it is crucial to connect the facts of the case to the identified rules. By linking the facts to the rules, you show a thorough understanding of how the law applies in each situation. For example, if the fact pattern states that John occupied the parking lot openly and notoriously, with a shed that has remained there for seven years, you can assert that his possession was open and notorious, and continuous for seven years, because his shed was in an open parking lot used by others and the facts tell us it remained there for seven years. This process of connecting the facts to the rules should be repeated throughout your analysis. Every rule element needs an associated fact, and every fact that you use in your analysis must directly connect to a rule element, or it shouldn’t be there.

Formulating a Precise Conclusion for the Virginia Bar Exam

After presenting your analysis, it is important to conclude your essay with a concise and precise statement that directly answers the question posed. For instance, if the question is, “Does Sam have standing to sue Jackson to require him to remove a shed?” then your conclusion should be, “Sam (does or does not) have standing to sue Jackson to require him to remove the shed.” By mirroring the question in your conclusion, you provide a clear and direct response that your grader will immediately recognize.

Applying the Approach: An Example Essay Question

To further illustrate the concepts we just covered, let’s analyze a sample essay question within the realm of real property. The question asks whether Sam has standing to sue Jackson to require him to remove a shed from the shared parking lot of a condominium association. The relevant rule states that in Virginia, only a unit association has standing to sue for misuse of common elements, while individual unit owners lack standing.

Marrying Facts to Rules

To decide which facts matter, we consider the two elements of the rule: 1) individual unit owners lack standing and 2) misuse of common elements. Among the provided facts, the fact that Jackson occupied ten parking spaces in the common parking lot stands out, as it aligns with the second element of the rule. Additionally, as to the first element of the rule, it is important to note that Sam is not the condo association itself but only an individual unit owner.

Writing an Effective Analysis

In the analysis section, we would say, “Jackson occupied ten parking spaces in the common parking lot, which is a common element. Sam is merely an individual unit owner and does not represent the condo association itself.” Notice how this analysis is short but complete. We have addressed each element of the two-element rule. We should refrain from introducing irrelevant facts or guessing about potential outcomes just to make the answer longer. Stop when you’ve fully answered the question. There is no need to speculate about what the condo association might do, or how Sam might be able to get the shed removed. Just answer the question.

Crafting a Precise Conclusion

Concluding our essay, we would assert, “Therefore, Sam does not have standing to sue Jackson to require him to remove the shed.” This conclusion uses the language of the question itself, converting it into a declarative statement.

Common Mistakes to Avoid

When tackling essay questions, it is crucial to remain focused on the question at hand and not try to solve problems beyond its scope. Some common errors to avoid include:

- Guessing about future sub-questions or additional issues that may arise.

- Assuming permissions or actions of other parties without clear evidence.

- Including irrelevant facts, such as the awareness or knowledge of the parties involved.

- Attempting to solve broader problems beyond the specific question asked.

To excel in the Virginia Bar Exam essay section, keep these key points in mind:

- Understand the “RAC” organization format and focus on the analysis section.

- Find the relevant rules and elements necessary to address the question.

- Connect the facts of the case to the identified rules in your analysis.

- Craft a precise conclusion that directly answers the question.

- Avoid common mistakes like issue spotting and speculation beyond the question’s scope.

Preparing for the essay section of the Virginia Bar Exam requires a strategic approach to maximize your chances of success. Let’s look at some valuable tips and resources that will help you study effectively, navigate the exam, and improve your essay writing skills. Additionally, we will discuss the LexBar online course, a resource designed to help you prepare for the Virginia Bar Exam.

Understanding the Unique Virginia Bar Exam Structure

The Virginia Bar Exam essay section consists of separate, unrelated sub-questions under each fact pattern. Each question requires a distinct analysis and does not build upon earlier answers unless the exam question specifically says otherwise. It is essential to read each question carefully to avoid mistakenly repeating rules or analysis from earlier responses.