Washington Monthly

The World Is Choking on Digital Pollution

Tens of thousands of Londoners died of cholera from the 1830s to the 1860s. The causes were simple: mass quantities of human waste and industrial contaminants were pouring into the Thames, the central waterway of a city at the center of a rapidly industrializing world. The river gave off an odor so rank that Queen Victoria once had to cancel a leisurely boat ride. By the summer of 1858, Parliament couldn’t hold hearings due to the overwhelming stench coming through the windows.

The problem was finally solved by a talented engineer and surveyor named Joseph Bazalgette, who designed and oversaw the construction of an industrial-scale, fully integrated sewer system. Once it was complete, London never suffered a major cholera outbreak again.

London’s problem was not a new one for humanity. Natural and industrial waste is a fact of life. We start excreting in the womb and, despite all the inconveniences, keep at it for the rest of our lives. And, since at least the Promethean moment when we began to control fire, we’ve been contributing to human-generated emissions through advances intended to make our lives easier and more productive, often with little regard for the costs.

As industrialization led to increased urbanization, the by-products of combined human activity grew to such levels that their effects could not be ignored. The metaphorical heart of the world’s industrial capital, the Thames was also the confluence of the effects of a changing society. “Near the bridges the feculence rolled up in clouds so dense that they were visible at the surface, even in water of this kind,” noted Michael Faraday, a British scientist now famous for his contributions to electromagnetism.

Relief came from bringing together the threads needed to tackle this type of problem—studying the phenomenon, assigning responsibility, and committing to solutions big enough to match the scope of what was being faced. It started with the recognition that direct and indirect human waste was itself an industrial-scale problem. By the 1870s, governmental authorities were starting to give a more specific meaning to an older word: they started calling the various types of waste “pollution.”

A problem without a name cannot command attention, understanding, or resources—three essential ingredients of change. Recognizing that at some threshold industrial waste ceases to be an individual problem and becomes a social problem—a problem we can name—has been crucial to our ability to manage it. From the Clean Air Act to the Paris Accords, we have debated the environmental costs of progress with participants from all corners of society: the companies that produce energy or industrial products; the scientists who study our environment and our behaviors; the officials we elect to represent us; and groups of concerned citizens who want to take a stand. The outcome of this debate is not predetermined. Sometimes, we take steps to restrain industrial externalities. Other times, we unleash them in the name of some other good.

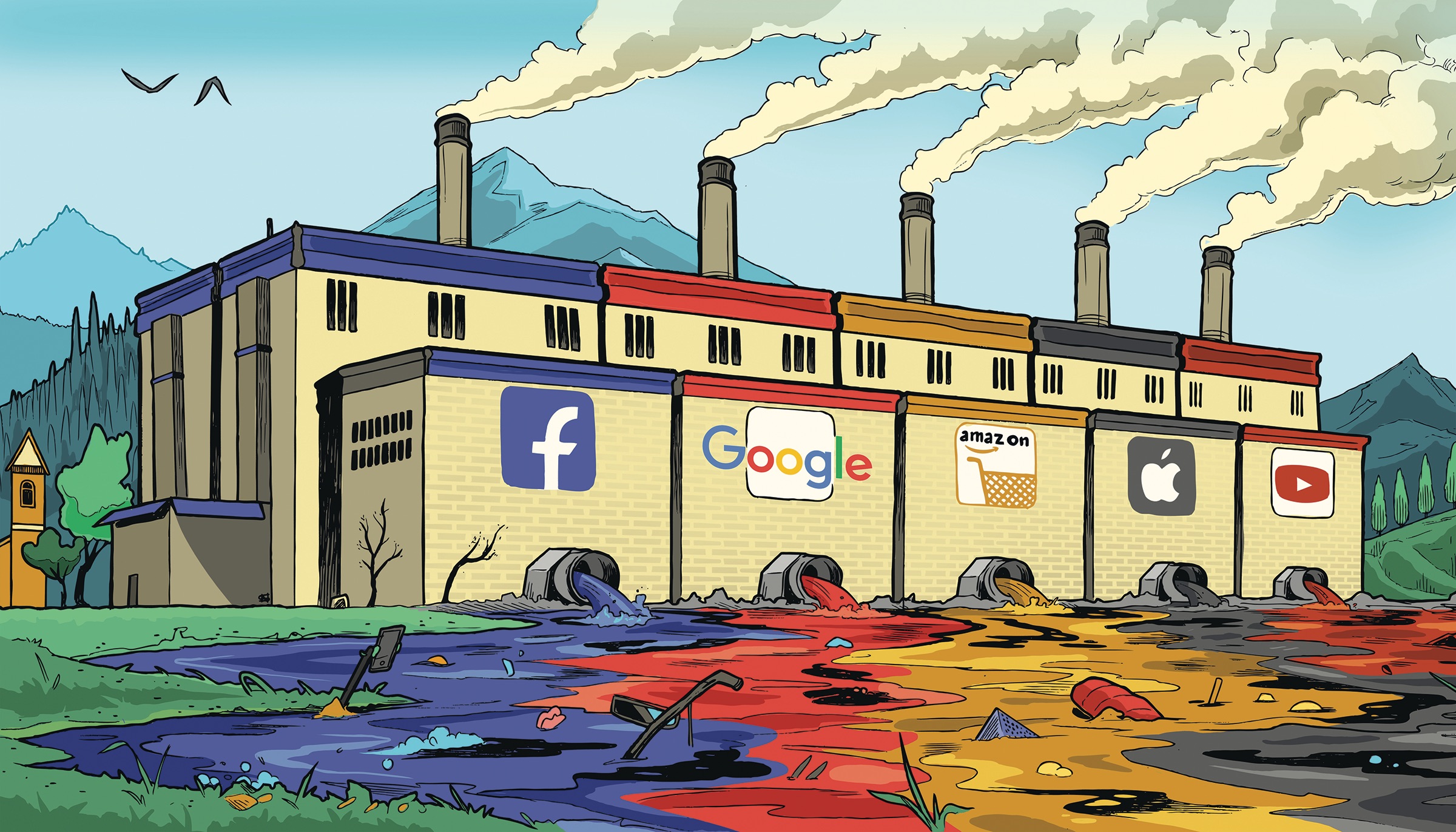

Now, we are confronting new and alarming by-products of progress, and the stakes for our planet may be just as high as they were during the Industrial Revolution. If the steam engine and blast furnace heralded our movement into the industrial age, computers and smartphones now signal our entry into the next age, one defined not by physical production but by the ease of services provided through the commercial internet. In this new age, names like Zuckerberg, Bezos, Brin, and Page are our new Carnegies, Rockefellers, and Fords.

As always, progress has not been without a price. Like the factories of 200 years ago, digital advances have given rise to a pollution that is reducing the quality of our lives and the strength of our democracy. We manage what we choose to measure. It is time to name and measure not only the progress the information revolution has brought, but also the harm that has come with it. Until we do, we will never know which costs are worth bearing.

We seem to be caught in an almost daily reckoning with the role of the internet in our society. This past March, Facebook lost $134 billion in market value over a matter of weeks after a scandal involving the misuse of user data by the political consulting firm Cambridge Analytica. In August, several social media companies banned InfoWars, the conspiracy-mongering platform of right-wing commentator Alex Jones. Many applauded this decision, while others alleged a left-wing conspiracy afoot in the C-suites of largely California-based technology companies.

Perhaps the most enduring political news story over the past two years has been whether Donald Trump and his campaign colluded with Russian efforts to influence the 2016 U.S. presidential election—efforts that almost exclusively targeted vulnerabilities in digital information services. Twitter, a website that started as a way to let friends know what you were up to, might now be used to help determine intent in a presidential obstruction of justice investigation.

And that’s just in the realm of American politics. Facebook banned senior Myanmar military officials from the social network after a United Nations report accusing the regime of genocide against the Muslim Rohingya minority cited the platform’s role in fanning the flames of violence. The spread of hoaxes and false kidnapping allegations on Facebook and messaging application WhatsApp (which is owned by Facebook) was linked to ethnic violence, including lynchings, in India and Sri Lanka.

Concerns about the potential addictiveness of on-demand, mobile technology have grown acute. A group of institutional investors pressured Apple to do something about the problem, pointing to studies showing technology’s negative impact on students’ ability to focus, as well as links between technology use and mental health issues. The Chinese government announced plans to control use of video games by children due to a rise in levels of nearsightedness. Former Facebook executive Chamath Palihapitiya described the mechanisms the company used to hold users’ attention as “short-term, dopamine-driven feedback loops we’ve created [that] are destroying how society works,” telling an audience at the Stanford Graduate School of Business that his own children “aren’t allowed to use that shit.”

The feculence has become so dense that it is visible—and this is only what has floated to the top.

For all the good the internet has produced, we are now grappling with effects of digital pollution that have become so potentially large that they implicate our collective well-being. We have moved beyond the point at which our anxieties about online services stem from individuals seeking to do harm—committing crimes, stashing child pornography, recruiting terrorists. We are now face-to-face with a system that is embedded in every structure of our lives and institutions, and that is itself shaping our society in ways that deeply impact our basic values.

We are right to be concerned. Increased anxiety and fear, polarization, fragmentation of a shared context, and loss of trust are some of the most apparent impacts of digital pollution. Potential degradation of intellectual and emotional capacities, such as critical thinking, personal authority, and emotional well-being, is harder to detect. We don’t fully understand the cause and effect of digital toxins. The amplification of the most odious beliefs in social media posts, the dissemination of inaccurate information in an instant, the anonymization of our public discourse, and the vulnerabilities that enable foreign governments to interfere in our elections are just some of the many phenomena that have accumulated to the point that we now have real angst about the future of democratic society.

In one sense, the new technology giants largely shaping our online world aren’t doing anything new. Amazon sells goods directly to consumers and uses consumer data to drive value and sales; Sears Roebuck delivered goods to homes, and Target was once vilified for using data on customer behavior to sell maternity products to women who had yet to announce their pregnancies. Google and Facebook grab your attention with information you want or need, and in exchange put advertisements in front of you; newspapers started the same practice in the nineteenth century and have continued to do it into the twenty-first, even if, thanks in part to Google and Facebook, it’s no longer as lucrative.

But there are fundamental and far-reaching differences. The instantaneity and connectivity of the internet allow new digital pollution to flow in unprecedented ways. This can be understood through three ideas: scope, scale, and complexity.

The scope of our digital world is wider and deeper than we tend to recognize.

It is wider because it touches every aspect of human experience, reducing them all to a single small screen that anticipates what we want or “should” want. After the widespread adoption of social media and smartphones, the internet evolved from a tool that helped us do certain things to the primary surface for our very existence. Data flows into our smart TV, our smart fridge, and the location and voice assistants in our phones, cars, and gadgets, and comes back out in the form of services, reminders, and notifications that shape what we do and how we behave.

It is deeper because the influence of these digital services goes all the way down, penetrating our mind and body, our core chemical and biological selves. Evidence is mounting that the 150 times a day we check our phones could be profoundly influencing our behaviors and trading on our psychological reward systems in ways more pervasive than any past medium. James Williams, a ten-year Google employee who worked on advertising and then left to pursue a career in academia, has been sounding the alarm for years. “When, exactly, does a ‘nudge’ become a ‘push’?” he asked five years ago. “When we call these types of technology ‘persuasive,’ we’re implying that they shouldn’t cross the line into being coercive or manipulative. But it’s hard to say where that line is.”

Madison Avenue had polls and focus groups. But they could not have imagined what artificial intelligence systems now do. Predictive systems curate and filter. They interpret our innermost selves and micro-target content we will like in order to advance the agendas of marketers, politicians, and bad actors. And with every click (or just time spent looking at something), these tools get immediate feedback and more insights, including the Holy Grail in advertising: determining cause and effect between ads and human behavior. The ability to gather data, target, test, and endlessly loop is every marketer’s dream—brought to life in Silicon Valley office parks. And the more we depend on technology, the more it changes us.

The scope of the internet’s influence on us comes with a problem of scale. The instantaneity with which the internet connects most of the globe, combined with the kind of open and participatory structure that the “founders” of the internet sought and valorized, has created a flow of information and interaction that we may not be able to manage or control in a safe way.

A key driver of this scale is how easy and cheap it is to create and upload content, or to market services or ideas. Internet-enabled services strive to drain all friction out of every transaction. Anyone can now rent their apartment, sell their junk, post an article or idea—or just amplify a sentiment by hitting “like.” The lowering of barriers has, in turn, incentivized how we behave on the internet—in both good and bad ways. The low cost of production has allowed more free expression than ever before, sparked new means of providing valued services, and made it easier to forge virtuous connections across the globe. It also makes it easier to troll or pass along false information to thousands of others. It has made us vulnerable to manipulation by people or governments with malevolent intent.

The sheer volume of connections and content is overwhelming. Facebook has more than two billion active users each month. Google executes three and a half billion searches per day. YouTube streams over one billion hours of video per day. These numbers challenge basic human comprehension. As one Facebook official said in prepared testimony to Congress this year, “People share billions of pictures, stories, and videos on Facebook daily. Being at the forefront of such a high volume of content means that we are also at the forefront of new and challenging legal and policy questions.”

Translation: We’re not sure what to do either. And, instead of confronting the ethical questions at stake, the corporate response is often to define incremental policies based on what technology can do. Rather than considering actual human needs, people and society evolve toward what digital technology will support.

The third challenge is that the scope and scale of these effects rely on increasingly complex algorithmic and artificial intelligence systems, limiting our ability to exercise any human management. When Henry Ford’s assembly line didn’t work, a floor manager could investigate the problem and identify the source of human or mechanical error. Once these systems became automated, the machines could be subjected to testing and diagnostics and taken apart if something went wrong. After digitization, we still had a good sense of what computer code would produce and could analyze the code line by line to find errors or other vulnerabilities.

Large-scale machine-learning systems cannot be audited in this way. They use information to learn how to do things. Like a human brain, they change as they learn. When they go wrong, artificial intelligence systems cannot be seen from a God’s-eye view that tells us what happened. Nor can we predict exactly what they will do under unknown circumstances. Because they evolve based on the data they take in, they have the potential to behave in unexpected ways.

Taken together, these three kinds of change—the scope of intertwining digital and non-digital experience, the scale and frequency leading to unprecedented global reach, and the complexity of the machines—have resulted in impacts at least as profound as the transition from agricultural to industrial society, over a much shorter period of time. And the very elements that have made the internet an incredible force for good also come together to create new problems. The shift is so fundamental that we do not really understand the impacts with any clarity or consensus. What do we call hate speech when it is multiplied by tens of thousands of human and nonhuman users for concentrated effect? What do we call redlining when it is being employed implicitly by a machine assigning thousands of credit ratings per second in ways the machine’s creator can’t quite track? What do we call the deterioration of our intellectual or emotional capacities that results from checking our phones too often?

We need a common understanding, not just of the benefits of technology, but also of its costs—to our society and ourselves.

Human society now faces a critical choice: Will we treat the effects of digital technology and digital experience as something to be managed collectively? Right now, the answer being provided by those with the greatest concentration of power is no.

The major internet companies treat many of these decisions as theirs, even as CEOs insist that they make no meaningful decisions at all. Jack Dorsey warned against allowing Twitter to become a forum “constructed by our [Twitter employees’] personal views.” Mark Zuckerberg, in reference to various conspiracy theories, including Holocaust denialism, stated, “I don’t believe that our platform should take that down because I think there are things that different people get wrong. I don’t think that they’re intentionally getting it wrong.”

These are just the explicit controversies, and the common refrain of “We are just a platform for our users” is a decision by default. There can be no illusions here: corporate executives are making critical societal choices. Every major internet company has some form of “community standards” about acceptable practices and content; these standards are expressions of their own values. The problem is that, given their pervasive role, these companies’ values come to govern all of our lives without our input or consent.

Commercial forces are taking basic questions out of our hands. We go along through our acceptance of a kind of technological determinism: the technology simply marches forward toward less friction, greater ubiquity, more convenience. This is evident, for example, when leaders in tech talk about the volume of content. It is treated as inevitable that there must be billions of posts, billions of pictures, billions of videos. It is evident, too, when these same leaders talk to institutional investors in quarterly earnings calls. The focus is on business: more users, more engagement, and greater activity. Stagnant growth is punished in the stock price.

Commercial pressures have impacted how the companies providing services on the internet have evolved. Nicole Wong, a former lawyer for Google (and later a White House official) recently reflected during a podcast interview on how Google’s search priorities changed over time. In the early days, she said, it was about getting people all the right information quickly. “And then in the mid-2000s, when social networks and behavioral advertising came into play, there was this change in the principles,” she continued. After the rise of social media, Google became more focused on “personalization, engagement . . . what keeps you here, which today we now know very clearly: It’s the most outrageous thing you can find.”

The drive for profits and market dominance is instilled in artificial intelligence systems that aren’t wired to ask why. But we aren’t machines; we can ask why. We must confront how these technologies work, and evaluate the consequences and costs for us and other parts of our society. We can question whether the companies’ “solutions”—like increased staffing and technology for content moderation—are good enough, or if they are the digital equivalent of “clean coal.” As the services become less and less separable from the rest of our lives, their effects become ever more pressing social problems. Once London’s industrial effluvia began making tens of thousands fall ill, it became a problem that society shared in common and in which all had a stake. How much digital pollution will we endure before we take action?

We tend to think of pollution as something that needs to be eradicated. It’s not. By almost every measure, our ability to tolerate some pollution has improved society. Population, wealth, infant mortality, life span, and morbidity have all dramatically trended in the right direction since the industrial revolution. Pollution is a by-product of systems that are intended to produce a collective benefit. That is why the study of industrial pollution itself is not a judgment on what actions are overall good or bad. Rather, it is a mechanism for understanding effects that are large enough to influence us at a level that dictates we respond collectively.

We must now stake a collective claim in controlling digital pollution. What we face is not the good or bad decision of any one individual or even one company. It is not just about making economic decisions. It is about dispassionately analyzing the economic, cultural, and health impacts on society and then passionately debating the values that should guide our choices—as companies, as individual employees, as consumers, as citizens, and through our leaders and elected representatives.

Hate speech and trolling, the proliferation of misinformation, digital addiction—these are not the unstoppable consequences of technology. A society can decide at what level it will tolerate such problems in exchange for the benefits, and what it is willing to give up in corporate profits or convenience to prevent social harm.

We have a model for this urgent discussion. Industrial pollution is studied and understood through descriptive sciences that name and measure the harm. Atmospheric and environmental scientists research how industrial by-products change the air and water. Ecologists measure the impact of industrial processes on plant and animal species. Environmental economists create models that help us understand the trade-offs between a rule limiting vehicle emissions and economic growth.

We require a similar understanding of digital phenomena—their breadth, their impact, and the mechanisms that influence them. What are the various digital pollutants, and at what level are they dangerous? As with environmental sciences, we must take an interdisciplinary approach, drawing not just from engineering and design, law, economics, and political science but also from fields with a deep understanding of our humanity, including sociology, anthropology, psychology, and philosophy.

To be fair, digital pollution is more complicated than industrial pollution. Industrial pollution is the by-product of a value-producing process, not the product itself. On the internet, value and harm are often one and the same. It is the convenience of instantaneous communication that forces us to constantly check our phones out of worry that we might miss a message or notification. It is the way the internet allows more expression than at any point in human history that amplifies hate speech, harassment, and misinformation. And it is the helpful personalization of services that demands the constant collecting and digesting of personal information. The complex task of identifying where we might sacrifice some individual value to prevent collective harm will be crucial to curbing digital pollution. Science and data inform our decisions, but our collective priorities should ultimately determine what we do and how we do it.

The question we face in the digital age is not how to have it all, but how to maintain valuable activity at a societal price on which we can agree. Just as we have made laws about tolerable levels of waste and pollution, we can make rules, establish norms, and set expectations for technology.

Perhaps the online world will be less instantaneous, convenient, and entertaining. There could be fewer cheap services. We might begin to add friction to some transactions rather than relentlessly subtracting it. But these constraints would not destroy innovation. They would channel it, driving creativity in more socially desirable directions. Properly managing the waste of millions of Londoners took a lot more work than dumping it in the Thames. It was worth it.

Judy Estrin and Sam Gill

Judy Estrin is an internet pioneer, business executive, technology entrepreneur, the CEO of JLabs, and the author of Closing the Innovation Gap. Sam Gill is a vice president at the John S. and James L. Knight Foundation.

Digital Pollution: What is it?

Digital pollution includes all sources of environmental pollution produced by digital tools. It is divided into two parts: the first is related to the manufacture of any digital tool, and the second to the functioning of the Internet.

Increasingly, the internet and the digital sector are being singled out for their environmental impact. Today, some people even claim that a person who is connected is the worst polluter. Indeed, the digital sector is responsible for significant greenhouse gas emissions, as well as various other forms of pollution and resource consumption.

At the same time, digital uses also mean better information sharing, instant communication and improved exchanges. This means less waste of paper and time, less travel, and more sharing and collaboration.

So how can we optimise our daily use of digital technology? How can we reduce digital pollution and its impact on the environment?

The environmental impact of digital technology is often used as an argument to discredit the ecological commitment of anyone who dares to have a Facebook account or, even worse, a smartphone. We will see, however, that digital players often actively promote the creation of green energy and that there are many effective solutions to reduce digital pollution.

Digital pollution in numbers

Digital technology contributes significantly to humanity's environmental impact. According to a study carried out in 2019 by Frédéric Bordage, a French digital expert, it represents nearly 3.8% of global greenhouse gas emissions. That is the equivalent of about 116 million round-the-world car journeys!

Equipment, and in particular its production, is the main source of pollution linked to digital technology. In 2019, the ranking of impact sources (in decreasing order of importance) was as follows:

- Equipment manufacturing;

- Electricity consumption of the equipment;

- Network power consumption;

- Data centres' power consumption;

- Manufacture of network equipment;

- Manufacture of equipment hosted by IT centres (servers, etc.).

According to the Shift Project report, also produced in 2019, the power consumption of digital technology is increasing by 9% per year. Use accounts for up to 55% of this consumption, against 45% for the production of equipment.
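To get a feel for what 9% annual growth means, here is a minimal Python sketch of the compound-growth arithmetic. It uses only the figures quoted above (9% per year, and the 55%/45% split between use and production); the function names are illustrative, and the calculation assumes the growth rate stays constant, which the report does not promise.

```python
import math

# Figures quoted in the text above (Shift Project, 2019).
ANNUAL_GROWTH = 0.09      # 9% growth per year
USE_SHARE = 0.55          # share of consumption attributed to use
PRODUCTION_SHARE = 0.45   # share attributed to equipment production


def doubling_time_years(rate: float) -> float:
    """Years needed for a quantity growing at `rate` per year to double."""
    return math.log(2) / math.log(1 + rate)


def growth_factor(rate: float, years: int) -> float:
    """Multiplier applied to the starting value after `years` of compound growth."""
    return (1 + rate) ** years


years_to_double = doubling_time_years(ANNUAL_GROWTH)
factor_after_10 = growth_factor(ANNUAL_GROWTH, 10)

print(f"Doubling time at 9% per year: {years_to_double:.1f} years")
print(f"Growth factor after 10 years: x{factor_after_10:.2f}")
```

Under that constant-rate assumption, consumption doubles in roughly eight years and more than doubles over a decade.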

However, it is important to note that the emissions of digital technology are about 2.5 times lower than the CO2 emissions of road transport, not counting vehicle or infrastructure production. Its footprint is also about 3 times lower than the carbon impact of deforestation, and almost 2 times lower than that of the energy consumption of commercial buildings.
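As a rough cross-check, the ratios above can be combined with the 3.8% figure quoted earlier to see what shares they imply. This is only a sketch: it assumes the ratios and the 3.8% figure are measured on a comparable basis, which the article does not confirm, and it leaves out commercial buildings because that comparison is expressed in energy rather than emissions.

```python
# Share of global greenhouse gas emissions attributed to digital technology
# in the text above (Bordage, 2019), in percent.
DIGITAL_SHARE_PCT = 3.8

# "Digital is N times lower than X", as stated in the text.
# Treating these as ratios on the same basis is an assumption.
TIMES_LARGER_THAN_DIGITAL = {
    "road transport": 2.5,
    "deforestation": 3.0,
}

# Implied share for each sector = digital share x ratio.
implied_share_pct = {
    sector: round(DIGITAL_SHARE_PCT * ratio, 1)
    for sector, ratio in TIMES_LARGER_THAN_DIGITAL.items()
}

for sector, share in implied_share_pct.items():
    print(f"{sector}: about {share}% (implied, not an independent figure)")
```

These are implied values only, useful for judging orders of magnitude rather than as data in their own right.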

What are the main causes of digital pollution?

As these figures highlight, the main causes of digital pollution are both the manufacture of the equipment and the electricity consumption of the equipment and the network.

In particular, they include the use of resources and the extraction and processing of raw materials for the manufacture of electronic equipment, as well as the methods used to produce electricity.

In this regard, it is important to note that electricity is the least polluting energy at the point of use, since it emits neither fine particles nor CO2. However, this only holds overall if it is produced from renewable energy sources. Unfortunately, this is still far from being the case today, since the world's electricity production is still mainly based on fossil fuels.

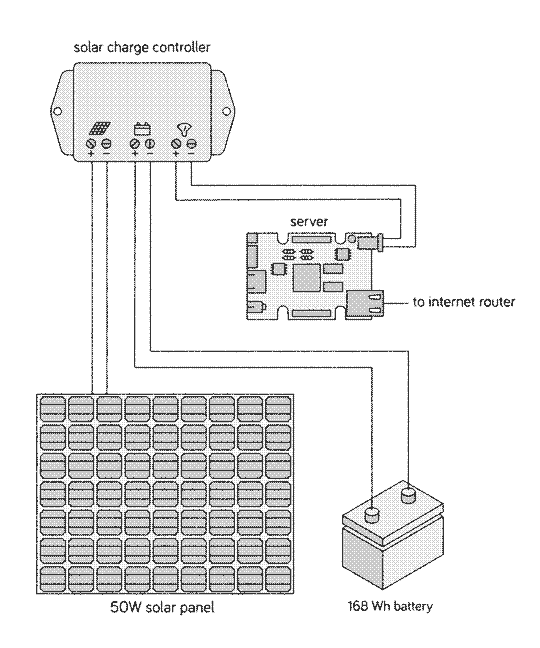

Why choose green web hosting?

Web hosting is known to be very energy-intensive and not very ecological. Indeed, as we have seen previously, the electricity consumption of the network is among the main causes of digital pollution.

Typically, data centers have several thousand high-powered computers and servers, most of them using CPUs and hard disks all the time. This means that they generate so much heat that the supplier will usually need an air-conditioning system to keep the temperature where they are installed at a tolerable level.

The players in the web hosting industry have understood the importance of going green. Whether for economic reasons, for marketing impact or driven by real eco-responsible motivations, many of them are offering green web hosting solutions. Their commitments range from offsetting their carbon emissions to promising to be powered 100% by renewable energy.

Others go further, such as Infomaniak, a pioneer in ecological web hosting in Europe, which has introduced an environmental charter containing 20 commitments, such as:

- 100% renewable energy

- outside air cooling system, without air conditioning

- low-energy servers

- waste recycling

Here is a comparative table of different basic eco-friendly web hosting offers:

How can we reduce the impact of digital technology on the environment?

Green solutions are numerous if you are a blogger, or if you simply want to create an eco-responsible website for yourself or for your company. But what about reducing the carbon footprint of digital technology on a daily basis? As users, we can also act to minimize these impacts.

Aim for equipment longevity

Digital equipment has environmental consequences throughout its life cycle. The production of its components requires a lot of energy, chemical treatments as well as rare metals. Always remember that the best waste is the waste you don't produce! So, before you buy, always ask yourself if the purchase is really necessary. And is the electronic device you want to dispose of still in working order? If so, consider reselling it. There is a growing market today for reconditioned appliances. On the other hand, if it no longer works, always remember to recycle your electronic waste properly.

Do emails pollute?

The impact of sending an email depends on the weight of the attachments, its storage time on a server, and the number of recipients. To lighten your emails:

- Target recipients, clean up your mailing lists and delete attachments from a message you reply to

- Optimize the size of the files you transmit

- Consider using drop-off sites rather than sending as an attachment

- Regularly clean your mailbox and unsubscribe from mailing lists that do not interest you.
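The three factors named above (attachment weight, storage time, number of recipients) can be combined into a simple relative comparison. The Python sketch below is a hypothetical model, not an emissions calculator: the `Email` class, its fields, and the example values are all illustrative, and it assumes each recipient stores their own full copy of the message. It deliberately reports data volumes rather than CO2, since the text gives no conversion factor.

```python
from dataclasses import dataclass


@dataclass
class Email:
    """Hypothetical description of one sent email (all fields illustrative)."""
    body_kb: float         # size of the message text
    attachments_kb: float  # total size of the attachments
    recipients: int        # number of people who receive a copy
    storage_days: int      # how long each copy stays on a server

    @property
    def size_kb(self) -> float:
        return self.body_kb + self.attachments_kb

    def transferred_kb(self) -> float:
        """Data sent over the network: one copy per recipient (assumption)."""
        return self.size_kb * self.recipients

    def stored_kb_days(self) -> float:
        """Storage load: size x copies x days kept (assumption)."""
        return self.size_kb * self.recipients * self.storage_days


# Before: a 5 MB attachment sent to 10 people and kept for a year.
before = Email(body_kb=20, attachments_kb=5000, recipients=10, storage_days=365)
# After: a link instead of the attachment, 3 targeted recipients, deleted after 30 days.
after = Email(body_kb=20, attachments_kb=0, recipients=3, storage_days=30)

print("Transferred (KB):", before.transferred_kb(), "->", after.transferred_kb())
print("Stored (KB-days):", before.stored_kb_days(), "->", after.stored_kb_days())
```

Because the factors multiply, trimming each one a little reduces the total far more than trimming any single one.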

Does data storage pollute?

Data storage is increasingly being done on mail servers and on the Cloud. To optimize your storage of documents, videos, photos or music:

- Only keep what is useful

- Store and use as much data as possible locally

- Only store what you need on the Cloud

Lastly, note that online videos account for 60% of the global data flow and are responsible for nearly 1% of global CO2 emissions. So, to reduce their impact, consider disabling automatic playback in application settings. Give preference to downloaded music or audio streaming platforms rather than platforms that play music videos.

All You Need to Know about Digital Pollution

Discover the hidden side of our digital world! 🌐💻 Ever wondered where all that ‘cloud’ data lives? Not in the sky, but energy-hungry data centers. Digital pollution, from e-waste to carbon emissions, is real. Learn how individuals and organizations can fight it. 🌍🌳

Imagine this: You’re scrolling through your phone, and you get a notification that your cloud storage is almost full. You sigh and think about all the pictures, documents, and videos you’ve amassed over the years. Then, you go ahead and purchase more storage, just like that.

Sounds familiar, doesn’t it? But have you ever paused to wonder where all this ‘cloud’ data actually resides? It’s not floating in the sky but stored in colossal data centres that consume tremendous amounts of electricity, contributing to something far less talked about—digital pollution.

We live in a digital age, a world increasingly dependent on technology for everything from communication and entertainment to healthcare and transportation. While the digital revolution has offered unparalleled conveniences and advancements, it comes with its own set of environmental challenges, one of which is digital pollution.

Understanding digital pollution

Digital pollution is an umbrella term that encapsulates the environmental impact of the digital world. It manifests in various ways, such as electronic waste (e-waste), excess data storage, the energy consumption of digital platforms, and the carbon footprint of the entire digital industry.

So, what causes digital pollution?

Electronic waste (e-waste)

Obsolete gadgets and hardware components often end up in landfills, contributing to toxic waste.

Excess data storage

Data centres housing our emails, photos, and digital memories consume immense amounts of electricity.

Energy consumption of digital platforms

Every time you stream a video or engage in online activities, servers somewhere consume electricity to keep that service running.

Carbon footprint of the digital industry

The production, operation, and disposal of digital technology contribute to global carbon emissions.

The impacts of digital pollution

Digital pollution has some astounding impacts. For example, data centres alone are estimated to consume about 1,000 kWh per square metre, roughly ten times the power consumption of a typical American home. Producing digital technology also puts pressure on the environment, as it often involves mining rare metals and depleting Earth’s limited resources.

Digital pollution also has enormous economic and societal implications. E-waste management is not just an environmental issue but also a significant financial burden. Exposure to electronic waste can lead to severe health issues, especially in developing countries where e-waste is often dumped.

How organisations can combat digital pollution

Organisations hold a significant share of responsibility for mitigating digital pollution. Fortunately, there are multiple avenues through which organisations can take meaningful action to reduce their digital environmental footprint. By integrating sustainability into their core business practices, companies can play a vital role in combating the multi-faceted problem of digital pollution.

Green IT practices

Adopting green IT practices is one of the most immediate ways an organisation can reduce its digital pollution. This involves optimising computer systems for energy efficiency, using software that requires less power, and even incorporating AI algorithms that can manage energy use intelligently. By adhering to green IT standards and certifications, companies not only contribute to sustainability but may also see reduced operational costs over time.

Sustainable server management and cloud storage

The servers that store digital data are among the largest contributors to energy consumption in the tech industry. Organisations can make a significant impact by choosing sustainable server management solutions. This could involve migrating to cloud services that are powered by renewable energy or using hosting services that are carbon neutral. Additionally, practices like server virtualization can help companies utilise their existing hardware more efficiently, reducing the need for new equipment and thus mitigating both e-waste and energy consumption.

Recycling and proper disposal of electronic equipment

One person’s trash is another’s treasure, especially in the world of electronics. Companies can take steps to ensure that old or obsolete electronic equipment is either recycled or disposed of in an environmentally friendly manner. This could involve donating old computers to schools or non-profits, using certified e-waste recycling services, or partnering with organisations dedicated to refurbishing and reusing electronic components. Proper disposal not only prevents hazardous waste from entering landfills but also helps recover valuable materials that can be reused.

Promoting a culture of sustainability among employees

It’s not just the technology or systems in place but also the people using them that can make a difference. Organisations can create internal awareness campaigns, workshops, and training programs to educate employees about the importance of digital sustainability. Simple steps, like setting printers to double-sided printing by default or encouraging employees to power down their computers when not in use, can add up to significant energy savings. Incentive programs can also be developed to reward departments or teams that achieve specific sustainability milestones.

Reducing unnecessary digital clutter

In today’s data-driven landscape, it’s easy to accumulate digital clutter like unused files, redundant emails, and outdated databases. Not only does this take up server space, but it also requires energy to maintain. Organisations should establish regular protocols for digital clean-ups, ensuring that only necessary data is stored. Efficient coding practices can also reduce the amount of computational power required to perform tasks, contributing to energy savings.

Investing in sustainable tech solutions

Lastly, future-proofing against digital pollution involves strategic investments in sustainable technologies. This could be anything from procuring energy-efficient hardware to investing in software that enables remote work, thus reducing the need for physical infrastructure and daily commuting. Organisations can also look into funding or partnering with start-ups and initiatives that are focused on creating sustainable technology solutions.

By taking these steps, organisations don’t just do good; they also benefit from cost savings, improved brand image, and increased employee engagement. Combating digital pollution is not just an ethical imperative but also a smart business strategy for long-term resilience and success.

How individuals can combat digital pollution

Individuals can also contribute to reducing digital pollution. We can start with conscious consumption and opt for durable, upgradable, and eco-friendly electronic products when we absolutely need to purchase something. We can clean up unnecessary files in our cloud storage. We can also raise awareness about digital pollution within the community. Every bit of knowledge shared contributes to a more sustainable future.

Tree planting to offset digital pollution

So how does tree-planting tie into all this? Trees are nature’s best carbon sinks, absorbing carbon dioxide and releasing oxygen. Planting trees is a direct way to offset the carbon emissions from digital activities. By supporting tree-planting organisations, you make a tangible contribution to combating digital pollution.

As a tree-planting organisation, we offer various programs designed to offset carbon footprints aimed at both organisations and individuals. Supporting our initiatives is not just about planting trees; it’s about creating a sustainable digital ecosystem for our future.

The final word

Digital pollution is a pressing issue that requires our immediate attention. While the responsibility may seem overwhelming, tackling this problem is a collective task. By taking conscious steps as individuals and organisations, we can significantly mitigate the environmental, economic, and societal impacts of digital pollution. As we strive for a digitally advanced society, let’s not forget to balance technology with sustainability, reminding ourselves that a greener future is possible.



Internet pollution: how can its impact be reduced?

By Paul Collins

Journalist and digital marketing professional

Internet pollution is defined as all digital actions that emit greenhouse gases. This negative externality of new technologies tends to be unknown to consumers. Nevertheless, the digital world has a substantial environmental impact and a large carbon footprint: around 4% of all greenhouse gas emissions.


What is internet pollution and what is its impact?

Digital technology has a significant impact on our carbon footprint and consequences for the environment. Because it appears intangible, digital technology is usually seen as a tool without any direct impact on the environment. However, it is very much tangible and depends on physical infrastructure such as data centres, kilometres of cables and transmission antennas.

There are two types of internet pollution:

- Pollution related to data centres and network infrastructure;

- Pollution related to consumer equipment.

How much CO2 does the internet produce? This sector is currently responsible for around 4% of global greenhouse gas emissions, and the rapid growth in its use suggests that this carbon footprint will double by 2025.

Source: BBC Smart Guide to Climate Change.

Digital pollution of our electronic equipment

Extracting and processing all the natural resources needed to manufacture a digital object, whose total weight is known as its ecological rucksack, generates substantial carbon dioxide (CO2) emissions.

Due to the extraction of raw materials and the manufacturing process in developing countries, the manufacturing phase of an electronic device is what consumes the most energy and emits the most CO2. In fact, developing countries produce their electricity mainly from coal, a natural resource with a substantial environmental impact when mined.

And lastly, the transport phase is added to the balance.

Paradoxically, the fewer materials a device appears to require, the more materials are used to make it. Likewise, the smaller the device, the larger its environmental impact.

In the same way, the manufacturing of sophisticated technological equipment requires certain processes and rare metals such as tantalum and tungsten. However, these minerals are at the centre of armed conflicts, especially in Africa. For this reason, minerals extracted for use in manufacturing digital equipment are known as "conflict minerals".

The negative impact of using the internet

Since the start of the Covid-19 pandemic and the numerous ensuing lockdowns, there has been a sharp increase in the use of video streaming all over the world. Despite widely circulated erroneous estimates of the CO2 emitted by watching 30 minutes of Netflix, the climate impact of streaming video remains relatively modest. According to the International Energy Agency (IEA), watching an hour of video streaming on Netflix entails emissions of 36 g of CO2 (keeping in mind that a trip by airplane from London to New York is the equivalent of 1.3 tonnes of CO2).

The low carbon footprint of streaming video content is explained by the rapid improvement in the energy efficiency of data centres, networks and devices. But slowing efficiency gains, rebound effects, and new demand from emerging technologies, including artificial intelligence (AI) and blockchain, are raising growing concern about the sector's overall environmental impact in the coming years.
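To put the two figures quoted above side by side, here is a minimal sketch of the arithmetic. It uses only the numbers given in the text (36 g of CO2 per hour of streaming, 1.3 tonnes for a London to New York flight); the function and constant names are illustrative and not taken from the IEA.

```python
# Figures as quoted in the text; names are illustrative only.
STREAMING_G_CO2_PER_HOUR = 36   # grams of CO2 per hour of Netflix streaming (IEA figure quoted above)
FLIGHT_TONNES_CO2 = 1.3         # London to New York flight, as quoted above
GRAMS_PER_TONNE = 1_000_000


def streaming_hours_equivalent(flight_tonnes: float, grams_per_hour: float) -> float:
    """Return the hours of streaming whose emissions equal one flight."""
    return flight_tonnes * GRAMS_PER_TONNE / grams_per_hour


hours = streaming_hours_equivalent(FLIGHT_TONNES_CO2, STREAMING_G_CO2_PER_HOUR)
years_non_stop = hours / 24 / 365

print(f"{hours:,.0f} hours of streaming, about {years_non_stop:.1f} years non-stop")
```

On these figures, one transatlantic flight corresponds to roughly 36,000 hours of streaming, or about four years of continuous viewing.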

Because they are data factories housing thousands of IT servers, data centres are usually considered energy devourers.

How much video and streaming do we watch? According to data published by the media watchdog Ofcom, Britons spent around a third of their waking hours watching TV and online video content in 2020, an average of 5 hours and 40 minutes a day.

What consumes the most energy in a data centre?

Data centres are storage centres for digital information. Network infrastructure and data centres are responsible for half of all digital pollution. Every search-engine query relies on both the network and data centres.

At a data centre, air conditioning is the most expensive element in terms of energy. That is one of the reasons why Facebook has moved servers to northern countries like Sweden or Canada, close to various hydroelectric plants.

In 2021, the Danish site Data Center Map counted more than 4,700 data centres in 126 countries and over 300 transoceanic cables which extend a network of over one million kilometres.

Since 2010, Greenpeace has encouraged web companies to power their data centres with renewable energy. Facebook and Google have joined this pledge. Netflix, Spotify and Twitter scored worst in the study “Clicking Clean”, compiled by Greenpeace and published in 2017.


5 tips to reduce digital pollution in our daily life

Today, digital pollution is equivalent to commercial air travel pollution. How can we act against digital pollution? What are the good habits to adopt to limit our digital footprint and promote the sustainable development of the digital ecosystem?

Preserve your equipment for longer

Buy second-hand products which tend to be cheaper and less polluting. Choose low energy consumption products.

It is crucial to avoid replacing digital equipment unnecessarily and to favour repair over replacement in case of damage.

Limit energy consumption of electrical appliances

Don’t leave your devices on all the time and turn off routers as frequently as possible. On your telephone, deactivate the GPS, Wi-Fi and Bluetooth functions when not in use. You can also put it in airplane mode.

Watch videos in an eco-responsible way

To limit digital pollution from video streaming, opt for downloads over streaming and avoid using 4G to play videos. You can also block automatic playback of videos on social media and adjust the quality of videos on YouTube. Watching low-definition video saves bandwidth, so lower your resolution, to as little as 144p, whenever possible.

Empty your mailbox

Pollution linked to e-mail is known as "latent pollution". It stems from the servers needed to store messages: each e-mail is saved in three copies, and therefore on at least three different servers, for security reasons.

To minimise the impact of your mailbox, regularly sort your e-mails and delete those you no longer need, to avoid unnecessary storage in data centres. Furthermore, avoid sending attachments to many recipients and cancel subscriptions to any newsletters you no longer read.

Internet pollution and emails: research by Cleanfox showed that if all Internet users in the UK deleted the useless emails they received in 2020, it would save more than 2 million tonnes of CO2 emissions. To put that into perspective, that's equivalent to the emissions of 1.3 million polluting cars.
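The car comparison above implies a per-car emissions figure, which can be recovered with a short calculation. This is a sketch using only the two numbers quoted from Cleanfox; the source does not state the period the car figure covers (a year is a plausible assumption), and the names below are illustrative.

```python
# Figures as quoted in the text; the source gives "more than 2 million tonnes",
# so the per-car result is a lower bound.
SAVED_TONNES_CO2 = 2_000_000   # CO2 saved if UK users deleted useless 2020 emails
EQUIVALENT_CARS = 1_300_000    # number of cars in the comparison


def implied_tonnes_per_car(total_tonnes: float, cars: int) -> float:
    """Return the CO2 per car implied by the comparison."""
    return total_tonnes / cars


per_car = implied_tonnes_per_car(SAVED_TONNES_CO2, EQUIVALENT_CARS)
print(f"Implied emissions: about {per_car:.2f} tonnes of CO2 per car")
```

The comparison therefore assumes roughly one and a half tonnes of CO2 per car.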

Download the "Carbonalyser" extension

The French association The Shift Project has developed the free extension Carbonalyser, which lets you measure your digital pollution when surfing the web. The extension translates your browsing into electricity consumption and CO2 emissions.

Carbonalyser takes into account the electricity consumption of the device used, the network infrastructure and the data centres involved in transferring the data. With this information, the association aims to inform users about digital pollution.


Science Editor

- A Publication of the Council of Science Editors

Gatherings of an Infovore*: Digital Pollution

Add Another Type of Pollution to the List: Digital

Pollution, as defined in the Merriam-Webster online dictionary, is “the action of polluting especially by environmental contamination with man-made waste” and has been a constant in the world ever since humans began living in groups. Anthropologists have found human waste among the ruins of ancient settlements. The word pollution took over from the term industrial waste in the late 19th century, and the different types of pollution were identified throughout the 20th century with the 3 major types: air, land, and water, joined by noise, light, radioactive, thermal, and plastic pollution. Now in the 21st century, we are beginning to recognize that advances in technology have brought about a new type of pollution: digital pollution (sometimes also called information pollution or data pollution or e-waste).

The literature describing digital pollution and the ways to measure its impacts is fragmented, both in type of publication and in the currency and depth of verified data: it ranges from blog posts and white papers to magazine and newspaper articles to peer-reviewed books and journals. Presented here is a cross-section of such resources to set you on the path of assessing whether now is the time for your organization to be concerned with the impact of its digital pollution. If interested, I suggest you contact Cambridge University Press. In 2021, the Press, along with Netflix and BT, began working with DIMPACT. DIMPACT is a pioneering initiative launched in 2019 by Carnstone, media companies, and researchers at the University of Bristol to help map and manage carbon impacts of digital information (https://dimpact.org/news).

“Digital is physical. Digital is not green. Digital costs the Earth. Every time I download an email I contribute to global warming. Every time I tweet, do a search, check a webpage, I create pollution. Digital is physical. Those data centers are not in the Cloud. They’re on land in massive physical buildings packed full of computers hungry for energy. It seems invisible. It seems cheap and free. It’s not. Digital costs the Earth.

One of the most difficult challenges with digital is to truly grasp what it is, its form, its impact on the physical world. I want to help give you a feel for digital. I’m going to analyze how many trees would need to be planted to offset a particular digital activity. For example:

- 1.6 billion trees would have to be planted to offset the pollution caused by email spam.

- 1.5 billion trees would need to be planted to deal with annual e-commerce returns in the US alone.

- 231 million trees would need to be planted to deal with the pollution caused as a result of the data US citizens consumed in 2019.

- 16 million trees would need to be planted to offset the pollution caused by the estimated 1.9 trillion yearly searches on Google.” —Excerpt from World Wide Waste by Gerry McGovern

Facing up to digital pollution Martin L. Research Information. July 28, 2021. https://www.researchinformation.info/analysis-opinion/facing-digital-pollution

Carbon impact of video streaming Carbon Trust white paper. 2021. https://prod-drupal-files.storage.googleapis.com/documents/resource/public/Carbon-impact-of-video-streaming.pdf

The environmental impact of digital publishing Monell ME, Carbonell JP. CCCBLAB. May 11, 2021. https://lab.cccb.org/en/the-environmental-impact-of-digital-publishing/ Almost 10% of what we read nowadays is in digital format, which is why we are looking into the digital impact of this area.

9 ways to reduce your digital pollution Beasse S. Plank. April 22, 2021. https://plankdesign.com/en/stories/9-ways-to-reduce-your-digital-pollution/

Data pollution Ben-Shahar O. J Legal Analysis. 2019;11:104–159. https://doi.org/10.1093/jla/laz005

We finally know how bad for the environment your Netflix habit is Bedingfield W. Wired. March 15, 2021. https://www.wired.co.uk/article/netflix-carbon-footprint Streaming platforms finally have a tool to evaluate the size of their carbon footprint. Now they need to take action and go green.

The world is choking on digital pollution Estrin J, Gill S. Washington Monthly. Jan/Feb/March 2019. https://washingtonmonthly.com/magazine/january-february-march-2019/the-world-is-choking-on-digital-pollution/ Society figured out how to manage the waste produced by the Industrial Revolution. We must do the same thing with the Internet today.

IoT and the new digital pollution Curry S. Forbes. February 25, 2019. https://www.forbes.com/sites/samcurry/2019/02/25/iot-and-the-new-digital-pollution/?sh=4f5a45c77fd4

How the world is dealing with its e-waste issue? Demma B. Solar Impulse Foundation. May 14, 2019. https://solarimpulse.com/news/how-the-world-is-dealing-with-its-e-waste-issue

The solution to digital pollution is a global ‘Information Consensus’ Venkatesh HR. Medium.com. March 17, 2019. Think of it as Universal Declaration of Human Rights…for information. https://medium.com/jsk-class-of-2019/the-solution-to-digital-pollution-is-a-global-information-consensus-a37db7a054b1

Causes of digital pollution Digital for the Planet. July 9, 2018. https://medium.com/@digifortheplane/causes-of-digital-pollution-c0054d555377

Powering the digital: from energy ecologies to electronic environmentalism Gabrys J. 2014. http://www.jennifergabrys.net/wp-content/uploads/2014/09/Gabrys_ElecEnviron_MediaEcol.pdf

The alarming rise of ‘digital and content pollution’ Wilms T. Forbes. March 26, 2013. https://www.forbes.com/sites/sap/2013/03/26/the-alarming-rise-of-digital-and-content-pollution/?sh=eb528481fb4c

Power, pollution and the Internet Glanz J. The New York Times. September 22, 2012. https://www.sebis.com/main/en/publications/Power%2C+Pollution+and+the+Internet.pdf

Barbara Meyers Ford has retired after a 45-year career in scholarly communications working with companies, associations/societies, and university presses in the areas of publishing, and research. If interested in connecting, find her at www.linkedin.com/in/barbarameyersford and mention that you are a reader of Science Editor .

* A person who indulges in and desires information gathering and interpretation. The term was introduced in 2006 by neuroscientists Irving Biederman and Edward Vessel.


Our Digital Carbon Footprint: What’s the Environmental Impact of the Online World?

Every single search query, every streamed song or video and every email sent, billions of times over all around the world - it all adds up to an ever-increasing global demand for electricity, and to rising CO2 emissions too. Our increasing reliance on digital tools has an environmental impact that's becoming increasingly hard to ignore.

Author Sarah-Indra Jungblut:

Translation Sarah-Indra Jungblut, 01.30.24

Digital tools and services are an integral part of our lives. It’s hard to imagine a life without smartphones, apps, Wikipedia, online banking, route planners with GPS and having a huge selection of music and movies at your fingertips pretty much everywhere, around the clock. All of these things make our lives so much easier. But it’s not only in day-to-day life that digitalisation has become indispensable; digital technologies are also playing an increasingly important role in agriculture and industry, in the transition to renewable energies and in the future of our cities. At the same time, digitalisation offers new solutions for tackling climate change and protecting the environment. Reporting on them is an important part of what we do here at RESET.

However, just because we can’t physically see or touch the data that we’re sending and receiving all over the globe doesn’t mean it is weightless. It actually carries rather heavy baggage: its energy consumption is constantly growing, the smart devices we use are often produced under exploitative and environmentally harmful conditions and, at the end of their far too short lives, they end up as toxic electronic waste. This poses a very important question: will digitalisation be able to help us on the way to a greener and fairer world, or will our growing reliance on digital tools ultimately prove to be an accelerator for climate change and the destruction of the planet?

Right now, the question is still open. Let’s take a closer look at the main causes of our digital carbon footprint – and also who and what is working to mitigate its climate impact.

How big is the world’s digital carbon footprint?

More than half of the world’s population is now online. According to a report by the digital agency We Are Social, more than four billion people used the Internet in 2019 – with more than one million people coming online for the first time each day. And with online activities such as cloud computing, streaming services and cashless payment systems on the up, the demand for online and digital services is constantly growing.

The non-profit organisation The Shift Project looked at nearly 170 international studies on the environmental impact of digital technologies. According to the experts, their share of global CO2 emissions increased from 2.5 to 3.7 percent between 2013 and 2018. That means that our use of digital technologies now actually causes more CO2 emissions and has a bigger impact on global warming than the entire aviation industry! According to estimates, the aviation industry caused around 2.5 percent (and rising) of emissions.

These figures may vary slightly from study to study, as the energy consumption of digital technologies is difficult to quantify: because too little data is available, because technological advances and changing consumption habits cause them to change rapidly and because they’re highly dependent on certain conditions (for example, the type of power that’s being used). Researchers in a new study criticise the fact that the Shift Project figures were calculated using outdated data. The short study “Climate protection through digital technologies” (Klimaschutz durch digitale Technologien) from the Borderstep Institute compares various studies and comes to the conclusion that the greenhouse gas emissions caused by the production, operation and disposal of digital end devices and infrastructures are between 1.8 and 3.2 percent of global emissions (as of 2020).

Even if it’s hard to work out specific figures, it is clear that our digital world has a huge energy appetite, especially if you include not only the use, but also the production, of our digital devices.

What digital activities are using the most energy?

Jens Gröger, senior researcher at the Öko-Institut, estimates that each search query emits around 1.45 grams of CO2. If we make around 50 search queries per day, this adds up to around 26 kilograms of CO2 per year.

Doesn’t sound like a lot? Not at an individual level. But Google itself, in its 2017 Environmental Report, puts its carbon footprint for 2016 at 2.9 million tons of CO2e and its electrical energy consumption at 6.2 terawatt hours (TWh).
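The per-person search figure is simple arithmetic, and a short sketch makes it easy to try other usage levels. The 1.45 grams per query and the 50 queries per day come from the text above; the function name and the 365-day year are assumptions made for illustration.

```python
GRAMS_CO2_PER_QUERY = 1.45  # Jens Gröger's estimate quoted in the article


def annual_search_emissions_kg(queries_per_day,
                               grams_per_query=GRAMS_CO2_PER_QUERY,
                               days_per_year=365):
    """Return yearly CO2 emissions in kilograms for a given search habit."""
    grams_per_year = queries_per_day * grams_per_query * days_per_year
    return grams_per_year / 1000  # grams -> kilograms


if __name__ == "__main__":
    # 50 queries a day, as in the article's example
    print(round(annual_search_emissions_kg(50), 1))  # 26.5
```

The result is about 26.5 kilograms, which the article rounds to 26.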

But online searches are by no means the core of the problem: one of the biggest causes of the internet’s huge power consumption is in fact music and video streaming. According to research by The Shift Project, 80 percent of all data flows through the net in the form of moving images. Online videos – available on different platforms and viewed without being downloaded – account for almost 60 percent of global data transfer. Transmitting these moving images requires huge amounts of data. And the higher the resolution, the more data is sent and received.

According to The Shift Project, streamed online video causes more than 300 million tons of CO2 emissions per year (based on measurements taken in 2018). That is roughly what the whole of Spain emits in a year. Another comparison out of interest: streaming ten hours of film in HD requires more bits and bytes than all of the articles in the English Internet encyclopedia Wikipedia put together.

Another analysis suggests that streaming a Netflix video in 2019 typically consumed 0.12-0.24 kWh of electricity per hour, about 25 to 53 times less than The Shift Project estimates. Ralph Hintemann at the Borderstep Institute for Innovation and Sustainability stresses that while video streaming causes high greenhouse gas emissions, no one knows exactly how high the figures are. Concrete figures are difficult to determine because the results depend heavily on the choice of device, the type of network connection and the resolution.

Accessing the internet over a mobile network uses the most power, because buildings, vegetation and weather weaken the electromagnetic waves, which means that higher transmission power is required. But even with old copper cables, the signal has to be amplified, especially over long distances. Fibre optic cables, which transmit the signals via light, are definitely the most efficient form of transmission technology.

Powered by the average global electricity mix, streaming a 30-minute show on Netflix would currently release 28-57 grams of CO2. This is about 27 to 57 times less than the 1.6 kg estimated by The Shift Project. Ralph Hintemann and his research group have calculated that streaming one hour of video in full HD requires about 220 to 370 watt hours of electrical energy, depending on whether the video is streamed via tablet or TV. This adds up to around 100 to 175 grams of carbon dioxide and would be about the same as driving one kilometre in a small car.
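The CO2 figures for streaming all follow the same pattern: electricity used multiplied by the carbon intensity of the power supply. The sketch below illustrates this under one loudly stated assumption: a global average grid intensity of roughly 475 grams of CO2 per kWh. That value is not given in the article; it is used here only because it approximately reproduces the quoted figures. The function name is likewise hypothetical.

```python
# Assumption (not from the article): average global grid intensity in g CO2/kWh
ASSUMED_GRID_INTENSITY_G_PER_KWH = 475


def streaming_emissions_g(kwh_per_hour, hours,
                          grid_intensity=ASSUMED_GRID_INTENSITY_G_PER_KWH):
    """Return grams of CO2 for streaming at a given energy rate and duration."""
    return kwh_per_hour * hours * grid_intensity


if __name__ == "__main__":
    # 30-minute Netflix show at 0.12-0.24 kWh per hour of streaming
    netflix_low = streaming_emissions_g(0.12, 0.5)
    netflix_high = streaming_emissions_g(0.24, 0.5)
    print(f"Netflix, 30 min: {netflix_low:.1f} to {netflix_high:.1f} g CO2")

    # One hour of full HD at 220-370 Wh (Hintemann's range: tablet vs. TV)
    hd_low = streaming_emissions_g(0.220, 1)
    hd_high = streaming_emissions_g(0.370, 1)
    print(f"Full HD, 1 hour: {hd_low:.1f} to {hd_high:.1f} g CO2")
```

With this assumed intensity, the Netflix case lands at roughly 28 to 57 grams and the full HD case at roughly 105 to 176 grams, close to the ranges quoted above. Swapping in the intensity of a greener or dirtier grid shows how strongly the result depends on the type of power being used.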

Music streaming also comes off quite badly: a new study by the universities of Glasgow and Oslo shows that music streaming services emitted around 200 to 350 million kilograms of greenhouse gas in 2015 and 2016. That means that using streaming services such as Spotify or Apple Music is in many cases more harmful to the climate than the production (and subsequent disposal) of CDs or records.

Cloud computing is another major power guzzler. This is where data is no longer stored locally on a computer or smartphone, but on servers that can be located anywhere in the world, meaning it can be accessed anytime and anywhere. Checking your email via Gmail and backing up your photos to the cloud are just two examples of these kinds of services.

Most cryptocurrencies also consume large amounts of energy. One example of this is Bitcoin, probably the best-known digital currency. According to calculations by the Bitcoin Energy Consumption Index (2018), a single Bitcoin transaction consumes around 819 kWh. The same amount of energy could operate a 150-watt refrigerator for about eight months. And in a 2018 study, the Technical University of Munich determined that the entire Bitcoin system produces around 22 megatons of carbon dioxide per year, the same as the CO2 footprint of cities such as Hamburg, Vienna or Las Vegas.
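The refrigerator comparison can be checked with a few lines of arithmetic. The sketch assumes, as the comparison implies, that the refrigerator draws its full 150 watts continuously; the function name and the average month length of 30.4 days are illustrative choices.

```python
def runtime_days(energy_kwh, power_watts):
    """Return how many days a device drawing power_watts runs on energy_kwh."""
    hours = energy_kwh * 1000 / power_watts  # kWh -> Wh, then divide by W
    return hours / 24


if __name__ == "__main__":
    days = runtime_days(819, 150)  # one Bitcoin transaction, 150 W fridge
    print(round(days, 1))          # 227.5
    print(round(days / 30.4, 1))   # months, using an average month length
```

That works out to about 227 days, or roughly seven and a half months, in line with the article's "about eight months".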

But it’s not only the Bitcoin blockchain that’s energy-intensive. Other blockchains and distributed ledger technologies (DLTs) also entail a huge demand for energy. In our recent RESET Special Feature looking at how blockchain can be used for real-world positive impact, we delved even deeper into the question of whether blockchain and sustainability can ever truly go together.

Our digital energy consumption isn’t only determined by what we do, but also how we do it; the software we use also has a big impact. For example, a less efficient word processor needs four times as much energy to process the same document as an efficient one. At the same time, software updates often cause computers or smartphones to slow down or stop working, forcing consumers to buy new hardware.

And in the future, digitalisation’s growing demand for electricity will certainly also be driven by the spread of smart technologies, such as those we are using more and more at home, in the IoT sector, in industry and in our increasingly digitalised cities.

All roads lead to… energy-hungry data centres

Every action that is carried out online, no matter how small, travels in the form of a data packet through data centres and their servers. Looking at the energy use of data centres therefore gives us an idea of just how energy-hungry digitalisation is. It’s impossible to say with any certainty how high the current energy requirements of all data centres worldwide are. Current estimated calculations range from 200 to 500 billion kilowatt hours per year, an estimated percent of the world’s electricity. And future predictions differ considerably too – with figures between 200 billion and 3,000 billion kilowatt hours predicted for the year 2030.

Why do expert opinions differ so much? One reason is that there are no official figures for data centres yet. Another reason is that many operators are reluctant to provide information about their energy consumption due to concerns about security and competition. Researchers can therefore only get close to the real figures via detours, such as sales figures for servers or estimates from surveys.

And what exactly is using all that power?

First and foremost, a lot of power is needed to process the enormous data streams that we constantly send and receive via our devices. And when that data is processed, heat is generated as a waste product. To prevent the servers from overheating, additional energy is required for ventilation and to cool the servers down. On average, mechanical cooling is responsible for around 25 percent of the total power consumption of a data centre. And all that heat that’s produced? Usually it’s just released into the atmosphere – simply left to go to waste.

How can swimming pools make data centres more energy efficient?

Reducing the energy consumption of data centres is an important step towards making digitalisation more sustainable. There are three main ways to do that:

1. Finding the most efficient ways to cool data centres

One fairly simple-sounding and popular solution is to locate data centres in cooler countries and simply blow the outside air into them. This explains the multiple data centres located near the Arctic. Warmed, piped water is another way to cool banks of high-performance, hot computers, as is immersion cooling. Some companies are even working on using artificial intelligence to tune their cooling systems to match the weather and other fluctuating factors – in a bid to reduce their energy bills.

2. Re-using the waste heat

Data centres produce heat throughout the year. Ideally, this heat should be constantly extracted and reused elsewhere. But finding the right customers to recycle that heat can be a challenge. Many newer data centres use the waste heat within the data centre itself. But, in order to better exploit the potential of waste heat, a more comprehensive approach is needed. Sweden is considered an ideal location for data centres because of its cool climate, and it is also a pioneer when it comes to reusing their waste heat. The country relies heavily on a system of district heating (where heat is distributed via pipes to residential and commercial buildings from a centralised location), which makes it relatively easy to feed the waste heat from data centres into the network, thus heating the apartments that are connected.

The Elementica facility is the latest example. Fully expanded, the data centre is expected to recover up to 112 gigawatt hours of heat per year, covering the heating of tens of thousands of households. Another example is the Stockholm Data Parks initiative, which sees waste heat recycling as playing a key role in the city’s goal to be completely fossil fuel-free by 2040.

It’s also possible to feed the excess heat not only into local and district heating networks, but also into buildings such as swimming pools, laundries or greenhouses – places which permanently require heat. The first examples of this already exist. In Paris, a swimming pool is being supplied with heat from the servers of the animation studio next door. And the Irish company Ecologic Datacentres is currently planning a data centre that will use the waste heat to help grow vegetables in greenhouses and heat nearby homes.

The EU-funded ReUseHeat project is also working on innovative solutions for waste heat recovery. Nine European countries are to join forces over the next four years to make waste heat available at various locations in Europe.

3. Powering them with green electricity

If data centres are ever to be operated in an environmentally friendly, maybe even one day carbon-neutral way, they will have to be powered by clean, renewable sources of energy. While most countries’ energy mix still only contains a small fraction of renewables, some companies are starting to focus more on sourcing their very own energy – from wind or solar power.

Founded in April 2015 in German North Frisia, the start-up Windcloud uses energy from local wind farms to power its data centres. Since 2015, Windcloud has provided IT services from 100 percent locally generated and renewable energy. Hostsharing e.G. has a different approach. The webhoster is organised as a cooperative and focuses on resource-saving webhosting using cooperative energy and the use and support of open source software.

We also use a green service provider to host RESET.org. Hetzner Online uses electricity from renewable sources to power the servers in their own data centre parks.

Have your own website? You can check its carbon impact using the online Website Carbon Calculator as well as find tips on how to improve it.

If we’re ever going to seriously shrink the carbon footprint of data centres, then we need to bring in policy regulations that restrict their energy consumption and incentivise efficiency measures. Right now there is little motivation for data centre operators to do the right thing.

The ecological and social impact of our smart devices

While Silicon Valley is already working on microchip implants that, once planted under the skin, will give us automatic access to the digital world, right now we still rely on smartphones, tablets, computers and other smart gadgets – and a lot of them. Our obsession with electrical gadgets is responsible for the fastest-growing portion of the world’s garbage problem. According to statistics from the UN, an estimated 50 million tons of electronic waste are generated worldwide each year – and the trend is rising.

The consequences for people and the environment are fatal. More than half of the electronic trash we produce is shipped cheaply to countries of the global South. There the valuable raw materials are extracted, often under inhumane and unhealthy working conditions, causing pollution in the local environment. At the same time, most of the mineral raw materials used in our smart devices come from countries where there is a disregard not only for labour rights but also for environmental standards. The same applies to the entire manufacturing process.