Whenever we give feedback, it inevitably reflects our priorities and expectations about the assignment. In other words, we're using a rubric to choose which elements (e.g., right/wrong answer, work shown, thesis analysis, style, etc.) receive more or less feedback and what counts as a "good thesis" or a "less good thesis." When we evaluate student work, that is, we always have a rubric. The question is how consciously we’re applying it, whether we’re transparent with students about what it is, whether it’s aligned with what students are learning in our course, and whether we’re applying it consistently. The more we’re doing all of the following, the more consistent and equitable our feedback and grading will be:

Being conscious of your rubric ideally means having one written out, with explicit criteria and concrete features that describe more/less successful versions of each criterion. If you don't have a rubric written out, you can use this assignment prompt decoder for TFs & TAs to determine which elements and criteria should be the focus of your rubric.

Being transparent with students about your rubric means sharing it with them ahead of time and making sure they understand it. This assignment prompt decoder for students is designed to facilitate this discussion between students and instructors.

Aligning your rubric with your course means articulating the relationship between “this” assignment and the ones that scaffold up and build from it, which ideally involves giving students the chance to practice different elements of the assignment and get formative feedback before they’re asked to submit material that will be graded. For more ideas and advice on how this looks, see the "Formative Assignments" page at Gen Ed Writes.

Applying your rubric consistently means using a stable vocabulary when making your comments and keeping your feedback focused on the criteria in your rubric.

How to Build a Rubric

Rubrics and assignment prompts are two sides of a coin. If you’ve already created a prompt, you should have all of the information you need to make a rubric. Of course, it doesn’t always work out that way, and that itself turns out to be an advantage of making rubrics: it’s a great way to test whether your prompt is in fact communicating to students everything they need to know about the assignment they’ll be doing.

So what do students need to know? In general, assignment prompts boil down to a small number of common elements:

- Evidence and Analysis

- Style and Conventions

- Specific Guidelines

- Advice on Process

If an assignment prompt is clearly addressing each of these elements, then students know what they’re doing, why they’re doing it, and when/how/for whom they’re doing it. From the standpoint of a rubric, we can see how these elements correspond to the criteria for feedback:

All of these criteria can be weighed and given feedback, and they’re all things that students can be taught and given opportunities to practice. That makes them good criteria for a rubric, and that in turn is why they belong in every assignment prompt.

Which leaves “purpose” and “advice on process.” These elements are, in a sense, the heart and engine of any assignment, but their role in a rubric will differ from assignment to assignment. Here are a couple of ways to think about each.

On the one hand, “purpose” is the rationale for how the other elements are working in an assignment, and so feedback on them adds up to feedback on the skills students are learning vis-a-vis the overall purpose. In that sense, separately grading whether students have achieved an assignment’s “purpose” can be tricky.

On the other hand, metacognitive components such as journals or cover letters or artist statements are a great way for students to tie work on their assignment to the broader (often future-oriented) reasons why they’ve been doing the assignment. Making this kind of component a small part of the overall grade, e.g., 5% and/or part of “specific guidelines,” can allow it to be a nudge toward a meaningful self-reflection for students on what they’ve been learning and how it might build toward other assignments or experiences.

Advice on process

As with “purpose,” “advice on process” often amounts to helping students break down an assignment into the elements they’ll get feedback on. In that sense, feedback on those steps is often more informal or aimed at giving students practice with skills or components that will be parts of the bigger assignment.

For those reasons, though, the kind of feedback we give students on smaller steps has its own (even if ungraded) rubric. For example, if a prompt asks students to propose a research question as part of the bigger project, they might get feedback on whether it can be answered by evidence, or whether it has a feasible scope, or who the audience for its findings might be. All of those criteria, in turn, could (and ideally would) later be part of the rubric for the graded project itself. Or perhaps students are submitting earlier, smaller components of an assignment for separate grades, or are expected to submit separate components all together at the end as a portfolio, perhaps together with a cover letter or artist statement.

Using Rubrics Effectively

In the same way that rubrics can facilitate the design phase of an assignment, they can also facilitate the teaching and feedback phases, including of course grading. Here are a few ways this can work in a course:

Discuss the rubric ahead of time with your teaching team. Getting on the same page about what students will be doing and how different parts of the assignment fit together is, in effect, laying out what needs to happen in class and in section, both in terms of what students need to learn and practice, and how the coming days or weeks should be sequenced.

Share the rubric with your students ahead of time. For the same reason it's ideal for course heads to discuss rubrics with their teaching team, it’s ideal for the teaching team to discuss the rubric with students. Not only does the rubric lay out the different skills students will learn during an assignment and which skills are more or less important for that assignment, it means that the formative feedback they get along the way is more legible as getting practice on elements of the “bigger assignment.” To be sure, this can’t always happen. Rubrics aren’t always up and running at the beginning of an assignment, and sometimes they emerge more inductively during the feedback and grading process, as instructors take stock of what students have actually submitted. In both cases, later is better than never—there’s no need to make the perfect the enemy of the good. Circulating a rubric at the time you return student work can still be a valuable tool to help students see the relationship between the learning objectives and goals of the assignment and the feedback and grade they’ve received.

Discuss the rubric with your teaching team during the grading process. If your assignment has a rubric, it’s important to make sure that everyone who will be grading is able to use the rubric consistently. Most rubrics aren’t exhaustive—see the note above on rubrics that are “too specific”—and a great way to see how different graders are handling “real-life” scenarios for an assignment is to have the entire team grade a few samples (including examples that seem more representative of an “A” or a “B”) and compare everyone’s approaches. We suggest scheduling a grade-norming session for your teaching staff.
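One way to prepare for a grade-norming session is to tabulate how far apart graders landed on the same sample papers. The sketch below is only an illustration: the grader names, the scores, and the one-point threshold are all hypothetical, and the "spread" measure (highest score minus lowest) is a simple stand-in rather than a formal inter-rater statistic.

```python
from statistics import mean

# Hypothetical data: three graders score the same three samples on a 5-point scale.
scores = {
    "sample_1": {"grader_a": 4, "grader_b": 4, "grader_c": 5},
    "sample_2": {"grader_a": 2, "grader_b": 4, "grader_c": 3},
    "sample_3": {"grader_a": 5, "grader_b": 5, "grader_c": 5},
}


def norming_report(scores, max_spread=1):
    """Summarize each sample: mean score, spread (max - min), and whether
    the spread exceeds max_spread and is worth discussing as a team."""
    report = {}
    for sample, by_grader in scores.items():
        values = list(by_grader.values())
        spread = max(values) - min(values)
        report[sample] = {
            "mean": mean(values),
            "spread": spread,
            "discuss": spread > max_spread,
        }
    return report


report = norming_report(scores)
for sample, row in report.items():
    print(sample, row)
```

Samples flagged for discussion are the ones where graders are probably reading a criterion differently, which is where the team's time in the session is best spent.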

Examples of Rubric Creation

Creating a rubric takes time and requires thought and experimentation. Here you can see the steps used to create two kinds of rubric: one for problems in a physics exam for a small, upper-division physics course, and another for an essay assignment in a large, lower-division sociology course.

Physics Problems

In STEM disciplines (science, technology, engineering, and mathematics), assignments tend to be analytical and problem-based. Holistic rubrics can be an efficient, consistent, and fair way to grade a problem set. An analytic rubric often gives a clearer picture of where a student should direct their future learning efforts. Since holistic rubrics try to label overall understanding, they can lead to more regrade requests than an analytic rubric with more explicit criteria. When starting to grade a problem, first think about the relevant conceptual ingredients in the solution. Then look at a sample of student work to get a feel for student mistakes. Decide what rubric you will use (e.g., holistic or analytic, and how many points). Apply the holistic rubric by marking comments and sorting the students’ assignments into stacks (e.g., five stacks if using a five-point scale). Finally, check the stacks for consistency and mark the scores. The following is a sample homework problem from a UC Berkeley Physics Department undergraduate course in mechanics.

Homework Problem

Learning objective.

Solve for position and speed along a projectile’s trajectory.

Desired Traits: Conceptual Elements Needed for the Solution

- Decompose motion into vertical and horizontal axes.

- Identify that the maximum height occurs when the vertical velocity is 0.

- Apply kinematics equation with g as the acceleration to solve for the time and height.

- Evaluate the numerical expression.
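The four conceptual elements above can be traced through a short calculation. The problem statement itself is not reproduced here, so the launch speed (20 m/s), launch angle (30 degrees), and the function name `max_height` are assumptions chosen only to illustrate the steps a grader would look for.

```python
import math

G = 9.8  # gravitational acceleration in m/s^2


def max_height(speed, angle_deg, g=G):
    """Find the time and height of the peak of a projectile's trajectory."""
    angle = math.radians(angle_deg)
    # Element 1: decompose the motion into horizontal and vertical components.
    vx = speed * math.cos(angle)
    vy = speed * math.sin(angle)
    # Element 2: at maximum height the vertical velocity is 0, so vy - g*t = 0.
    t_peak = vy / g
    # Element 3: apply the kinematics equation y = vy*t - (1/2)*g*t^2.
    height = vy * t_peak - 0.5 * g * t_peak**2
    # Element 4: the numerical values are evaluated when the function is called.
    return {"vx": vx, "vy": vy, "t_peak": t_peak, "height": height}


# Hypothetical inputs: 20 m/s at 30 degrees above the horizontal.
result = max_height(speed=20.0, angle_deg=30.0)
print(f"peak at t = {result['t_peak']:.2f} s, height = {result['height']:.2f} m")
```

With these assumed inputs the peak height is about 5.1 m. A student who sets up the first three steps correctly but slips in the arithmetic has still shown most of the understanding the learning objective targets.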

A note on analytic rubrics: If you decide you feel more comfortable grading with an analytic rubric, you can assign a point value to each concept. The drawback to this method is that it can sometimes unfairly penalize a student who has a good understanding of the problem but makes a lot of minor errors. Because the analytic method tends to have many more parts, the method can take quite a bit more time to apply. In the end, your analytic rubric should give results that agree with the common-sense assessment of how well the student understood the problem. This sense is well captured by the holistic method.

Holistic Rubric

A holistic rubric, closely based on a rubric by Bruce Birkett and Andrew Elby:

[a] This policy especially makes sense on exam problems, for which students are under time pressure and are more likely to make harmless algebraic mistakes. It would also be reasonable to have stricter standards for homework problems.

Analytic Rubric

The following is an analytic rubric that takes the desired traits of the solution and assigns point values to each of the components. Note that the relative point values should reflect the importance in the overall problem. For example, the steps of the problem solving should be worth more than the final numerical value of the solution. This rubric also provides clarity for where students are lacking in their current understanding of the problem.
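As a rough illustration of weighting, the sketch below assigns point values to the four desired traits listed earlier and totals a student's score. The point values (3, 3, 3, 1) and the short criterion keys are hypothetical, not the values from the original rubric; they only show the problem-solving steps outweighing the final number.

```python
# Hypothetical point values: the three reasoning steps outweigh the final number.
RUBRIC = {
    "decompose_axes": 3,       # decompose motion into vertical and horizontal axes
    "zero_vertical_speed": 3,  # identify that max height occurs when vertical velocity is 0
    "apply_kinematics": 3,     # apply kinematics equation with g as the acceleration
    "evaluate_number": 1,      # evaluate the numerical expression
}


def score(earned):
    """Total the points earned, clamping each criterion to the range 0..max.

    Returns a (points_earned, points_possible) tuple.
    """
    total = 0
    for criterion, max_points in RUBRIC.items():
        points = earned.get(criterion, 0)
        total += min(max(points, 0), max_points)
    return total, sum(RUBRIC.values())


# A student who reasons correctly but makes an arithmetic slip at the end.
student = {
    "decompose_axes": 3,
    "zero_vertical_speed": 3,
    "apply_kinematics": 3,
    "evaluate_number": 0,
}
earned, possible = score(student)
print(f"{earned} / {possible}")
```

Under these assumed weights the arithmetic slip costs one point out of ten, which keeps the analytic total in line with a common-sense judgment of the student's understanding.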

Try to avoid penalizing multiple times for the same mistake by choosing your evaluation criteria to be related to distinct learning outcomes. In designing your rubric, you can decide how finely to evaluate each component. Having more possible point values on your rubric can give more detailed feedback on a student’s performance, though it typically takes more time for the grader to assess.

Of course, problems can, and often do, feature the use of multiple learning outcomes in tandem. When a mistake could be assigned to multiple criteria, it is advisable to check that the overall problem grade is consistent with the student’s mastery of the problem. Not having to decide how particular mistakes should be deducted from the analytic rubric is one advantage of the holistic rubric. When designing problems, it can be very beneficial for students to avoid problems with several subparts that rely on prior answers. These tend to disproportionately skew the grades of students who miss an ingredient early on. When possible, consider making independent problems for testing different learning outcomes.

Sociology Research Paper

An introductory-level, large-lecture course is a difficult setting for managing a student research assignment. With the assistance of an instructional support team that included a GSI teaching consultant and a UC Berkeley librarian [b] , sociology lecturer Mary Kelsey developed the following assignment:

This was a lengthy and complex assignment worth a substantial portion of the course grade. Since the class was very large, the instructor wanted to minimize the effort it would take her GSIs to grade the papers in a manner consistent with the assignment’s learning objectives. For these reasons Dr. Kelsey and the instructional team gave a lot of forethought to crafting a detailed grading rubric.

Desired Traits

- Argument

- Use and interpretation of data

- Reflection on personal experiences

- Application of course readings and materials

- Organization, writing, and mechanics

For this assignment, the instructional team decided to grade each trait individually because there seemed to be too many independent variables to grade holistically. They could have used a five-point scale, a three-point scale, or a descriptive analytic scale. The choice depended on the complexity of the assignment and the kind of information they wanted to convey to students about their work.

Below are three of the analytic rubrics they considered for the Argument trait and a holistic rubric for all the traits together. Lastly you will find the entire analytic rubric, for all five desired traits, that was finally used for the assignment. Which would you choose, and why?

Five-Point Scale

Three-Point Scale

Simplified Three-Point Scale, Numbers Replaced with Descriptive Terms

For some assignments, you may choose to use a holistic rubric, or one scale for the whole assignment. This type of rubric is particularly useful when the variables you want to assess just cannot be usefully separated. We chose not to use a holistic rubric for this assignment because we wanted to be able to grade each trait separately, but we’ve completed a holistic version here for comparative purposes.

Final Analytic Rubric

This is the rubric the instructor finally decided to use. It rates five major traits, each on a five-point scale. This allowed for fine but clear distinctions in evaluating the students’ final papers.

[b] These materials were developed during UC Berkeley’s 2005–2006 Mellon Library/Faculty Fellowship for Undergraduate Research program. Members of the instructional team who worked with Lecturer Kelsey in developing the grading rubric included Susan Haskell-Khan, a GSI Center teaching consultant and doctoral candidate in history, and Sarah McDaniel, a teaching librarian with the Doe/Moffitt Libraries.

Science Rubrics

Exemplars science material includes standards-based rubrics that define what work meets a standard, and allows teachers (and students) to distinguish between different levels of performance.

Our science rubrics have four levels of performance: Novice, Apprentice, Practitioner (meets the standard), and Expert.

Exemplars uses two types of rubrics:

- Standards-Based Assessment Rubrics are used by teachers to assess student work in science. (Exemplars science material includes both a general science rubric as well as task-specific rubrics with each investigation.)

- Student Rubrics are used by learners in peer- and self-assessment.

Assessment Rubrics

Standards-based science rubric.

This rubric is based on science standards from the National Research Council and the American Association for the Advancement of Science.

K–2 Science Continuum

This continuum was developed by an Exemplars workshop leader and task writer, Tracy Lavallee. It provides a framework for assessing the scientific thinking of young students.

Student Rubrics

Seed rubric.

This rubric is appropriate for use with younger children. It shows how a seed develops, from being planted to becoming a flowering plant. Each growth level represents a different level of performance.

What I Need to Do

While not exactly a rubric, this guide assists students in demonstrating what they have done to meet each criterion in the rubric. The student is asked in each criterion to describe what they need to do and the evidence of what they did.

Rubric Best Practices, Examples, and Templates

A rubric is a scoring tool that identifies the different criteria relevant to an assignment, assessment, or learning outcome and states the possible levels of achievement in a specific, clear, and objective way. Use rubrics to assess project-based student work including essays, group projects, creative endeavors, and oral presentations.

Rubrics can help instructors communicate expectations to students and assess student work fairly, consistently and efficiently. Rubrics can provide students with informative feedback on their strengths and weaknesses so that they can reflect on their performance and work on areas that need improvement.

How to Get Started

Step 1: Analyze the assignment

The first step in the rubric creation process is to analyze the assignment or assessment for which you are creating a rubric. To do this, consider the following questions:

- What is the purpose of the assignment and your feedback? What do you want students to demonstrate through the completion of this assignment (i.e. what are the learning objectives measured by it)? Is it a summative assessment, or will students use the feedback to create an improved product?

- Does the assignment break down into different or smaller tasks? Are these tasks equally important as the main assignment?

- What would an “excellent” assignment look like? An “acceptable” assignment? One that still needs major work?

- How detailed do you want the feedback you give students to be? Do you want/need to give them a grade?

Step 2: Decide what kind of rubric you will use

Types of rubrics: holistic, analytic/descriptive, single-point

Holistic Rubric. A holistic rubric includes all the criteria (such as clarity, organization, mechanics, etc.) to be considered together and included in a single evaluation. With a holistic rubric, the rater or grader assigns a single score based on an overall judgment of the student’s work, using descriptions of each performance level to assign the score.

Advantages of holistic rubrics:

- Can place an emphasis on what learners can demonstrate rather than what they cannot

- Save grader time by minimizing the number of evaluations to be made for each student

- Can be used consistently across raters, provided they have all been trained

Disadvantages of holistic rubrics:

- Provide less specific feedback than analytic/descriptive rubrics

- Can be difficult to choose a score when a student’s work is at varying levels across the criteria

- Cannot indicate any weighting of criteria in the rubric

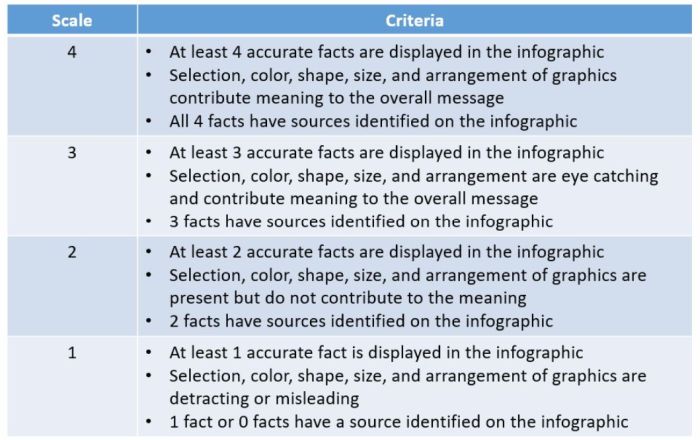

Analytic/Descriptive Rubric. An analytic or descriptive rubric often takes the form of a table with the criteria listed in the left column and with levels of performance listed across the top row. Each cell contains a description of what the specified criterion looks like at a given level of performance. Each of the criteria is scored individually.

Advantages of analytic rubrics:

- Provide detailed feedback on areas of strength or weakness

- Each criterion can be weighted to reflect its relative importance

Disadvantages of analytic rubrics:

- More time-consuming to create and use than a holistic rubric

- May not be used consistently across raters unless the cells are well defined

- May result in giving less personalized feedback

Single-Point Rubric. A single-point rubric breaks down the components of an assignment into different criteria, but instead of describing different levels of performance, only the “proficient” level is described. Feedback space is provided for instructors to give individualized comments to help students improve and/or show where they excelled beyond the proficiency descriptors.

Advantages of single-point rubrics:

- Easier to create than an analytic/descriptive rubric

- Perhaps more likely that students will read the descriptors

- Areas of concern and excellence are open-ended

- May remove a focus on the grade/points

- May increase student creativity in project-based assignments

Disadvantage of single-point rubrics: Requires more work for instructors writing feedback

Step 3 (Optional): Look for templates and examples.

You might search Google for “Rubric for persuasive essay at the college level” and see if there are any publicly available examples to start from. Ask your colleagues if they have used a rubric for a similar assignment. Some examples are also available at the end of this article. These rubrics can be a great starting point for you, but consider steps 4, 5, and 6 below to ensure that the rubric matches your assignment description, learning objectives and expectations.

Step 4: Define the assignment criteria

Make a list of the knowledge and skills you are measuring with the assignment/assessment. Refer to your stated learning objectives, the assignment instructions, past examples of student work, etc. for help.

Helpful strategies for defining grading criteria:

- Collaborate with co-instructors, teaching assistants, and other colleagues

- Brainstorm and discuss with students

- Can they be observed and measured?

- Are they important and essential?

- Are they distinct from other criteria?

- Are they phrased in precise, unambiguous language?

- Revise the criteria as needed

- Consider whether some are more important than others, and how you will weight them.

Step 5: Design the rating scale

Most rating scales include between 3 and 5 levels. Consider the following questions when designing your rating scale:

- Given what students are able to demonstrate in this assignment/assessment, what are the possible levels of achievement?

- How many levels would you like to include? (More levels means more detailed descriptions.)

- Will you use numbers and/or descriptive labels for each level of performance? (for example 5, 4, 3, 2, 1 and/or Exceeds expectations, Accomplished, Proficient, Developing, Beginning, etc.)

- Don’t use too many columns, and recognize that some criteria can have more columns than others. The rubric needs to be comprehensible and organized. Pick the right number of columns so that the criteria flow logically and naturally across levels.

Step 6: Write descriptions for each level of the rating scale

Artificial intelligence tools like ChatGPT have proven to be useful for creating a rubric. You will want to engineer the prompt that you provide to the AI assistant to ensure you get what you want. For example, you might provide the assignment description, the criteria you feel are important, and the number of levels of performance you want in your prompt. Use the results as a starting point, and adjust the descriptions as needed.
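The three ingredients mentioned above (assignment description, criteria, and number of levels) can be assembled into a prompt in a repeatable way. This is a minimal sketch: the function name `build_rubric_prompt`, the wording of the prompt, and the sample assignment are all hypothetical, and the code only builds the text to paste into an AI assistant without calling any service.

```python
def build_rubric_prompt(assignment, criteria, levels):
    """Assemble a rubric-drafting prompt from the assignment description,
    the criteria to assess, and the names of the performance levels."""
    criteria_lines = "\n".join(f"- {criterion}" for criterion in criteria)
    level_list = ", ".join(levels)
    return (
        "Draft an analytic rubric for the assignment below.\n\n"
        f"Assignment description:\n{assignment}\n\n"
        f"Criteria to assess:\n{criteria_lines}\n\n"
        f"Use {len(levels)} levels of performance: {level_list}.\n"
        "Write one observable, measurable descriptor for every criterion "
        "at every level, using parallel language across levels."
    )


# Hypothetical assignment and criteria, for illustration only.
prompt = build_rubric_prompt(
    assignment="Write a 5-page persuasive essay on a local policy issue.",
    criteria=["Thesis", "Use of evidence", "Organization"],
    levels=["Exceeds expectations", "Proficient", "Developing"],
)
print(prompt)
```

Keeping the prompt in a function makes it easy to reuse the same structure across assignments and to adjust the wording once you see what kind of draft it produces.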

Building a rubric from scratch

For a single-point rubric, describe what would be considered “proficient,” i.e. B-level work, and provide that description. You might also include suggestions for students outside of the actual rubric about how they might surpass proficient-level work.

For analytic and holistic rubrics, create statements of expected performance at each level of the rubric.

- Consider what descriptor is appropriate for each criterion, e.g., presence vs absence, complete vs incomplete, many vs none, major vs minor, consistent vs inconsistent, always vs never. If you have an indicator described in one level, it will need to be described in each level.

- You might start with the top/exemplary level. What does it look like when a student has achieved excellence for each/every criterion? Then, look at the “bottom” level. What does it look like when a student has not achieved the learning goals in any way? Then, complete the in-between levels.

- For an analytic rubric, do this for each particular criterion of the rubric so that every cell in the table is filled. These descriptions help students understand your expectations and their performance in regard to those expectations.

Well-written descriptions:

- Describe observable and measurable behavior

- Use parallel language across the scale

- Indicate the degree to which the standards are met

Step 7: Create your rubric

Create your rubric in a table or spreadsheet in Word, Google Docs, Sheets, etc., and then transfer it by typing it into Moodle. You can also use online tools to create the rubric, but you will still have to type the criteria, indicators, levels, etc., into Moodle. Rubric creators: Rubistar, iRubric
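If you prefer to draft the rubric as data before opening a spreadsheet, a short script can write it out as CSV, which Word, Google Sheets, and similar tools can open as a table. The sketch below is illustrative only: the criteria, level names, and descriptors are hypothetical, and it uses nothing beyond Python's standard `csv` module. It does not upload anything to Moodle, so the rubric still needs to be typed in there.

```python
import csv
import io

# Hypothetical levels (columns) and criteria (rows) for illustration.
levels = ["Exceeds expectations", "Proficient", "Developing"]
rubric = {
    "Thesis": [
        "Arguable claim that previews the supporting reasons",
        "Arguable claim",
        "Topic restated without a claim",
    ],
    "Evidence": [
        "Every reason supported by a cited source",
        "Most reasons supported by a cited source",
        "Few reasons supported by a cited source",
    ],
}


def rubric_to_csv(levels, rubric):
    """Return the rubric as CSV text: criteria down the left column,
    performance levels across the top row, one descriptor per cell."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["Criterion", *levels])
    for criterion, descriptors in rubric.items():
        if len(descriptors) != len(levels):
            raise ValueError(f"{criterion!r} needs one descriptor per level")
        writer.writerow([criterion, *descriptors])
    return buffer.getvalue()


csv_text = rubric_to_csv(levels, rubric)
print(csv_text)
```

The length check enforces the earlier advice that every cell in an analytic rubric should be filled.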

Step 8: Pilot-test your rubric

Prior to implementing your rubric on a live course, obtain feedback from:

- Teaching assistants

Try out your new rubric on a sample of student work. After you pilot-test your rubric, analyze the results to consider its effectiveness and revise accordingly.

- Limit the rubric to a single page for reading and grading ease

- Use parallel language. Use similar language and syntax/wording from column to column. Make sure that the rubric can be easily read from left to right or vice versa.

- Use student-friendly language. Make sure the language is learning-level appropriate. If you use academic language or concepts, you will need to teach those concepts.

- Share and discuss the rubric with your students. Students should understand that the rubric is there to help them learn, reflect, and self-assess. If students use a rubric, they will understand the expectations and their relevance to learning.

- Consider scalability and reusability of rubrics. Create rubric templates that you can alter as needed for multiple assignments.

- Maximize the descriptiveness of your language. Avoid words like “good” and “excellent.” For example, instead of saying, “uses excellent sources,” you might describe what makes a resource excellent so that students will know. You might also consider reducing the reliance on quantity, such as a number of allowable misspelled words. Focus instead, for example, on how distracting any spelling errors are.

Example of an analytic rubric for a final paper

Example of a holistic rubric for a final paper

Single-point rubric

More examples:

- Single Point Rubric Template (variation)

- Analytic Rubric Template (make a copy to edit)

- A Rubric for Rubrics

- Bank of Online Discussion Rubrics in different formats

- Mathematical Presentations Descriptive Rubric

- Math Proof Assessment Rubric

- Kansas State Sample Rubrics

- Design Single Point Rubric

Technology Tools: Rubrics in Moodle

- Moodle Docs: Rubrics

- Moodle Docs: Grading Guide (use for single-point rubrics)

Tools with rubrics (other than Moodle)

- Google Assignments

- Turnitin Assignments: Rubric or Grading Form

Other resources

- DePaul University (n.d.). Rubrics.

- Gonzalez, J. (2014). Know your terms: Holistic, Analytic, and Single-Point Rubrics. Cult of Pedagogy.

- Goodrich, H. (1996). Understanding rubrics. Teaching for Authentic Student Performance, 54 (4), 14-17. Retrieved from

- Miller, A. (2012). Tame the beast: tips for designing and using rubrics.

- Ragupathi, K., Lee, A. (2020). Beyond Fairness and Consistency in Grading: The Role of Rubrics in Higher Education. In: Sanger, C., Gleason, N. (eds) Diversity and Inclusion in Global Higher Education. Palgrave Macmillan, Singapore.

Essay Rubric

About this printout

This rubric delineates specific expectations about an essay assignment to students and provides a means of assessing completed student essays.

Teaching with this printout

More ideas to try.

Grading rubrics can be of great benefit to both you and your students. For you, a rubric saves time and decreases subjectivity. Specific criteria are explicitly stated, facilitating the grading process and increasing your objectivity. For students, the use of grading rubrics helps them to meet or exceed expectations, to view the grading process as being “fair,” and to set goals for future learning. In order to help your students meet or exceed expectations of the assignment, be sure to discuss the rubric with your students when you assign an essay. It is helpful to show them examples of written pieces that meet and do not meet the expectations. As an added benefit, because the criteria are explicitly stated, the use of the rubric decreases the likelihood that students will argue about the grade they receive. The explicitness of the expectations helps students know exactly why they lost points on the assignment and aids them in setting goals for future improvement.

- Routinely have students score peers’ essays using the rubric as the assessment tool. This increases their level of awareness of the traits that distinguish successful essays from those that fail to meet the criteria. Have peer editors use the Reviewer’s Comments section to add any praise, constructive criticism, or questions.

- Alter some expectations or add additional traits on the rubric as needed. Students’ needs may necessitate making more rigorous criteria for advanced learners or less stringent guidelines for younger or special needs students. Furthermore, the content area for which the essay is written may require some alterations to the rubric. In social studies, for example, an essay about geographical landforms and their effect on the culture of a region might necessitate additional criteria about the use of specific terminology.

- After you and your students have used the rubric, have them work in groups to make suggested alterations to the rubric to more precisely match their needs or the parameters of a particular writing assignment.

15 Helpful Scoring Rubric Examples for All Grades and Subjects

In the end, they actually make grading easier.

When it comes to student assessment and evaluation, there are a lot of methods to consider. In some cases, testing is the best way to assess a student’s knowledge, and the answers are either right or wrong. But often, assessing a student’s performance is much less clear-cut. In these situations, a scoring rubric is often the way to go, especially if you’re using standards-based grading. Here’s what you need to know about this useful tool, along with lots of rubric examples to get you started.

What is a scoring rubric?

In the United States, a rubric is a guide that lays out the performance expectations for an assignment. It helps students understand what’s required of them, and guides teachers through the evaluation process. (Note that in other countries, the term “rubric” may instead refer to the set of instructions at the beginning of an exam. To avoid confusion, some people use the term “scoring rubric” instead.)

A rubric generally has three parts:

- Performance criteria: These are the various aspects on which the assignment will be evaluated. They should align with the desired learning outcomes for the assignment.

- Rating scale: This could be a number system (often 1 to 4) or words like “exceeds expectations, meets expectations, below expectations,” etc.

- Indicators: These describe the qualities needed to earn a specific rating for each of the performance criteria. The level of detail may vary depending on the assignment and the purpose of the rubric itself.

Rubrics take more time to develop up front, but they help ensure more consistent assessment, especially when the skills being assessed are more subjective. A well-developed rubric can actually save teachers a lot of time when it comes to grading. What’s more, sharing your scoring rubric with students in advance often helps improve performance. This way, students have a clear picture of what’s expected of them and what they need to do to achieve a specific grade or performance rating.

Learn more about why and how to use a rubric here.

Types of Rubric

There are three basic rubric categories, each with its own purpose.

Holistic Rubric

Source: Cambrian College

This type of rubric combines all the scoring criteria in a single scale. They’re quick to create and use, but they have drawbacks. If a student’s work spans different levels, it can be difficult to decide which score to assign. They also make it harder to provide feedback on specific aspects.

Traditional letter grades are a type of holistic rubric. So are the popular “hamburger rubric” and “cupcake rubric” examples. Learn more about holistic rubrics here.

Analytic Rubric

Source: University of Nebraska

Analytic rubrics are much more complex and generally take a great deal more time up front to design. They include specific details of the expected learning outcomes, and descriptions of what criteria are required to meet various performance ratings in each. Each rating is assigned a point value, and the total number of points earned determines the overall grade for the assignment.
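The point-totaling step described above can be sketched in a few lines of Python. This is only an illustration: the criteria names, rating labels, and point values in `RUBRIC` are hypothetical and would come from your own rubric.

```python
# Hypothetical analytic rubric: each criterion maps rating labels to points.
RUBRIC = {
    "thesis": {"exceeds": 4, "meets": 3, "approaching": 2, "below": 1},
    "evidence": {"exceeds": 4, "meets": 3, "approaching": 2, "below": 1},
    "conventions": {"exceeds": 4, "meets": 3, "approaching": 2, "below": 1},
}


def score_assignment(ratings, rubric=RUBRIC):
    """Return (points earned, points possible) for one student's ratings."""
    missing = set(rubric) - set(ratings)
    if missing:
        raise ValueError(f"No rating given for: {sorted(missing)}")
    # Look up the point value of the rating chosen for each criterion.
    earned = sum(rubric[criterion][ratings[criterion]] for criterion in rubric)
    # The best possible score is the top rating on every criterion.
    possible = sum(max(levels.values()) for levels in rubric.values())
    return earned, possible


earned, possible = score_assignment(
    {"thesis": "meets", "evidence": "exceeds", "conventions": "approaching"}
)
print(f"{earned} out of {possible} points")
```

Keeping the rubric as data rather than hard-coded arithmetic makes it easy to add a criterion or reweight a rating without touching the scoring function.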

Though they’re more time-intensive to create, analytic rubrics actually save time while grading. Teachers can simply circle or highlight any relevant phrases in each rating, and add a comment or two if needed. They also help ensure consistency in grading, and make it much easier for students to understand what’s expected of them.

Learn more about analytic rubrics here.

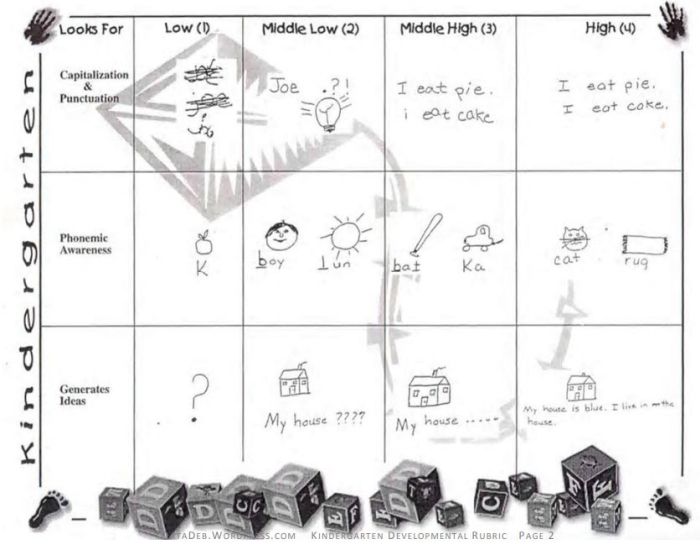

Developmental Rubric

Source: Deb’s Data Digest

A developmental rubric is a type of analytic rubric, but it’s used to assess progress along the way rather than determining a final score on an assignment. The details in these rubrics help students understand their achievements, as well as highlight the specific skills they still need to improve.

Unlike other analytic rubrics, developmental rubrics leave off the point values and focus instead on giving feedback using the criteria and indicators of performance.

Learn how to use developmental rubrics here.

Ready to create your own rubrics? Find general tips on designing rubrics here. Then, check out these examples across all grades and subjects to inspire you.

Elementary School Rubric Examples

These elementary school rubric examples come from real teachers who use them with their students. Adapt them to fit your needs and grade level.

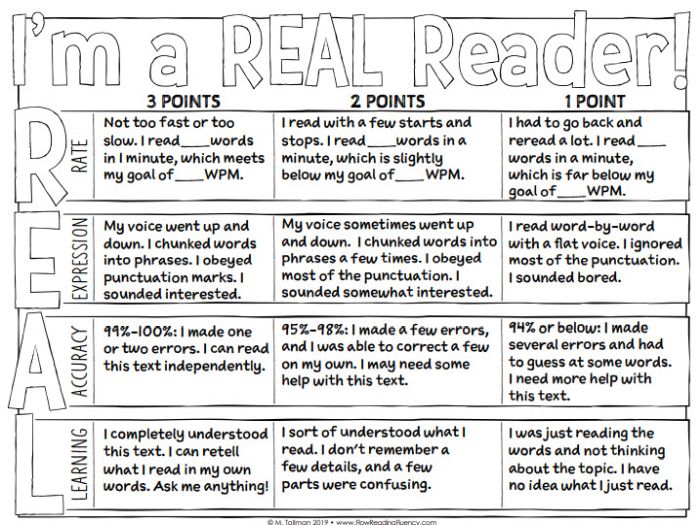

Reading Fluency Rubric

You can use this one as an analytic rubric by counting up points to earn a final score, or just to provide developmental feedback. There’s a second rubric page available specifically to assess prosody (reading with expression).

Learn more: Teacher Thrive

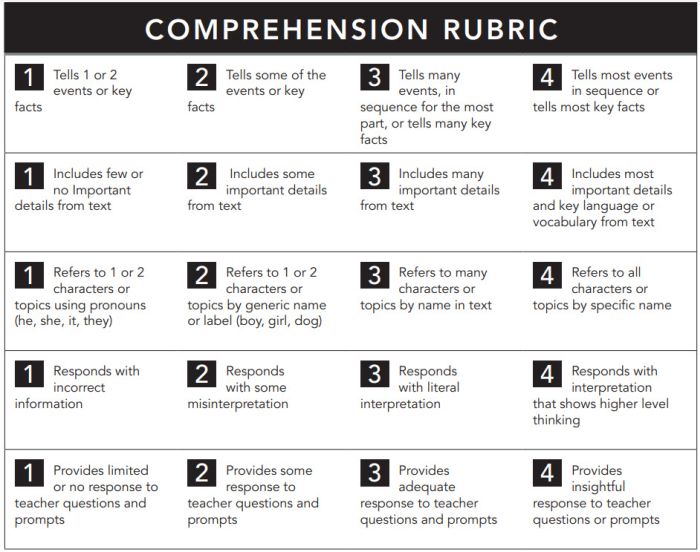

Reading Comprehension Rubric

The nice thing about this rubric is that you can use it at any grade level, for any text. If you like this style, you can get a reading fluency rubric here too.

Learn more: Pawprints Resource Center

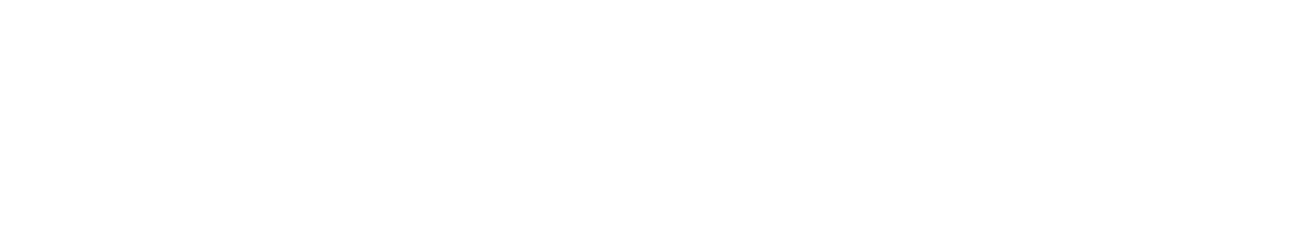

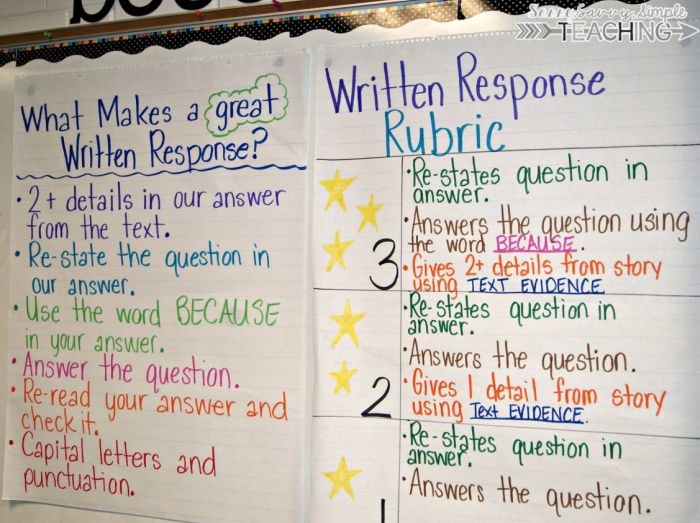

Written Response Rubric

Rubrics aren’t just for huge projects. They can also help kids work on very specific skills, like this one for improving written responses on assessments.

Learn more: Dianna Radcliffe: Teaching Upper Elementary and More

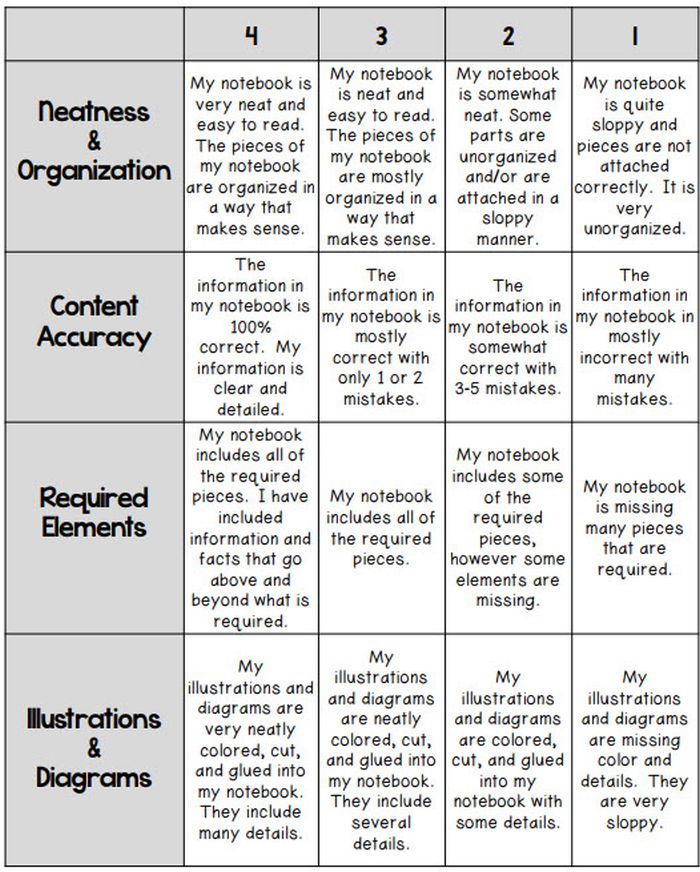

Interactive Notebook Rubric

If you use interactive notebooks as a learning tool, this rubric can help kids stay on track and meet your expectations.

Learn more: Classroom Nook

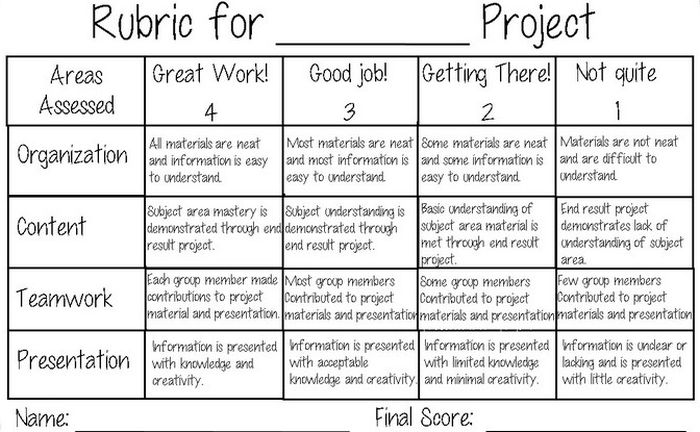

Project Rubric

Use this simple rubric as it is, or tweak it to include more specific indicators for the project you have in mind.

Learn more: Tales of a Title One Teacher

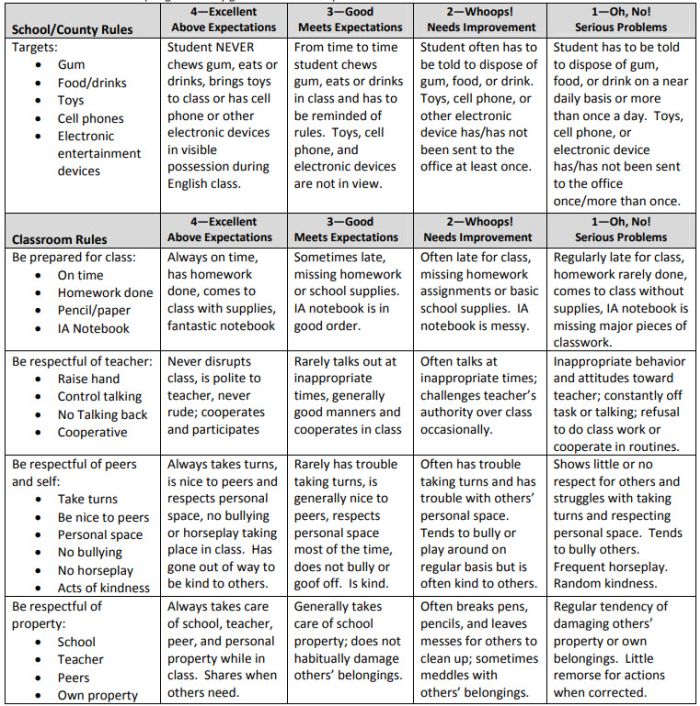

Behavior Rubric

Developmental rubrics are perfect for assessing behavior and helping students identify opportunities for improvement. Send these home regularly to keep parents in the loop.

Learn more: Teachers.net Gazette

Middle School Rubric Examples

In middle school, use rubrics to offer detailed feedback on projects, presentations, and more. Be sure to share them with students in advance, and encourage them to use them as they work so they’ll know if they’re meeting expectations.

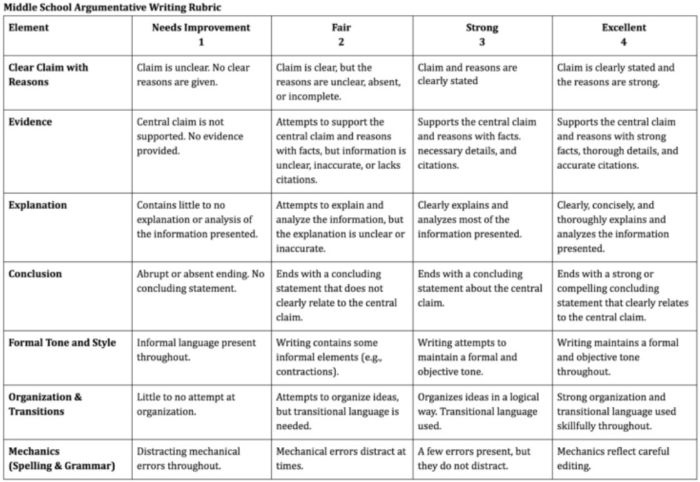

Argumentative Writing Rubric

Argumentative writing is a part of language arts, social studies, science, and more. That makes this rubric especially useful.

Learn more: Dr. Caitlyn Tucker

Role-Play Rubric

Role-plays can be really useful when teaching social and critical thinking skills, but it’s hard to assess them. Try a rubric like this one to evaluate and provide useful feedback.

Learn more: A Question of Influence

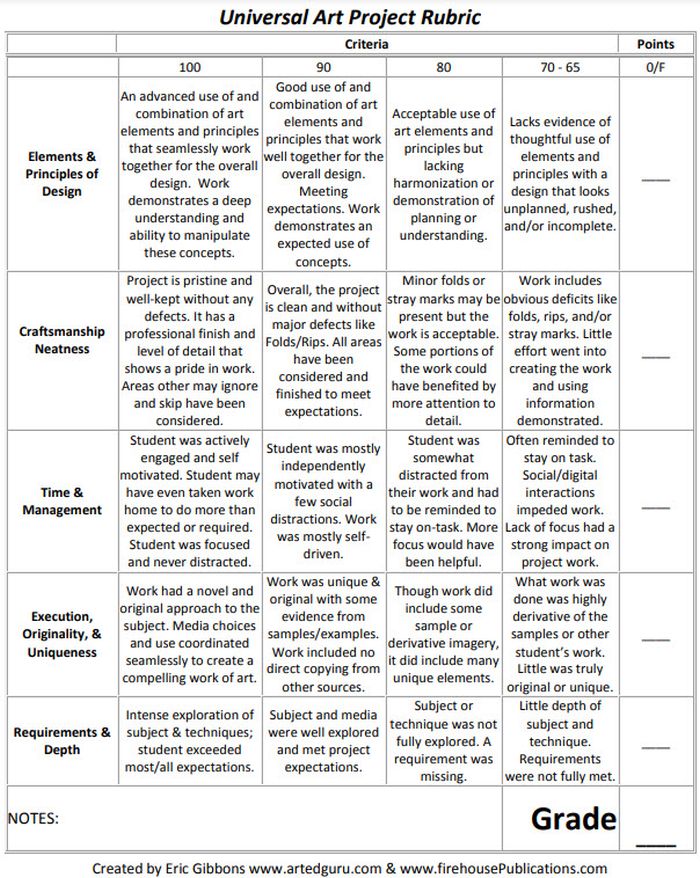

Art Project Rubric

Art is one of those subjects where grading can feel very subjective. Bring some objectivity to the process with a rubric like this.

Source: Art Ed Guru

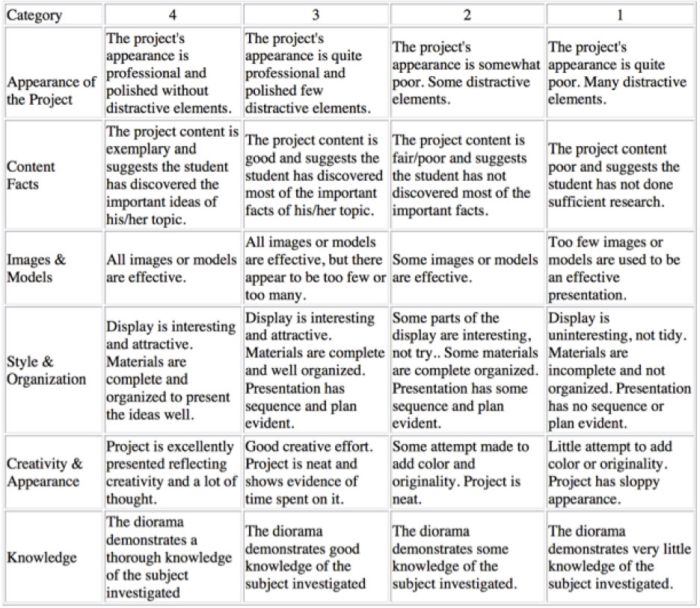

Diorama Project Rubric

You can use diorama projects in almost any subject, and they’re a great chance to encourage creativity. Simplify the grading process and help kids know how to make their projects shine with this scoring rubric.

Learn more: Historyourstory.com

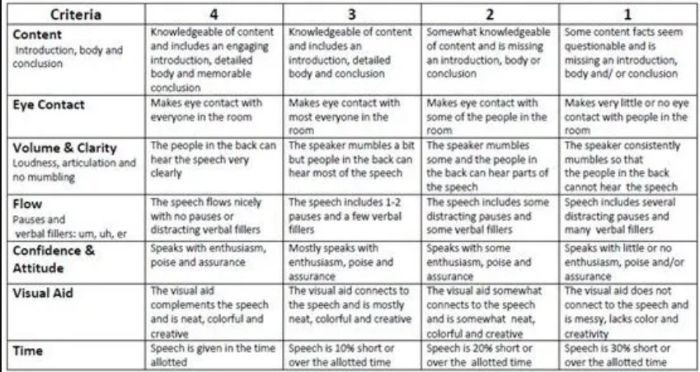

Oral Presentation Rubric

Rubrics are terrific for grading presentations, since you can include a variety of skills and other criteria. Consider letting students use a rubric like this to offer peer feedback too.

Learn more: Bright Hub Education

High School Rubric Examples

In high school, it’s important to include your grading rubrics when you give assignments like presentations, research projects, or essays. Kids who go on to college will definitely encounter rubrics, so helping them become familiar with them now will help in the future.

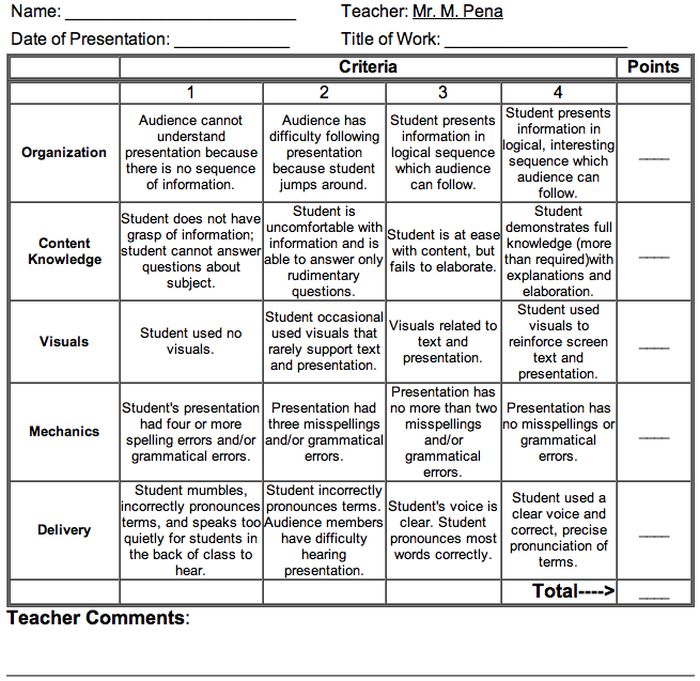

Presentation Rubric

Analyze a student’s presentation both for content and communication skills with a rubric like this one. If needed, create a separate one for content knowledge with even more criteria and indicators.

Learn more: Michael A. Pena Jr.

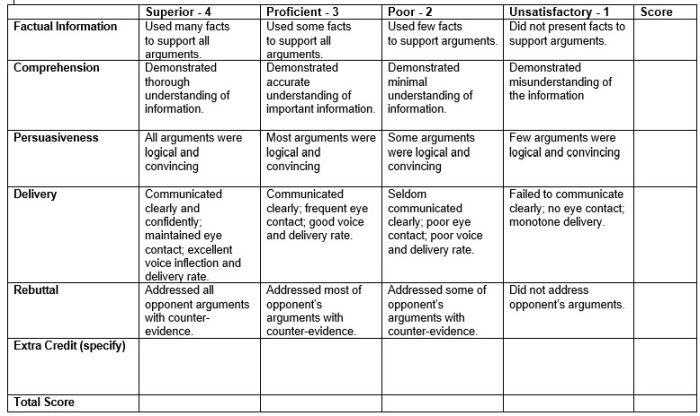

Debate Rubric

Debate is a valuable learning tool that encourages critical thinking and oral communication skills. This rubric can help you assess those skills objectively.

Learn more: Education World

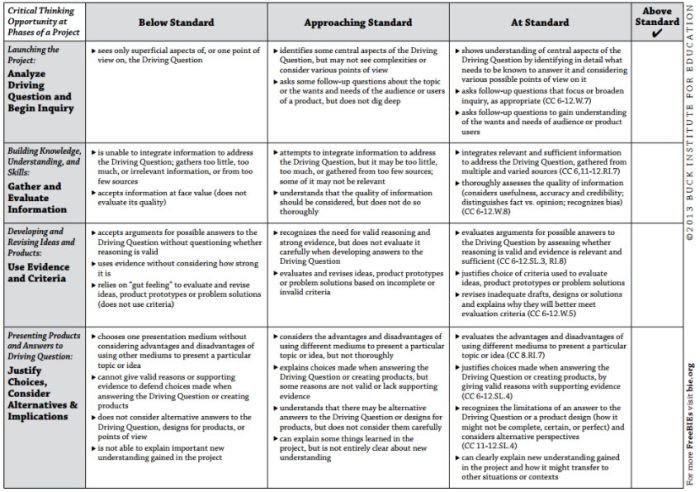

Project-Based Learning Rubric

Implementing project-based learning can be time-intensive, but the payoffs are worth it. Try this rubric to make student expectations clear and end-of-project assessment easier.

Learn more: Free Technology for Teachers

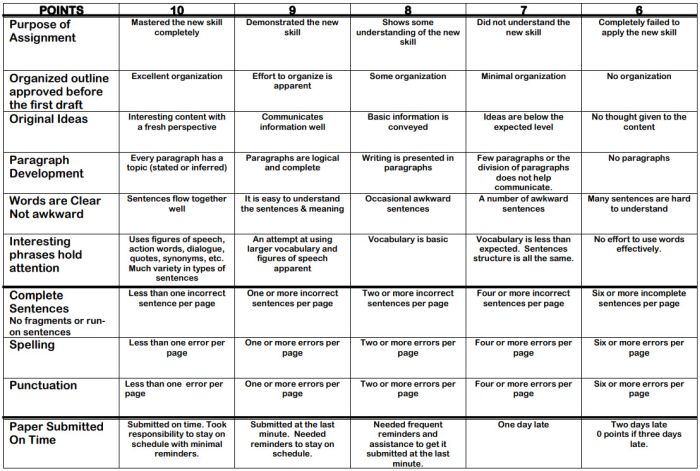

100-Point Essay Rubric

Need an easy way to convert a scoring rubric to a letter grade? This example for essay writing earns students a final score out of 100 points.
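One way to picture the conversion from a 100-point rubric score to a letter grade is a simple cutoff table. The cutoffs below (90/80/70/60) are an assumption for illustration, not taken from the linked rubric; substitute your school's own scale.

```python
# Hypothetical grade scale: (minimum points, letter), highest first.
GRADE_CUTOFFS = [(90, "A"), (80, "B"), (70, "C"), (60, "D")]


def letter_grade(points, cutoffs=GRADE_CUTOFFS):
    """Map a score out of 100 to a letter using the first cutoff it meets."""
    if not 0 <= points <= 100:
        raise ValueError("points must be between 0 and 100")
    for minimum, letter in cutoffs:
        if points >= minimum:
            return letter
    return "F"  # below every cutoff


print(letter_grade(87))
```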

Learn more: Learn for Your Life

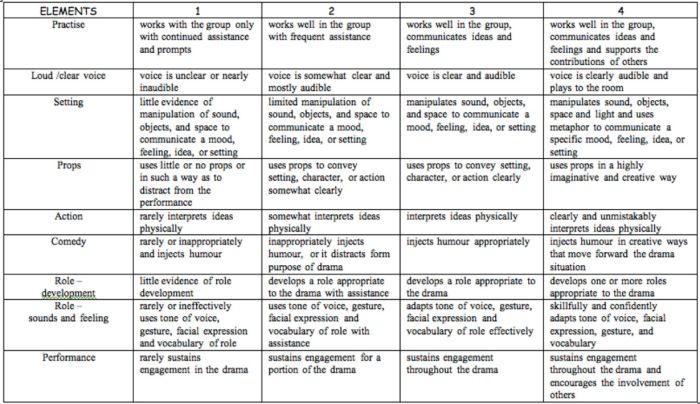

Drama Performance Rubric

If you’re unsure how to grade a student’s participation and performance in drama class, consider this example. It offers lots of objective criteria and indicators to evaluate.

Learn more: Chase March


Copyright © 2023. All rights reserved. 5335 Gate Parkway, Jacksonville, FL 32256

Sample Essay Rubric for Elementary Teachers


- M.S., Education, Buffalo State College

- B.S., Education, Buffalo State College

An essay rubric is a way teachers assess students' essay writing by using specific criteria to grade assignments. Essay rubrics save teachers time because all of the criteria are listed and organized into one convenient paper. If used effectively, rubrics can help improve students' writing.

How to Use an Essay Rubric

- The best way to use an essay rubric is to give the rubric to the students before they begin their writing assignment. Review each criterion with the students and give them specific examples of what you want so they will know what is expected of them.

- Next, assign students to write the essay, reminding them of the criteria and your expectations for the assignment.

- Once students complete the essay, have them first score their own essay using the rubric, and then switch with a partner. (This peer-editing process is a quick way to see how well each student did on the assignment. It's also good practice in giving and receiving criticism and in becoming a more efficient writer.)

- Once peer-editing is complete, have students hand in their essays. Now it is your turn to evaluate the assignment according to the criteria on the rubric. Make sure to offer students examples if they did not meet the criteria listed.

What the World Has Learned From Past Eclipses

Clouds scudded over the small volcanic island of Principe, off the western coast of Africa, on the afternoon of May 29, 1919. Arthur Eddington, director of the Cambridge Observatory in the U.K., waited for the Sun to emerge. The remains of a morning thunderstorm could ruin everything.

The island was about to experience the rare and overwhelming sight of a total solar eclipse. For six minutes, the longest eclipse since 1416, the Moon would completely block the face of the Sun, pulling a curtain of darkness over a thin stripe of Earth. Eddington traveled into the eclipse path to try and prove one of the most consequential ideas of his age: Albert Einstein’s new theory of general relativity.

Eddington, a physicist, was one of the few people at the time who understood the theory, which Einstein proposed in 1915. But many other scientists were stymied by the bizarre idea that gravity is not a mutual attraction, but a warping of spacetime. Light itself would be subject to this warping, too. So an eclipse would be the best way to prove whether the theory was true, because with the Sun’s light blocked by the Moon, astronomers would be able to see whether the Sun’s gravity bent the light of distant stars behind it.

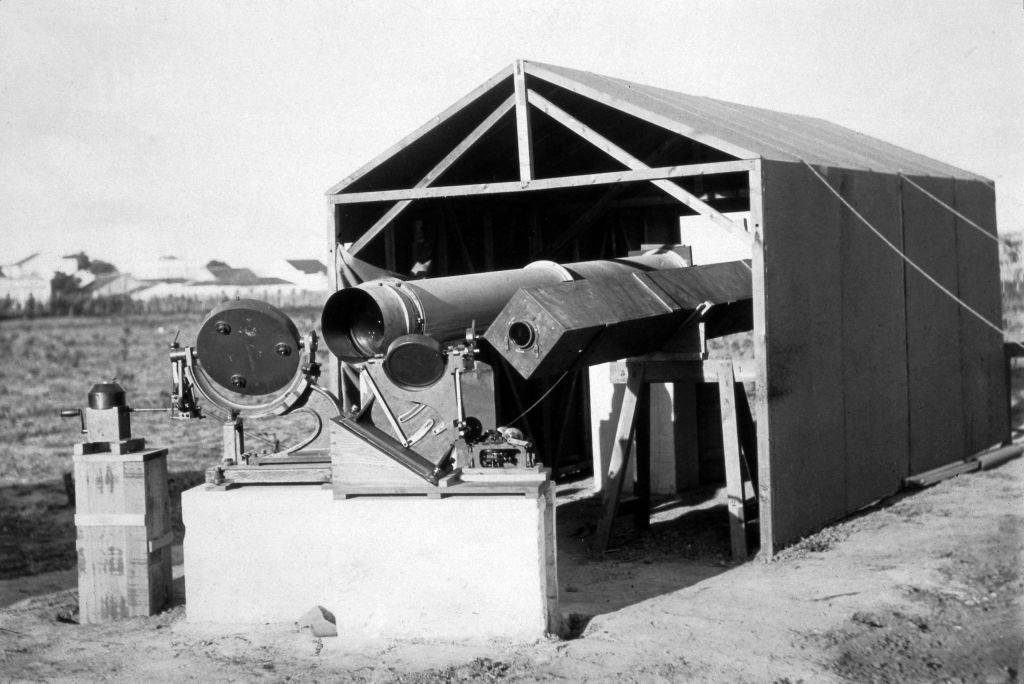

Two teams of astronomers boarded ships steaming from Liverpool, England, in March 1919 to watch the eclipse and take the measure of the stars. Eddington and his team went to Principe, and another team led by Frank Dyson of the Greenwich Observatory went to Sobral, Brazil.

Totality, the complete obscuration of the Sun, would be at 2:13 local time in Principe. Moments before the Moon slid in front of the Sun, the clouds finally began breaking up. For a moment, it was totally clear. Eddington and his group hastily captured images of the Hyades, a star cluster in the constellation Taurus that lay near the Sun that day. The astronomers were using the best astronomical technology of the time, photographic plates, which are large exposures taken on glass instead of film. Stars appeared on seven of the plates, and solar “prominences,” filaments of gas streaming from the Sun, appeared on others.

Eddington wanted to stay in Principe to measure the Hyades when there was no eclipse, but a ship workers’ strike made him leave early. Later, Eddington and Dyson both compared the glass plates taken during the eclipse to other glass plates captured of the Hyades in a different part of the sky, when there was no eclipse. On the images from Eddington’s and Dyson’s expeditions, the stars were not aligned. The 40-year-old Einstein was right.

“Lights All Askew In the Heavens,” the New York Times proclaimed when the scientific papers were published. The eclipse was the key to the discovery—as so many solar eclipses before and since have illuminated new findings about our universe.

To understand why Eddington and Dyson traveled such distances to watch the eclipse, we need to talk about gravity.

Since at least the days of Isaac Newton, who wrote in 1687, scientists thought gravity was a simple force of mutual attraction. Newton proposed that every object in the universe attracts every other object in the universe, and that the strength of this attraction is related to the size of the objects and the distances among them. This is mostly true, actually, but it’s a little more nuanced than that.

On much larger scales, like among black holes or galaxy clusters, Newtonian gravity falls short. It also can’t accurately account for the movement of large objects that are close together, such as how the orbit of Mercury is affected by its proximity to the Sun.

Albert Einstein’s most consequential breakthrough solved these problems. General relativity holds that gravity is not really an invisible force of mutual attraction, but a distortion. Rather than some kind of mutual tug-of-war, large objects like the Sun and other stars respond relative to each other because the space they are in has been altered. Their mass is so great that they bend the fabric of space and time around themselves.


This was a weird concept, and many scientists thought Einstein’s ideas and equations were ridiculous. But others thought it sounded reasonable. Einstein and others knew that if the theory was correct, and the fabric of reality is bending around large objects, then light itself would have to follow that bend. The light of a star in the great distance, for instance, would seem to curve around a large object in front of it, nearer to us—like our Sun. But normally, it’s impossible to study stars behind the Sun to measure this effect. Enter an eclipse.

Einstein’s theory gives an equation for how much the Sun’s gravity would displace the images of background stars. Newton’s theory predicts only half that amount of displacement.
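To put numbers on that factor of two, here is a short Python sketch. It assumes the standard textbook expression for a light ray grazing the Sun's edge, 4GM/(c²R) for general relativity and half that for the Newtonian estimate; the constants are approximate reference values and are not taken from the article.

```python
import math

# Approximate reference values (assumptions, not from the article).
GM_SUN = 1.32712440018e20   # Sun's gravitational parameter, m^3/s^2
C = 299_792_458.0           # speed of light, m/s
R_SUN = 6.957e8             # solar radius, m
ARCSEC_PER_RADIAN = math.degrees(1) * 3600


def einstein_deflection_arcsec(impact_radius_m=R_SUN):
    """General-relativistic bending of a ray passing at the given distance."""
    return 4 * GM_SUN / (C**2 * impact_radius_m) * ARCSEC_PER_RADIAN


def newtonian_deflection_arcsec(impact_radius_m=R_SUN):
    """Newtonian estimate: exactly half the relativistic value."""
    return einstein_deflection_arcsec(impact_radius_m) / 2


print(f"Einstein: {einstein_deflection_arcsec():.2f} arcsec")
print(f"Newton:   {newtonian_deflection_arcsec():.2f} arcsec")
```

For a star seen right at the Sun's limb, this works out to about 1.75 arcseconds under general relativity and about 0.87 under the Newtonian estimate, which shows how small a shift the photographic plates had to resolve.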

Eddington and Dyson measured the Hyades cluster because it contains many stars; the more stars to distort, the better the comparison. Both teams of scientists encountered strange political and natural obstacles in making the discovery, which are chronicled beautifully in the book No Shadow of a Doubt: The 1919 Eclipse That Confirmed Einstein's Theory of Relativity, by the physicist Daniel Kennefick. But the confirmation of Einstein’s ideas was worth it. Eddington said as much in a letter to his mother: “The one good plate that I measured gave a result agreeing with Einstein,” he wrote, “and I think I have got a little confirmation from a second plate.”

The Eddington-Dyson experiments were hardly the first time scientists used eclipses to make profound new discoveries. The idea dates to the beginnings of human civilization.

Careful records of lunar and solar eclipses are one of the greatest legacies of ancient Babylon. Astronomers—or astrologers, really, but the goal was the same—were able to predict both lunar and solar eclipses with impressive accuracy. They worked out what we now call the Saros Cycle, a repeating period of 18 years, 11 days, and 8 hours in which eclipses appear to repeat. One Saros cycle is equal to 223 synodic months, which is the time it takes the Moon to return to the same phase as seen from Earth. They also figured out, though may not have understood it completely, the geometry that enables eclipses to happen.
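The Saros arithmetic above can be checked directly. This Python sketch assumes a mean synodic month of 29.530588 days (a standard modern value, not given in the article) and assumes the 18 calendar years happen to contain four leap days; with five leap days the remainder would be 10 days instead of 11.

```python
SYNODIC_MONTH_DAYS = 29.530588  # mean synodic month, assumed reference value


def saros_length_days(months=223):
    """Length of one Saros cycle in days."""
    return months * SYNODIC_MONTH_DAYS


def as_years_days_hours(total_days, years=18, leap_days=4):
    """Split a day count into calendar years, leftover days, and hours."""
    whole_days = int(total_days)
    hours = (total_days - whole_days) * 24
    days_in_years = years * 365 + leap_days
    return years, whole_days - days_in_years, hours


total = saros_length_days()
years, days, hours = as_years_days_hours(total)
print(f"{total:.2f} days = {years} years, {days} days, {hours:.1f} hours")
```

The result is about 6585.32 days, or 18 years, 11 days, and a little under 8 hours. That leftover third of a day is why each successive eclipse in a Saros series falls roughly a third of the way around the globe from the last.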

The path we trace around the Sun is called the ecliptic. Our planet’s axis is tilted with respect to the ecliptic plane, which is why we have seasons, and why the other celestial bodies seem to cross the same general path in our sky.

As the Moon goes around Earth, it, too, crosses the plane of the ecliptic twice in a year. The ascending node is where the Moon moves into the northern ecliptic. The descending node is where the Moon enters the southern ecliptic. When the Moon crosses a node, a total solar eclipse can happen. Ancient astronomers were aware of these points in the sky, and by the apex of Babylonian civilization, they were very good at predicting when eclipses would occur.

Two and a half millennia later, in 2016, astronomers used these same ancient records to measure the change in the rate at which Earth’s rotation is slowing, which is to say, the amount by which our days are lengthening over thousands of years.

By the middle of the 19th century, scientific discoveries came at a frenetic pace, and eclipses powered many of them. In October 1868, two astronomers, Pierre Jules César Janssen and Joseph Norman Lockyer, separately measured the colors of sunlight during a total eclipse. Each found evidence of an unknown element, indicating a new discovery: helium, named for the Greek god of the Sun. In another eclipse in 1869, astronomers found convincing evidence of another new element, which they nicknamed coronium, before learning a few decades later that it was not a new element but highly ionized iron, indicating that the Sun’s atmosphere is exceptionally, bizarrely hot. This oddity led to the prediction, in the 1950s, of a continual outflow that we now call the solar wind.

And during solar eclipses between 1878 and 1908, astronomers searched in vain for a proposed extra planet within the orbit of Mercury. Provisionally named Vulcan, this planet was thought to exist because Newtonian gravity could not fully describe Mercury’s strange orbit. The matter of the innermost planet’s path was settled, finally, in 1915, when Einstein used general relativity equations to explain it.

Many eclipse expeditions were intended to learn something new, or to prove an idea right—or wrong. But many of these discoveries have major practical effects on us. Understanding the Sun, and why its atmosphere gets so hot, can help us predict solar outbursts that could disrupt the power grid and communications satellites. Understanding gravity, at all scales, allows us to know and to navigate the cosmos.

GPS satellites, for instance, provide accurate measurements down to inches on Earth. Relativity equations account for the effects of the Earth’s gravity and the motion of the satellites relative to their receivers on the ground. General relativity holds that the clocks on satellites, which experience weaker gravity, run faster than clocks under the stronger force of gravity on Earth’s surface, while special relativity holds that the satellites’ orbital speed makes their clocks run slightly slower. The gravitational effect is the larger of the two, so satellite clocks run fast overall. We can use different satellites in different positions, and different ground stations, to accurately triangulate our positions on Earth. Without those calculations, GPS satellites would be far less precise.
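A back-of-the-envelope Python sketch gives a sense of the size of these clock corrections. In the usual textbook treatment, weaker gravity at altitude makes an orbiting clock tick faster, while its orbital speed makes it tick slower. The sketch assumes a circular orbit, ignores Earth's rotation and oblateness, and uses approximate constants that are not from the article.

```python
# Approximate reference values (assumptions, not from the article).
GM_EARTH = 3.986004418e14   # Earth's gravitational parameter, m^3/s^2
C = 299_792_458.0           # speed of light, m/s
R_EARTH = 6.371e6           # mean Earth radius, m
R_ORBIT = 2.656e7           # GPS orbital radius from Earth's center, m
SECONDS_PER_DAY = 86_400


def gps_clock_offsets_us_per_day(r_orbit=R_ORBIT):
    """Return (gravitational, velocity, net) satellite clock drift.

    Values are in microseconds per day relative to a ground clock;
    positive means the satellite clock runs fast.
    """
    # Weaker gravity at altitude: satellite clock gains time.
    gravitational = GM_EARTH / C**2 * (1 / R_EARTH - 1 / r_orbit)
    # Orbital motion: satellite clock loses time (v^2 = GM/r for a circle).
    velocity = -(GM_EARTH / r_orbit) / (2 * C**2)
    to_us_per_day = SECONDS_PER_DAY * 1e6
    return (
        gravitational * to_us_per_day,
        velocity * to_us_per_day,
        (gravitational + velocity) * to_us_per_day,
    )


grav, vel, net = gps_clock_offsets_us_per_day()
print(f"gravity: {grav:+.1f}, speed: {vel:+.1f}, net: {net:+.1f} microseconds/day")
```

Under these assumptions the net drift is roughly 38 microseconds per day. Light travels about 300 meters in a single microsecond, so an uncorrected drift of that size would build up to kilometers of ranging error within a day.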

This year, scientists fanned out across North America and in the skies above it will continue the legacy of eclipse science. Scientists from NASA and several universities and other research institutions will study Earth’s atmosphere; the Sun’s atmosphere; the Sun’s magnetic fields; and the Sun’s atmospheric outbursts, called coronal mass ejections.

When you look up at the Sun and Moon on the eclipse , the Moon’s day — or just observe its shadow darkening the ground beneath the clouds, which seems more likely — think about all the discoveries still yet waiting to happen, just behind the shadow of the Moon.


Environmental Science: Advances

2023 Outstanding Papers published in the Environmental Science journals of the Royal Society of Chemistry

a Hong Kong Baptist University, Hong Kong, China

b Carnegie Mellon University Department of Chemistry, Pittsburgh, PA, USA

c Department of Civil and Resource Engineering, Dalhousie University, Halifax, Nova Scotia, Canada

d Lancaster Environment Centre, Lancaster University, UK

e Universidade Católica Portuguesa, Portugal

f Harvard John A. Paulson School of Engineering and Applied Science, Harvard University, Cambridge, USA

g Department of Civil and Environmental Engineering, Virginia Tech, Blacksburg, Virginia, USA

A graphical abstract is available for this content

- This article is part of the themed collections: Outstanding Papers of 2023 from RSC’s Environmental Science journals and Outstanding Papers 2023 – Environmental Science: Advances


Z. Cai, N. Donahue, G. Gagnon, K. C. Jones, C. Manaia, E. Sunderland and P. J. Vikesland, Environ. Sci.: Adv., 2024, Advance Article, DOI: 10.1039/D4VA90010C

This article is licensed under a Creative Commons Attribution 3.0 Unported Licence. You can use material from this article in other publications without requesting further permissions from the RSC, provided that the correct acknowledgement is given.

Read more about how to correctly acknowledge RSC content.


Computer Science > Computation and Language

Title: Leave No Context Behind: Efficient Infinite Context Transformers with Infini-attention

Abstract: This work introduces an efficient method to scale Transformer-based Large Language Models (LLMs) to infinitely long inputs with bounded memory and computation. A key component in our proposed approach is a new attention technique dubbed Infini-attention. The Infini-attention incorporates a compressive memory into the vanilla attention mechanism and builds in both masked local attention and long-term linear attention mechanisms in a single Transformer block. We demonstrate the effectiveness of our approach on long-context language modeling benchmarks, 1M sequence length passkey context block retrieval and 500K length book summarization tasks with 1B and 8B LLMs. Our approach introduces minimal bounded memory parameters and enables fast streaming inference for LLMs.



Properly Write Your Degree

The correct way to communicate your degree to employers and others is by using the following formats:

Degree - This is the academic degree you are receiving. Your major is in addition to the degree; it can be added to the phrase or written separately. Include the full name of your degree, major(s), minor(s), emphases, and certificates on your resume.

Double Majors - You will not be receiving two bachelor's degrees if you double major. Your primary major determines the degree (Bachelor of Arts or Bachelor of Science). If you're not fully sure which of your majors is primary, check CheckMarq or call the registrar's office.

Example: Primary Major: Psychology ; Secondary Major: Marketing

- Bachelor of Arts Degree in Psychology & Marketing

Primary Major: Marketing ; Secondary Major: Psychology

- Bachelor of Science Degree in Marketing & Psychology

In a letter, you may shorten your degree by writing it this way:

- In May 20XX, I will graduate with my Bachelor's degree in International Affairs.

- In December 20XX, I will graduate with my Master's degree in Counseling Education.

Not sure which degree you are graduating with? Here is a list of Undergraduate Majors and corresponding degrees:

- College of Arts & Sciences

- College of Business Administration

- College of Communication

- College of Education

- College of Engineering

- College of Health Sciences

- College of Nursing


Marquette University Holthusen Hall, First Floor Milwaukee, WI 53233 Phone: (414) 288-7423


© 2024 Marquette University

2024 Ethics Essay Contest winners announced

Claire Martino, a junior from New Berlin, Wis., majoring in applied mathematics and data science, is the winner of the 2024 Ethics Essay Contest for the essay "Artificial Intelligence Could Probably Write This Essay Better than Me."

The second place entry was from Morgan J. Janes, a junior from Rock Island, Ill., majoring in biology, for the essay "The Relevant History and Medical and Ethical Future Viability of Xenotransplantation."

Third place went to Alyssa Scudder, a senior from Lee, Ill., majoring in biology, for the essay "The Ethicality of Gene Alteration in Human Embryos."

Dr. Dan Lee announced the winners on behalf of the board of directors of the Augustana Center for the Study of Ethics, sponsor of the contest. The winner will receive an award of $100, the second-place winner an award of $50, and the third-place winner an award of $25.

Honorable mentions went to Grace Palmer, a senior art and accounting double major from Galesburg, Ill., for the essay "The Ethiopian Coffee Trade: Is Positive Change Brewing?" and Sarah Marrs, a sophomore from Carpentersville, Ill., majoring in political science and women, gender and sexuality studies, for the essay "Dating Apps as an Outlet to Promote Sexual Autonomy among Disabled Individuals: an Intersectional Approach to Change."

The winning essays will be published in Augustana Digital Commons.

The Augustana Center for the Study of Ethics was established to enrich the teaching-learning experiences for students by providing greater opportunities for them to meet and interact with community leaders and to encourage discussions of issues of ethical significance through campus programs and community outreach.

Dr. Lee, whose teaching responsibilities since joining the Augustana faculty in 1974 have included courses in ethics, serves as the center's director.



Contents. All required information is discerned with clarity and precision and contains all items listed in Meets category. Contains: application, abstract, research paper, lab report, observation log, reflective essay, guide and rubrics. Contains 5 - 6 of criteria for meets; and /or poorly organized.

Inadequate. Information from the text or science activity is incomplete, inaccurate, and/or confusing. Information is presented poorly. The writing demonstrates lack of understanding. Writing is unorganized and fragmented. Conjectures are incomplete, vague and/or unsupported. 3-34. Elementary CORE Academy 2004.

If an assignment prompt is clearly addressing each of these elements, then students know what they're doing, why they're doing it, and when/how/for whom they're doing it. From the standpoint of a rubric, we can see how these elements correspond to the criteria for feedback: 1. Purpose. 2. Genre.

Examples of Rubric Creation. Creating a rubric takes time and requires thought and experimentation. Here you can see the steps used to create two kinds of rubric: one for problems in a physics exam for a small, upper-division physics course, and another for an essay assignment in a large, lower-division sociology course.

Expository 5-Paragraph science essay. Expository 5-Paragraph Essay Final. Guidelines for scoring an expository essay. Rubric Code: M57388. By ccolt01. Ready to use. Public Rubric. Subject: Science. Type: Writing.

Science Essay Rubric Science Essay with Bibliography Rubric Code: Q22WBA6. By mldonaldson Ready to use Public Rubric Subject: Science Type: Writing Grade Levels: 9-12 Desktop Mode Mobile Mode Science Essay Students will have to write a five paragraph essay. ...

Basic science essay rubric Rubric Code: V223366. By ambikasharath Ready to use Public Rubric Subject: Biology Type: Assignment Grade Levels: 9-12 Desktop Mode Mobile Mode Enter rubric title No evidence ...

Rubric for Science Writing Advanced (12) (10) (9) Basic (8) Addresses the prompt completely Addresses the prompt completely ... Uses accurate science vocabulary to appropriately support ideas Uses some science vocabulary to support ideas; at times may be inaccurate Missing science vocabulary and/or inaccurate usage of the vocabulary ...

Five-Paragraph Essay Writing Rubric. Thesis statement/topic idea sentence is clear, correctly placed, and restated in the closing sentence. Your three supporting ideas are briefly mentioned. Thesis statement/topic idea sentence is either unclear or incorrectly placed, and it's restated in the closing sentence.

The essay is longer than 1 single spaced page, and there is only 1 citation from the reading to support the author's position. Some spelling and/or grammar errors are seen. Level 2 (Below average) - The essay fails to discuss the process of science in the development

Our science rubrics have four levels of performance: Novice, Apprentice, Practitioner (meets the standard), and Expert. Exemplars uses two types of rubrics: Standards-Based Assessment Rubrics are used by teachers to assess student work in science. (Exemplars science material includes both a general science rubric as well as task-specific ...

A rubric is a scoring tool that identifies the different criteria relevant to an assignment, assessment, or learning outcome and states the possible levels of achievement in a specific, clear, and objective way. Use rubrics to assess project-based student work including essays, group projects, creative endeavors, and oral presentations.

My hypothesis was teaching students to use a rubric to write and assess expository essay responses would produce higher quality essays than if a writing rubric was not used for instruction. My hypothesis was based on previous experiences I have had using a writing rubric in my science classes and the time I served on an elementary school 6

Essay Rubric Directions: Your essay will be graded based on this rubric. Consequently, use this rubric as a guide when writing your essay and check it again before you submit your essay. Traits 4 3 2 1 Focus & Details There is one clear, well-focused topic. Main ideas are clear and are well supported by detailed and accurate information.

Grading rubrics can be of great benefit to both you and your students. For you, a rubric saves time and decreases subjectivity. Specific criteria are explicitly stated, facilitating the grading process and increasing your objectivity. For students, the use of grading rubrics helps them to meet or exceed expectations, to view the grading process ...

Rubrics with Science Assessments. As Wisconsin works toward new three-dimensional standards and assessments, educators will need to develop a clear picture of what proficient student performance looks like throughout the three dimensions. Several types of rubrics can be effective tools for mapping out what students should know and be able to do. Rubrics Resources and Examples: Article on typical ...

Try this rubric to make student expectations clear and end-of-project assessment easier. Learn more: Free Technology for Teachers. 100-Point Essay Rubric. Need an easy way to convert a scoring rubric to a letter grade? This example for essay writing earns students a final score out of 100 points. Learn more: Learn for Your Life. Drama ...
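Converting a scoring rubric to a score out of 100 and then to a letter grade, as described above, can be pictured with a minimal Python sketch. Everything specific here is an assumption for illustration: the four criterion names, the 1-4 level scale, and the 90/80/70/60 letter cutoffs are hypothetical and are not taken from the linked rubric.

```python
def rubric_to_percent(scores, max_level=4):
    """Scale per-criterion rubric levels to a score out of 100.

    `scores` maps each criterion name to the level awarded (1..max_level).
    """
    if not scores:
        raise ValueError("scores must not be empty")
    earned = sum(scores.values())
    possible = max_level * len(scores)
    return round(100 * earned / possible, 1)


def letter_grade(percent):
    """Map a percentage to a letter grade using hypothetical cutoffs."""
    for cutoff, letter in ((90, "A"), (80, "B"), (70, "C"), (60, "D")):
        if percent >= cutoff:
            return letter
    return "F"


# Hypothetical essay scored on four criteria, each on a 1-4 scale.
scores = {"focus": 4, "organization": 3, "evidence": 3, "conventions": 2}
percent = rubric_to_percent(scores)
print(percent, letter_grade(percent))  # prints: 75.0 C
```

This sketch weights every criterion equally. A rubric that weights criteria differently would need a per-criterion multiplier before the sum.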

Sample Essay Rubric for Elementary Teachers. An essay rubric is a way teachers assess students' essay writing by using specific criteria to grade assignments. Essay rubrics save teachers time because all of the criteria are listed and organized into one convenient paper. If used effectively, rubrics can help improve students' writing.

Created by Mother Daughter Duo. Includes: Unit Teacher Guide (materials needed, explanation, standards), Student Packet (all experiment components, all argumentative essay components), and Assessment Rubrics. This unit is designed to lead students through the Next Generation Science Standards for 5th grade.

This file contains a sample rubric used in a Grade Four Science classroom. You can use this file as a guide for your own classroom. The rubric contains descriptions of the type of work required to receive a Level 1 - 4 grade. The bottom portion of the rubric also allows for constructive feedback.

Bachelor of Science Holistic Review for Admission Composite Rubric. 1. Quantitative. Academic Metrics; 50%. ... Essay Rubric Score (12 points); Essay Rubric Score (8 points); Serial Short Interviews (SSIs); Attributes and Experiences: Civility, Integrity, Leadership & Collaboration,

ESSAY RUBRIC. Criteria are rated Excellent, Acceptable, or Poor. Content, Excellent (10 points): provides relevant ideas to the posted question; content is engaging and original. Content, Acceptable (7-9 points): ideas are relevant to the posted question; content is appropriate and original.
