
Illustration by Ben Boothman

Trailblazing initiative marries ethics, tech

Christina Pazzanese

Harvard Staff Writer

Computer science, philosophy faculty ask students to consider how systems affect society

First in a four-part series that taps the expertise of the Harvard community to examine the promise and potential pitfalls of the rising age of artificial intelligence and machine learning, and how to humanize it.

For two decades, the flowering of the Digital Era has been greeted with blue-skies optimism, defined by an unflagging belief that each new technological advance, whether more powerful personal computers, faster internet, smarter cellphones, or more personalized social media, would only enhance our lives.


But public sentiment has curdled in recent years with revelations about Silicon Valley firms and online retailers collecting and sharing people’s data, social media gamed by bad actors spreading false information or sowing discord, and corporate algorithms using opaque metrics that favor some groups over others. These concerns multiply as artificial intelligence (AI) and machine-learning technologies, which made possible many of these advances, quietly begin to nudge aside humans, assuming greater roles in running our economy, transportation, defense, medical care, and personal lives.

“Individuality … is increasingly under siege in an era of big data and machine learning,” says Mathias Risse, Littauer Professor of Philosophy and Public Administration and director of the Carr Center for Human Rights Policy at Harvard Kennedy School. As part of its growing focus on the ways technology is reshaping the future of human rights, the center invites scholars, along with leaders from the private and nonprofit sectors who work on ethics and AI, to engage with students.

Building more thoughtful systems

Even before the technology field belatedly began to respond to market and government pressures with promises to do better, it had become clear to Barbara Grosz, Higgins Research Professor of Natural Sciences at the Harvard John A. Paulson School of Engineering and Applied Sciences (SEAS), that the surest way to get the industry to act more responsibly is to prepare the next generation of tech leaders and workers to think more ethically about the work they’ll be doing. The result is Embedded EthiCS, a groundbreaking program that marries the disciplines of computer science and philosophy in an attempt to create change from within.

The timing seems on target, since the revolutionary technologies of AI and machine learning have begun making inroads in an ever-broadening range of domains and professions. In medicine, for instance, AI systems are expected soon to work effectively alongside physicians to provide better healthcare. In business, tech giants like Google, Facebook, and Amazon have been using smart technologies for years, but use of AI is now spreading rapidly, with global corporate spending on AI software and platforms expected to reach $110 billion by 2024.


So where are we now on these issues, and what does that mean? To answer those questions, this Gazette series will examine emerging technologies in medicine and business, with the help of various experts in the Harvard community. We’ll also take a look at how the humanities can help inform the future coordination of human values and AI efficiencies through University efforts such as the AI+Art project at metaLAB(at)Harvard and Embedded EthiCS.

In spring 2017, Grosz recruited Alison Simmons, the Samuel H. Wolcott Professor of Philosophy, and together they founded Embedded EthiCS. The idea is to weave philosophical concepts and ways of thinking into existing computer science courses so that students learn to ask not simply “Can I build it?” but rather “Should I build it, and if so, how?”

Through Embedded EthiCS, students learn to identify and think through ethical issues, explain their reasoning for taking, or not taking, a specific action, and ideally design more thoughtful systems that reflect basic human values. The program is the first of its kind nationally and is seen as a model for a number of other colleges and universities that plan to adapt it, including Massachusetts Institute of Technology and Stanford University.

In recent years, computer science has become the second most popular concentration at Harvard College, after economics. About 2,750 students have enrolled in Embedded EthiCS courses since it began. More than 30 courses, including all classes in the computer science department, participated in the program in spring 2019.


“We don’t need all courses; what we need is for enough students to learn to use ethical thinking during design to make a difference in the world and to start changing the way computing technology company leaders, systems designers, and programmers think about what they’re doing,” said Grosz.

Just a few years ago, it became clear that Harvard’s computer science students wanted and needed something more. At the time, Grosz was teaching “Intelligent Systems: Design and Ethical Challenges,” one of only two CS courses that had integrated ethics into the syllabus.

During a class discussion about Facebook’s infamous 2014 experiment covertly engineering news feeds to gauge how users’ emotions were affected, students were outraged by what they viewed as the company’s improper psychological manipulation. But just two days later, in a class activity in which students were designing a recommender system for a fictional clothing manufacturer, Grosz asked what information they thought they’d need to collect from hypothetical customers.

“It was astonishing,” she said. “How many of the groups talked about the ethical implications of the information they were collecting? None.”

When she taught the course again, only one student said she thought about the ethical implications, but felt that “it didn’t seem relevant,” Grosz recalled.

“You need to think about what information you’re collecting when you’re designing what you’re going to collect, not collect everything and then say ‘Oh, I shouldn’t have this information,’” she explained.

Making it stick

Seeing how quickly even students concerned about ethics forgot to consider them when absorbed in a technical project prompted Grosz to focus on how to help students keep ethics front and center. Some empirical work shows that standalone ethics courses don’t tend to stick with engineers, and she was also concerned that a single ethics course would not satisfy growing student interest. Grosz and Simmons designed the program to intertwine the ethical with the technical, helping students better understand the relevance of ethics to their everyday work.

In a broad range of Harvard CS courses now, philosophy Ph.D. students and postdocs lead modules on ethical matters tailored to the technical concepts being taught in the class.

“We want the ethical issues to arise organically out of the technical problems that they’re working on in class,” said Simmons. “We want our students to recognize that technical and ethical challenges need to be addressed hand in hand. So a one-off course on ethics for computer scientists would not work. We needed a new pedagogical model.”

Examples of ethical problems that courses are tackling

Are software developers morally obligated to design for inclusion?

Should social media companies suppress the spread of fake news on their platforms?

Should search engines be transparent about how they rank results?

Should we think about electronic privacy as a right?

Getting comfortable with a humanities-driven approach to learning, using the ideas and tools of moral and political philosophy, has been an adjustment for the computer-science instructors as well as students, said David Grant, who taught as an Embedded EthiCS postdoc in 2019 and is now assistant professor of philosophy at the University of Texas at San Antonio.

“The skill of ethical reasoning is best learned and practiced through open and inclusive discussion with others,” Grant wrote in an email. “But extensive in-class discussion is rare in computer science courses, which makes encouraging active participation in our modules unusually challenging.”

Students are used to being presented problems for which there are solutions, program organizers say. But in philosophy, issues or dilemmas become clearer over time, as different perspectives are brought to bear. And while sometimes there can be right or wrong answers, solutions are typically thornier and require some difficult choices.

“This is extremely hard for people who are used to finding solutions that can be proved to be right,” said Grosz. “It’s fundamentally a different way of thinking about the world.”

“They have to learn to think with normative concepts like moral responsibility and legal responsibility and rights. They need to develop skills for engaging in counterfactual reasoning with those concepts while doing algorithm and systems design,” said Simmons. “We in the humanities problem-solve too, but we often do it in a normative domain.”


The importance of teaching students to consider societal implications of computing systems was not evident in the field’s early days, when there were only a very small number of computer scientists, systems were used largely in closed scientific or industry settings, and there were few “adversarial attacks” by people aiming to exploit system weaknesses, said Grosz, a pioneer in the field. Fears about misuse were minimal because so few had access.

But as the technologies have become ubiquitous in the past 10 to 15 years, with more and more people worldwide connecting via smartphones, the internet, and social networking, and as machine learning and big data computing have been rapidly applied since 2012, the need for ethical training has become urgent. “It’s the penetration of computing technologies throughout life and its use by almost everyone now that has enabled so much that’s caused harm lately,” said Grosz.

That early lack of concern has contributed to the perceived disconnect between science and the public. “We now have a gap between those of us who make technology and those of us who use it,” she said.

Simmons and Grosz said that while computer science concentrators leaving Harvard and other universities for jobs in the tech sector may have the desire to change the industry, until now they haven’t been furnished with the tools to do so effectively. The program hopes to arm them with an understanding of how to identify and work through potential ethical concerns that may arise from new technology and its applications.

“What’s important is giving them the knowledge that they have the skills to make an effective, rational argument with people about what’s going on,” said Grosz, “to give them the confidence … to [say], ‘This isn’t right — and here’s why.’”


A winner of the Responsible CS Challenge in 2019, the program received a $150,000 grant for its work in technology education. The grant helps fund two computer science postdoc positions whose holders collaborate with the philosophy student-teachers in developing the course modules.

Though still young, the program has also had some welcome side effects, with faculty and graduate students from two typically distant cohorts learning from each other in unusual ways. And for the philosophy students there’s been an unexpected boon: working on ethical questions at technology’s cutting edge. It has changed the course of their research and opened up new career options in the growing field of engaged ethics.


“It is exciting. It’s an opportunity to make use of our skills in a way that might have a visible effect in the near- or midterm,” said philosophy lecturer Jeffrey Behrends, one of the program’s co-directors.

Will this ethical training reshape the way students approach technology once they leave Harvard and join the workforce? That’s the critical question to which the program’s directors are now turning their attention. There isn’t enough data to know yet, and the key components for such an analysis, like tracking down students after they’ve graduated to measure the program’s impact on their work, present a “very difficult evaluation problem” for researchers, said Behrends, who is investigating how best to measure long-term effectiveness.

Ultimately, whether stocking the field with ethically trained designers, technicians, executives, investors, and policymakers will bring about a more responsible and ethical era of technology remains to be seen. But leaving the industry to police itself, or waiting for market forces to guide reforms, clearly hasn’t worked so far.

“Somebody has to figure out a different incentive mechanism. That’s where really the danger still lies,” said Grosz of the industry’s intense profit focus. “We can try to educate students to do differently, but in the end, if there isn’t a different incentive mechanism, it’s quite hard to change Silicon Valley practice.”

Next: Ethical concerns rise as AI takes an ever larger decision-making role in many industries.


- Couple and Family Social Work

- Developmental and Physical Disabilities Social Work

- Direct Practice and Clinical Social Work

- Emergency Services

- Human Behaviour and the Social Environment

- International and Global Issues in Social Work

- Mental and Behavioural Health

- Social Justice and Human Rights

- Social Policy and Advocacy

- Social Work and Crime and Justice

- Social Work Macro Practice

- Social Work Practice Settings

- Social Work Research and Evidence-based Practice

- Welfare and Benefit Systems

- Browse content in Sociology

- Childhood Studies

- Community Development

- Comparative and Historical Sociology

- Economic Sociology

- Gender and Sexuality

- Gerontology and Ageing

- Health, Illness, and Medicine

- Marriage and the Family

- Migration Studies

- Occupations, Professions, and Work

- Organizations

- Population and Demography

- Race and Ethnicity

- Social Theory

- Social Movements and Social Change

- Social Research and Statistics

- Social Stratification, Inequality, and Mobility

- Sociology of Religion

- Sociology of Education

- Sport and Leisure

- Urban and Rural Studies

- Browse content in Warfare and Defence

- Defence Strategy, Planning, and Research

- Land Forces and Warfare

- Military Administration

- Military Life and Institutions

- Naval Forces and Warfare

- Other Warfare and Defence Issues

- Peace Studies and Conflict Resolution

- Weapons and Equipment

- < Previous

- Next chapter >

1 The History of Digital Ethics

Vincent C. Müller, A. v. Humboldt Professor for Theory and Ethics of AI, Friedrich-Alexander Universität Erlangen-Nürnberg

- Published: 19 December 2022


Digital ethics, also known as computer ethics or information ethics, is now a lively field that draws a lot of attention. But what were the developments that led to its existence and present state? What are the traditions, concerns, and technological and social developments that guided digital ethics? How did ethical issues change with the digitalization of human life? How did the traditional discipline of philosophy respond, and how was ‘applied ethics’ influenced by these developments? This chapter proposes to view the history of digital ethics in three phases: pre-digital modernity (before the invention of digital technology), digital modernity (with digital technology but analogue lives), and digital post-modernity (with digital technology and digital lives). For each phase, the developments in digital ethics are explained against the background of the technological and social conditions of the time. Finally, a brief outlook is provided.

Introduction

The history of digital ethics as a field was strongly shaped by the development and use of digital technologies in society. Digital ethics often mirrors the ethical concerns raised by the pre-digital technologies that were replaced, but in more recent times, digital technologies have also posed questions that are truly new. When ‘data processing’ became a more common activity in industry and public administration in the 1960s, ethicists were concerned with old issues like privacy, data security, and power through information access. Today, digital ethics involves old issues that took on a new quality due to digital technology, such as surveillance, news, or dating, but it also covers new issues that did not exist at all, such as automated weapons, search engines, automated decision-making, and existential risk from artificial intelligence (AI).

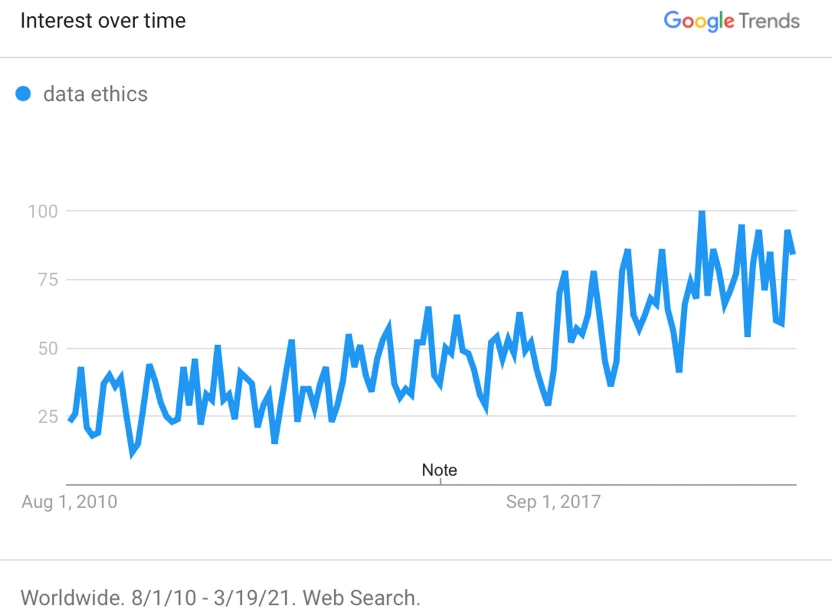

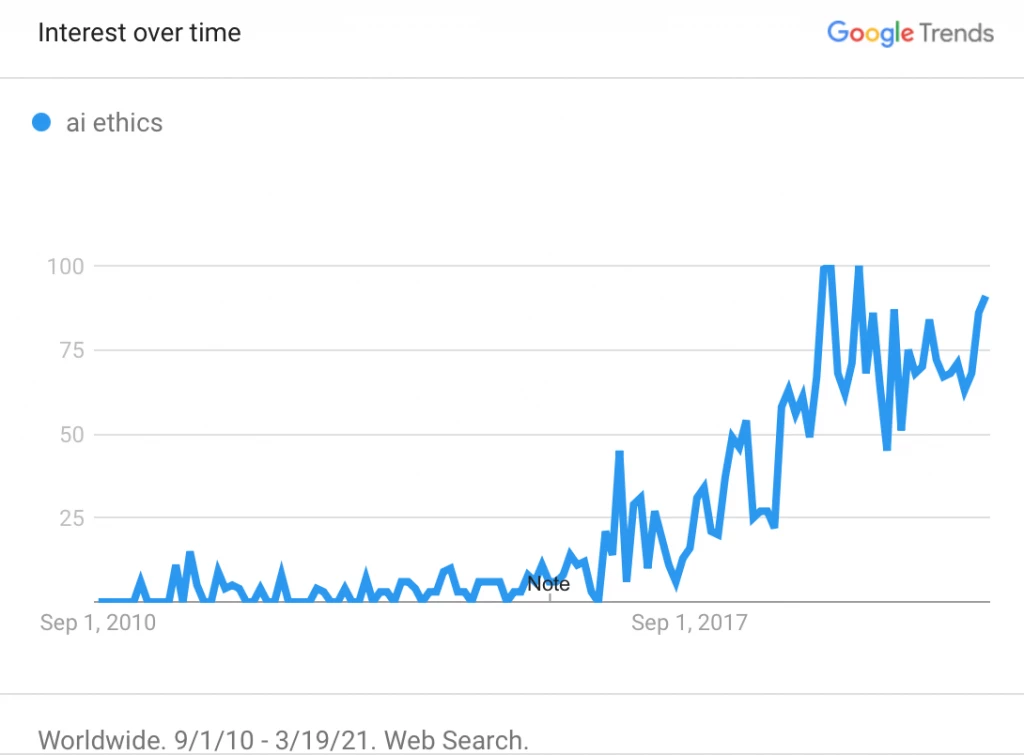

The terms used to name the expanding discipline have also changed over time: we started with ‘computer ethics’ (Bynum 2001; Johnson 1985; Vacura 2015), then more abstract terms like ‘information ethics’ were proposed (Floridi 1999), and now some use the term ‘digital ethics’ (Capurro 2010), as this Handbook does. We also have digital ethics for particular areas, such as ‘the ethics of AI’, ‘data ethics’, ‘robot ethics’, etc.

There are reasons for these changes. ‘Computer ethics’ now sounds dated because it focuses attention on the machines, which made good sense when computers were visible, big boxes, but began to make less sense when many technical devices invisibly included computing and the location of the processor became irrelevant. The more ambitious notion of ‘information ethics’ involves a digital ontology (Capurro 2006) and faces a significant challenge in explaining the role of the notion of ‘information’; see Floridi (1999) versus Floridi and Taddeo (2016). Also, the term ‘information ethics’ is sometimes used in contexts in which information is not computed, for example, in ‘library and information science’. Occasionally, one hears the term ‘cyberethics’ (Spinello 2020), which specifically deals with the connected ‘cyberspace’ (probably now an outdated term, at least outside the military). Amid this confusion, some people use ‘digital’ as the new term, which captures the most relevant phenomena and moves attention away from the machinery to its use. One might argue that the process of ‘computing’ is still fundamental, but we will probably soon care less about whether a device uses computing (analogue or digital), just as we do not care much which energy source the engine in a car uses. The notion of ‘data’ will continue to make sense, but, in the future, I suspect that terms like ‘computing’ and ‘digital’ will simply merge into ‘technology’.

Given that this Handbook already has articles on the current state of the art, this article tries to provide historical context by tracing debates across three periods: the early days of information technology (IT), from the 1940s to the 1970s, when IT was an expensive technology available only in well-funded central ‘computation centres’; roughly the 1980s to the early 2000s, with networked personal computers entering offices and households; and, finally, the past fifteen years or so, with ‘smart’ phones and other ‘smart’ devices being used privately, for new purposes that emerge with the devices.

This article is structured around two ideas, namely, that (a) technology drives ethics and (b) many issues that are now part of ‘digital ethics’ predate digital technology. There is a certain tension between these two ideas, however, so the discussion will try to disentangle when and in what sense ‘technology drives ethics’ (e.g. by posing new problems, by revealing old ones, or even by effecting ethical change) and when that ‘drive’ is specific to ‘digital’ (computing) technology. I start on the assumption that (b) is true, so the article must begin before the invention of digital technology, in fact, even before the invention of writing. We will return to these two ideas in the conclusion.

I propose to divide this history into three main sections: pre-digital modernity (before the invention of digital technology), digital modernity (with digital technology but analogue lives), and digital post-modernity (with digital technology and digital lives). The hope is that this organization matches the social developments of these periods, but I make no claim that the terminology used here is congruent with a standard history of digital society. In each section, we will briefly look at the technology and then at digital ethics. Finally, it should be mentioned that there are significant research desiderata in the field: a detailed history of digital ethics, and indeed of applied or practical ethics, is yet to be written.

Pre-digital modernity: Talking and writing

Technology and society

A fair proportion of the concerns of classical digital ethics are about informational privacy, information security, power through information, etc. These issues existed long before the computing age, in fact before writing was invented—after all, they also feature in village gossip.

One significant step in this timeline, however, was the beginning of symbols and iconic representations, from cave paintings onwards (cf. Sassoon and Gaur 1997). These allowed records to be kept that, unlike speech, do not immediately vanish, and some of which can be transported to another place. It may be useful to differentiate (a) representation for someone, or intentional representation, and (b) representation per se, when something represents something else because that is its function in a system (assuming this is possible without intentional states). The word ‘tree’, pronounced by someone, is an intentional representation (type a); the non-linguistic representation of a tree in the brain of an organism that sees the tree is a non-intentional representation (type b) (Müller 2007). Evidently, one major step that is relevant for digital ethics was the invention and use of writing, for the representation of natural language but also for mathematics and other purposes. Symbols in writing are already digital; that is, they have sharp boundaries with no intermediate stages (something is either an ‘A’ or a ‘B’; it cannot be a bit of both), and they are perfectly reproducible: one can write the exact same word or sentence more than once.

In a further step, the replication of writing and images in print multiplies their impact: what is printed can be transported, remembered, and read by many people, and it can more easily become part of the cultural heritage. A further major step is the transmission of speech and symbols over large distances and then to larger audiences through telegraph, mail, radio, and TV. Suddenly, a single person speaking could be heard and even seen by millions of others around the globe, even in real time.

There is a significant body of ethical and legal discussion on pre-digital information handling, especially after the invention of writing, printing, and mass communication. Much of it is still law today, such as the privacy of letters and other written communication, press laws, and laws on libel (defamation). The privacy of letters was legally protected in the early days of postal services, in the early eighteenth century, for example, in the ‘Prussian New Postal Order’ of 1712 (Matthias 1812: 54). Remarkably, several of these laws have lost their teeth in the digital era without explicit legal change. For example, email is often not protected by the privacy of letters, and online publications are often not covered by press law.

The central issue of privacy, often connected with ‘data protection’, emerged around 1900 (Warren and Brandeis 1890), developed into a field (Hoffman 1973; Martin 1973; Westin 1968), and is still a central topic of discussion today, in work ranging from classical surveillance (Macnish 2017), governance (Bennett and Raab 2003), and ethical analysis (Roessler 2017; van den Hoven et al. 2020) to analysis for activism (Véliz 2020). This is an area where the law has not kept pace with technical developments well enough to preserve its original intentions; it is not even clear that these intentions are still politically desired.

The power of information and misinformation was well understood after the invention of printing, but especially after the invention of mass media like radio and TV and their use in propaganda; media studies and media ethics became standard academic fields after the Second World War. Media ethics is still an important aspect of digital ethics (Ess 2014), especially with regard to the ‘public sphere’ (Habermas 1962).

Apart from this tradition of more ‘societal’ ethics, a more ‘personal’ ethics of professional responsibility also started in this area, and it had an impact in the digital era. The American Institute of Electrical Engineers (AIEE), one of the predecessors of today’s influential Institute of Electrical and Electronics Engineers (IEEE), adopted its first ‘Principles of Professional Conduct for the Guidance of the Electrical Engineer’ in 1912 (AIEE 1912). ‘Engineering ethics’ is thus older than the ethics of computing, but, interestingly, the electrical and telephone industries in the United States managed to get an exception to the requirement that engineers hold a professional engineer (PE) licence. This move may have had a far-reaching impact on the computer science of today, which usually does not see itself as a discipline of engineering bound by the ethos of engineers, though some computer scientists would like to achieve recognition as a profession and thus the ethos of ‘being a good engineer’ (in many countries, engineering has high status and computer science degrees are ‘diplomas in engineering’).

Up to this point, we see the main ethical themes of privacy and data security, power of information, and professional responsibility.

Digital modernity: Digital ethics in IT

As a rough starting point in this part of the timeline, one should take the first design for a universal computer, Babbage’s ‘Analytical Engine’, in about 1840. The first actual universal computer became feasible only when computers could use electronic parts, starting with Zuse’s Z3 in 1941, followed by the independently developed ENIAC in 1945, the Manchester Mark I in 1949, and then many more machines, mostly due to military funding (Ifrah 1981). All major computers since then have been electronic universal digital computers with stored programs. Shortly after the Second World War came the beginnings of the science of ‘informatics’ with ‘cybernetics’ (Ashby 1956; Wiener 1948) and C.E. Shannon’s ‘A Mathematical Theory of Communication’ (Shannon 1948). In 1956, J. McCarthy, M.L. Minsky, N. Rochester, and C.E. Shannon organized the Dartmouth conference on ‘Artificial Intelligence’, a term they had coined in their proposal for it (McCarthy et al. 1955). Less than ten years later, H. Simon predicted, ‘Machines will be capable, within 20 years, of doing any work that a man can do’ (Simon 1965: 96). In 1971, the first microprocessors appeared, integrating a computer’s entire central processing unit on a single chip. This technology effectively started the modern computer era. Up to that point, computers had been big and very expensive devices, used only by large corporations, research centres, or public entities for ‘data processing’; from the 1980s, ‘personal computers’ were possible (and had to be labelled as such).

Ray Kurzweil has summarized the development from the Second World War to the present with characteristic panache:

Computers started out as large remote machines in air-conditioned rooms tended by white coated technicians. Subsequently they moved onto our desks, then under our arms, and now in our pockets. Soon, we’ll routinely put them inside our bodies and brains. Ultimately we will become more nonbiological than biological. ( Kurzweil 2002 )

Professional ethics

The first discussions about ethics and computers in digital modernity were about the personal ethics of the people who work professionally in computing: what they should or should not do. In that phase, a computer scientist was an expert, rather like a doctor or a mechanical engineer, and the question arose whether the new ‘profession’ needed ethics. These early discussions of computer ethics often had a certain tinge of moralizing, of having discovered an area of life that had so far escaped the attention of ethicists, but where immorality, or at least some impact on society, looms. In contrast, professional ethics today often takes the more positive approach that practitioners face ethical problems that expert analysis might help to resolve. The early suspicion of immorality was often reinforced by practitioners’ view that their technology is neutral and their aims laudable, so ‘ethics’ is not needed, a naïve view one finds even today.

Attempts at professional ethics moved into computer science quite early in the history of the discipline; for example, the US Association for Computing Machinery (ACM) adopted ‘Guidelines for Professional Conduct in Information Processing’ in 1966, and Donn Parker pushed this agenda in his discipline in the ensuing years (Parker 1968). The current version is called the ‘ACM Code of Ethics and Professional Conduct’ (ACM 2018).

Responsible technology

The use of nuclear (atomic) bombs in the Second World War and the discussion, from the late 1950s, about the risks of generating electricity in nuclear power stations fuelled a growing concern about the limits of technology in the 1960s. This concern is closely connected to the political developments around ‘the generation of 1968’ on the political left in Europe and the United States. The ‘Club of Rome’ was and is a group of high-level politicians, scientists, and industry leaders that deals with the basic, long-term problems of humankind. In 1972, it published the highly influential book The Limits to Growth: A Report for the Club of Rome’s Project on the Predicament of Mankind (Club of Rome 1972). It argued that the industrialized world was on an unsustainable trajectory of economic growth, using up finite resources (e.g. oil, minerals, farmable land) and increasing pollution, against the background of a growing world population. These were the views of a radical minority at the time, and even today they are still far from commonplace.

This report and similar discussions fuelled a generally more critical view of technology and the growth it enables. They led to a field of ‘technology assessment’, concerned with long-term impacts, that has also dealt with information technologies (Grunwald 2002). This area of the social sciences is influential in political consulting and has several academic institutes (e.g. at the Karlsruhe Institute of Technology). At the same time, a more political view of technology is taken in the field of ‘Science and Technology Studies’ (STS), which is now a sizable academic field with degree programmes, journals, and conferences. As books like The Ethics of Invention (Jasanoff 2016) show, concerns in STS are often quite similar to those in ethics, though typically with a more ‘critical’ and more empirical approach. Despite these commonalities, STS approaches have remained oddly separate from the ethics of computing.

Concerns about sustainable development, especially with respect to the environment, have been prominent on the political agenda for about forty years, and they are now a central policy aim in most countries, at least officially. In 2015, the United Nations adopted the ‘2030 Agenda for Sustainable Development’ (United Nations 2015) with seventeen ‘Sustainable Development Goals’. These goals are now quite influential; for example, they guide the current development of official European Union policy on AI. The seventeen goals are: (1) no poverty; (2) zero hunger; (3) good health and well-being; (4) quality education; (5) gender equality; (6) clean water and sanitation; (7) affordable and clean energy; (8) decent work and economic growth; (9) industry, innovation, and infrastructure; (10) reducing inequality; (11) sustainable cities and communities; (12) responsible consumption and production; (13) climate action; (14) life below water; (15) life on land; (16) peace, justice, and strong institutions; and (17) partnerships for the Goals.

It had also been understood by some that science and engineering generally pose ethical problems. The prominent physicist C.F. v. Weizsäcker predicted in 1968 that computer technology would fundamentally transform our lives in the coming decades (Weizsäcker 1968). Weizsäcker asked how we can have individual freedom in such a world, ‘i.e. freedom from the control of anonymous powers’ (439). At the end of his article, he demands a Hippocratic oath for scientists. Soon after, Weizsäcker became the founding Director of the famous Max Planck Institute for Research into the Life in a Scientific-Technical World, which Jürgen Habermas co-directed from 1971. At that time, there was clearly a sense among major state funders that these issues deserved their own research institute.

In the United States, the ACM has had a Special Interest Group ‘Computers & Society’ (SIGCAS) since 1969; it is still a significant actor today and still publishes the journal Computers and Society. Norbert Wiener had warned of AI even before the term was coined (see Bynum 2008: 26–30; 2015). In Cybernetics, Wiener wrote:

[ … ] we are already in a position to construct artificial machines of almost any degree of elaborateness of performance. Long before Nagasaki and the public awareness of the atomic bomb, it had occurred to me that we were here in the presence of another social potentiality of unheard-of importance for good and for evil. (Wiener 1948: 28)

Note that the atomic bomb was a starting point for a critical view on technology in his case, too. In his later book, The Human Use of Human Beings , he warns of manipulation:

[ … ] such machines, though helpless by themselves, may be used by a human being or a block of human beings to increase their control over the rest of the race or that political leaders may attempt to control their populations by means not of machines themselves but through political techniques as narrow and indifferent to human possibility as if they had, in fact, been conceived mechanically. (Wiener 1950)

Thus, in this phase, professional responsibility gains prominence as an issue, the notion of control through information and machinery comes up as a theme, and there is a general concern about the longer-term impacts of technology.

Digital post-modernity

In this part of the timeline, from 1980 to today (2021), I will use a typical university student in a wealthy European country as an illustration. I think this approach is useful because it is easy to forget how much the availability and use of computers have changed in the past decades and even the past few years. (If this text is read a few years after writing, it will seem quaintly old-fashioned.) We will see that this is the phase in which computers enter people’s lives and digital ethics becomes a discipline.

In the first half of the 1980s, a student would have seen a ‘personal computer’ (PC) in a business context, and towards the end of the 1980s they would probably own one. These PCs were not connected to a network, except on university premises, so data exchange was through floppy disks. Floppy disks held 360 KB, later 720 KB and 1.44 MB; if the PC had a hard drive at all, it would hold ca. 20–120 MB. After 1990, if private PCs had network connections, these would be through modem dial-in over analogue telephone lines and would mainly serve links to others in the same network (e.g. CompuServe or AOL), allowing email and file transfer via the file transfer protocol (FTP). Around the same time, personal computers moved from a command-line to a graphical interface, first on MacOS, then on MS Windows and UNIX. Students would use electric typewriters or university-owned computers for their writing until ca. the year 2000, and often even later. The first World Wide Web (WWW) page came online in 1990, and institutional web pages became common in the late 1990s; around the same time, a dial-in internet connection at home through a modem became affordable, and Google was founded (1998). After 2000, it became common for a student to have a computer at home with an internet connection, though file exchanges would still be mostly via physical data carriers. By ca. 2010, the internet connection would be ‘always on’ and fast enough for frequent use of web pages and video; by ca. 2019, it would be fully digital (ISDN, ADSL, …), and the student’s files would often be stored in the ‘cloud’, that is, in storage space somewhere on the internet. Fibre-optic lines started to be used around 2020. During the COVID-19 pandemic of 2020–2022, cooperative work online through live video became common.

Mobile phones (cell phones) became commonly affordable for students in the late 1990s, but these were just phones, increasingly miniaturized. The first widely popular ‘smart’ phone, the iPhone, was introduced in 2007. Around 2015, a typical student would own such a smartphone and would use it mostly for things other than calls, essentially as a portable tablet computer with Wi-Fi capability (though it would be called a ‘phone’, not a ‘computer’). After 2015, the typical smartphone would be connected to the internet at all times (with 3G). Frequent use of the web over the phone became affordable around 2018/2019 (with 4G), so around 2020 video calls and online teaching became possible and useful.

Students born after ca. 1980 (i.e. at university from around 2020) are often called ‘digital natives’, meaning that their teenage and adult lives took place when digital information processing was commonplace. To digital natives, pre-digital technologies like print, radio, or television feel ‘old’, while for previous generations, digital technologies feel ‘new’. This generational difference may also be one of the few cases where technological change drives actual ethical change, for example, in that digital natives are not worried about privacy in the way older generations are.

Together with smartphones, we now (2022) also begin to have other ‘smart’ devices that incorporate computers and are connected to the internet (soon with 5G), especially portables, TVs, cars, and homes—also known as the ‘Internet of Things’ (IoT). ‘Smart’ superstructures like grids, cities, and roads are being deployed. Sensors with digital output are becoming ubiquitous. In addition, a large part of our lives is digital (and thus does not need to be captured by sensors), much of it conducted through commercial platforms and ‘social media’ systems. All these developments enable a surveillance economy where data is a valuable commodity (as discussed in other chapters in this Handbook).

While a ‘computer’ was easily recognized as a physical box until ca. 2010, it is now incorporated into a host of devices and systems and often not perceived as such; it may even be designed not to be noticed (e.g. in order to collect data). Much of computing has become a transparent technology in our daily lives: we use it without special learning and notice neither its existence nor that computing takes place. As Weiser put it, ‘The most profound technologies are those that disappear’ (Weiser 1991: 94).

For the purposes of digital ethics, the crucial developments for our student were the move from computers ‘somewhere else’ to their own PC (ca. 1990), the use of the WWW (ca. 1995), and their smartphone (ca. 2015); the current development is the move to computing as a ‘transparent technology’.

Establishment

The first phase of digital ethics, or computer ethics, was the effort in the 1980s and 1990s to establish that there is, or should be, such a thing, both within philosophy or applied ethics and within computer science, especially in university computer science curricula. This ‘establishment’ is of significant importance for the academic field since, once ‘ethics’ is an established component of degrees in computer science and related disciplines, there is a labour market for academic teachers, a demand for textbooks and articles, etc. (Bynum 2010). It is no accident that the field moved beyond ‘professional ethics’ and general societal concerns at around the same time as computers moved from labs to offices and homes.

The first use of ‘computer ethics’ was probably by Deborah Johnson in her paper ‘Computer Ethics: New Study Area for Engineering Science Students’, where she remarked, ‘Computer professionals are beginning to look toward codes of ethics and legislation to control the use of software’ (Johnson 1978). Sometimes (e.g. Bynum 2001), Walter Maner is credited with the first use of the term, for ‘ethical problems aggravated, transformed or created by computer technology’ (Maner 1980). Again, professional ethics seems to have been the forerunner of computer ethics generally.

A few years later, with fundamental publications like James H. [Jim] Moor’s ‘What is Computer Ethics?’ (Moor 1985), the first textbook (Johnson 1985), and three anthologies with established publishers (Blackwell, MIT Press, Columbia University Press), one can speak of an established small discipline (Moor and Bynum 2002). The two texts by Moor and Johnson are still the most cited works in the discipline, together with classic texts on privacy, such as Warren and Brandeis (1890) and Westin (1968). As Tavani (1999) shows, the next fifteen years saw a steady flow of monographs, textbooks, and anthologies. In the 1990s, ‘ethics’ started to gain a place in many computer science curricula.

In terms of themes, we have the classical ones (privacy, information power, professional ethics, impact of technology), and we now have increasing confidence that there is ‘something unique’ here. Maner says, ‘I have tried to show that there are issues and problems that are unique to computer ethics. For all of these issues, there was an essential involvement of computing technology. Except for this technology, these issues would not have arisen, or would not have arisen in their highly altered form’ (Maner 1996).

We now get a wider notion of digital ethics that includes issues which only come up in the ethics of robotics and AI, for example, manipulation, automated decision-making, transparency, bias, autonomous systems, existential risk, etc. (Müller 2020). The relationship between robots or AI systems and humans had already been discussed in Putnam’s classic paper ‘Robots: Machines or Artificially Created Life?’ (Putnam 1964), and it has seen a revival in the discussion of the singularity (Kurzweil 1999) and of existential risk from AI (Bostrom 2014).

Digital ethics now covers human digital life, online and with computing devices, both on an individual level and as a society, for example, in social networks (Vallor 2016). As a result, this Handbook includes themes like human–robot interaction, online interaction, fake news, online relationships, advisory systems, transparency and explainability, discrimination, nudging, cybersecurity, and existential risk; in other words, digital life is prominently discussed here, something that would not have happened even five years ago.

Institutional

The journal Metaphilosophy, founded by T.W. Bynum and R. Reese in 1970, first published articles on computer ethics in the mid-1980s. The journal Minds and Machines, founded by James Fetzer in 1991, started publishing ethics papers under the editorship of James H. Moor (2001–2010). The conference series ETHICOMP (1995) and Computer Ethics: Philosophical Enquiry (CEPE) (1997) started in Europe, and specialized journals were established: the Journal of Information Ethics (1992), Science and Engineering Ethics (1995), Ethics and Information Technology (1999), and Philosophy & Technology (2010). The conferences on ‘Computing and Philosophy’ (CAP), held since 1986 in North America and later in Europe and Asia, came together in the International Association for Computing and Philosophy (IACAP) in 2011 and increasingly have a strong division on ethical issues, as do the Society for the Study of Artificial Intelligence and the Simulation of Behaviour (AISB) in the UK and the Philosophy and Theory of Artificial Intelligence (PT-AI) conference.

Within the academic field of philosophy, applied ethics and digital ethics have remained firmly marginal or specialist even now, with very few presentations at mainstream conferences, publications in mainstream journals, or posts in mainstream departments. As far as I can tell, no paper on digital ethics has appeared in places like the Journal of Philosophy, Mind, Philosophical Review, Philosophy & Public Affairs, or Ethics to this day, while, significantly, there are papers on this topic in Science, Nature, or Artificial Intelligence. Practically orientated fields in philosophy are treated largely as the poor and slightly embarrassing cousin who has to work for a living rather than having old money in the bank. In traditional philosophy, what counts as ‘a problem’ is still mostly defined by tradition rather than by permitting problems to enter philosophy from the outside. Cementing this situation, few of these ‘practical’ fields aspire to real influence on traditional philosophy; but this is changing, and I would venture that this influence will be strong in the decades to come. It is interesting to note that the citation counts of academics in computing ethics and theory have surpassed those of comparable philosophers in related traditional areas, and a similar trend is now visible for journals. One data point: as of 2020, the average article in Mind is cited twice within four years, while the average article in Minds and Machines is cited three times within four years, and the figure for the latter journal doubled in three years.¹

Several prominent philosophers have worked on theoretical issues around AI and computing (e.g. Dennett, Dreyfus, Fodor, Haugeland, Searle), typically having built their careers in related areas of philosophy, such as philosophy of mind, philosophy of language, or logic. This also applies to Jim Moor, who was one of the first people in digital ethics to hold a professorship at a reputable mainstream university (Dartmouth College). Still, most specialized researchers in the field were at marginal institutions or did digital ethics on the side. This changed slowly; for example, several technical universities had professors working in digital ethics relatively early on, and the Technical Universities in the Netherlands (Delft, Eindhoven, Twente, and Wageningen) founded the 4TU Centre for Ethics and Technology in 2007. In the past decade, Floridi and Bostrom were appointed to professorships at Oxford, at the Oxford Internet Institute (OII) and the Future of Humanity Institute (FHI), respectively. Coeckelbergh was appointed to a chair in the philosophy department in Vienna in 2015 (where Hrachovec was already active). A few more people were and are active in philosophical issues of ‘new media’, for example, Ch. Ess, who moved to Oslo in 2012. The ethics of AI became a field only quite recently, with its first conference, AGI-Impacts (Artificial General Intelligence Impacts), held in 2012, but it now has its own institutes at many mainstream universities.

In other words, only five years ago, almost all scholars in digital ethics were at institutions marginal to mainstream philosophy. Only in the last couple of years has digital ethics begun to enter the mainstream: many more jobs are advertised, senior positions are available to people in the field, younger faculty members are picking up the topic, and more established faculty at established institutions are beginning to deem these matters worthy of their attention. That development is now rapidly gaining pace.

I expect that mainstream philosophy will quickly pick up digital ethics in the coming years: the subject has shown itself to be mature and fruitful for classical philosophical issues, and there is an obvious societal demand and significant funding opportunities. There is probably also some hype already. In the classic notion of a ‘hype cycle’ for the expectations placed on a new technology, development is supposed to go through several phases: after its beginnings at the ‘technology trigger’, it gains more and more attention, reaching a ‘peak of inflated expectations’, after which a more critical evaluation begins and expectations go down, eventually reaching a ‘trough of disillusionment’. From there, a realistic evaluation shows that there is some use, so we get the ‘slope of enlightenment’, and eventually the technology settles on a ‘plateau of productivity’ and becomes mainstream. The Gartner Hype Cycle for AI, 2019 (Goasduff 2019) places digital ethics itself at the ‘peak of inflated expectations’ … meaning that it is downhill from here for some time, until we hopefully reach the ‘plateau of productivity’. (My own view is that this is wrong, since we are now seeing the beginnings of AI policy and a stronger digital ethics.)

The state of the art at present and an outlook into the future are given in the chapters of this Handbook. Moor saw a bright future even twenty years ago: ‘The future of computer ethics: You ain’t seen nothin’ yet!’ (Moor 2001), and he followed up with a programmatic plea for ‘machine ethics’ (Moor 2006). Moor opens the former article with the bold statement:

Computer ethics is a growth area. My prediction is that ethical problems generated by computers and information technology in general will abound for the foreseeable future. Moreover, we will continue to regard these issues as problems of computer ethics even though the ubiquitous computing devices themselves may tend to disappear into our clothing, our walls, our vehicles, our appliances, and ourselves. (Moor 2001: 89)

The prediction has undoubtedly held up until now. The ethics of the design and use of computers is clearly an area of very high societal importance, and we would do well to catch problems early on. This is something we failed to do in the area of privacy (Véliz 2020) and something some hope we will manage in the area of AI (Müller 2020).

However, as Moor mentions, a very different line of thought was developed around the same time: Bynum reports on an unpublished talk by Deborah G. Johnson, titled ‘Computer Ethics in the 21st Century’, at the 1999 ETHICOMP conference:

On Johnson’s view, as information technology becomes very commonplace—as it gets integrated and absorbed into our everyday surroundings and is perceived simply as an aspect of ordinary life—we may no longer notice its presence. At that point, we would no longer need a term like ‘computer ethics’ to single out a subset of ethical issues arising from the use of information technology. Computer technology would be absorbed into the fabric of life, and computer ethics would thus be effectively absorbed into ordinary ethics. (Bynum 2001: 111ff) (cf. Johnson 2004)

On Johnson’s view, we will simply have applied ethics, covering themes such as ‘information privacy’ or ‘how to behave in a romantic relationship’ (Nyholm et al. 2022), and much of this will take place with or through computing devices, but that will not matter (even though many things will remain that cannot be done without such devices). In other words, the ‘drive’ of technology we have seen in this history will come to a close, and the technology will become transparent. This transparency will likely raise ethical problems of its own, since it enables surveillance and manipulation. If Johnson is right, however, we will soon reach a situation in which almost everything is digital and transparent, and digital ethics is thus in danger of disappearing into general applied ethics. In Molière’s play, the bourgeois who wants to become a gentleman tells his ‘philosophy master’:

Oh dear! For more than forty years I have been speaking prose while knowing nothing of it, and I am most obliged to you for telling me so. (Molière, Le Bourgeois gentilhomme, Act II, 1670)

Conclusion and questions

One feature characteristic of the new developments in digital ethics, and in applied philosophy generally, is how a problem becomes a problem worth investigating. In traditional philosophy, the criterion is often that a past discussion already exists noting that there is something philosophically interesting, something unresolved, about it. Thus, typically, we do not ask again whether that problem is worth discussing or whether it relies on assumptions we should not make (so we find people who seriously ask whether Leibniz or Locke was right on the origin of ideas, for example). In digital ethics, a problem must also be philosophically interesting, but, more importantly, it must be relevant. Quite often, this means that the problem first surfaces in fields other than philosophy. The initially dominant approach of professional ethics had a touch of ‘policing’ about it, of checking that everyone behaves; such moralizing gives ethics a bad name, and it typically comes too late. More modern digital ethics tries to make people sensitive to ethical issues during the design process (‘ethics by design’) and to pick up problems where people really do not know what the ethically right thing to do is; these are the proper ethical problems that deserve our attention.

On the relation between ethics and computer ethics, Moor seems to have been right in this prediction:

The development of ethical theory in computer ethics is sometimes overstated and sometimes understated. The overstatement suggests that computer ethics will produce a new ethical theory quite apart from traditional ethical notions. The understatement suggests that computer ethics will disappear into ordinary ethics. The truth, I predict, will be found in the middle […] My prediction is that ethical theory in the future will be recognizable but reconfigured because of work done in computer ethics during the coming century. (Moor 2001: 91)