
- Springer Nature - PMC COVID-19 Collection

Information technologies of 21st century and their impact on the society

Mohammad Yamin

Department of MIS, Faculty of Economics and Admin, King Abdulaziz University, Jeddah, Saudi Arabia

The twenty-first century has witnessed the emergence of some groundbreaking information technologies that have revolutionised our way of life. The revolution began late in the 20th century with the arrival of the internet in 1995, which has given rise to methods, tools and gadgets with astonishing applications in all academic disciplines and business sectors. In this article we shall provide a design of a ‘spider robot’ which may be used for efficient cleaning of deadly viruses. In addition, we shall examine some of the emerging technologies which are causing remarkable breakthroughs and improvements that were inconceivable earlier. In particular, we shall look at the technologies and tools associated with the Internet of Things (IoT), Blockchain, Artificial Intelligence, Sensor Networks and Social Media. We shall analyse the capabilities and business value of these technologies and tools. As we recognise, most technologies, after completing their commercial journey, are utilised by the business world in physical as well as virtual marketing environments. We shall also look at the social impact of some of these technologies and tools.

Introduction

The internet, which started in 1989 [1], now holds about 1.2 million terabytes of data from Google, Amazon, Microsoft and Facebook [2]. It is estimated that the surface web contains over four and a half billion websites, while the deep web, about which we know very little, is at least four hundred times bigger than the surface web [3]. Soon afterwards, in 1990, email platforms emerged, followed by many other applications. Then, from 1995 to the early 21st century, we saw a chain of Web 2.0 technologies such as E-commerce, social media platforms, E-Business, E-Learning, E-government, Cloud Computing and more [4]. Now we have a large number of internet-based technologies with countless applications in many domains, including business, science and engineering, and healthcare [5]. The impact of these technologies on our personal lives is such that we are compelled to adopt many of them whether we like it or not.

In this article we shall study the nature, usage and capabilities of emerging and future technologies, including Big Data Analytics, the Internet of Things (IoT), sensor networks (RFID, location-based services), Artificial Intelligence (AI), Robotics, Blockchain, mobile digital platforms (digital streets, towns and villages), Cloud (Fog and Dew) computing, social networks and business, and Virtual Reality.

With ever-increasing computing power and declining costs of data storage, many government and private organizations are gathering enormous amounts of data. The data accumulated over years of acquisition and processing in many organizations has become so large that it can no longer be analyzed by traditional tools within a reasonable time. Disciplines known for generating Big Data include astronomy, atmospheric science, biology, genomics, nuclear physics, biochemical experiments, medical records, and scientific research. Some of the organizations and sources that create enormous amounts of data are Google, Facebook, YouTube, hospitals, parliamentary proceedings, courts, newspapers and magazines, and government departments. Because of its size, analysis of Big Data is not a straightforward task and often requires advanced methods and techniques. Lack of timely analysis of Big Data in certain domains may have devastating results and pose threats to societies, nature and ecosystems.

Big medical data

The healthcare field is generating Big Data and has the potential to surpass other fields when it comes to the growth of data. Big medical data usually refers to a considerably bigger pool of health, hospital and treatment records, administrative medical claims, and data from clinical trials, smartphone applications, wearable devices such as RFID tags and heart-rate monitors, various kinds of social media, and omics research. In particular, omics research (genomics, proteomics, metabolomics, etc.) is leading the growth of Big Data [6, 7]. Analysing omics datasets, such as those from genomics, transcriptomics, proteomics, metabolomics, metagenomics and phenomics, involves several demanding tasks: data cleaning, normalization, biomolecule identification, data dimensionality reduction, biological contextualization, statistical validation, data storage and handling, sharing, and data archiving [6].

According to [8], in 2011 alone the data in the healthcare system of the United States of America amounted to one hundred and fifty exabytes (one exabyte = one billion gigabytes, or $10^{18}$ bytes), and it is expected to reach $10^{21}$ and later $10^{24}$ bytes. Some scientists have classified medical data into three categories: (a) a large number of samples but a small number of parameters; (b) a small number of samples and a large number of parameters; and (c) a large number of samples and a large number of parameters [9]. Although the data in the first category may be analyzed by classical methods, it may be incomplete, noisy and inconsistent, and therefore requires data cleaning. The data in the third category could be big and may require advanced analytics.
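The unit conversions behind these figures can be written out explicitly. This assumes decimal (SI) prefixes, so that one exabyte is $10^{18}$ bytes; the names zettabyte (ZB) and yottabyte (YB) for $10^{21}$ and $10^{24}$ bytes are the standard SI names and are not taken from [8].

```latex
\begin{aligned}
1\ \text{EB}   &= 10^{9}\ \text{GB} = 10^{9} \times 10^{9}\ \text{B} = 10^{18}\ \text{B} \\
150\ \text{EB} &= 150 \times 10^{18}\ \text{B} = 1.5 \times 10^{20}\ \text{B} \\
10^{21}\ \text{B} &= 1\ \text{ZB} = 1000\ \text{EB} \approx 6.7 \times (150\ \text{EB}) \\
10^{24}\ \text{B} &= 1\ \text{YB} = 1000\ \text{ZB}
\end{aligned}
```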

Big data analytics

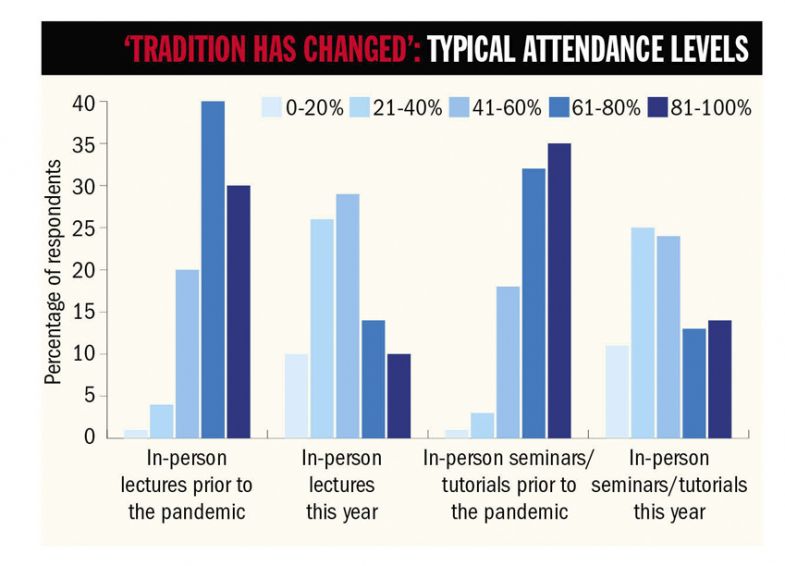

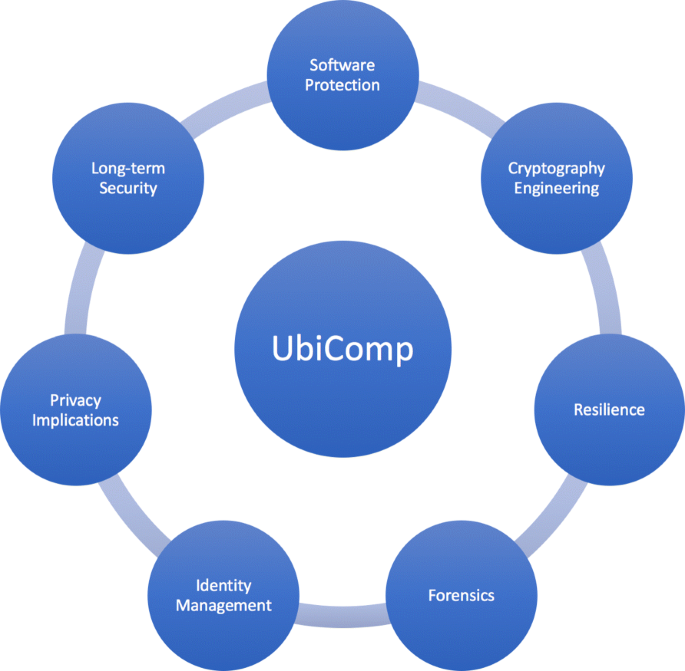

Big Data cannot be analyzed in real time by traditional analytical methods. The analysis of Big Data, popularly known as Big Data Analytics, often involves a number of technologies, sophisticated processes and tools, as depicted in Fig. 1. Big Data can support smart decision making and business intelligence in businesses and corporations, but unless it is analyzed, it is of little practical use and becomes a burden to the organization. Big Data Analytics involves mining and extracting useful associations (knowledge discovery) for intelligent decision making and forecasting. The challenges in Big Data Analytics are computational complexity, scalability and data visualization. In addition, information security risks increase with the surge in the amount of data, which is the case with Big Data.

Fig. 1. Big Data Analytics

The aim of data analytics has always been knowledge discovery to support smart and timely decision making. With Big Data, the knowledge base becomes wider and sharper, providing greater business intelligence and helping businesses become market leaders. Conventional processing paradigms and architectures are inefficient at dealing with such large datasets; one of the main problems is the sheer size of the datasets, which requires parallel processing. Recent technologies such as Spark, Hadoop, MapReduce, R, data lakes and NoSQL databases have emerged to provide Big Data Analytics. Alongside these and other data analytics technologies, it is advantageous to invest in designing superior storage systems.
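The parallel-processing idea behind tools such as Hadoop and Spark can be illustrated with the MapReduce pattern. The following is a minimal single-machine sketch, not the API of either framework: a thread pool stands in for cluster nodes, counting words in free-text records stands in for a real analytics job, and the function names are our own.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor


def map_phase(chunk: str) -> list[tuple[str, int]]:
    """Map: emit a (word, 1) pair for every word in one chunk of input."""
    return [(word.lower(), 1) for word in chunk.split()]


def shuffle_phase(mapped: list[list[tuple[str, int]]]) -> dict[str, list[int]]:
    """Shuffle: group the emitted values by key across all map outputs."""
    groups: dict[str, list[int]] = defaultdict(list)
    for pairs in mapped:
        for key, value in pairs:
            groups[key].append(value)
    return groups


def reduce_phase(groups: dict[str, list[int]]) -> dict[str, int]:
    """Reduce: combine the values collected for each key, here by summing them."""
    return {key: sum(values) for key, values in groups.items()}


def word_count(chunks: list[str], workers: int = 4) -> dict[str, int]:
    # Map tasks are independent of each other, so they can run concurrently.
    # Threads stand in for the cluster nodes a real framework would use.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        mapped = list(pool.map(map_phase, chunks))
    return reduce_phase(shuffle_phase(mapped))


if __name__ == "__main__":
    records = ["patient admitted", "patient discharged", "Patient admitted again"]
    print(word_count(records))
    # {'patient': 3, 'admitted': 2, 'discharged': 1, 'again': 1}
```

Because each map task touches only its own chunk, more data simply means more independent tasks that can be spread across machines; only the shuffle step needs to see all of the intermediate pairs.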

Health data predominantly consists of images, graphs, audio and video. Gaining meaningful insights and diagnoses from such data may depend on the choice of tools. Medical data has traditionally been scattered across the organization and often not organized properly. Typically, medical record-keeping systems hold heterogeneous data, which requires considerable effort to reorganize into a common platform. As discussed before, the health profession produces enormous amounts of data, so analysing it efficiently and in a timely manner can potentially save many lives.
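Reorganizing heterogeneous records into a common platform usually starts with a shared schema and one small adapter per source system. The sketch below assumes two hypothetical sources, `hospital_a` and `clinic_b`, whose field names and date formats are invented for illustration; real medical record standards define far richer schemas.

```python
from dataclasses import dataclass
from datetime import date, datetime


@dataclass(frozen=True)
class CommonRecord:
    """Hypothetical shared schema that every source is mapped into."""
    patient_id: str
    birth_date: date
    heart_rate_bpm: int


def from_hospital_a(raw: dict) -> CommonRecord:
    # Hypothetical source A: numeric ids, ISO dates, numeric heart rate.
    return CommonRecord(
        patient_id=str(raw["pid"]),
        birth_date=date.fromisoformat(raw["dob"]),
        heart_rate_bpm=int(raw["hr"]),
    )


def from_clinic_b(raw: dict) -> CommonRecord:
    # Hypothetical source B: padded text ids, day/month/year dates, rate stored as text.
    return CommonRecord(
        patient_id=str(raw["patient_id"]).strip(),
        birth_date=datetime.strptime(raw["birth_date"], "%d/%m/%Y").date(),
        heart_rate_bpm=int(raw["heart_rate_bpm"]),
    )


ADAPTERS = {"hospital_a": from_hospital_a, "clinic_b": from_clinic_b}


def normalize_record(source: str, raw: dict) -> CommonRecord:
    """Convert a raw record from a known source into the common schema."""
    return ADAPTERS[source](raw)
```

Once both sources produce `CommonRecord` values, the same patient appearing in both systems compares equal, which is what makes later merging and analysis straightforward.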

Commercial operation of clouds from company platforms began in 1999 [10]. Initially, clouds complemented and empowered outsourcing. In the early stages there were some privacy concerns associated with Cloud Computing, as the owners of data had to hand over custody of their data to the cloud owners. However, as time passed and cloud owners introduced confidence-building measures, the technology became so prevalent that most of the world’s SMEs started using it in one form or another. More information on Cloud Computing can be found in [11, 12].

Fog computing

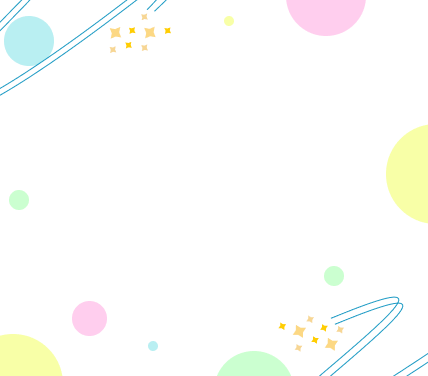

As faster processing became a requirement for some critical applications, clouds gave rise to Fog or Edge computing. As can be seen in the Gartner hype cycles in Figs. 2 and 3, Edge computing, as an emerging technology, peaked in 2017–18. As shown in the Cloud Computing architecture in Fig. 4, Fog computing represents the middle (second) layer of the cloud configuration. For some applications, the communication delay between the computing devices in the field and the data in a cloud (often thousands of miles away) is detrimental, as it may cause considerable delay in time-sensitive applications. For example, processing and storage for early warning of disasters (stampedes, tsunamis, etc.) must happen in real time. For such applications, computing and storage resources should be placed closer to where computing is needed (for example, in application areas such as a digital street). Fog computing is considered suitable for these kinds of scenarios [13]. Clouds are an integral part of many IoT applications and play a central role in ubiquitous computing systems for health-related cases such as the one depicted in Fig. 5. Some applications of Fog computing can be found in [14–16], and more results on Fog computing are available in [17–19].
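The trade-off between a distant cloud and a nearby fog node can be expressed as a simple placement rule: use the preferred site (here the cloud, for its capacity) unless it cannot respond within the application's deadline. The latencies below are hypothetical round numbers chosen for illustration, not measurements, and the rule ignores bandwidth, cost and node load.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Site:
    name: str
    round_trip_ms: float  # network round trip from the field device to this site
    processing_ms: float  # time the site needs to handle one request

    def response_ms(self) -> float:
        return self.round_trip_ms + self.processing_ms


# Hypothetical figures: the distant cloud processes quickly but is far away,
# while the nearby fog node processes more slowly but sits close to the sensors.
CLOUD = Site("cloud", round_trip_ms=180.0, processing_ms=20.0)
FOG = Site("fog", round_trip_ms=10.0, processing_ms=60.0)


def place(deadline_ms: float, preference: list[Site]) -> Optional[str]:
    """Return the first site, in order of preference, that can meet the deadline."""
    for site in preference:
        if site.response_ms() <= deadline_ms:
            return site.name
    return None  # no site is fast enough; the requirement must be relaxed


if __name__ == "__main__":
    # The cloud is tried first because it is preferred.
    print(place(100.0, [CLOUD, FOG]))     # early warning: only the fog (70 ms) is fast enough
    print(place(60_000.0, [CLOUD, FOG]))  # overnight report: the cloud (200 ms) is fine
```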

Fig. 2. Emerging Technologies 2018

Fig. 3. Emerging Technologies 2017

Fig. 4. Relationship of Cloud, Fog and Dew computing

Fig. 5. Snapshot of a Ubiquitous System

Dew computing

When the Fog is overloaded and cannot cater for the peaks of high-demand applications, it offloads some of its data and/or processing to the associated cloud. In such a situation, the Fog also exposes its dependency on a complementary bottom layer of the cloud architecture, as shown in Fig. 4. This bottom layer of the hierarchical resource organization is known as the Dew layer. The purpose of the Dew layer is to handle tasks by exploiting resources near the end user with minimal internet access [17, 20]. As a feature, Dew computing determines when to use its services and how to link them with the different layers of the Cloud architecture. It is also important to note that Dew computing [20] belongs to the distributed computing hierarchy and is integrated with the Fog computing services, which is also evident in Fig. 4. In summary, the Cloud architecture has three layers: the Cloud first, the Fog second and the Dew third.
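The layered hierarchy can be sketched as an overflow rule: each task goes to the layer nearest the end user that still has spare capacity, and overflows outwards to the Fog and then the Cloud only when the nearer layers are full. The capacities here are hypothetical, and real Dew and Fog schedulers would also weigh connectivity, latency and energy.

```python
from dataclasses import dataclass, field


@dataclass
class Layer:
    name: str
    capacity: int  # hypothetical number of tasks the layer can hold at once
    tasks: list[str] = field(default_factory=list)

    def has_room(self) -> bool:
        return len(self.tasks) < self.capacity


def assign(task: str, layers: list[Layer]) -> str:
    """Place a task on the layer nearest the user that still has spare capacity.

    `layers` must be ordered from the end user outwards: Dew, Fog, then Cloud.
    """
    for layer in layers:
        if layer.has_room():
            layer.tasks.append(task)
            return layer.name
    raise RuntimeError(f"no layer has capacity for {task!r}")


if __name__ == "__main__":
    hierarchy = [Layer("dew", 1), Layer("fog", 2), Layer("cloud", 100)]
    print([assign(f"task-{i}", hierarchy) for i in range(4)])
    # ['dew', 'fog', 'fog', 'cloud']
```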

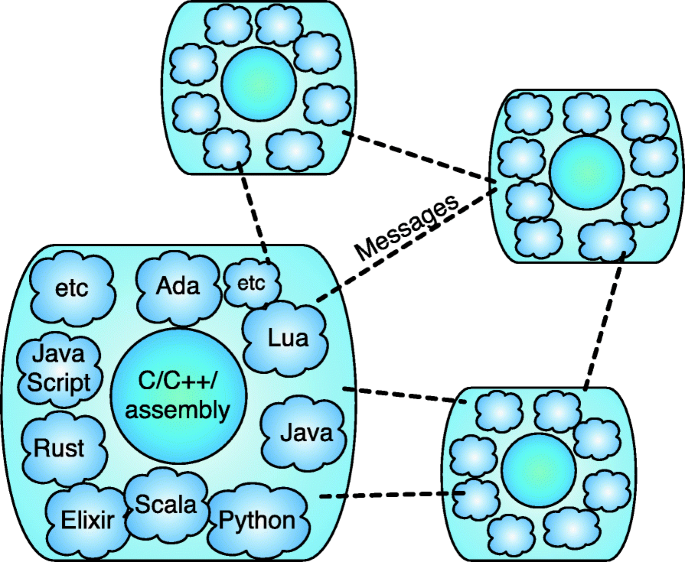

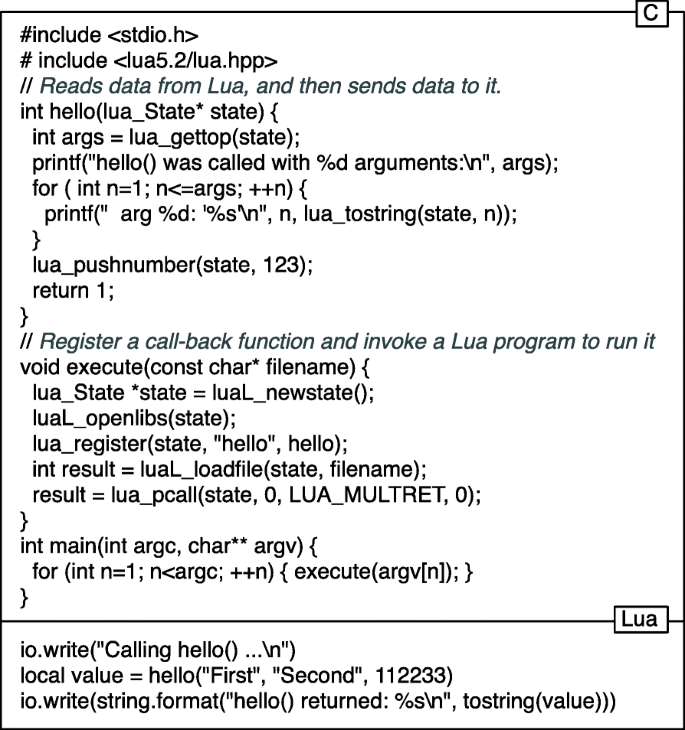

Internet of things

The definition of the Internet of Things (IoT), depicted in Fig. 6, has been changing over time. With a growing number of internet-based applications that use many technologies, devices and tools, the meaning of the term IoT has evolved accordingly. IoT can now be described as things (technologies, devices and tools) used together in internet-based applications that generate data to provide assistance and services to users from anywhere, at any time. The internet can be considered a uniform technology from any location, as it provides the same service of ‘connectivity’; its speed and security, however, are not uniform. As an emerging technology, IoT peaked during 2017–18, as is evident from Figs. 2 and 3. The technology is expanding at a very fast rate; according to [21–24], the number of IoT devices could be in the millions by 2021.

Fig. 6. Internet of Things

IoT is providing some remarkable applications in tandem with wearable devices, sensor networks, Fog computing and other technologies to improve some of the critical facets of our lives, such as healthcare management, service delivery and business improvement. Some applications of IoT in the field of crowd management are discussed in [14], and some applications of IoT in the context of privacy and security are discussed in [15, 16]. Key devices and technologies associated with IoT include RFID tags [25], the internet, computers, cameras, mobile devices, coloured lights, sensors, sensor networks, drones, and Cloud, Fog and Dew computing.
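As an illustration of the crowd-management use case, per-zone sensor counts can be turned into density alerts. The sketch is hypothetical throughout: the zone names, areas and alert thresholds are assumptions, not values from [14] or from any safety standard.

```python
from dataclasses import dataclass

# Hypothetical alert thresholds in persons per square metre; real values would
# have to come from crowd-safety guidance for the specific venue.
WARN_DENSITY = 4.0
CRITICAL_DENSITY = 6.0


@dataclass(frozen=True)
class ZoneReading:
    zone: str
    area_m2: float
    people: int  # head count aggregated from RFID tags, cameras or other sensors


def classify(reading: ZoneReading) -> str:
    density = reading.people / reading.area_m2
    if density >= CRITICAL_DENSITY:
        return "critical"
    if density >= WARN_DENSITY:
        return "warning"
    return "normal"


def alerts(readings: list[ZoneReading]) -> dict[str, str]:
    """Map each zone that needs attention to its alert level."""
    result: dict[str, str] = {}
    for reading in readings:
        level = classify(reading)
        if level != "normal":
            result[reading.zone] = level
    return result


if __name__ == "__main__":
    snapshot = [
        ZoneReading("gate-a", area_m2=100.0, people=300),  # 3.0 persons/m2
        ZoneReading("gate-b", area_m2=50.0, people=250),   # 5.0 persons/m2
        ZoneReading("bridge", area_m2=20.0, people=130),   # 6.5 persons/m2
    ]
    print(alerts(snapshot))  # {'gate-b': 'warning', 'bridge': 'critical'}
```

In a deployment along the lines discussed earlier, such a check would run on a nearby Fog node so that alerts are not delayed by a round trip to a distant cloud.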

Applications of blockchain

Blockchain is usually associated with cryptocurrencies such as Bitcoin (there are currently over one and a half thousand cryptocurrencies, and the number is still rising). However, Blockchain technology can also be used for many more critical applications in our daily lives. A Blockchain is a distributed ledger technology in the form of a distributed transactional database, secured by cryptography and governed by a consensus mechanism. A Blockchain is essentially a record of digital events [26]. A block records completed transactions. Each block is chained to the one before it, and the chain as a whole shows the status after the most recent transaction. The role of the chain is to link the records in chronological order. The chain continues to grow as further transactions take place, which are recorded by adding new blocks. User security and ledger consistency in a Blockchain are provided by asymmetric cryptography and distributed consensus algorithms. Once a block has been added, it cannot in practice be altered or removed without invalidating every block that follows it and overriding the consensus of the network. The technology eliminates the need for a bank statement to verify the availability of funds, or for a lawyer to certify the occurrence of an event. The benefits of Blockchain technology are inherent in its characteristics of decentralization, persistency, anonymity and auditability [27, 28].
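The chaining of blocks can be illustrated with a minimal sketch that uses SHA-256 from Python's standard library. It shows only why altering an earlier block is detectable; it deliberately omits digital signatures, consensus, mining and networking, so it is a toy ledger rather than a Blockchain implementation, and all names in it are our own.

```python
import hashlib
import json
from dataclasses import dataclass

GENESIS_PREV_HASH = "0" * 64  # placeholder "previous hash" for the first block


@dataclass(frozen=True)
class Block:
    index: int
    data: str
    prev_hash: str

    def digest(self) -> str:
        """SHA-256 hash of a canonical JSON encoding of this block's contents."""
        payload = json.dumps(
            {"index": self.index, "data": self.data, "prev_hash": self.prev_hash},
            sort_keys=True,
        )
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_block(chain: list[Block], data: str) -> None:
    """Add a new block that records the hash of the current last block."""
    prev_hash = chain[-1].digest() if chain else GENESIS_PREV_HASH
    chain.append(Block(index=len(chain), data=data, prev_hash=prev_hash))


def is_valid(chain: list[Block]) -> bool:
    """True if every block still references the hash of the block before it."""
    if chain and chain[0].prev_hash != GENESIS_PREV_HASH:
        return False
    return all(
        chain[i].prev_hash == chain[i - 1].digest() for i in range(1, len(chain))
    )


if __name__ == "__main__":
    ledger: list[Block] = []
    for event in ["A pays B 10", "B pays C 4", "C pays A 1"]:
        append_block(ledger, event)
    print(is_valid(ledger))  # True

    # Rewriting an earlier block changes its hash, so the next block's
    # stored prev_hash no longer matches and the tampering is detected.
    ledger[1] = Block(1, "B pays C 400", ledger[1].prev_hash)
    print(is_valid(ledger))  # False
```

Tampering with the final block is not caught by `is_valid` alone, because no later block records its hash; in a real network the latest block is protected by the consensus mechanism and by the copies held by other participants.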

Blockchain for business use

Blockchain, the technology behind cryptocurrencies, started in the open-source Bitcoin community to allow reliable peer-to-peer financial transactions. Blockchain technology has made it possible to build a globally functional currency that relies on code, without any bank or third-party platform [28]. These features make Blockchain technology secure and transparent for business transactions of any kind, involving any currency. The literature contains many applications of Blockchain. Nowadays, Blockchain applications involve various kinds of transactions that require verification and automated payment systems using smart contracts. The concept of smart contracts [28] has virtually eliminated the role of intermediaries. The technology is most suitable for businesses requiring high reliability and honesty, and because of its security and transparency it can benefit businesses trying to attract customers. Blockchain can also be used to eliminate fake permits, as can be seen in [29].
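A smart contract is program logic that enforces the terms of an agreement. Production smart contracts run on a blockchain platform and are typically written in a platform language such as Solidity; the Python class below is only a conceptual sketch, with hypothetical names, of the kind of rule such a contract encodes: a conditional payment released without an intermediary.

```python
from enum import Enum, auto


class State(Enum):
    AWAITING_PAYMENT = auto()
    AWAITING_DELIVERY = auto()
    COMPLETE = auto()


class Escrow:
    """Toy escrow agreement: the seller is paid only after the buyer confirms delivery."""

    def __init__(self, buyer: str, seller: str, price: int) -> None:
        self.buyer = buyer
        self.seller = seller
        self.price = price
        self.held = 0                # funds locked by the agreement
        self.released_to_seller = 0  # funds paid out to the seller
        self.state = State.AWAITING_PAYMENT

    def deposit(self, sender: str, amount: int) -> None:
        if self.state is not State.AWAITING_PAYMENT:
            raise RuntimeError("payment has already been made")
        if sender != self.buyer or amount != self.price:
            raise ValueError("only the buyer may pay, and only the agreed price")
        self.held = amount
        self.state = State.AWAITING_DELIVERY

    def confirm_delivery(self, sender: str) -> None:
        if self.state is not State.AWAITING_DELIVERY:
            raise RuntimeError("there is no payment waiting to be released")
        if sender != self.buyer:
            raise PermissionError("only the buyer may confirm delivery")
        # The release rule is enforced by code rather than by a bank or lawyer.
        self.released_to_seller += self.held
        self.held = 0
        self.state = State.COMPLETE


if __name__ == "__main__":
    deal = Escrow(buyer="alice", seller="bob", price=100)
    deal.deposit("alice", 100)
    deal.confirm_delivery("alice")
    print(deal.state, deal.released_to_seller)  # State.COMPLETE 100
```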

Blockchain for healthcare management

As discussed above, Blockchain is an efficient and transparent way of keeping digital records, a feature that is highly desirable in healthcare management. The medical field is still struggling to manage its data efficiently in digital form. As usual, disparate and non-uniform record storage methods are hampering digitization, data warehousing and Big Data analytics, which would allow efficient management and sharing of the data. The magnitude of these problems can be seen from the target of the National Health Service (NHS) of the United Kingdom to digitize UK healthcare by 2023 [30]. These problems lead to data inaccuracies, which can cause many issues in healthcare management, including clinical and administrative errors.

The use of Blockchain in healthcare can bring revolutionary improvements. For example, smart contracts can be used to make it easier for doctors to access patients’ data held by other organisations. The current consent process often involves bureaucracy and is far from simplified or standardised, which creates many problems for patients and the specialists treating them. The cost of transferring medical records between different locations can be significant, and Blockchain could reduce it to virtually zero. More information on the use of Blockchain for healthcare data can be found in [30, 31].
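The consent rule that a healthcare smart contract might encode can be sketched in the same conceptual style. In this hypothetical registry a patient grants or revokes a clinician's access, and every attempt is appended to an audit log; on a real Blockchain the log would be the shared ledger rather than a Python list, and identities would be verified cryptographically.

```python
from dataclasses import dataclass, field


@dataclass
class ConsentRegistry:
    """Toy consent ledger: patients grant or revoke clinicians' access to their records."""

    grants: set[tuple[str, str]] = field(default_factory=set)  # (patient, clinician)
    audit_log: list[tuple[str, str, str]] = field(default_factory=list)

    def grant(self, patient: str, clinician: str) -> None:
        self.grants.add((patient, clinician))
        self.audit_log.append(("grant", patient, clinician))

    def revoke(self, patient: str, clinician: str) -> None:
        self.grants.discard((patient, clinician))
        self.audit_log.append(("revoke", patient, clinician))

    def can_access(self, clinician: str, patient: str) -> bool:
        allowed = (patient, clinician) in self.grants
        # Every access attempt is logged, whether or not it succeeds.
        self.audit_log.append(("allowed" if allowed else "denied", patient, clinician))
        return allowed


if __name__ == "__main__":
    registry = ConsentRegistry()
    registry.grant("p-001", "dr-lee")
    print(registry.can_access("dr-lee", "p-001"))   # True
    print(registry.can_access("dr-khan", "p-001"))  # False
```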

Environment cleaning robot

One of the ongoing healthcare issues is the eradication of deadly viruses and bacteria from hospitals and healthcare units. Nosocomial (hospital-acquired) infections are a common problem for hospitals, and they are currently addressed using various techniques [32, 33]. Historically, cleaning hospital wards and operating rooms with chlorine has been an effective method. However, in the face of deadly viruses such as Ebola, HIV/AIDS, swine influenza (H1N1, H1N2), various strains of flu, Severe Acute Respiratory Syndrome (SARS) and Middle East Respiratory Syndrome (MERS), this method has dangerous implications [14]. A more advanced approach used in hospitals in the USA employs “robots” that purify the space with ultraviolet (UV) light, as can be seen in [32, 33]. However, the current “robots” have certain limitations. Most of these devices require a human to place them in the infected areas. They cannot move effectively (they only rotate in place), so the UV light does not reach all areas, only a limited area within range of the UV emitter. Finally, the robot itself may become contaminated, because the light does not reach most of the robot’s surfaces. There is therefore an emerging need for a robot that does not require humans to be physically present to handle it, that can purify the entire room by covering all of its surfaces with UV light, and that does not become contaminated itself.
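Why a device that only rotates in place leaves areas under-treated can be seen from a simple model. Assuming the emitter behaves like a point source, irradiance falls with the square of the distance (inverse-square law), and the delivered dose is irradiance multiplied by exposure time. The emitter strength and target dose below are hypothetical placeholders, not recommended disinfection values, and the model ignores shadowing entirely: a surface hidden from the lamp receives no direct dose at any distance.

```python
def irradiance_w_m2(distance_m: float, ref_irradiance_w_m2: float,
                    ref_distance_m: float = 1.0) -> float:
    """Irradiance at distance_m from a point source, scaled by the inverse-square law."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    return ref_irradiance_w_m2 * (ref_distance_m / distance_m) ** 2


def exposure_time_s(target_dose_j_m2: float, distance_m: float,
                    ref_irradiance_w_m2: float, ref_distance_m: float = 1.0) -> float:
    """Seconds needed to deliver target_dose_j_m2, using dose = irradiance x time."""
    return target_dose_j_m2 / irradiance_w_m2(distance_m, ref_irradiance_w_m2, ref_distance_m)


if __name__ == "__main__":
    # Hypothetical emitter: 1 W/m2 at 1 m; hypothetical target dose: 100 J/m2.
    for d in (1.0, 2.0, 4.0):
        print(f"{d:.0f} m: {exposure_time_s(100.0, d, 1.0):.0f} s")
```

This prints 100 s, 400 s and 1600 s for 1 m, 2 m and 4 m: doubling the distance quadruples the time needed, which is why bringing the emitter close to each surface matters.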

Figure 7 gives an overview of the design of a fully motorized six-legged spider robot. The robot will support Wi-Fi connectivity for remote control and will be able to move around the room and clean the entire area. The spider design will allow the robot to move on any surface, including climbing steps; most importantly, the robot will use its legs to move the UV light emitter and to clean its own body before leaving the room. This substantially reduces the risk of the robot transmitting infections.
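How the robot plans its route around the room is not specified here. One common approach to full-area coverage is a boustrophedon (back-and-forth, or "lawnmower") sweep. The sketch below assumes the floor is divided into a grid of cells, each roughly the size of the UV footprint, and produces only the visiting order; it does not plan how the robot gets around blocked cells.

```python
def boustrophedon(rows: int, cols: int,
                  blocked: frozenset[tuple[int, int]] = frozenset()) -> list[tuple[int, int]]:
    """Visit order for a back-and-forth sweep over a rows x cols grid of floor cells.

    Even rows are swept left to right and odd rows right to left, so consecutive
    rows join at the same end. Cells listed in `blocked` are skipped.
    """
    path: list[tuple[int, int]] = []
    for r in range(rows):
        columns = range(cols) if r % 2 == 0 else range(cols - 1, -1, -1)
        for c in columns:
            if (r, c) not in blocked:
                path.append((r, c))
    return path


if __name__ == "__main__":
    print(boustrophedon(2, 3))
    # [(0, 0), (0, 1), (0, 2), (1, 2), (1, 1), (1, 0)]
```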

Fig. 7. Spider Robot for virus cleaning

Additionally, the robot will be equipped with a motorized camera that allows the operator to monitor the space and stop the UV emission in unforeseen situations. The operator can control the robot via a networked graphical user interface and/or from an augmented reality environment using technologies such as the Oculus Touch. In more detail, the user will wear the Oculus Rift virtual reality headset and use the Oculus Touch hand controllers to control the robot remotely. This will give the user the robot’s view in a natural manner. It will also allow the user to control the two front robotic arms of the spider robot via the Oculus Touch controllers, making it easy to perform advanced movements simply by moving the hands. The physical movements of the user’s hands will be captured by the sensors of the Oculus Touch and transmitted to the robot, which will then use inverse kinematics to translate the position of the human hand into movements of the robotic arm. This technique will also be used during the training phase, in which the human user teaches the robot how to clean various surfaces and then purify itself, simply by moving their hands accordingly. The design of the spider robot was proposed in a project proposal submitted to the King Abdulaziz City for Science and Technology (https://www.kacst.edu.sa/eng/Pages/default.aspx) by the author and George Tsaramirsis (https://www.researchgate.net/profile/George_Tsaramirsis).
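Translating the position of the operator's hand into joint angles for a robotic arm is the inverse kinematics problem. The robot's arm geometry is not given, so the sketch below assumes a hypothetical planar arm with two links and solves it analytically with the law of cosines; a real multi-joint spider arm would typically need a numerical solver.

```python
import math


def forward(l1: float, l2: float, theta1: float, theta2: float) -> tuple[float, float]:
    """Tip position of a planar two-link arm with link lengths l1, l2 (angles in radians)."""
    x = l1 * math.cos(theta1) + l2 * math.cos(theta1 + theta2)
    y = l1 * math.sin(theta1) + l2 * math.sin(theta1 + theta2)
    return x, y


def inverse(l1: float, l2: float, x: float, y: float,
            other_branch: bool = False) -> tuple[float, float]:
    """Joint angles (theta1, theta2) that place the arm's tip at (x, y).

    Uses the law of cosines for the elbow angle. Most reachable targets have two
    mirror-image solutions; `other_branch` selects the second one.
    """
    cos_t2 = (x * x + y * y - l1 * l1 - l2 * l2) / (2 * l1 * l2)
    if not -1.0 - 1e-9 <= cos_t2 <= 1.0 + 1e-9:
        raise ValueError("target is out of the arm's reach")
    cos_t2 = max(-1.0, min(1.0, cos_t2))  # clamp tiny rounding errors
    theta2 = math.acos(cos_t2)
    if other_branch:
        theta2 = -theta2
    theta1 = math.atan2(y, x) - math.atan2(l2 * math.sin(theta2), l1 + l2 * math.cos(theta2))
    return theta1, theta2


if __name__ == "__main__":
    # Hypothetical arm: 0.30 m upper link, 0.25 m forearm; hand target at (0.35, 0.20) m.
    t1, t2 = inverse(0.30, 0.25, 0.35, 0.20)
    print(forward(0.30, 0.25, t1, t2))  # approximately (0.35, 0.2)
```

Checking the result with `forward` is a cheap safeguard: whatever joint angles are sent to the arm should reproduce the operator's hand position.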

Conclusions

We have presented details of some emerging technologies and real-life applications that are providing businesses with remarkable opportunities which were previously unthinkable. Businesses are continuously trying to increase their use of new technologies and tools to improve processes and benefit their clients. IoT and associated technologies are now able to provide real-time, ubiquitous processing that can eliminate the need for human surveillance. Similarly, Virtual Reality, Artificial Intelligence and robotics are finding remarkable applications in medical surgery. As discussed, with the help of sensor networks and other associated technologies, we can now predict and mitigate some disasters, such as stampedes. Finally, the growing use of Big Data Analytics is helping businesses and government agencies make smarter decisions to achieve their targets or expectations.


Promises and Pitfalls of Technology



Josephine Wolff. How Is Technology Changing the World, and How Should the World Change Technology? Global Perspectives, 1 February 2021; 2 (1): 27353. doi: https://doi.org/10.1525/gp.2021.27353


Technologies are becoming increasingly complicated and increasingly interconnected. Cars, airplanes, medical devices, financial transactions, and electricity systems all rely on more computer software than they ever have before, making them seem both harder to understand and, in some cases, harder to control. Government and corporate surveillance of individuals and information processing relies largely on digital technologies and artificial intelligence, and therefore involves less human-to-human contact than ever before and more opportunities for biases to be embedded and codified in our technological systems in ways we may not even be able to identify or recognize. Bioengineering advances are opening up new terrain for challenging philosophical, political, and economic questions regarding human-natural relations. Additionally, these large and small devices and systems are increasingly managed through the cloud, so that they are controlled remotely and at a remove from direct human or social oversight. The study of how to make technologies like artificial intelligence or the Internet of Things “explainable” has become its own area of research because it is so difficult to understand how they work or what is at fault when something goes wrong (Gunning and Aha 2019).

This growing complexity makes it more difficult than ever—and more imperative than ever—for scholars to probe how technological advancements are altering life around the world in both positive and negative ways and what social, political, and legal tools are needed to help shape the development and design of technology in beneficial directions. This can seem like an impossible task in light of the rapid pace of technological change and the sense that its continued advancement is inevitable, but many countries around the world are only just beginning to take significant steps toward regulating computer technologies and are still in the process of radically rethinking the rules governing global data flows and exchange of technology across borders.

These are exciting times not just for technological development but also for technology policy: our technologies may be more advanced and complicated than ever, but so, too, are our understandings of how they can best be leveraged, protected, and even constrained. The structures of technological systems are determined largely by government and institutional policies, and those structures have tremendous implications for social organization and agency; they range from open-source, open systems that are highly distributed and decentralized to tightly controlled, closed systems structured according to stricter and more hierarchical models. And just as our understanding of the governance of technology is developing in new and interesting ways, so, too, is our understanding of the social, cultural, environmental, and political dimensions of emerging technologies. We are realizing both the challenges and the importance of mapping out the full range of ways that technology is changing our society, what we want those changes to look like, and what tools we have to try to influence and guide those shifts.

Technology can be a source of tremendous optimism. It can help overcome some of the greatest challenges our society faces, including climate change, famine, and disease. For those who believe in the power of innovation and the promise of creative destruction to advance economic development and lead to better quality of life, technology is a vital economic driver (Schumpeter 1942) . But it can also be a tool of tremendous fear and oppression, embedding biases in automated decision-making processes and information-processing algorithms, exacerbating economic and social inequalities within and between countries to a staggering degree, or creating new weapons and avenues for attack unlike any we have had to face in the past. Scholars have even contended that the emergence of the term technology in the nineteenth and twentieth centuries marked a shift from viewing individual pieces of machinery as a means to achieving political and social progress to the more dangerous, or hazardous, view that larger-scale, more complex technological systems were a semiautonomous form of progress in and of themselves (Marx 2010) . More recently, technologists have sharply criticized what they view as a wave of new Luddites, people intent on slowing the development of technology and turning back the clock on innovation as a means of mitigating the societal impacts of technological change (Marlowe 1970) .

At the heart of fights over new technologies and their resulting global changes are often two conflicting visions of technology: a fundamentally optimistic one that believes humans use it as a tool to achieve greater goals, and a fundamentally pessimistic one that holds that technological systems have reached a point beyond our control. Technology philosophers have argued that neither of these views is wholly accurate and that a purely optimistic or pessimistic view of technology is insufficient to capture the nuances and complexity of our relationship to technology (Oberdiek and Tiles 1995) . Understanding technology and how we can make better decisions about designing, deploying, and refining it requires capturing that nuance and complexity through in-depth analysis of the impacts of different technological advancements and the ways they have played out in all their complicated and controversial messiness across the world.

These impacts are often unpredictable as technologies are adopted in new contexts and come to be used in ways that sometimes diverge significantly from the use cases envisioned by their designers. The internet, designed to help transmit information between computer networks, became a crucial vehicle for commerce, introducing unexpected avenues for crime and financial fraud. Social media platforms like Facebook and Twitter, designed to connect friends and families through sharing photographs and life updates, became focal points of election controversies and political influence. Cryptocurrencies, originally intended as a means of decentralized digital cash, have become a significant environmental hazard as more and more computing resources are devoted to mining these forms of virtual money. One of the crucial challenges in this area is therefore recognizing, documenting, and even anticipating some of these unexpected consequences and providing mechanisms to technologists for how to think through the impacts of their work, as well as possible other paths to different outcomes (Verbeek 2006) . And just as technological innovations can cause unexpected harm, they can also bring about extraordinary benefits—new vaccines and medicines to address global pandemics and save thousands of lives, new sources of energy that can drastically reduce emissions and help combat climate change, new modes of education that can reach people who would otherwise have no access to schooling. Regulating technology therefore requires a careful balance of mitigating risks without overly restricting potentially beneficial innovations.

Nations around the world have taken very different approaches to governing emerging technologies and have adopted a range of different technologies themselves in pursuit of more modern governance structures and processes (Braman 2009) . In Europe, the precautionary principle has guided much more anticipatory regulation aimed at addressing the risks presented by technologies even before they are fully realized. For instance, the European Union’s General Data Protection Regulation focuses on the responsibilities of data controllers and processors to provide individuals with access to their data and information about how that data is being used not just as a means of addressing existing security and privacy threats, such as data breaches, but also to protect against future developments and uses of that data for artificial intelligence and automated decision-making purposes. In Germany, Technische Überwachungsvereine, or TÜVs, perform regular tests and inspections of technological systems to assess and minimize risks over time, as the tech landscape evolves. In the United States, by contrast, there is much greater reliance on litigation and liability regimes to address safety and security failings after-the-fact. These different approaches reflect not just the different legal and regulatory mechanisms and philosophies of different nations but also the different ways those nations prioritize rapid development of the technology industry versus safety, security, and individual control. Typically, governance innovations move much more slowly than technological innovations, and regulations can lag years, or even decades, behind the technologies they aim to govern.

In addition to this varied set of national regulatory approaches, a variety of international and nongovernmental organizations also contribute to the process of developing standards, rules, and norms for new technologies, including the International Organization for Standardization and the International Telecommunication Union. These multilateral and NGO actors play an especially important role in trying to define appropriate boundaries for the use of new technologies by governments as instruments of control for the state.

At the same time that policymakers are under scrutiny both for their decisions about how to regulate technology and for their decisions about how and when to adopt technologies like facial recognition themselves, technology firms and designers have also come under increasing criticism. Growing recognition that the design of technologies can have far-reaching social and political implications means that there is more pressure on technologists to take into consideration the consequences of their decisions early on in the design process (Vincenti 1993; Winner 1980). The question of how technologists should incorporate these social dimensions into their design and development processes is an old one, and debate on these issues dates back to the 1970s, but it remains an urgent and often overlooked part of the puzzle because so many of the supposedly systematic mechanisms for assessing the impacts of new technologies in both the private and public sectors are primarily bureaucratic, symbolic processes rather than carrying any real weight or influence.

Technologists are often ill-equipped or unwilling to respond to the sorts of social problems that their creations have often unwittingly exacerbated, and instead point to governments and lawmakers to address those problems (Zuckerberg 2019). But governments often have few incentives to engage in this area: setting clear standards and rules for an ever-evolving technological landscape can be extremely challenging, enforcing those rules can be a significant undertaking requiring considerable expertise, and the tech sector is a major source of jobs and revenue for many countries that may fear losing those benefits if they constrain companies too much. This indicates not just a need for clearer incentives and better policies for both private- and public-sector entities but also a need for new mechanisms whereby the technology development and design process can be influenced and assessed by people with a wider range of experiences and expertise. If we want technologies to be designed with an eye to their impacts, who is responsible for predicting, measuring, and mitigating those impacts throughout the design process? Involving policymakers in that process in a more meaningful way will also require training them to have the analytic and technical capacity to engage more fully with technologists and to understand the implications of their decisions.

At the same time that tech companies seem unwilling or unable to rein in their creations, many people also fear that these companies wield too much power, in some cases all but replacing governments and international organizations in their ability to make decisions that affect millions of people worldwide and control access to information, platforms, and audiences (Kilovaty 2020). Regulators around the world have begun considering whether some of these companies have become so powerful that they violate the tenets of antitrust laws, but it can be difficult for governments to identify exactly what those violations are, especially in the context of an industry where the largest players often provide their customers with free services. And the platforms and services developed by tech companies are often wielded most powerfully and dangerously not directly by their private-sector creators and operators but instead by states themselves for widespread misinformation campaigns that serve political purposes (Nye 2018).

Since the largest private entities in the tech sector operate in many countries, they are often better poised to implement global changes to the technological ecosystem than individual states or regulatory bodies, creating new challenges to existing governance structures and hierarchies. Just as it can be challenging to provide oversight for government use of technologies, so, too, oversight of the biggest tech companies, which have more resources, reach, and power than many nations, can prove to be a daunting task. The rise of network forms of organization and the growing gig economy have added to these challenges, making it even harder for regulators to fully address the breadth of these companies’ operations (Powell 1990) . The private-public partnerships that have emerged around energy, transportation, medical, and cyber technologies further complicate this picture, blurring the line between the public and private sectors and raising critical questions about the role of each in providing critical infrastructure, health care, and security. How can and should private tech companies operating in these different sectors be governed, and what types of influence do they exert over regulators? How feasible are different policy proposals aimed at technological innovation, and what potential unintended consequences might they have?

Conflict between countries has also spilled over significantly into the private sector in recent years, most notably in the case of tensions between the United States and China over which technologies developed in each country will be permitted by the other and which will be purchased by customers outside those two countries. Competition among countries to develop the best technology is not a new phenomenon, but the current conflicts have major international ramifications and will influence the infrastructure that is installed and used around the world for years to come. Untangling the different factors that feed into these tussles as well as whom they benefit and whom they leave at a disadvantage is crucial for understanding how governments can most effectively foster technological innovation and invention domestically as well as the global consequences of those efforts. As much of the world is forced to choose between buying technology from the United States or from China, how should we understand the long-term impacts of those choices and the options available to people in countries without robust domestic tech industries? Does the global spread of technologies help fuel further innovation in countries with smaller tech markets, or does it reinforce the dominance of the states that are already most prominent in this sector? How can research universities maintain global collaborations and research communities in light of these national competitions, and what role does government research and development spending play in fostering innovation within its own borders and worldwide? How should intellectual property protections evolve to meet the demands of the technology industry, and how can those protections be enforced globally?

These conflicts between countries sometimes appear to challenge the feasibility of truly global technologies and networks that operate across all countries through standardized protocols and design features. Organizations like the International Organization for Standardization, the World Intellectual Property Organization, the United Nations Industrial Development Organization, and many others have tried to harmonize these policies and protocols across different countries for years, but have met with limited success when it comes to resolving the issues of greatest tension and disagreement among nations. For technology to operate in a global environment, there is a need for a much greater degree of coordination among countries and the development of common standards and norms, but governments continue to struggle to agree not just on those norms themselves but even on the appropriate venue and processes for developing them. Without greater global cooperation, is it possible to maintain a global network like the internet or to promote the spread of new technologies around the world to address challenges of sustainability? What might help incentivize that cooperation moving forward, and what could new structures and processes for governance of global technologies look like? Why has the tech industry’s self-regulation culture persisted? Do the same traditional drivers for public policy, such as the politics of harmonization and path dependency in policy-making, still sufficiently explain policy outcomes in this space? As new technologies and their applications spread across the globe in uneven ways, how and when do they create forces of change from unexpected places?

These are some of the questions that we hope to address in the Technology and Global Change section through articles that tackle new dimensions of the global landscape of designing, developing, deploying, and assessing new technologies to address major challenges the world faces. Understanding these processes requires synthesizing knowledge from a range of different fields, including sociology, political science, economics, and history, as well as technical fields such as engineering, climate science, and computer science. A crucial part of understanding how technology has created global change and, in turn, how global changes have influenced the development of new technologies is understanding the technologies themselves in all their richness and complexity: how they work, the limits of what they can do, what they were designed to do, and how they are actually used. Just as technologies themselves are becoming more complicated, so are their embeddings and relationships to the larger social, political, and legal contexts in which they exist. Scholars across all disciplines are encouraged to join us in untangling those complexities.

Josephine Wolff is an associate professor of cybersecurity policy at the Fletcher School of Law and Diplomacy at Tufts University. Her book You’ll See This Message When It Is Too Late: The Legal and Economic Aftermath of Cybersecurity Breaches was published by MIT Press in 2018.


- Online ISSN 2575-7350

- Copyright © 2024 The Regents of the University of California. All Rights Reserved.


How has technology changed - and changed us - in the past 20 years?

Remember this? Image: REUTERS/Stephen Hird

.chakra .wef-1c7l3mo{-webkit-transition:all 0.15s ease-out;transition:all 0.15s ease-out;cursor:pointer;-webkit-text-decoration:none;text-decoration:none;outline:none;color:inherit;}.chakra .wef-1c7l3mo:hover,.chakra .wef-1c7l3mo[data-hover]{-webkit-text-decoration:underline;text-decoration:underline;}.chakra .wef-1c7l3mo:focus,.chakra .wef-1c7l3mo[data-focus]{box-shadow:0 0 0 3px rgba(168,203,251,0.5);} Madeleine Hillyer

.chakra .wef-1nk5u5d{margin-top:16px;margin-bottom:16px;line-height:1.388;color:#2846F8;font-size:1.25rem;}@media screen and (min-width:56.5rem){.chakra .wef-1nk5u5d{font-size:1.125rem;}} Get involved .chakra .wef-9dduvl{margin-top:16px;margin-bottom:16px;line-height:1.388;font-size:1.25rem;}@media screen and (min-width:56.5rem){.chakra .wef-9dduvl{font-size:1.125rem;}} with our crowdsourced digital platform to deliver impact at scale

Stay up to date:, davos agenda.

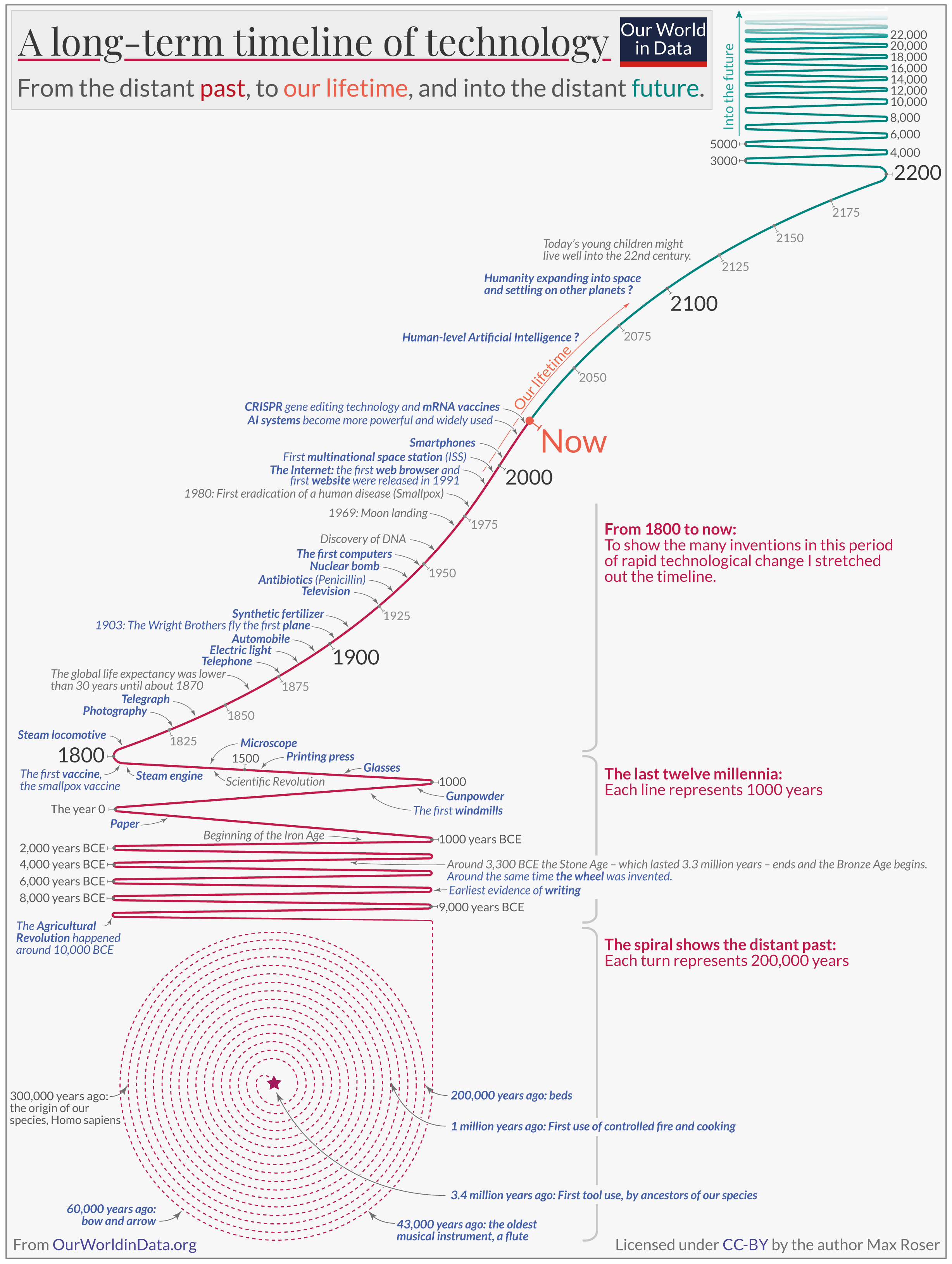

- Since the dotcom bubble burst back in 2000, technology has radically transformed our societies and our daily lives.

- From smartphones to social media and healthcare, here's a brief history of the 21st century's technological revolution.

Just over 20 years ago, the dotcom bubble burst, causing the stocks of many tech firms to tumble. Some companies, like Amazon, quickly recovered their value, but many others were left in ruins. In the two decades since this crash, technology has advanced in many ways.

Many more people are online today than at the start of the millennium. In 2000, just half of Americans had broadband access at home; today, that figure is more than 90%.

This broadband expansion was certainly not just an American phenomenon. Similar growth can be seen on a global scale; while less than 7% of the world was online in 2000, today over half the global population has access to the internet.

Similar trends can be seen in cellphone use. At the start of the 2000s, there were 740 million cellphone subscriptions worldwide. Two decades later, that number has surpassed 8 billion, meaning there are now more cellphone subscriptions in the world than people.


At the same time, technology was also becoming more personal and portable. Apple sold its first iPod in 2001, and six years later it introduced the iPhone, which ushered in a new era of personal technology. These changes led to a world in which technology touches nearly everything we do.

Technology has changed major sectors over the past 20 years, including media, climate action and healthcare. The World Economic Forum’s Technology Pioneers community, which just celebrated its 20th anniversary, gives us insight into how emerging tech leaders have influenced and responded to these changes.

Media and media consumption

The past 20 years have greatly shaped how and where we consume media. In the early 2000s, many tech firms were still focused on expanding communication for work, developing the advanced bandwidth needed for the video streaming and other media consumption that is common today.

Others followed the path of expanding media options beyond traditional outlets. Early Tech Pioneers such as PlanetOut did this by providing an outlet and alternative media source for LGBTQIA communities as more people got online.

After these first new media options, online communities and alternative outlets came the massive growth of social media. In 2004, fewer than 1 million people were on Myspace; Facebook had not even launched. By 2018, Facebook had more than 2.26 billion users, with other sites also growing to hundreds of millions of users.

While these new online communities and communication channels have offered great spaces for alternative voices, their increased use has also brought issues of increased disinformation and polarization.

Today, many tech start-ups are focused on preserving these online media spaces while also mitigating the disinformation that can come with them. Recently, some Tech Pioneers have also approached this issue, including TruePic, which focuses on photo identification, and Two Hat, which is developing AI-powered content moderation for social media.

Climate change and green tech

Many scientists today are looking to technology to lead us towards a carbon-neutral world. Though renewed attention is being given to climate change today, these efforts to find a solution through technology are not new. In 2001, green tech offered a new investment opportunity for tech investors after the crash, leading to a boom in investment in renewable energy start-ups, including Bloom Energy, a Technology Pioneer in 2010.

In the past two decades, tech start-ups have only expanded their climate focus. Many today are focused on initiatives that go far beyond clean energy to slow the impact of climate change.

Several start-ups, including Carbon Engineering and Climeworks from this year’s Technology Pioneers, have started to roll out carbon capture technology. These technologies remove CO2 directly from the air, enabling scientists to alleviate some of the damage from fossil fuels that have already been burned.

Another expanding area for young tech firms today is food systems innovation. Many firms, like Aleph Farms and Air Protein, are creating innovative meat and dairy alternatives that are much greener than their traditional counterparts.

Biotech and healthcare

The early 2000s also saw the culmination of a biotech boom that had started in the mid-1990s. Many firms focused on advancing biotechnologies through enhanced tech research.

An early Technology Pioneer, Actelion Pharmaceuticals was one of these companies. Actelion’s research focused on the endothelium, the single layer of cells separating every blood vessel from the bloodstream. Like many other biotech firms at the time, its focus was on precise disease and treatment research.

While many tech firms today still focus on disease and treatment research, many others have been focusing on healthcare delivery. Telehealth has been on the rise in recent years, with many young tech firms expanding virtual healthcare options. New technologies such as virtual visits and chatbots are being used to deliver healthcare to individuals, especially during Covid-19.

Many companies are also focusing their healthcare tech on patients rather than doctors. For example, Ada, a symptom checker app, was originally designed for doctors’ use but has since shifted its language and interface to prioritize giving patients information about their symptoms. Other companies, like 7 Cups, are focused on offering mental healthcare support directly to their users through their app rather than through existing offices.

The past two decades have seen healthcare tech get much more personal and use tech for care delivery, not just advancing medical research.

The World Economic Forum was the first to draw the world’s attention to the Fourth Industrial Revolution, the current period of unprecedented change driven by rapid technological advances. Policies, norms and regulations have not been able to keep up with the pace of innovation, creating a growing need to fill this gap.

The Forum established the Centre for the Fourth Industrial Revolution Network in 2017 to ensure that new and emerging technologies will help—not harm—humanity in the future. Headquartered in San Francisco, the network launched centres in China, India and Japan in 2018 and is rapidly establishing locally-run Affiliate Centres in many countries around the world.

The global network is working closely with partners from government, business, academia and civil society to co-design and pilot agile frameworks for governing new and emerging technologies, including artificial intelligence (AI), autonomous vehicles, blockchain, data policy, digital trade, drones, internet of things (IoT), precision medicine and environmental innovations.


In the early 2000s, many companies were at the start of their recovery from the bursting dotcom bubble. Since then, we’ve seen a large expansion in the way tech innovators approach areas such as new media, climate change, healthcare delivery and more.

At the same time, we have also seen tech companies rise to the challenge of combating issues that arose from the first wave of innovation, through efforts such as internet content moderation and expanded climate change solutions.

The Technology Pioneers' 2020 cohort marks the 20th anniversary of this community - and looking at the latest awardees can give us a snapshot of where the next two decades of tech may be heading.


License and Republishing

World Economic Forum articles may be republished in accordance with the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International Public License, and in accordance with our Terms of Use.

The views expressed in this article are those of the author alone and not the World Economic Forum.


Science, technology and innovation in a 21st century context

- Published: 27 August 2011

- Volume 44, pages 209–213 (2011)


- John H. Marburger III


This editorial essay was prepared by John H. “Jack” Marburger for a workshop on the “science of science and innovation policy” held in 2009 that was the basis for this special issue. It is published posthumously.

Linking the words “science,” “technology,” and “innovation,” may suggest that we know more about how these activities are related than we really do. This very common linkage implicitly conveys a linear progression from scientific research to technology creation to innovative products. More nuanced pictures of these complex activities break them down into components that interact with each other in a multi-dimensional socio-technological-economic network. A few examples will help to make this clear.

Science has always functioned on two levels that we may describe as curiosity-driven and need-driven, and they interact in sometimes surprising ways. Galileo’s telescope, the paradigmatic instrument of discovery in pure science, emerged from an entirely pragmatic tradition of lens-making for eye-glasses. And we should keep in mind that the industrial revolution gave more to science than it received, at least until the last half of the nineteenth century, when the sciences of chemistry and electricity began to produce serious economic payoffs. The flowering of science during the era we call the Enlightenment owed much to its links with crafts and industry, but as it gained momentum science created its own need for practical improvements. After all, the frontiers of science are defined by the capabilities of instrumentation, that is, of technology. The needs of pure science are a huge but poorly understood stimulus for technologies that have the capacity to be disruptive precisely because these needs do not arise from the marketplace. The innovators who built the World Wide Web on the foundation of the Internet were particle physicists at CERN, struggling to satisfy their unique need to share complex information. Others soon discovered “needs” of which they had been unaware that could be satisfied by this innovation, and from that point the Web transformed the Internet from a tool for the technological elite into a broad platform for a new kind of economy.

Necessity is said to be the mother of invention, but in all human societies, “necessity” is a mix of culturally conditioned perceptions and the actual physical necessities of life. The concept of need, of what is wanted, is the ultimate driver of markets and an essential dimension of innovation. And as the example of the World Wide Web shows, need is very difficult to identify before it reveals itself in a mass movement. Why did I not know I needed a cell phone before nearly everyone else had one? Because until many others had one I did not, in fact, need one. Innovation has this chicken-and-egg quality that makes it extremely hard to analyze. We all know of visionaries who conceive of a society totally transformed by their invention and who are bitter that the world has not embraced their idea. Sometimes we think of them as crackpots, or simply unrealistic about what it takes to change the world. We practical people necessarily view the world through the filter of what exists, and fail to anticipate disruptive change. Nearly always we are surprised by the rapid acceptance of a transformative idea. If we truly want to encourage innovation through government policies, we are going to have to come to grips with this deep unpredictability of the mass acceptance of a new concept. Works analyzing this phenomenon are widely popular, under titles like The Tipping Point by Gladwell (2000) or, more recently, The Black Swan by Taleb (2007), among others.

Whether innovations are adopted and integrated into economies depends on their ability to satisfy some perceived need by consumers, and that perception may be an artifact of marketing, or fashion, or cultural inertia, or ignorance. Some of the largest and most profitable industries in the developed world (entertainment, automobiles, clothing and fashion accessories, health products, children’s toys, grownups’ toys!) depend on perceptions of need that go far beyond the utilitarian and are notoriously difficult to predict. And yet these industries clearly depend on sophisticated and rapidly advancing technologies to compete in the marketplace. Of course, they do not depend only upon technology. Technologies are part of the environment for innovation or, in a popular and very appropriate metaphor, part of the innovation ecology.

This complexity of innovation and its ecology is conveyed in Chapter One of a currently popular best-seller in the United States called Innovation Nation by the American innovation guru Kao (2007), formerly on the faculty of the Harvard Business School:

“I define it [innovation],” writes Kao, “as the ability of individuals, companies, and entire nations to continuously create their desired future. Innovation depends on harvesting knowledge from a range of disciplines besides science and technology, among them design, social science, and the arts. And it is exemplified by more than just products; services, experiences, and processes can be innovative as well. The work of entrepreneurs, scientists, and software geeks alike contributes to innovation. It is also about the middlemen who know how to realize value from ideas. Innovation flows from shifts in mind-set that can generate new business models, recognize new opportunities, and weave innovations throughout the fabric of society. It is about new ways of doing and seeing things as much as it is about the breakthrough idea.” (Kao 2007, p. 19).

This is not your standard government-type definition. Gurus, of course, do not have to worry about leading indicators and predictive measures of policy success. Nevertheless, some policy guidance can be drawn from this high level “definition,” and I will do so later.

The first point, then, is that the structural aspects of “science, technology, and innovation” are imperfectly defined, complex, and poorly understood. There is still much work to do to identify measures, develop models, and test them against actual experience before we can say we really know what it takes to foster innovation. The second point I want to make is about the temporal aspects: all three of these complex activities are changing with time. Science, of course, always changes through the accumulation of knowledge, but it also changes through revolutions in its theoretical structure, through its ever-improving technology, and through its evolving sociology. The technology and sociology of science are currently impacted by a rapidly changing information technology. Technology today flows increasingly from research laboratories but the influence of technology on both science and innovation depends strongly on its commercial adoption, that is, on market forces. Commercial scale manufacturing drives down the costs of technology so it can be exploited in an ever-broadening range of applications. The mass market for precision electro-mechanical devices like cameras, printers, and disk drives is the basis for new scientific instrumentation and also for further generations of products that integrate hundreds of existing components in new devices and business models like the Apple iPod and video games, not to mention improvements in old products like cars and telephones. Innovation is changing too as it expands its scope beyond individual products to include all or parts of systems such as supply chains and inventory control, as in the Wal-Mart phenomenon. Apple’s iPod does not stand alone; it is integrated with iTunes software and novel arrangements with media providers.

With one exception, however, technology changes more slowly than it appears because we encounter basic technology platforms in a wide variety of relatively short-lived products. Technology is like a language that innovators use to express concepts in the form of products and business models that serve (and sometimes create) a variety of needs, some of which fluctuate with fashion. The exception to the illusion of rapid technology change is the pace of information technology, which is no illusion. It has fulfilled Moore’s Law for more than half a century, and it is a remarkable historical anomaly arising from the systematic exploitation of the understanding of the behavior of microscopic matter following the discovery of quantum mechanics. The pace would be much slower without a continually evolving market for the succession of smaller, higher-capacity products. It is not at all clear that market demand will continue to support the increasingly expensive investment in fabrication equipment for each new step up the exponential curve of Moore’s Law. The science is probably available to allow many more capacity doublings if markets can sustain them. Let me digress briefly on this point.

Many science commentators have described the twentieth century as the century of physics and the twenty-first as the century of biology. We now know that is misleading. It is true that our struggle to understand the ultimate constituents of matter has now encompassed (apparently) everything of human scale and relevance, and that the universe of biological phenomena now lies open for systematic investigation and dramatic applications in health, agriculture, and energy production. But there are two additional frontiers of physical science, one already highly productive, the other very intriguing. The first is the frontier of complexity, where physics, chemistry, materials science, biology, and mathematics all come together. This is where nanotechnology and biotechnology reside. These are huge fields that form the core of basic science policy in most developed nations. The basic science of the twenty-first century is neither biology nor physics, but an interdisciplinary mix of these and other traditional fields. Continued development of this domain contributes to information technology and much else. I mentioned two frontiers. The other physical science frontier borders the nearly unexploited domain of quantum coherence phenomena. It is a very large domain and potentially a source of entirely new platform technologies not unlike microelectronics. To say more about this would take me too far from our topic. The point is that nature has many undeveloped physical phenomena to enrich the ecology of innovation and keep us marching along the curve of Moore’s Law if we can afford to do so.

I worry about the psychological impact of the rapid advance of information technology. I believe it has created unrealistic expectations about all technologies and has encouraged a casual attitude among policy makers toward the capability of science and technology to deliver solutions to difficult social problems. This is certainly true of what may be the greatest technical challenge of all time—the delivery of energy to large developed and developing populations without adding greenhouse gases to the atmosphere. The challenge of sustainable energy technology is much more difficult than many people currently seem to appreciate. I am afraid that time will make this clear.

Structural complexities and the intrinsic dynamism of science and technology pose challenges to policy makers, but they seem almost manageable compared with the challenges posed by extrinsic forces. Among these are globalization and the impact of global economic development on the environment. The latter, expressed quite generally through the concept of “sustainability,” is likely to be a component of much twenty-first century innovation policy. Measures of development, competitiveness, and innovation need to include sustainability dimensions to be realistic over the long run. Development policies that destroy economically important environmental systems, contribute to harmful global change, and undermine the natural resource basis of the economy are bad policies. Sustainability is now an international issue because the scale of development and the globalization of economies have environmental and natural resource implications that transcend national borders.

From the policy point of view, globalization is not a new phenomenon. Science has been globalized for centuries, and we ought to be studying it more closely as a model for effective responses to the globalization of our economies. What is striking about science is the strong imperative to share ideas through every conceivable channel to the widest possible audience. If you had to name one chief characteristic of science, it would be empiricism. If you had to name two, the other would be open communication of data and ideas. The power of open communication in science cannot be overestimated. It has established, uniquely among human endeavors, an absolute global standard. And it effectively recruits talent from every part of the globe to labor at the science frontiers. The result has been an extraordinary legacy of understanding of the phenomena that shape our existence. Science is the ultimate example of an open innovation system.

Science practice has received much attention from philosophers, social scientists, and historians during the past half-century, and some of what has been learned holds valuable lessons for policy makers. It is fascinating to me how quickly countries that provide avenues to advanced education are able to participate in world science. The barriers to a small but productive scientific activity appear to be quite low, and whether or not a country participates in science appears to be discretionary. A small scientific establishment, however, will not have significant direct economic impact. Its value at early stages of development is indirect, bringing higher performance standards, international recognition, and peer role models for a wider population. A science program of any size is also a link to the rich intellectual resources of the world scientific community. The indirect benefit of scientific research to a developing country far exceeds its direct benefit, and policy needs to recognize this. It is counterproductive to base support for science in such countries on a hoped-for direct economic stimulus.

Keeping in mind that the innovation ecology includes far more than science and technology, it should be obvious that within a small national economy innovation can thrive on a very small indigenous science and technology base. But innovators, like scientists, do require access to technical information and ideas. Consequently, policies favorable to innovation will create access to education and encourage free communication with the world technical community. Anything that encourages awareness of the marketplace and all its actors on every scale will encourage innovation.

This brings me back to John Kao’s definition of innovation. His vision of “the ability of individuals, companies, and entire nations to continuously create their desired future” implies conditions that create that ability, including most importantly educational opportunity (Kao 2007, p. 19). The notion that “innovation depends on harvesting knowledge from a range of disciplines besides science and technology” implies that innovators must know enough to recognize useful knowledge when they see it, and that they have access to knowledge sources across a spectrum that ranges from news media and the Internet to technical and trade conferences (2007, p. 19). If innovation truly “flows from shifts in mind-set that can generate new business models, recognize new opportunities, and weave innovations throughout the fabric of society,” then the fabric of society must be somewhat loose-knit to accommodate the new ideas (2007, p. 19). Innovation is about risk and change, and deep forces in every society resist both of these. A striking feature of the US innovation ecology is the positive attitude toward failure, an attitude that encourages risk-taking and entrepreneurship.

All this gives us some insight into what policies we need to encourage innovation. Innovation policy is broader than science and technology policy, but the latter must be consistent with the former to produce a healthy innovation ecology. Innovation requires a predictable social structure, an open marketplace, and a business culture amenable to risk and change. It certainly requires an educational infrastructure that produces people with a global awareness and sufficient technical literacy to harvest the fruits of current technology. What innovation does not require is the creation by governments of a system that defines, regulates, or even rewards innovation except through the marketplace or in response to evident success. Some regulation of new products and new ideas is required to protect public health and environmental quality, but innovation needs lots of freedom. Innovative ideas that do not work out should be allowed to die so the innovation community can learn from the experience and replace the failed attempt with something better.

Do we understand innovation well enough to develop policy for it? If the policy addresses very general infrastructure issues such as education and economic and political stability, the answer is perhaps. If we want to measure the impact of specific programs on innovation, the answer is no. Studies of innovation are at an early stage where anecdotal information and case studies, similar to John Kao’s book (or the books on Business Week’s top ten list of innovation titles), are probably the most useful tools for policy makers.

I have been urging increased attention to what I call the science of science policy: the systematic quantitative study of the subset of our economy called science and technology, including the construction and validation of micro- and macro-economic models for S&T activity. Innovators themselves, and those who finance them, need to identify their needs and the impediments they face. Eventually, we may learn enough to create reliable indicators by which we can judge the health of our innovation ecosystems. The goal is well worth the sustained effort that will be required to achieve it.

Gladwell, M. (2000). The tipping point: How little things can make a big difference. Boston: Little, Brown and Company.

Kao, J. (2007). Innovation nation: How America is losing its innovation edge, why it matters, and what we can do to get it back. New York: Free Press.

Taleb, N. N. (2007). The black swan: The impact of the highly improbable. New York: Random House.


Author information

Authors and affiliations.

Stony Brook University, Stony Brook, NY, USA

John H. Marburger III


Additional information

John H. Marburger III—deceased


About this article

Marburger, J.H. Science, technology and innovation in a 21st century context. Policy Sci 44, 209–213 (2011). https://doi.org/10.1007/s11077-011-9137-3


Published: 27 August 2011

Issue Date: September 2011

DOI: https://doi.org/10.1007/s11077-011-9137-3


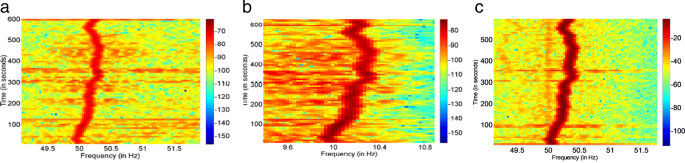

Karunaratne, Indika & Atukorale, Ajantha & Perera, Hemamali. (2011). Surveillance of human- computer interactions: A way forward to detection of users' Psychological Distress. 2011 IEEE Colloquium on Humanities, Science and Engineering, CHUSER 2011. 10.1109/CHUSER.2011.6163779.

June 9, 2023

The Digital Revolution: How Technology is Changing the Way We Communicate and Interact

This article examines the impact of technology on human interaction and explores the ever-evolving landscape of communication. With the rapid advancement of technology, the methods and modes of communication have undergone a significant transformation. This article investigates both the positive and negative implications of this digitalization. Technological innovations, such as smartphones, social media, and instant messaging apps, have provided unprecedented accessibility and convenience, allowing people to connect effortlessly across distances. However, concerns have arisen regarding the quality and authenticity of these interactions. The article explores the benefits of technology, including improved connectivity, enhanced information sharing, and expanded opportunities for collaboration. It also discusses potential negative effects including a decline in in-person interactions, a loss of empathy, and an increase in online anxiety. This article tries to expand our comprehension of the changing nature of communication in the digital age by exposing the many ways that technology has an impact on interpersonal interactions. It emphasizes the necessity of intentional and thoughtful communication techniques to preserve meaningful connections in a society that is becoming more and more reliant on technology.

Introduction:

Technology has significantly transformed our modes of communication and interaction, revolutionizing the way we connect with one another over the past few decades. The COVID-19 pandemic then acted as a catalyst, accelerating this process and forcing us to rely almost entirely on digital tools for socializing, working, and learning. Platforms like social media and video conferencing have emerged in recent years, expanding our options for virtual communication. The impact of these changes on our lives cannot be ignored. In this article, we will delve into the ways in which technology has altered our communication and interaction patterns and explore the consequences of these changes for our relationships, mental well-being, and society.

To gain a deeper understanding of this topic, we conducted interviews and surveys to gather firsthand insights from individuals of various backgrounds. We then compare this firsthand information with the perspectives shared by experts in the field. By drawing on both personal experiences and expert opinions, we seek to provide a comprehensive analysis of how technology influences our interpersonal connections. Through this research, we hope to better understand the complex interactions between technology and people, enabling us to move mindfully and purposefully through the rapidly changing digital environment.

The Evolution of Communication: From Face-to-Face to Digital Connections:

In the realm of communication, we have various mediums at our disposal, such as face-to-face interactions, telephone conversations, and internet-based communication. According to Nancy Baym, an expert in the field of technology and human connections, face-to-face communication is often regarded as the most personal and intimate, while the phone provides a more personal touch than the internet. She explains this in her book Personal Connections in the Digital Age by stating, “Face-to-face is much more personal; phone is personal as well, but not as intimate as face-to-face… Internet would definitely be the least personal, followed by the phone (which at least has the vocal satisfaction) and the most personal would be face-to-face” (Baym 2015). These distinctions suggest that different communication mediums are perceived to have varying levels of effectiveness in conveying emotion and building relationships. This raises questions about the impact of technology on our ability to forge meaningful connections. While the internet offers unparalleled convenience and connectivity, it is essential to recognize its limitations in reproducing the depth of personal interaction found in face-to-face encounters. These limitations may be attributed to the absence of nonverbal cues, such as facial expressions, body language, and tone of voice, which are vital elements in understanding and interpreting emotions accurately.