Articles on Data analytics


For over a century, baseball’s scouts have been the backbone of America’s pastime – do they have a future?

H. James Gilmore, Flagler College and Tracy Halcomb, Flagler College

Robo-advisers are here – the pros and cons of using AI in investing

Laurence Jones, Bangor University and Heather He, Bangor University

AI threatens to add to the growing wave of fraud but is also helping tackle it

Laurence Jones, Bangor University and Adrian Gepp, Bangor University

Twitter’s new data fees leave scientists scrambling for funding – or cutting research

Jon-Patrick Allem, University of Southern California

Insurance firms can skim your online data to price your insurance — and there’s little in the law to stop this

Zofia Bednarz, University of Sydney; Kayleen Manwaring, UNSW Sydney; and Kimberlee Weatherall, University of Sydney

Two years into the pandemic, why is Australia still short of medicines?

Maryam Ziaee, Victoria University

How we communicate, what we value – even who we are: 8 surprising things data science has revealed about us over the past decade

Paul X. McCarthy, UNSW Sydney and Colin Griffith, CSIRO

3 ways for businesses to fuel innovation and drive performance

Grant Alexander Wilson, University of Regina

Sports card explosion holds promise for keeping kids engaged in math

John Holden, Oklahoma State University

Get ready for the invasion of smart building technologies following COVID-19

Patrick Lecomte, Université du Québec à Montréal (UQAM)

David Chase might hate that ‘The Many Saints of Newark’ is premiering on HBO Max – but it’s the wave of the future

Anthony Palomba, University of Virginia

For these students, using data in sports is about more than winning games

Felesia Stukes, Johnson C. Smith University

New data privacy rules are coming in NZ — businesses and other organisations will have to lift their games

Anca C. Yallop, Auckland University of Technology

The value of the Mountain Equipment Co-op sale lies in its customer data

Michael Parent, Simon Fraser University

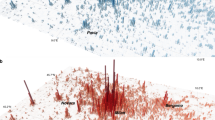

Disasters expose gaps in emergency services’ social media use

Tan Yigitcanlar, Queensland University of Technology; Ashantha Goonetilleke, Queensland University of Technology; and Nayomi Kankanamge, Queensland University of Technology

How much coronavirus testing is enough? States could learn from retailers as they ramp up

Siqian Shen, University of Michigan

Tracking your location and targeted texts: how sharing your data could help in New Zealand’s level 4 lockdown

Jon MacKay, University of Auckland, Waipapa Taumata Rau

How sensors and big data can help cut food wastage

Frederic Isingizwe, Stellenbosch University and Umezuruike Linus Opara, Stellenbosch University

Data lakes: where big businesses dump their excess data, and hackers have a field day

Mohiuddin Ahmed, Edith Cowan University

How big data can help residents find transport, jobs and homes that work for them

Sae Chi, The University of Western Australia and Linda Robson, The University of Western Australia

Related Topics

- Artificial intelligence (AI)

- Data analysis

- Data collection

- Data privacy

- Data science

- Social media



Data and analytics: Why does it matter and where is the impact?

McKinsey is currently conducting global research to benchmark data analytics maturity levels within and across industries. We encourage you to take our 20-minute survey on the topic (http://esurveydesigns.com/wix/p30952257.aspx; individual results are kept confidential) and register to receive results showing your organization’s maturity benchmarked against peers and best practices.

The promise of using analytics to enhance decision-making, automate processes and create new business ventures is well established across industries. In fact, many leading organizations are already recognizing significant impact by leveraging data and analytics to create business value. Our research indicates, however, that maturity often varies by function or sector (or both), based on a number of contributing factors; for example:

- Marketing and Sales: Maturity in marketing and sales analytics tends to be more advanced, at least in the B2C context. Customer segmentation and personalization, social signal mining, and experimentation across channels have become mainstream across a number of industries, including retail, banking/insurance, and utilities. Intensity and sophistication still vary widely, and analytics can offer a significant competitive advantage if multiple analytics domains, such as pricing, loyalty and segmentation, are cleverly combined and integrated.

- Operations: Maturity of advanced analytics in operations tends to be lower. This is usually because opportunities are harder to spot and cross-business domain knowledge is required to create a step change. Also, use cases in operations are often connected with leveraging sensor and equipment data, which can be difficult to effectively expose for analysis. Data and analytics use in operations has traditionally included identification of new oil and gas drilling sites, but has now come to include mining sensor data for predictive maintenance, integrated and demand-driven workforce management and realtime scheduling optimization.

- Data-driven ventures: Only a few firms have started to explore the power of big data and advanced analytics to step outside their current business, either by leveraging internal data or developing analytics insights to offer as a service to customers. Examples include credit card companies providing data-driven customer targeting, or telecom companies selling location data for traffic monitoring and fraud detection. We believe that similar opportunities can be identified in the operations space and provide a competitive difference to those who do it well.

While some leading organizations are realizing great success with the emergence of these new capabilities, most companies are still in an exploration and piloting phase and have not scaled them up. McKinsey’s digital survey in 2014 revealed that while respondents felt that data and analytics would be one of the top categories of digital spending in three years’ time, they were also far more likely to believe that they were currently underinvesting in the space. Additionally, nine out of ten executives claimed that their companies would have a pressing need for digital talent in the next year, and nearly 60 percent of CIOs and CTOs polled thought that the need for data and analytics expertise would be more acute than other talent gaps.

Given the value at stake, how do companies ensure an effective data strategy and recognize impact from the promise of analytics?

Our work helping clients to build robust programs in data analytics suggests that winners have a clear strategy and follow best practices across five key areas:

- Strategy and value: Understanding the business case for pursuing each use case and how it aligns with the company’s overall value is critical to ensure that whatever is built delivers the business impact expected. Additionally, organizations must ensure that data and analytics is high on the senior management agenda and be prepared to invest in talent, data, and technology at scale.

- Talent and organization: While the decision to centralize or federate data and analytics capabilities depends largely on the anticipated use cases, the organizational positioning of any central group and the presence of analytics talent both centrally and in domain-specific roles is critical. Commodity services such as data cleansing or data infrastructure management may be outsourced to free up capacity for more proprietary activities, even as companies leverage capability-building programs to help grow talent organically.

- Governance, access and quality: Analytics leaders ensure that data from disparate systems such as finance, customer, suppliers, and transactions are linked and available across the organization, while also ensuring that proper accountability and policy management techniques are in place and tied to performance metrics. Distribution of reports is often quick and automated, and prominent use is being made of both external, open and unstructured data.

- Technology and tools: The broad availability of appropriate advanced tools for data scientists, power business users, and regular business users is critical to staying ahead of competition. New technologies, such as cloud, high-performance workbenches, and distributed data environments (data lakes) are a key component of successful data and analytics platforms.

- Integration and adoption: A good indication of organizational maturity is how far data and analytics have penetrated the various business units, and how quickly new use cases can be implemented. Leaders in the space are careful to measure effectiveness and to tie incentives and performance metrics to the impact generated through analytics.

While fairly intuitive, all of these factors are difficult to implement effectively, and no single element represents a silver bullet to achieve competitive advantage. Our client work has consistently shown us that the combination of these factors leads to superior maturity, and, in turn, superior decision-making and stronger impact from data and analytics programs.

We are currently building benchmarks on how companies are performing in data and analytics relative to these five key areas. You can contribute by following the link to complete a 20-minute survey (http://esurveydesigns.com/wix/p30952257.aspx); a copy of the results specific to your organization will be made available to participants who register.

Josh Gottlieb is a practice manager in McKinsey's Atlanta office and Matthias Roggendorf is a senior expert in the Berlin office.


Predictions 2022: Data can help address the world's biggest challenges - 5 experts explain how

We need a trusted, global data ecosystem. Image: DCStudio/Freepik.com

Rebecca King


Data can help us tackle our largest societal challenges, including climate change, inequality, global health and economic resilience.

- But how do we ensure that our global data systems are structured to capture the true value of data, not just the financial?

- Business leaders share their perspectives on the real power of data and how it can be unlocked to address the biggest challenges of 2022.

With every advancement in the digital world, we unlock a limitless resource: data. It is both a by-product and a driver of global development that has transformed how we make decisions. Not only do we have increased granularity and accuracy to inform evidence-based decision making, but through AI and machine learning, we enable technology to make decisions on our behalf.

The value of this data is well established in the private sector. Successful businesses have captured this value through increasingly efficient and targeted advertisements and product design, with the global marketing data market worth an estimated $52 billion in 2021. This is significant financially but fails to capture the true productive power of data.


We can better understand how changing temperatures are impacting our environment and predict global weather disasters. We can measure inequalities to inform the policies that can best “close the gap”. We can limit the spread of global disease. And we can hold businesses and governments accountable to the environment and their citizens’ human rights.

We need to use data to empower the masses, not the few. But how do we ensure that our global data systems are structured to capture the true value of data, not just the financial?

Ahead of this year’s Davos Agenda virtual meeting, we invited leaders to share their perspectives on the real power of data and how it can be unlocked to help address the biggest challenges of 2022.

‘Leverage predictive analytics’

Vijay Guntur, Corporate Vice President and Head, Engineering and R&D Services, HCL Technologies

It is anticipated that 2022 will see a proliferation of COVID-19 mutations and that the power of data will be the key to minimizing their impact on the world. Big data and IoT technologies are evolving at an unprecedented pace to enable us to collect, prepare, analyze, anonymize, and share pandemic related data at volumes, and at a velocity, that would have been unimaginable a few years ago. Access to a trusted, global data ecosystem enables healthcare professionals, governments, and big business to leverage predictive analytics, model different scenarios, and refine and redeploy those models as more data becomes available.

We expect that the disruption we have seen in the global supply chain will continue throughout 2022 and that once again the power of data will be the key to relieving much of the stress this has caused. Low-latency data transmission via 5G networks, streaming IoT data, and real-time insights will give demand planners, forecasters, and logistics managers better visibility into the various parts of their supply chains and enable them to react instantly when problems occur.

‘Developing a global talent pool’

Igor Tulchinsky, Founder, Chairman and CEO, WorldQuant

If nothing else, 2021 reinforced the inevitability of uncertainty. Thanks to the growth in data and the increasing power of AI and machine learning, we are now in the age of prediction. We have already seen the promise of prediction in sectors like healthcare, where Weill Cornell Medicine enhanced its machine learning capabilities to predict COVID-19 infections within two hours – much faster than is possible with RT-PCR tests.

In the year ahead, I expect the role of predictive analytics to continue growing across public and private sectors, embedding itself in many aspects of work and life. But, grappling with the growing surge of information requires an increased focus on developing a global talent pool with the right technical skills, unified by advanced, shared goals, to interpret it and realize prediction’s full potential.

The needs of the future present a massive opportunity and maintaining a global mindset will enable new sources of talent to contribute significantly. Organizations are already embracing new ways of work, talent sourcing and development, which will be critical to succeed in the age of prediction. There is tremendous potential for business and society to harness this opportunity and have an exponentially positive impact, globally.

‘Enable data to flow across borders’

Dr. Norihiro Suzuki, Vice President and Executive Officer, Chief Technology Officer, General Manager of the Research & Development Group and General Manager of the Corporate Venturing Office, Hitachi, Ltd.

The answer to many of our unsolved problems lies hidden in the almost unfathomable trove of data in existence. This wealth of knowledge can accelerate solutions from climate change to urbanization and education. For example, through work with The Centre for the Fourth Industrial Revolution (C4IR) Japan and the G20 Smart Cities Alliance, we are harnessing data to create safer, viable, and sustainable cities.

However, a lack of coordination in data governance and regulation is restricting international data flows: each country has only a fraction of the information it needs to effectively tackle global challenges. We need to enable data to flow across borders by building trust between business and consumers, aligning regulations across jurisdictions, and having governments and large organizations form partnerships to support small and medium enterprises.

There is a view that technology is the accelerator and governance the brake on innovation, but the truth is that they are two wheels on either side supporting the same innovation vehicle. Taking “trust” and “governance” into consideration from the design phase will accelerate the implementation of technology and innovation in society.

‘Inclusive and responsible solutions’

Crystal Rugege, Managing Director, The Centre for the Fourth Industrial Revolution (C4IR) Rwanda

The last two years of the COVID-19 pandemic have amplified the critical role of data and technology in solving the profoundly complex and highly dynamic challenges of our time. As we look forward, we must prioritize building a comprehensive global data ecosystem that balances privacy rights, socio-economic development, and technological advancement. This calls for agile and interoperable data governance frameworks that provide a spectrum of instruments from policies to regulations that can adapt over time as new thinking evolves.


Furthermore, we need open, high-quality data sets to create inclusive and responsible solutions that leverage machine learning, and other emerging technologies, to enhance our ability to deliver at scale. Finally, a global multi-stakeholder approach will be imperative in building the hard and soft infrastructure required to facilitate cross-border data flows and the circulation of knowledge to build more resilient economies and more equitable societies.

‘Change the fate of the ocean’

Kimberly Mathisen, CEO, The Centre for the Fourth Industrial Revolution (C4IR) Ocean

The old slogan “if you can't measure it, you can't manage it” has never been more relevant. Industry 4.0 technology will allow us to manage and measure in ways we are only just beginning to grasp.

By sharing ocean data, we can change the fate of the ocean by unleashing the power of data, technology and collaboration. We see strong indications the world is getting ready to share more ocean data. That is the starting point for data to become powerful, accelerating good solutions for more sustainable blue foods, more renewable energy sources, and greener transportation, a few areas where the power of data will benefit the ocean.

Achieving ocean sustainability requires transformative solutions that are based on data and science. One concrete example of benefits is our Ship Emissions Tracker, developed with NOA Ignite and Microsoft. Through open-source data combined with the renowned ICCT emission algorithm, the Tracker makes it possible to estimate the greenhouse gas footprint of each or all of 250,000 vessels in the global merchant fleet.

This provides compelling insights for progressive leaders in the shipping industry with targets to reduce emissions by at least 50% by 2050, and the end customers who want to purchase greener transport.
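The Tracker's own method (the ICCT algorithm) is not described here. As a rough illustration of how activity-based ship-emission estimates generally work, the sketch below assumes the widely used propeller (cube) law for engine load, a hypothetical specific fuel consumption of 185 g/kWh, and a carbon factor of 3.114 t CO2 per t of fuel, the IMO default commonly used for heavy fuel oil. The vessel particulars and voyage legs are made up; this is not the Tracker's implementation.

```python
def voyage_co2_tonnes(mcr_kw, design_speed_kn, speeds_kn, hours,
                      sfc_g_per_kwh=185.0, carbon_factor=3.114):
    """Rough CO2 estimate (tonnes) for a voyage split into constant-speed legs.

    mcr_kw: main-engine maximum continuous rating (assumed known per vessel)
    design_speed_kn: speed at which the engine runs at 100 % MCR (assumption)
    speeds_kn, hours: observed speed and duration of each leg, e.g. from AIS data
    sfc_g_per_kwh: hypothetical specific fuel consumption
    carbon_factor: t CO2 per t fuel (3.114 is the usual IMO value for HFO)
    """
    total = 0.0
    for v, h in zip(speeds_kn, hours):
        # Propeller law: engine load scales with the cube of relative speed.
        load = min((v / design_speed_kn) ** 3, 1.0)
        energy_kwh = mcr_kw * load * h
        fuel_t = energy_kwh * sfc_g_per_kwh / 1e6  # grams -> tonnes
        total += fuel_t * carbon_factor
    return total

# Hypothetical vessel: 10 MW engine, 14-knot design speed, one day at full speed.
print(round(voyage_co2_tonnes(10_000, 14.0, [14.0], [24.0]), 2))
```

Because of the cube law, halving the speed over the same number of hours cuts the estimate to one eighth, which is one reason such trackers pair engine models with observed speed data rather than distance alone.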

The power of ocean data to improve ocean health and wealth is immense. But for data to become powerful, sharing ocean data is the first step.


Global analysis of large-scale chemical and biological experiments

Research in the life sciences is increasingly dominated by high-throughput data collection methods that benefit from a global approach to data analysis. Recent innovations that facilitate such comprehensive analyses are highlighted. Several developments enable the study of the relationships between newly derived experimental information, such as biological activity in chemical screens or gene expression studies, and prior information, such as physical descriptors for small molecules or functional annotation for genes. The way in which global analyses can be applied to both chemical screens and transcription profiling experiments using a set of common machine learning tools is discussed.

Introduction

Research in the life sciences has become dominated by high-throughput data collection methods. It is now common to screen many thousands or millions of small molecules in miniaturized biological tests, such as protein-targeted assays or cell-based assays [1•]. In addition, it is common to perform microarray-based transcription profiling, which involves the simultaneous hybridization of thousands of DNA sequences to spatially arrayed targets [2]. An emerging challenge is the analysis and integration of the large datasets generated by these disparate high-throughput techniques.

Until recently, only a few genes or compounds postulated in advance to be modulators of a phenotype or to have activity of interest were selected for study. High-throughput methods now permit use of a hypothesis-generating strategy in which large libraries of genes or chemicals are tested for biological effects of interest. One relies on the large size and diversity of the initial collection to yield active genes or compounds rather than prior knowledge of the screening candidates or the biological processes being studied. This strategy uncovers a large and varied set of active compounds or genes that can then be studied with a targeted, hypothesis-driven approach.
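A hypothesis-generating screen ends with picking the actives out of a large library. One common way to do this, assumed here rather than taken from the text, is to score each compound by a robust z-score (distance from the median in units of scaled median absolute deviation, so that strong hits do not inflate the spread) and flag those above a cut-off. The readouts and the cut-off `k = 3` below are hypothetical.

```python
from statistics import median

def robust_z_scores(values):
    """Robust z-scores: distance from the median in units of scaled MAD."""
    med = median(values)
    mad = median(abs(v - med) for v in values)
    scale = 1.4826 * mad  # makes MAD comparable to a standard deviation for normal data
    if scale == 0:
        raise ValueError("MAD is zero; robust z-scores are undefined")
    return [(v - med) / scale for v in values]

def call_hits(readouts, k=3.0):
    """Return compound IDs whose robust z-score exceeds k (hypothetical cut-off)."""
    ids = list(readouts)
    scores = robust_z_scores([readouts[i] for i in ids])
    return [cid for cid, z in zip(ids, scores) if z > k]

# Made-up assay readouts (e.g. % inhibition) for a tiny illustrative library.
readouts = {"cpd1": 2.0, "cpd2": 5.0, "cpd3": 3.5, "cpd4": 4.0, "cpd5": 48.0,
            "cpd6": 1.0, "cpd7": 6.0, "cpd8": 3.0, "cpd9": 55.0, "cpd10": 4.5}
print(call_hits(readouts))  # → ['cpd5', 'cpd9']
```

The flagged compounds are then the starting point for the targeted, hypothesis-driven follow-up described above.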

Ideally, the dataset from each new high-throughput experiment is interpreted in the context of all previous results. It then becomes part of the context in which all future screens are analyzed. Building on previous results is not new, but doing so takes on a new level of importance and complexity when datasets are vast and involve extremely inter-related information, and the relevant prior experimental data cannot be stored and organized in the mind of one scientist. We use the term ‘global analysis’ to refer to an emphasis on greater integration and analysis of data from all sources.

Challenges involved in the global analysis of experimental data are illustrated by the new fields of chemical genetics and chemical genomics [1•]. By analogy to classical genetics, chemical genetics uses small molecules in place of mutations as modifiers of protein function. Small molecules that modulate a process or phenotype of interest are identified through large-scale screening and serve as probes of the mechanisms underlying the biological process. Chemical genetics, like other large-scale screening approaches, integrates information from several large datasets. The activity profile of a library of compounds in a particular assay is measured and correlated with structural and chemical properties of the compounds, as well as previously documented biological activities. Chemical genomics involves the integration of chemical and genomic information and technologies. One example of the challenges of a chemical genomic approach is the integration and analysis of both transcription profiling and chemical screening data.
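The simplest version of correlating an activity profile with compound properties is to compute, for each descriptor, its correlation with activity across the library. The sketch below uses Pearson correlation as one illustrative choice (real analyses typically use many descriptors and multivariate methods); the compound IDs, descriptor names and values are made up.

```python
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length (at least 2 values)")
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        raise ValueError("a constant sequence has no defined correlation")
    return cov / (sx * sy)

def rank_descriptors(activity, descriptor_table):
    """Rank descriptors by |r| against one assay's activity profile, strongest first.

    activity: compound ID -> measured activity
    descriptor_table: descriptor name -> {compound ID -> value}
    """
    ids = sorted(activity)
    ys = [activity[i] for i in ids]
    scores = {name: pearson([values[i] for i in ids], ys)
              for name, values in descriptor_table.items()}
    return sorted(scores.items(), key=lambda kv: abs(kv[1]), reverse=True)

# Made-up activity profile and two descriptors for five compounds.
activity = {"c1": 1.0, "c2": 2.0, "c3": 3.0, "c4": 4.0, "c5": 5.0}
descriptor_table = {
    "logP": {"c1": 0.5, "c2": 1.0, "c3": 1.5, "c4": 2.0, "c5": 2.5},
    "ring_count": {"c1": 2, "c2": 2, "c3": 1, "c4": 1, "c5": 0},
}
for name, r in rank_descriptors(activity, descriptor_table):
    print(f"{name}: r = {r:+.3f}")
```

A strong correlation only flags a descriptor worth examining; it does not by itself establish a structure-activity relationship.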

We will review work reported primarily within the last year that is applicable to global analyses of the properties of both small molecules and genes, focusing on: (i) selection and evaluation of physical descriptors for small molecules; (ii) new applications of machine learning algorithms; and (iii) novel approaches for analyzing microarray-based transcription profiling data.

Selecting chemical entities to screen

We restrict our discussion of chemical screens to low molecular weight organic molecules as these compounds are of particular interest in drug discovery efforts and in biological research. Small molecule screens are preferred for drug discovery because the resulting lead compounds can be more easily developed into orally available pharmaceuticals. Many of the tools for global analyses that we describe can also be applied to screens involving peptide, RNA, DNA or protein reagents.

The problem of selecting compounds to screen is a difficult one. The total number of possible organic compounds increases with molecular weight; thus, without a defined molecular weight cut-off, there is an infinite number of possible compounds. Published estimates of the number of theoretical small-molecule drugs range as high as 10^66, which is close to the number of atoms in the universe [3].

One strategy for selecting compounds for screening is to purchase or make a representative set of molecules based on physical properties or functional groups. This approach amounts to an attempt to select an optimally diverse subset of the obtainable compounds for an initial screen. Jorgensen et al., for example, developed a method for evaluating the diversity of a compound collection using common subgraphs or substructural elements [4]. Xu et al., on the other hand, developed a drug-like index to aid the selection of compounds for screening; the index was trained on 4836 compounds from the Comprehensive Medicinal Chemistry database [5]. Reynolds et al. evaluated two stochastic sampling algorithms for their ability to select both diverse and representative subsets of a chemical library space [6].
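To make "optimally diverse subset" concrete, the sketch below uses greedy MaxMin picking, a widely used diversity-selection heuristic that is not one of the specific methods cited above. It assumes each compound is encoded as a set of "on" fingerprint bits and uses Tanimoto distance (1 minus Tanimoto similarity); the fingerprints are made up.

```python
def tanimoto(a, b):
    """Tanimoto (Jaccard) similarity between two sets of 'on' fingerprint bits."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)

def maxmin_pick(fps, n, seed):
    """Greedy MaxMin: repeatedly add the compound farthest from everything picked so far."""
    picked = [seed]
    # For each remaining candidate: distance to its nearest already-picked compound.
    nearest = {cid: 1.0 - tanimoto(fp, fps[seed]) for cid, fp in fps.items() if cid != seed}
    while len(picked) < n and nearest:
        best = max(nearest, key=nearest.get)  # ties go to the first candidate inserted
        picked.append(best)
        del nearest[best]
        for cid in nearest:
            d = 1.0 - tanimoto(fps[cid], fps[best])
            if d < nearest[cid]:
                nearest[cid] = d
    return picked

# Hypothetical fingerprints: A/B and C/D are near-duplicates, E is unlike the rest.
fps = {
    "A": frozenset({1, 2, 3, 4}),
    "B": frozenset({1, 2, 3, 5}),
    "C": frozenset({10, 11, 12}),
    "D": frozenset({10, 11, 13}),
    "E": frozenset({20, 21}),
}
print(maxmin_pick(fps, 3, seed="A"))  # → ['A', 'C', 'E']
```

Picking three compounds skips the near-duplicates B and D, which is the intended behaviour of a diversity-driven selection.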

Much effort has also focused on exploring and quantitating the notion of molecular complexity and determining the appropriate level of complexity for small molecules used in high-throughput screens. Barone and Chanon refined a quantitative index of complexity that uses the number and size of the rings in the smallest set of smallest rings and the connectivity of each atom [7•]. Alternatively, complexity can be defined as the number of interactive domains contained in a molecule. A molecule with low complexity has fewer sites of interaction with a target than a molecule with greater complexity. Hann et al. devised a simple model in which complex molecules are more selective than simple compounds and, therefore, yield fewer hits in primary screens [8•]. This model predicts an optimal level of complexity for compounds used in primary screens as the result of a trade-off between sufficient affinity for detection and sufficient promiscuity to yield a reasonable number of hits. This model is consistent with recent analyses affirming that successful lead compounds are generally less complex than the resulting drugs [8•, 9•].
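The trade-off can be made concrete with a toy calculation in the spirit of Hann et al.'s model; it is not their published model. It assumes ligands and binding sites are random binary strings, that a ligand of complexity L matches a site at a given alignment with probability 2^-L (alignments treated as independent, an approximation), and a hypothetical logistic curve for the chance that a match is strong enough to detect. The site length of 12 and the curve's midpoint are illustrative choices.

```python
from math import exp

def p_match(ligand_len, site_len):
    """Approximate chance that a random binary ligand matches somewhere on a random site.

    Each alignment matches with probability 2**-L; treating the alignments as
    independent is an approximation, since overlapping alignments are correlated.
    """
    positions = site_len - ligand_len + 1
    return 1.0 - (1.0 - 2.0 ** -ligand_len) ** positions

def p_measurable(ligand_len, midpoint=4.0):
    """Hypothetical logistic: more interactions -> higher affinity -> easier to detect."""
    return 1.0 / (1.0 + exp(-(ligand_len - midpoint)))

def useful_hit_probability(ligand_len, site_len=12):
    """Chance of a hit that both matches the site and is strong enough to measure."""
    return p_match(ligand_len, site_len) * p_measurable(ligand_len)

site_len = 12
curve = {L: useful_hit_probability(L, site_len) for L in range(1, site_len + 1)}
best = max(curve, key=curve.get)
print(best)  # → 4
```

Simple ligands match often but bind too weakly to detect, and complex ligands bind strongly but rarely match, so the product peaks at an intermediate complexity; the exact peak depends entirely on the assumed parameters.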

Given the virtually unlimited sources of small molecules, there has been interest in identifying characteristics of small molecules that are useful for drugs and in creating models that predict the probability that a given compound will be able to function as a drug (vide infra). It is difficult to evaluate the performance of these predictive models because of the great variability in crucial factors, such as the choice of the training sets of compounds and the choice of descriptors that define the actual criteria for discrimination. Furthermore, all empirically derived predictive models are essentially both interpolative and extrapolative. Models that are better at assigning close structural analogs to members of the training set (interpolation) may be worse at generalizing more abstract properties to novel structures (extrapolation), and vice versa. Thus, one must beware of inferring the overall performance of a predictive model from too limited a set of test compounds.
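One practical consequence is to report a model's performance separately for test compounds that resemble the training set and for those that do not. A minimal sketch, assuming compounds are encoded as sets of fingerprint bits and using nearest-neighbour Tanimoto similarity with a hypothetical 0.5 cut-off to separate "interpolation" from "extrapolation" cases; the data are made up.

```python
def tanimoto(a, b):
    """Tanimoto (Jaccard) similarity between two sets of 'on' fingerprint bits."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)

def split_by_applicability(train_fps, test_fps, threshold=0.5):
    """Split test compounds by similarity to their nearest training-set neighbour.

    Returns (interpolation_ids, extrapolation_ids); `threshold` is a hypothetical cut-off.
    """
    interpolation, extrapolation = [], []
    for cid, fp in test_fps.items():
        nearest = max(tanimoto(fp, t) for t in train_fps.values())
        (interpolation if nearest >= threshold else extrapolation).append(cid)
    return interpolation, extrapolation

train_fps = {"t1": {1, 2, 3, 4}, "t2": {5, 6, 7}}
test_fps = {"q1": {1, 2, 3}, "q2": {8, 9}, "q3": {5, 6, 7, 8}}
print(split_by_applicability(train_fps, test_fps))  # → (['q1', 'q3'], ['q2'])
```

A test set that falls almost entirely in the first group says little about how the model handles novel structures.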

Nonetheless, several efforts at discriminating drugs from non-drugs have been reported recently. Ertl et al. used polar atom surface area to predict the extent to which small molecules exhibit a single property of drug transport (i.e., bioavailability) [10]. Anzali et al. used chemical descriptors consisting of multilevel neighborhoods of atoms to discriminate between drugs and non-drugs with some success. Their training and testing sets consisted of 5000 compounds from the World Drug Index and 5000 compounds from the Available Chemicals Directory (ACD) [11]. Muegge et al. developed a simple functional group filter to discriminate between drugs and non-drugs using both the Comprehensive Medicinal Chemistry and MACCS-II Drug Data Report (MDDR) databases for drugs and the ACD for non-drugs [12]. Frimurer et al. used a feed-forward neural network with two-dimensional (2D) descriptors based on atom types to classify compounds from the MDDR and ACD as drug-like or non-drug-like, respectively. They reported 88% correct assignment of a subset of each library that had been excluded from the training set. They also tested their model with a different library and claimed generalizability to compounds structurally dissimilar to those in the training set [13].
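Figures such as "88% correct assignment" are fractions of held-out compounds whose predicted class matches their reference label (drug database versus ACD). The sketch below shows that bookkeeping for a functional-group filter; the group list and the "at least two polar groups" rule are hypothetical and are not Muegge et al.'s published filter, and the four test compounds are made up.

```python
from dataclasses import dataclass

@dataclass
class Compound:
    name: str
    groups: dict   # functional-group name -> count (hypothetical encoding)
    is_drug: bool  # reference label, e.g. drug database (True) vs. ACD (False)

# Hypothetical rule: call a compound drug-like if at least MIN_GROUPS of these are present.
POLAR_GROUPS = ("amine", "amide", "hydroxyl", "carboxylic_acid")
MIN_GROUPS = 2

def passes_filter(groups):
    present = sum(1 for g in POLAR_GROUPS if groups.get(g, 0) > 0)
    return present >= MIN_GROUPS

def held_out_accuracy(test_set):
    """Fraction of held-out compounds whose predicted label matches the reference label."""
    if not test_set:
        raise ValueError("empty test set")
    correct = sum(passes_filter(c.groups) == c.is_drug for c in test_set)
    return correct / len(test_set)

# Compounds excluded from any rule-tuning step.
test_set = [
    Compound("d1", {"amine": 1, "amide": 1}, True),
    Compound("d2", {"hydroxyl": 2, "carboxylic_acid": 1}, True),
    Compound("n1", {}, False),
    Compound("n2", {"amine": 1, "hydroxyl": 1}, False),  # misclassified as drug-like
]
print(f"{held_out_accuracy(test_set):.0%} correct")  # → 75% correct
```

As the preceding paragraph warns, such a number is only as informative as the held-out set is representative.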

Drug versus non-drug comparisons emphasize characteristics common to all drugs over those characteristics specific to a particular receptor. Drugs share a number of general characteristics, such as target-binding affinity and the ability to permeate into cells, and they must also have favorable absorption, distribution, metabolism and excretion (ADME) properties. Models that discriminate drugs from non-drugs tend to select for ADME properties rather than properties that correlate with cellular biological activity. If one is interested simply in cellular biological activity rather than the full complement of required drug characteristics, a correspondingly appropriate compound training set must be selected. For example, in chemical genetic approaches, compound libraries with enriched protein-binding affinity are valuable, whereas compounds with favorable ADME properties have little added value.

Finally, it has been noted that many natural products do not conform to the canonical rules for selecting drug-like compounds. Moreover, many natural products have been developed directly as drugs without the need for significant (or any) analog synthesis. This observation has inspired a new strategy: synthesizing natural-product-like compounds through combinatorial, diversity-oriented syntheses [ 14• , 15• ].

Descriptors

For comparisons that involve molecular properties, the structural, physicochemical, and/or biological properties of the molecules need to be represented in a consistent form to permit direct comparison. A standardized representation of a molecular feature is referred to as a ‘descriptor’. The choice of descriptors plays a crucial role in the analysis of chemical screening data. A major challenge in descriptor analyses is the identification of the smallest, most easily and reproducibly calculated set of descriptors that retains all the information required to make the distinctions and comparisons of interest. Here, we discuss some general considerations concerning descriptor choice, and highlight some recent developments.

Chemical descriptors

The compounds in a database are normally identified by their 2D structural representations, which consist of a list of the constituent atoms, their interconnectivity and sometimes their relevant stereochemistry. Aside from experimental data, these 2D representations of the molecular structure typically contain all the available information distinguishing the compounds in the library. For each compound, a common set of structural/physical/chemical descriptors is generated from these 2D structures. Choosing this set of descriptors amounts to defining the ‘chemical space’ spanned by all possible descriptor representations. A correlation between regions in this chemical space and bioactivity is assumed to arise from the binding of the chemical to specific biological targets. Here, we concentrate on the case in which there is no specific knowledge of the presumed binding sites and there is a purely empirical relationship between structure and activity.
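As a minimal sketch of how simple descriptors can be read directly off a 2D structure, the Python below assumes a hypothetical hydrogen-suppressed connection table: a list of element symbols plus a list of `(i, j, bond_order)` tuples. Real cheminformatics toolkits parse formats such as SMILES or molfiles and compute far richer descriptor sets; the function name and descriptor keys here are illustrative only.

```python
from collections import Counter


def simple_descriptors(atoms, bonds):
    """Compute a few simple descriptors from a 2D connection table.

    atoms: element symbols indexed by atom number (hydrogens suppressed).
    bonds: (i, j, order) tuples, where i and j index into `atoms`.
    This representation is a hypothetical one chosen for illustration.
    """
    element_counts = Counter(atoms)
    degree = Counter()
    for i, j, _order in bonds:
        degree[i] += 1
        degree[j] += 1
    return {
        "heavy_atoms": len(atoms),
        "n_C": element_counts["C"],
        "n_N": element_counts["N"],
        "n_O": element_counts["O"],
        "n_bonds": len(bonds),
        "n_double_bonds": sum(1 for _, _, order in bonds if order == 2),
        # Branch points: atoms bonded to three or more heavy atoms.
        "n_branch_points": sum(1 for d in degree.values() if d >= 3),
    }


# Acetic acid, CH3-C(=O)-OH, with hydrogens suppressed.
acetic_acid = simple_descriptors(
    atoms=["C", "C", "O", "O"],
    bonds=[(0, 1, 1), (1, 2, 2), (1, 3, 1)],
)
print(acetic_acid)
```

Every compound in a library is mapped to the same fixed set of keys, which is what makes the resulting descriptor vectors directly comparable.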

There is a tremendous range in both the complexity and the reliability of descriptors. Simple descriptors, such as atom counts, may be obtained directly and reliably from the 2D structural representation. At the other extreme of both complexity and reliability are three-dimensional (3D) descriptors, which require 3D geometry optimization and provide no assurance that the resulting conformation is relevant in vivo. A widely varying number of descriptor dimensions has been used to describe chemical libraries, but all of these representations involve a reduction in dimensionality and, thus, a loss of information relative to the original representation. Removing information that does not distinguish molecules by the properties of interest (eg, bioactivity) reduces both the computational cost of computing and manipulating the descriptor representations and the ‘noise’ contributed by descriptors that do not bear on the distinction of interest. One family of widely used descriptors consists of database hash keys, which were originally designed to filter compounds quickly in substructure searches. Although experience shows that these keys are unreliable when used alone to represent compounds, they have proven useful in conjunction with other descriptors [ 16• , 17• , 18 ].
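The original purpose of hash keys can be sketched as a substructure pre-screen. In this hypothetical scheme, each feature (an element symbol or a bonded element pair) is hashed into a fixed-width bit vector. If a query is a substructure of a molecule, every query feature also occurs in the molecule, so every query bit must be set in the molecule's key; molecules that fail this bit test can be discarded before the expensive atom-by-atom match. The feature set, the 64-bit width and the use of CRC32 are assumptions of this sketch, not any particular database's key definition.

```python
import zlib

# Hypothetical feature scheme: element symbols plus bonded element pairs.
BOND_SYMBOLS = {1: "-", 2: "=", 3: "#"}


def structural_key(atoms, bonds, n_bits=64):
    """Hash element and bonded-pair features of a 2D structure into an int bit vector."""
    features = set(atoms)
    for i, j, order in bonds:
        a, b = sorted((atoms[i], atoms[j]))
        features.add(f"{a}{BOND_SYMBOLS[order]}{b}")
    key = 0
    for feature in features:
        # CRC32 is deterministic across runs, unlike Python's salted str hash.
        key |= 1 << (zlib.crc32(feature.encode()) % n_bits)
    return key


def passes_screen(query_key, molecule_key):
    """True if the molecule sets every bit the query sets (a necessary condition only)."""
    return query_key & molecule_key == query_key


carbonyl = structural_key(["C", "O"], [(0, 1, 2)])
acetic_acid = structural_key(["C", "C", "O", "O"], [(0, 1, 1), (1, 2, 2), (1, 3, 1)])
print(passes_screen(carbonyl, acetic_acid))  # True: acetic acid contains C=O
```

Hash collisions can let a molecule pass the screen without containing the query, so a passing molecule still needs a full substructure match; a molecule that fails can be rejected without one.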

Considerable effort has been devoted to determining the importance of 3D (conformational) information relative to more simply and reliably obtained 2D information, but the results seem to be highly dependent on the details of the analysis and the nature of the correlation being sought. 3D conformational analysis is generally avoided in the interest of computational speed and reproducibility. Estrada et al found a significant correlation between 2D topological indices and the dihedral angle in a series of alkylbiphenyls, demonstrating that 3D properties may be implicitly represented without resorting to geometry optimization [ 19 ]. In addition, Ertl found that 2D topological information was sufficient to calculate a molecular surface polar area descriptor that was essentially identical to the value obtained with the comparable 3D calculation [ 10 ]. One limitation of topological descriptors is that they cannot distinguish between stereoisomers. To help address this problem, Golbraikh et al [ 20 ] and Lukovits and Linert [ 21 ] have introduced interesting ways of combining chirality with 2D topological information.
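A classic example of a 2D topological index is the Wiener index: the sum of shortest-path (bond-count) distances over all pairs of heavy atoms. It is used here only to illustrate the class; it is not necessarily one of the indices used in the studies cited above. The sketch computes it by breadth-first search on a hydrogen-suppressed graph given as a hypothetical list of bonded atom-index pairs. Because it depends only on connectivity, it separates constitutional isomers such as n-butane and isobutane but, like other purely topological descriptors, gives identical values for stereoisomers.

```python
from collections import deque


def wiener_index(n_atoms, bonds):
    """Sum of topological distances over all unordered pairs of atoms.

    bonds: (i, j) atom-index pairs (a hypothetical input format).
    """
    adjacency = [[] for _ in range(n_atoms)]
    for i, j in bonds:
        adjacency[i].append(j)
        adjacency[j].append(i)
    total = 0
    for source in range(n_atoms):
        # Breadth-first search gives bond-count distances from `source`.
        dist = [-1] * n_atoms
        dist[source] = 0
        queue = deque([source])
        while queue:
            u = queue.popleft()
            for v in adjacency[u]:
                if dist[v] < 0:
                    dist[v] = dist[u] + 1
                    queue.append(v)
        total += sum(d for d in dist if d > 0)  # skip self and unreachable atoms
    return total // 2  # every pair was counted from both ends


n_butane = wiener_index(4, [(0, 1), (1, 2), (2, 3)])
isobutane = wiener_index(4, [(0, 1), (1, 2), (1, 3)])
print(n_butane, isobutane)  # 10 9
```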

The descriptors chosen to describe a compound library may be very different from one another with respect to their range and distribution. Godden and Bajorath used measures derived from Shannon entropy to quantify the information content of each descriptor within a compound library. They extended this method to compare the distributions of a descriptor between different libraries [ 22• ].
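The idea of measuring a descriptor's information content with Shannon entropy can be sketched as follows: bin the descriptor's values across the library, treat the bin occupancies as probabilities p_i, and compute H = -Σ p_i log2 p_i. Dividing by log2 of the number of bins gives a value between 0 (all compounds in one bin) and 1 (compounds spread evenly). The equal-width binning and the default of 10 bins are assumptions of this sketch, not necessarily the choices made by Godden and Bajorath.

```python
import math
from collections import Counter


def shannon_entropy(values, n_bins=10):
    """Shannon entropy (bits) of a descriptor's distribution over equal-width bins.

    Equal-width binning is an assumption of this sketch.
    """
    lo, hi = min(values), max(values)
    if hi == lo:
        return 0.0  # a constant descriptor carries no information
    width = (hi - lo) / n_bins
    # The maximum value would land in bin n_bins, so clamp it into the last bin.
    occupancy = Counter(min(int((v - lo) / width), n_bins - 1) for v in values)
    n = len(values)
    return -sum((c / n) * math.log2(c / n) for c in occupancy.values())


def scaled_entropy(values, n_bins=10):
    """Entropy divided by its maximum, log2(n_bins), giving a value in [0, 1]."""
    return shannon_entropy(values, n_bins) / math.log2(n_bins)


print(scaled_entropy([0, 0, 0, 0, 0, 0, 0, 0, 0, 9]))  # mostly one value: about 0.14
print(scaled_entropy(list(range(10))))                  # evenly spread: approximately 1.0
```

Comparing the binned distributions of the same descriptor in two libraries is a natural extension of this calculation.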

Biological descriptors

There are a number of biologically relevant quantities that can be used as independent variables in a manner directly analogous to the chemical descriptors described above. Biological descriptors can be used in global analyses of microarray-derived transcription profiling data, or to interpret the results of a screen for biological activity in terms of the previously known activities of compounds in the library. Chromosomal location can also serve as a descriptor. For example, Wyrick et al used chromatin immunoprecipitation followed by hybridization to genomic DNA microarrays to identify autonomously replicating sequences (ARSs) in yeast cells. Using the chromosomal locations of the identified sequences as a descriptor, these authors determined that ARSs are overrepresented in subtelomeric and intergenic regions of chromosomes [ 23•• ].
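Over-representation of a category (such as a chromosomal region) among a list of identified sequences is often assessed with a one-sided hypergeometric test. Which test Wyrick et al used is not stated here, so treat this as a generic sketch. Given N sequences in the background, K of which fall in the category, and n identified sequences of which k fall in the category, the p-value is the probability of drawing k or more category members by chance:

P(X ≥ k) = Σ_{i=k}^{min(n,K)} C(K, i) C(N − K, n − i) / C(N, n)

```python
from math import comb


def enrichment_p_value(k, n, K, N):
    """One-sided hypergeometric p-value P(X >= k).

    N: background size (e.g. all genomic fragments on the array)
    K: background items in the category (e.g. subtelomeric fragments)
    n: items selected (e.g. fragments identified as ARSs)
    k: selected items in the category
    """
    total = comb(N, n)
    upper = min(n, K)
    tail = sum(comb(K, i) * comb(N - K, n - i) for i in range(k, upper + 1))
    return tail / total


# Hypothetical numbers: 1000 fragments, 100 subtelomeric; 50 identified, 15 of them subtelomeric.
print(enrichment_p_value(15, 50, 100, 1000))
```

The same test applies unchanged to functional annotation categories or any other binary descriptor.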

Properties can be calculated directly from DNA sequence information in a manner analogous to the calculation of physical descriptors for small molecules. For example, the fraction of guanine/cytosine base pairs (GC content) in promoter regions can be calculated directly from genomic DNA sequence. Konu et al found that gene expression levels were correlated with the GC content at the third codon position of the message [ 24 ]. Similar strategies can relate the presence of splice site sequences, promoter elements and transcription factor binding sites to gene expression level. For instance, Bernstein et al determined that binding sites for the transcription factor Ume6p were enriched upstream of genes that are induced in sin3 mutant yeast cells [ 25 ]. This type of global analysis correlates genomic sequence information with gene expression data.
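Sequence-derived descriptors of this kind are straightforward to compute. The sketch below assumes plain DNA strings (A, C, G, T in either case) and, for the third-position measure, a coding sequence that starts in frame at its first base; it is an illustration, not the pipeline Konu et al used.

```python
def gc_content(seq):
    """Fraction of G or C bases in a DNA sequence."""
    seq = seq.upper()
    if not seq:
        return 0.0
    return sum(base in "GC" for base in seq) / len(seq)


def gc3_content(coding_seq):
    """GC fraction at the third position of each complete codon.

    Assumes the reading frame starts at index 0; slicing [2::3] returns
    only third positions of complete codons, so a trailing partial codon is ignored.
    """
    return gc_content(coding_seq[2::3])


orf = "ATGGCCTAA"        # codons ATG, GCC, TAA
print(gc_content(orf))   # 4/9, about 0.444
print(gc3_content(orf))  # third positions G, C, A -> 2/3
```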

Some properties, such as gene function, may be linked to a DNA sequence through annotation. Other possible annotations include chromosomal location, protein interactions and co-regulated expression groups. Each of these descriptors can serve as an independent variable for global analyses. Using functional annotation categories, Bernstein et al determined that the expression of carbon metabolite and carbohydrate utilization genes was greater in yeast cells with an HDA1 deletion [ 25 ].

One can envisage constructing a descriptor vector for each gene used in a microarray experiment. Each sequence (eg, gene or chromosomal fragment) would have an associated value for GC content, the number of splice sites, the number and type of promoter elements, the number of binding sites for each of many transcription factors and a quantitative (perhaps binary) assignment for each functional annotation category. Once constructed, these vectors allow rapid analysis of the relationship between active and inactive genes for each of these descriptor categories. By applying the computational strategies described in the next section, it is possible to extract the relationship between, for example, the number of AP-1 binding sites in a gene promoter and the level of induced expression in an experiment. Moreover, such methods would permit the detection of non-linear and combinatorial relationships among these descriptors, eg, ‘stress-response genes with AP-1 binding sites and > 40% GC content in their promoter are enriched in response to stimulus X’. Finally, data from global analyses could be used to develop a predictive model to classify untested genes.
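A minimal sketch of such a per-gene descriptor vector follows. Everything specific here is an assumption for illustration: the `GeneRecord` structure, the two annotation categories, and the use of exact matches to the single-strand sequence `TGACTCA` (a commonly cited AP-1 site) as a stand-in for a proper binding-site model, which would normally use a position weight matrix and scan both strands. The last function expresses the example rule from the text as a filter over these vectors.

```python
from dataclasses import dataclass, field

# Hypothetical annotation categories and motif, chosen only for illustration.
CATEGORIES = ["stress_response", "carbon_metabolism"]
AP1_SITE = "TGACTCA"


@dataclass
class GeneRecord:
    name: str
    promoter: str  # upstream sequence, 5' to 3'
    annotations: set = field(default_factory=set)


def count_motif(seq, motif):
    """Count exact, possibly overlapping occurrences of motif on one strand."""
    seq, motif = seq.upper(), motif.upper()
    return sum(seq[i:i + len(motif)] == motif for i in range(len(seq) - len(motif) + 1))


def descriptor_vector(gene):
    """[promoter GC fraction, AP-1 site count, one 0/1 flag per category]."""
    promoter = gene.promoter.upper()
    gc = sum(b in "GC" for b in promoter) / len(promoter) if promoter else 0.0
    flags = [1 if c in gene.annotations else 0 for c in CATEGORIES]
    return [gc, count_motif(promoter, AP1_SITE)] + flags


def matches_example_rule(vector):
    """Stress-response gene, at least one AP-1 site, promoter GC content above 40%."""
    gc, ap1_sites, stress, _carbon = vector
    return stress == 1 and ap1_sites >= 1 and gc > 0.40


gene = GeneRecord("GENE1", "GGCTGACTCAGG", {"stress_response"})  # hypothetical gene
vec = descriptor_vector(gene)
print(vec, matches_example_rule(vec))
```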

Data analysis

It is important to make a distinction between two fundamentally different applications of high-throughput screening data. Such methods may be used simply to identify compounds exceeding a certain activity threshold (hits) or to identify a more comprehensive correlation between the measured activity, molecular structure and/or previously determined biological activity or mechanism. This distinction is important because the acceptable false positive and false negative rates for the two approaches are substantially different. In a ‘threshold’ screen, high false negative and false positive rates are acceptable because secondary screening of the hits is used to distinguish between true positives and false positives. Since the identification of true positives is the ultimate goal in a ‘threshold’ screening approach, false negatives are not a concern as long as a sufficient number of true positives is found. In a global analysis, however, the false positive and false negative rates must be minimized because all results are used in a quantitative or semi-quantitative analysis. Global analyses can be quite powerful but are more expensive in terms of time and money to perform, and may require the use of sophisticated computational methods ( vide infra ).

Analysis of screening data

Screening results typically exhibit a continuous range of activities, usually with an approximately Gaussian distribution. A cut-off value is chosen for the selection of hits, and the active elements are normally confirmed in a secondary assay. The cut-off criteria for determining hits may be based on absolute activity (eg, 2-fold activity versus control), on the distribution (eg, three standard deviations or more from the mean) or on a desired number of compounds to be retested. Once confirmed actives have been identified, it may be desirable to search for additional active elements by testing or retesting candidates that are related in form or function. In transcription profiling screens, retesting entails searching the original gene set for genes that are related to the active genes in sequence or function. The screen comes to its natural conclusion with the selection of a set of actives that can be pursued in subsequent experiments.
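The three kinds of cut-off described above can be sketched as simple filters over a list of activity values. The function names and default thresholds (2-fold, three standard deviations) are illustrative. The z-score filter flags deviations in either direction and uses the sample standard deviation; both are assumptions of this sketch.

```python
import statistics


def hits_by_fold_change(activities, control, fold=2.0):
    """Indices of compounds with at least `fold` times the control activity."""
    return [i for i, a in enumerate(activities) if a >= fold * control]


def hits_by_z_score(activities, n_sd=3.0):
    """Indices of compounds lying at least n_sd standard deviations from the mean.

    Two-sided and based on the sample standard deviation (assumptions).
    """
    mean = statistics.mean(activities)
    sd = statistics.stdev(activities)
    return [i for i, a in enumerate(activities) if abs(a - mean) >= n_sd * sd]


def hits_top_n(activities, n):
    """Indices of the n most active compounds, e.g. to fill a retest plate."""
    return sorted(range(len(activities)), key=lambda i: activities[i], reverse=True)[:n]


plate = [1.0, 2.5, 1.9, 4.0]
print(hits_by_fold_change(plate, control=1.0))  # [1, 3]
print(hits_top_n(plate, 2))                     # [3, 1]
```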

Global analyses

Various learning techniques have been used to generate hypotheses and form models of relationships between descriptors and biological activity. These techniques may be divided into two main categories: classification and clustering. For simplicity, we assume that the data to be analyzed are compound descriptors and that the classes of compounds are active and inactive.

The goal of a classifier is to produce a model that can separate new, untested compounds into classes, using a training set of already classified compounds. Classification routines attempt to discover the descriptors or sets of descriptors that distinguish the classes from one another. Neural networks, genetic algorithms and support vector machines attempt to discover regions in descriptor space that separate pre-defined classes; unknown compounds that are subsequently placed in these regions can be classified as active or inactive [ 26 – 28 ]. These techniques fit a model to the given classes by minimizing an error function based on the mismatch between the classifier's assignments and the known classes of the training compounds. One of the main issues in training is overfitting, in which the initial classes are learned so narrowly that no new members are admitted to a class. The learned model should be specific enough that it seldom misclassifies compounds from the original training set, but general enough to recognize new compounds that belong to a class.

Recursive partitioning and decision trees first find the single descriptor that best splits the active and inactive populations into two groups, and then successively find the next best descriptor to divide each newly formed group further. These are known as greedy algorithms because they choose the locally best split at every step but do not necessarily find the global optimum [ 29 ].
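The greedy step at the heart of recursive partitioning can be sketched as an exhaustive search for the single descriptor and threshold that best separate actives (1) from inactives (0). This sketch scores candidate splits by Gini impurity, which is one common criterion but an assumption here; the methods cited above may use other split statistics. Applying the same search to each resulting group, until the groups are pure or a depth limit is reached, yields the tree.

```python
def gini(labels):
    """Gini impurity of a list of 0/1 labels (0 for a pure group)."""
    if not labels:
        return 0.0
    p = sum(labels) / len(labels)
    return 2 * p * (1 - p)


def best_split(X, y):
    """Greedy search for (descriptor index, threshold, weighted Gini impurity).

    X: list of descriptor vectors; y: 0/1 activity labels.
    Rows with X[i][f] <= threshold go left, the rest go right.
    Returns None if no split separates the rows.
    """
    n = len(y)
    best = None
    for f in range(len(X[0])):
        for t in sorted({row[f] for row in X}):
            left = [y[i] for i in range(n) if X[i][f] <= t]
            right = [y[i] for i in range(n) if X[i][f] > t]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / n
            if best is None or score < best[2]:
                best = (f, t, score)
    return best


def grow_tree(X, y, depth=0, max_depth=3):
    """Recursively partition until groups are pure, unsplittable or max_depth is hit."""
    split = None
    if depth < max_depth and 0 < sum(y) < len(y):
        split = best_split(X, y)
    if split is None:
        return {"p_active": sum(y) / len(y)}
    f, t, _ = split
    left = [i for i, row in enumerate(X) if row[f] <= t]
    right = [i for i, row in enumerate(X) if row[f] > t]
    return {
        "descriptor": f,
        "threshold": t,
        "left": grow_tree([X[i] for i in left], [y[i] for i in left], depth + 1, max_depth),
        "right": grow_tree([X[i] for i in right], [y[i] for i in right], depth + 1, max_depth),
    }


X = [[1, 5], [2, 5], [3, 1], [4, 1]]  # two hypothetical descriptors per compound
y = [0, 0, 1, 1]
print(best_split(X, y))  # (0, 2, 0.0)
print(grow_tree(X, y))
```

Because each split is chosen on its own merits, a pair of descriptors that only separates the classes jointly can be missed, which is the sense in which the procedure is greedy.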

Statistical methods can also be used to form probability models or estimate the likelihood of particular descriptors forming the known classes. These approaches generally involve the use of the training set to form a probability model that generates both a classification and a probability of being in a class. Simple statistical methods include k-nearest neighbors and the Naïve Bayes classifier. Support vector machines are also examples of statistical classifiers.
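k-nearest neighbors is simple enough to sketch in a few lines: a query compound takes the majority class among the k training compounds closest to it in descriptor space, and the fraction of neighbors in that class serves as a crude probability. Euclidean distance and k = 3 are assumptions of this sketch; descriptor scaling and the choice of distance metric matter a great deal in practice.

```python
import math
from collections import Counter


def knn_predict(train_X, train_y, query, k=3):
    """Return (majority class, fraction of the k nearest neighbors in that class).

    Uses Euclidean distance (an assumption of this sketch).
    """
    nearest = sorted(range(len(train_X)), key=lambda i: math.dist(train_X[i], query))[:k]
    votes = Counter(train_y[i] for i in nearest)
    label, count = votes.most_common(1)[0]
    return label, count / len(nearest)


train_X = [[0, 0], [0, 1], [1, 0], [5, 5], [5, 6], [6, 5]]  # hypothetical descriptors
train_y = ["inactive"] * 3 + ["active"] * 3
print(knn_predict(train_X, train_y, [5.5, 5.5]))  # ('active', 1.0)
```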

The goal in clustering a dataset is to group similar data together. Clustering forms groups of compounds that maximize within-group similarity while minimizing similarity between groups. Clustering can be accomplished either by a supervised method, where the number of classes is known, or by unsupervised learning, where the data are not grouped into a fixed set of classes.

In many cases, classes produced by clustering can be used for classification: unknown compounds that group with predominantly active compounds have a higher probability of also being active [ 30 ]. One drawback to this strategy is that the higher hit rate applies only to the relatively small number of compounds that lie close to known hits. Furthermore, clustering techniques do not generate models of activity; such models must be deduced by expert analysis. Indeed, the descriptors that cluster compounds together may not be related to activity at all. As with classification, a variety of clustering algorithms is available. These include hierarchical methods, such as Ward’s clustering, and non-hierarchical methods, such as Jarvis-Patrick [ 31 ] and Self-Organizing Maps [ 32 ]. Examples of statistically based clustering include the use of a Bayesian neural network to cluster drugs and non-drugs [ 33 ] and the use of k-nearest neighbor analysis to cluster compounds at various stages of the screening process [ 34 ].
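The Jarvis-Patrick method is a shared-nearest-neighbor scheme. Two compounds are placed in the same cluster if each is among the other's k nearest neighbors and their neighbor lists share at least k_min members; clusters are the connected groups formed by these links. The sketch below follows that rule with Euclidean distance and a union-find structure. The parameter values are illustrative, and some implementations include each point in its own neighbor list, which shifts the shared-count threshold.

```python
import math


def jarvis_patrick(points, k=3, k_min=2):
    """Cluster labels (0, 1, ...) from the Jarvis-Patrick shared-neighbor rule.

    Euclidean distance and the default k, k_min are assumptions of this sketch.
    """
    n = len(points)
    neighbors = []
    for i in range(n):
        others = sorted((j for j in range(n) if j != i),
                        key=lambda j: math.dist(points[i], points[j]))
        neighbors.append(set(others[:k]))

    parent = list(range(n))  # union-find forest over point indices

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    for i in range(n):
        for j in range(i + 1, n):
            mutual = j in neighbors[i] and i in neighbors[j]
            if mutual and len(neighbors[i] & neighbors[j]) >= k_min:
                parent[find(i)] = find(j)

    # Relabel roots as 0, 1, ... in order of first appearance.
    labels = {}
    return [labels.setdefault(find(i), len(labels)) for i in range(n)]


points = [(0, 0), (0, 1), (1, 0), (1, 1), (10, 10), (10, 11), (11, 10), (11, 11)]
print(jarvis_patrick(points))  # [0, 0, 0, 0, 1, 1, 1, 1]
```

Because membership depends only on neighbor lists, Jarvis-Patrick needs no preset number of clusters, and compounds with no qualifying links end up as singletons.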

In a recent global analysis of both compound screening and gene expression data, Staunton et al used a statistical classifier to identify a correlation between gene expression and cell sensitivity to compounds. Sixty cancer cell lines were exposed to numerous compounds at the National Cancer Institute and classified as either sensitive or resistant to each compound. Using a Bayesian statistical classifier, Staunton et al showed that, for at least one third of the tested compounds, cell sensitivity can be predicted from the gene expression pattern of untreated cells [ 35•• ]. This example demonstrates the power of global analyses to identify subtle but important relationships among variables in large-scale datasets.

Global analyses can be performed on data from compound screening and transcription profiling experiments using similar computational methods. The goal of such analyses is to discern sometimes-subtle relationships within these datasets and to make correlations between large sets of multidimensional data. Recent advances are making global analyses increasingly feasible and powerful.

There are numerous future challenges in this area. Firstly, it will be valuable to identify robust chemical descriptors that best define global chemical space, as well as the ligand-rich regions therein. Standardized tests for evaluating classification methods would enable more meaningful comparisons. Finally, methods for automatic incorporation of publicly accessible data into such analyses would be enormously powerful, as the range of testable relationships would expand dramatically.

Acknowledgments

Brent R Stockwell, PhD, is a Whitehead Fellow and is supported in part by a Career Award at the Scientific Interface from the Burroughs Wellcome Fund.

•• of outstanding interest

• of special interest


Global economy - Statistics & Facts

The rise of China, unemployment and rising inflation: key insights.



Recommended statistics


Global gross domestic product (GDP) at current prices from 1985 to 2028 (in billion U.S. dollars)

Gross domestic product (GDP) of selected global regions 2022

Gross domestic product (GDP) of selected global regions at current prices in 2022 (in trillion U.S. dollars)

GDP of the main industrialized and emerging countries 2022

Gross domestic product (GDP) of the main industrialized and emerging countries in current prices in 2022 (in trillion U.S. dollars)

The 20 countries with the largest gross domestic product (GDP) in 2022 (in billion U.S. dollars)

Share of global regions in the gross domestic product 2022

Share of global regions in the gross domestic product (adjusted for purchasing power) in 2022

Global gross domestic product (GDP) per capita 2022

Global gross domestic product (GDP) per capita from 2012 to 2022, at current prices (in U.S. dollars)

Annual change in CPI 2015-2022, by country

Annual change in Consumer Price Index (CPI) in selected countries worldwide from 2015 to 2022


Global Purchasing Manager Index (PMI) of the industrial sector August 2023

Global Purchasing Manager Index (PMI) of the industrial sector from August 2021 to August 2023 (50 = no change)

Purchasing Managers Index (PMI) in developed and emerging countries 2020-2023

Manufacturing PMI (Industrial PMI) in key developed and emerging economies from January 2020 to December 2023 (50 = no change)

Global consumer confidence index 2020-2023

Global consumer confidence in developed and emerging countries from January 2020 to December 2023

Consumer confidence in developed and emerging countries 2023

Consumer confidence in developed and emerging countries in November 2023

Industrial production growth worldwide 2019-2023, by region

Global industrial production growth from January 2019 to October 2023, by region

Average annual wages in major developed countries 2008-2022

Average annual wages in major developed countries from 2008 to 2022 (in U.S. dollars)

Wage growth in developed countries 2019-2023

Wage growth in major developed countries from January 2020 to November 2023

Global policy uncertainty index monthly 2019-2023

Global economic policy uncertainty index from January 2019 to November 2023

Gross domestic product


Share of the main industrialized and emerging countries in the GDP 2022

Share of the main industrialized and emerging countries in the gross domestic product (adjusted for purchasing power) in 2022

Countries with the largest proportion of global gross domestic product (GDP) 2022

The 20 countries with the largest proportion of the global gross domestic product (GDP) based on Purchasing Power Parity (PPP) in 2022

Gross domestic product (GDP) per capita in the main industrialized and emerging countries

Gross domestic product (GDP) per capita in the main industrialized and emerging countries in current prices in 2022 (in U.S. dollars)

The 20 countries with the largest gross domestic product (GDP) per capita in 2022 (in U.S. dollars)

Countries with the lowest estimated GDP per capita 2023

The 20 countries with the lowest estimated gross domestic product (GDP) per capita in 2023 (in U.S. dollars)

Share of economic sectors in the global gross domestic product from 2012 to 2022

Share of economic sectors in the global gross domestic product (GDP) from 2012 to 2022

Share of economic sectors in the gross domestic product, by global regions 2022

Share of economic sectors in the gross domestic product (GDP) of selected global regions in 2022

Proportions of economic sectors in GDP in selected countries 2022

Proportions of economic sectors in the gross domestic product (GDP) in selected countries in 2022

Economic growth


Growth of the global gross domestic product (GDP) 2028

Growth of the global gross domestic product (GDP) from 1980 to 2022, with forecasts until 2028 (compared to the previous year)

Forecast on the GDP growth in selected world regions until 2028

Growth of the real gross domestic product (GDP) in selected world regions from 2018 to 2028 (compared to the previous year)

Gross domestic product (GDP) growth forecast in selected countries until 2028

Growth of the gross domestic product (GDP) in selected countries from 2018 to 2028 (compared to the previous year)

Countries with the highest growth of the gross domestic product (GDP) 2022

The 20 countries with the highest growth of the gross domestic product (GDP) in 2022 (compared to the previous year)

The 20 countries with the greatest decrease of the gross domestic product in 2022

The 20 countries with the greatest decrease of the gross domestic product (GDP) in 2022 (compared to the previous year)

GDP growth in the leading industrial and emerging countries 2nd quarter 2023

Growth of the real gross domestic product (GDP) in the leading industrial and emerging countries from 2nd quarter 2021 to 2nd quarter 2023 (compared to the previous quarter)

Unemployment


Number of unemployed persons worldwide 1991-2024

Number of unemployed persons worldwide from 1991 to 2024 (in millions)

Global unemployment rate 2003-2022

Global unemployment rate from 2003 to 2022 (as a share of the total labor force)

Unemployed persons in selected world regions 2024

Number of unemployed persons in selected world regions in 2021 and 2022, up to 2024 (in millions)

Unemployment rate in selected world regions 2022

Unemployment rate in selected world regions between 2017 and 2022

Youth unemployment rate in selected world regions 2022

Youth unemployment rate in selected world regions from 2000 to 2022

Monthly unemployment rate in industrial and emerging countries August 2023

Unemployment rate in the leading industrial and emerging countries from August 2022 to August 2023

Breakdown of unemployment rates in G20 countries 2023

Unemployment rate of G20 countries in 2023

Global trade


Monthly change in goods trade globally 2018-2023

Change in global goods trade volume from January 2018 to October 2023

Leading export countries worldwide in 2022 (in billion U.S. dollars)

Leading import countries worldwide 2022

Leading import countries worldwide in 2022 (in billion U.S. dollars)

The 20 countries with the highest trade surplus in 2022

The 20 countries with the highest trade surplus in 2022 (in billion U.S. dollars)

The 20 countries with the highest trade balance deficit in 2022

The 20 countries with the highest trade balance deficit in 2022 (in billion U.S. dollars)

Trade: export value worldwide 1950-2022

Trends in global export value of trade in goods from 1950 to 2022 (in billion U.S. dollars)

Global merchandise imports index 2019-2023, by region

Global merchandise imports index from January 2019 to November 2023, by region

Global merchandise exports index 2019-2023, by region

Global merchandise exports index from January 2019 to November 2023, by region


Global inflation rate from 2000 to 2022, with forecasts until 2028 (percent change from previous year)

Inflation rate in selected global regions in 2022

Inflation rate in selected global regions in 2022 (compared to previous year)

Monthly inflation rates in developed and emerging countries 2021-2024

Monthly inflation rates in developed and emerging countries from January 2021 to January 2024 (compared to the same month of the previous year)

Inflation rate of the main industrialized and emerging countries 2022

Estimated inflation rate of the main industrialized and emerging countries in 2022 (compared to previous year)

Countries with the highest inflation rate 2022

The 20 countries with the highest inflation rate in 2022 (compared to the previous year)

Countries with the lowest inflation rate 2022

The 20 countries with the lowest inflation rate in 2022 (compared to the previous year)


Global Data Analytics

The first edition of ESOMAR’s Global Data Analytics is a global analysis of the size and segmentation of the insights industry’s evolving data analytics sector.

Part of the Global Insights Overview package.


At a glance

The Global Data Analytics 2023 is the first edition of an annual series that will examine the size, characteristics and performance of an evolving sector of the insights industry: the data analytics sector. The report’s findings are based on data collected by national research associations, leading companies, independent analysts and ESOMAR representatives from 15 countries, complemented by ESOMAR’s independent size estimates.

In the upcoming editions, ESOMAR intends to leverage its global presence by working with its partner associations worldwide to survey this sector of the insights industry in more detail.

What can I find inside?

The Global Data Analytics report is released on the heels of a milestone: for the first time, the turnover of the data analytics sector has surpassed that of the established market research sector. In 2022, the global insights industry grew from US$ 119 billion to US$ 129 billion.

ESOMAR attributes 39% of the global insights industry’s turnover to companies primarily engaged in data analytics, including DaaS, SaaS and other research platforms, compared to 36% for market research firms (24% of reporting firms). In 2023, the data analytics sector is expected to continue this trend, reaching a turnover of US$ 56 billion in an insights industry projected to grow to US$ 141 billion.

The report covers:

Global and regional overview of the Data Analytics sector

Experts’ views on the sector’s expansion

Self-regulation and its role in data analysis

Insiders’ perspectives on the sector

Overview of the Top 20 Data Analytics companies

Want to get better insight into the report?

Here is a selection of articles based on the Global Data Analytics.

Understanding Europe’s Data Analytics $1bn growth

The $36bn hegemony of Data Analytics in the US

Insights in Asia Pacific, set to dominate in 2024

Rest of the Americas’ journey through the inflationary hurdles

Inflation curtails promising Middle Eastern and African prospects


- Data Descriptor

- Open access

- Published: 24 January 2024

A 31-year (1990–2020) global gridded population dataset generated by cluster analysis and statistical learning

- Luling Liu 1,2,

- Xin Cao ORCID: orcid.org/0000-0001-5789-7582 1,2,

- Shijie Li ORCID: orcid.org/0000-0002-1583-4951 1,2 &

- Na Jie 1,2

Scientific Data volume 11, Article number: 124 (2024)


- Social anthropology

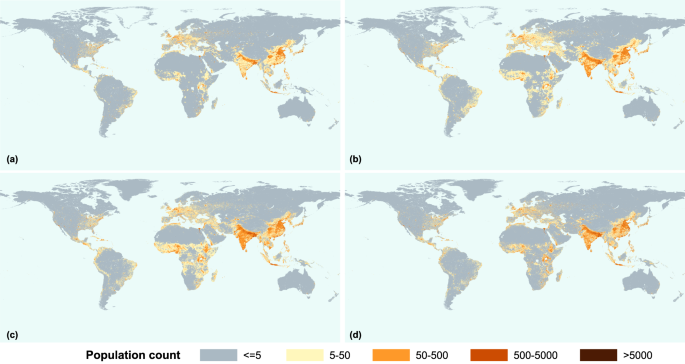

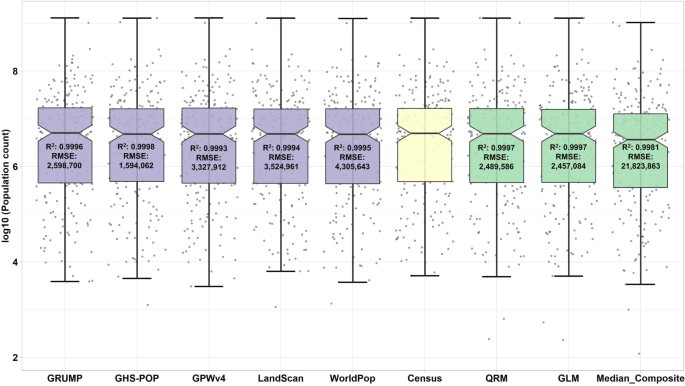

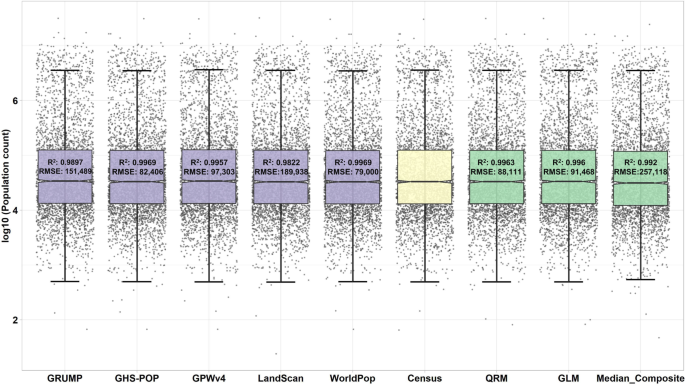

Continuously monitoring global population spatial dynamics is crucial for implementing effective policies related to sustainable development, including epidemiology, urban planning, and global inequality. However, existing global gridded population data products lack consistent population estimates, making them unsuitable for time-series analysis. To address this issue, this study designed a data fusion framework based on cluster analysis and statistical learning approaches, which led to the generation of a continuous global gridded population dataset (GlobPOP). The GlobPOP dataset was evaluated through two-tier spatial and temporal validation to demonstrate its accuracy and applicability. The spatial validation results show that the GlobPOP dataset is highly accurate. The temporal validation results also reveal that the GlobPOP dataset performs consistently well across eight representative countries and cities despite their unique population dynamics. With the availability of GlobPOP datasets in both population count and population density formats, researchers and policymakers can leverage the new dataset to conduct time-series analysis of the population and explore the spatial patterns of population development at global, national, and city levels.


Background & Summary

The world’s population is estimated at over 8 billion and is projected to reach around 8.5 billion by 2030 1 . As the population keeps growing, long-term monitoring of its spatial dynamics becomes more important for effective sustainable-development policies and initiatives. The United Nations 2 set 17 Sustainable Development Goals and 169 targets in 2015, and about half of their indicators need accurate, spatially explicit demographic data. The Sustainable Development Goals emphasize ‘leaving no one behind’. Meeting that aim requires spatiotemporally consistent gridded population data that can identify areas and groups vulnerable to poverty, disease, and other development challenges, so that interventions can be better targeted. A continuous gridded population dataset gives more spatial detail and allows pixel-scale analysis of how the relationship between humans and nature changes unevenly over time. Such data are recognized as an essential source for many applications, including epidemiology, urban planning, environmental management, assessment of risks to vulnerable populations, energy crises, global inequities, and tracking progress toward the Sustainable Development Goals (SDGs) 3 , 4 , 5 , 6 , 7 , 8 , 9 , 10 .

Gridded population data are originally derived from census data. Census data are usually collected through formal enumeration, although surveys and other methods may also be used. The census tables for administrative units or enumeration areas are first converted to vector format and then reallocated into raster grids 11 , 12 . A raster grid is a series of cells arranged in rows and columns; each cell represents a geographic area and holds the population within that area. Top-down gridded population data are produced mainly by two methods, area-weighted mapping and dasymetric mapping. Bottom-up population mapping methods are used when census data are not available. Area-weighted mapping assumes that population is evenly distributed within each administrative unit. It gives each grid cell a share of the unit's population proportional to the share of the unit's area that the cell covers. This method is simple and easy to implement, but it may not reflect the true population distribution, especially where population density is heterogeneous 13 . Dasymetric mapping assumes a relationship between population and various geographic and land cover characteristics. It uses ancillary data to decide where population should be assigned and how much. This method can give more accurate estimates of population distribution, but it needs more detailed ancillary data and more expertise to implement.
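A toy example makes the difference between the two top-down approaches concrete. The Python sketch below distributes one administrative unit's census count among the grid cells it overlaps. It does this first by area weighting, and then by a simple dasymetric rule that multiplies each cell's overlap area by an ancillary weight (here a hypothetical built-up share). The function names, the fallback rule, and the input values are assumptions made for illustration. They do not reproduce the procedure of any particular product.

```python
from __future__ import annotations

from typing import Sequence


def area_weighted(pop: float, overlap_km2: Sequence[float]) -> list[float]:
    """Spread pop over cells in proportion to how much of the unit each cell covers.

    This encodes the even-distribution assumption of area-weighted mapping.
    """
    total = sum(overlap_km2)
    if total <= 0:
        raise ValueError("the unit must overlap at least one cell")
    return [pop * a / total for a in overlap_km2]


def dasymetric(pop: float, overlap_km2: Sequence[float],
               ancillary: Sequence[float]) -> list[float]:
    """Spread pop in proportion to overlap area times an ancillary weight.

    `ancillary` stands in for any covariate (e.g. built-up share or a land
    cover class weight). If it is zero everywhere, this sketch falls back to
    area weighting (a hypothetical choice, not a standard rule).
    """
    if len(overlap_km2) != len(ancillary):
        raise ValueError("one ancillary weight is needed per cell")
    scores = [a * w for a, w in zip(overlap_km2, ancillary)]
    total = sum(scores)
    if total <= 0:
        return area_weighted(pop, overlap_km2)
    return [pop * s / total for s in scores]


# Hypothetical unit of 1,000 people overlapping four equal-sized cells.
overlap = [1.0, 1.0, 1.0, 1.0]
built_up_share = [0.6, 0.3, 0.1, 0.0]
print(area_weighted(1000, overlap))               # even spread: 250 per cell
print(dasymetric(1000, overlap, built_up_share))  # roughly 600, 300, 100, 0
```

Both functions preserve the unit's census total, which is the defining constraint of top-down methods. They differ only in how that total is distributed among cells.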

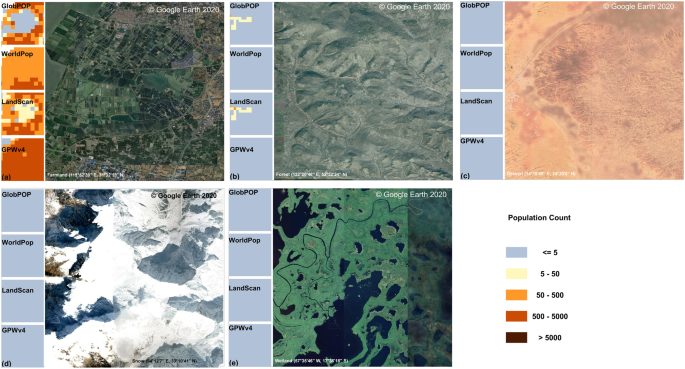

There are five long time-series of global gridded population data products with either density or count measures, including the Global Human Settlements Layer Population (GHS-POP), the Global Rural Urban Mapping Project (GRUMP), the Gridded Population of the World Version 4 (GPWv4), the LandScan Population datasets and the WorldPop datasets, all with a spatial resolution of 30 arcseconds (about 1 km at the equator). Nonetheless, previous research has identified some limitations associated with these datasets.
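The "30 arcseconds (about 1 km at the equator)" figure comes from spherical geometry. A 30-arcsecond cell spans about 0.93 km north-south at every latitude, while its east-west width shrinks with the cosine of latitude. The Python sketch below computes cell dimensions and area for such a cell. It assumes a spherical Earth with a mean radius of about 6,371 km, which is an approximation; an ellipsoidal model such as WGS84 is more precise. The sketch also shows why converting between the count and density formats requires a latitude-dependent cell area. The function names are hypothetical.

```python
from __future__ import annotations

import math

# Assumption: spherical Earth with mean radius 6371.0088 km.
EARTH_RADIUS_KM = 6371.0088


def cell_dimensions_km(lat_deg: float, arcsec: float = 30.0) -> tuple[float, float]:
    """Return (east-west width, north-south height) of a lat/lon cell centred at lat_deg."""
    side_rad = math.radians(arcsec / 3600.0)
    height = EARTH_RADIUS_KM * side_rad
    width = height * math.cos(math.radians(lat_deg))
    return width, height


def cell_area_km2(lat_deg: float, arcsec: float = 30.0) -> float:
    """Area of a cell centred at lat_deg, exact on the sphere."""
    side_deg = arcsec / 3600.0
    south = math.radians(lat_deg - side_deg / 2.0)
    north = math.radians(lat_deg + side_deg / 2.0)
    return EARTH_RADIUS_KM ** 2 * math.radians(side_deg) * (math.sin(north) - math.sin(south))


def count_to_density(count: float, lat_deg: float, arcsec: float = 30.0) -> float:
    """Convert a cell's population count to persons per km^2."""
    return count / cell_area_km2(lat_deg, arcsec)


for lat in (0, 30, 60):
    w, h = cell_dimensions_km(lat)
    print(f"lat {lat:>2}: {w:.3f} km x {h:.3f} km, area {cell_area_km2(lat):.3f} km^2")
```

At 60° latitude a cell is about half as wide as at the equator. The same count therefore corresponds to roughly twice the density there.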

First of all, no continuous long-term gridded population dataset is currently available at a spatial resolution of approximately 1 km, particularly before 2000. Among GHS-POP, GRUMP, and GPWv4, the shortest time interval is five years. Continuous gridded population maps after 2000 are available only from the other two datasets (LandScan and WorldPop). However, LandScan's methods and metadata are updated every year, especially for the 2000s 14 , so successive releases are not strictly comparable. These products model the correlation between predictive factors and population at the administrative-unit level, and then use that model to predict gridded population. The accuracy of population spatialization therefore depends largely on the accuracy of the input factors and on the population allocation method 8 , 15 . In addition, the models are trained at the administrative-unit scale but applied at the grid scale. This mismatch in scale between training and prediction lowers the accuracy of the overall estimate 11 , 16 .
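Three of the products are released only at five-year intervals, so annual layers have to be interpolated, and the article's pre-processing step uses linear gap-filling for this. The NumPy sketch below shows a generic version: per-pixel linear interpolation between the two bracketing epochs. The function name and toy grids are hypothetical, and the sketch does not reproduce the exact pre-processing used to build GlobPOP.

```python
import numpy as np


def linear_gap_fill(epoch_years, epoch_grids, target_years):
    """Linearly interpolate gridded population between epochs, pixel by pixel.

    epoch_years:  strictly increasing years with data, e.g. [1990, 1995, 2000]
    epoch_grids:  array-like of shape (n_epochs, rows, cols)
    target_years: years to estimate; they must lie within the epoch range
                  (this sketch deliberately does not extrapolate)
    """
    years = np.asarray(epoch_years, dtype=float)
    grids = np.asarray(epoch_grids, dtype=float)
    if years.ndim != 1 or len(years) < 2 or np.any(np.diff(years) <= 0):
        raise ValueError("need at least two strictly increasing epoch years")
    if grids.shape[0] != len(years):
        raise ValueError("one grid is needed per epoch year")

    filled = []
    for year in target_years:
        if not years[0] <= year <= years[-1]:
            raise ValueError(f"{year} is outside {years[0]:.0f}-{years[-1]:.0f}")
        # Index of the epoch at or before `year`, clipped so that i + 1 exists.
        i = int(np.clip(np.searchsorted(years, year, side="right") - 1, 0, len(years) - 2))
        t = (year - years[i]) / (years[i + 1] - years[i])
        filled.append((1.0 - t) * grids[i] + t * grids[i + 1])
    return np.stack(filled)


# Toy 2 x 2 grids for two epochs five years apart (hypothetical counts).
g2000 = [[0.0, 10.0], [20.0, 30.0]]
g2005 = [[5.0, 10.0], [30.0, 30.0]]
annual = linear_gap_fill([2000, 2005], [g2000, g2005], range(2000, 2006))
print(annual[3])  # estimate for 2003
```

This method reproduces the observed epochs exactly but smooths out any abrupt change that happens between them.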

Secondly, the reliability and uncertainty of population data products are usually described in documentation or validated in specific countries and regions. The most common sources of uncertainty are the methodology and the ancillary data. Methodological uncertainty can arise from spatial autocorrelation produced by distributing population with equal weights, which leads to overestimation of the population 12 , 17 . Problems with ancillary data include common inaccuracies in land cover data, whose accuracy typically ranges from 70% to 85% 18 . Other ancillary sources, such as nighttime light data, can also introduce cumulative errors into gridded population data through saturation effects, blooming effects, and inter-annual inconsistencies 19 . These errors undermine the reliability of the ancillary data and propagate into the final population estimates, which further increases the uncertainty of the results.

Last but not least, the global applicability of gridded population data has received limited attention. The five gridded population products are used extensively in global-scale studies, but their accuracy and suitability for different regions and situations have not been fully evaluated. Efforts to validate and compare the precision of these products are ongoing, although the findings are often restricted to specific countries or regions. For example, Archila Bustos et al. 14 used Sweden, where population changes slowly, as a test case and compared five population datasets against statistical data from 1990–2015. They found that no dataset performed consistently best across situations, and that accuracy differed among datasets in uninhabited areas.
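Comparisons of this kind usually score each product against reference statistics for the same units. The NumPy sketch below computes three widely used error measures: mean absolute error (MAE), root mean square error (RMSE), and the coefficient of determination R². The choice of metrics and the example figures are assumptions made for illustration. They are not the measures or data used by Archila Bustos et al.

```python
import numpy as np


def validation_metrics(estimated, reference):
    """Return MAE, RMSE and R^2 of estimated vs. reference population counts."""
    est = np.asarray(estimated, dtype=float)
    ref = np.asarray(reference, dtype=float)
    if est.shape != ref.shape:
        raise ValueError("estimates and reference must have the same shape")
    err = est - ref
    ss_res = float(np.sum(err ** 2))
    ss_tot = float(np.sum((ref - ref.mean()) ** 2))
    return {
        "MAE": float(np.mean(np.abs(err))),
        "RMSE": float(np.sqrt(np.mean(err ** 2))),
        # R^2 is undefined when the reference values have no variance.
        "R2": 1.0 - ss_res / ss_tot if ss_tot > 0 else float("nan"),
    }


# Hypothetical counts for four administrative units.
reference = [100, 200, 300, 400]
products = {
    "product_a": [110, 190, 310, 390],  # small errors everywhere
    "product_b": [100, 200, 300, 430],  # exact except for one large miss
}
scores = {name: validation_metrics(est, reference) for name, est in products.items()}
for name, s in scores.items():
    print(name, {k: round(v, 3) for k, v in s.items()})

# The ranking depends on the metric: MAE favours product_b, RMSE favours product_a.
best_by_mae = min(scores, key=lambda n: scores[n]["MAE"])
best_by_rmse = min(scores, key=lambda n: scores[n]["RMSE"])
```

Even with identical reference data, the "best" product can change with the chosen metric. This is one way in which no single dataset comes out consistently best.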

Population data products are fundamental to much research and many applications, yet long-term, consistently accurate gridded population data for time-series analysis are lacking. Assessments of how applicable each product is continue to appear. They show that each product suits particular settings and, in some cases, is highly accurate 4 , 20 . These findings raise a research question: can the five multi-source population datasets be integrated, using a statistical learning approach that draws on the strengths of each, to produce a new product suitable for long time-series analysis at the global grid scale?

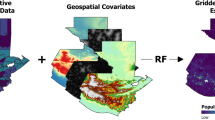

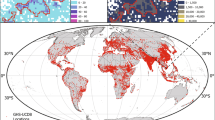

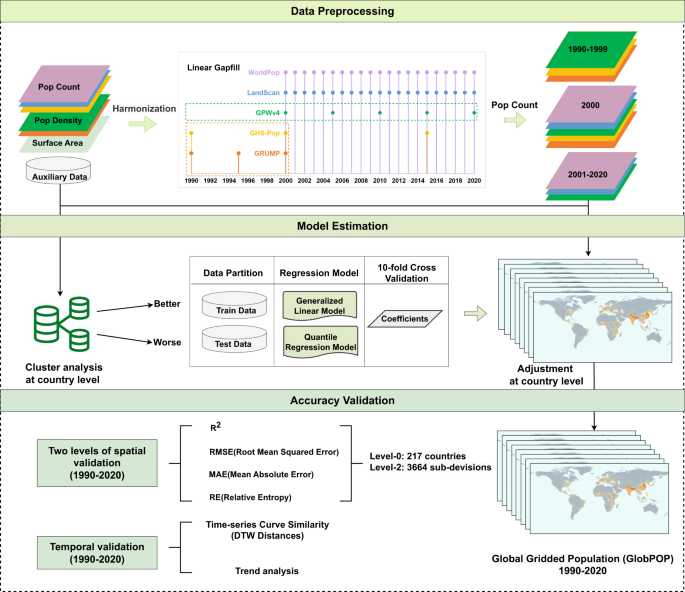

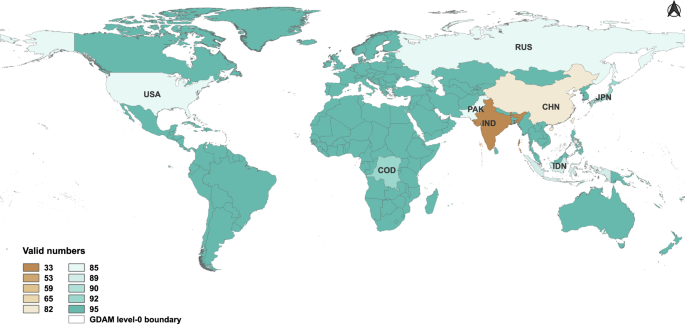

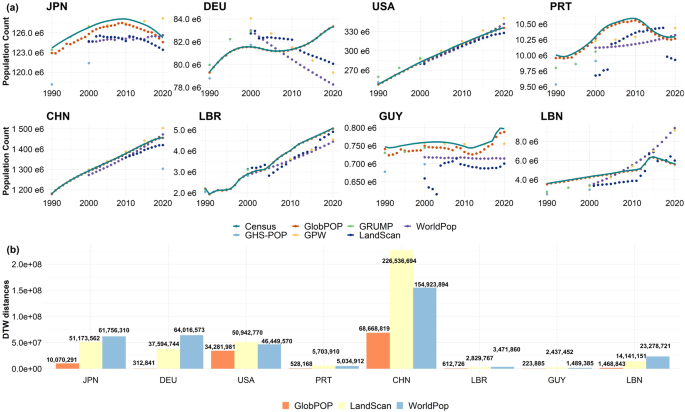

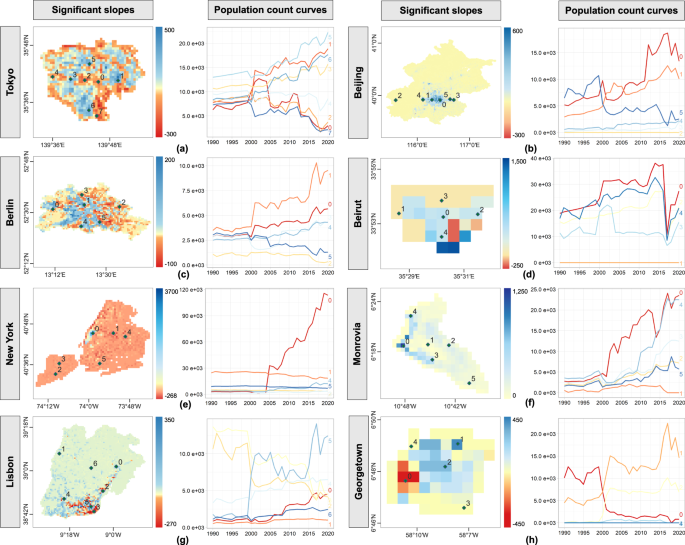

Hence, this study proposed a data fusion framework that generates a continuous global gridded population dataset (GlobPOP) for 1990 to 2020 from the five existing products. As shown in Fig. 1 , the framework has three parts. The first part was pre-processing, which harmonized the data by converting all population products to a uniform format and filling temporal gaps by linear interpolation. The second part built the model and produced estimates using cluster analysis and statistical learning. The cluster analysis revealed how the performance of each population dataset differs across countries. The estimation model was then built by training regression parameters in the regions where the datasets performed better. The third part was accuracy validation at two levels, spatial and temporal. Finally, we examined the sensitivity of the model and discussed the adaptability of the new data product at the pixel scale.

Fig. 1: Workflow of the estimation and validation of the global gridded population (GlobPOP).
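The sketch below illustrates the second stage of the framework by pairing a very simple clustering step with a least-squares fit. First, it splits regions into a "well-estimated" group and a "poorly-estimated" group by running two-cluster k-means on each region's mean relative error across the input products. Then it fits linear weights for combining the products, using the well-estimated group only. This is a hypothetical, simplified stand-in written for illustration. It is not the clustering method or regression model used to produce GlobPOP, and all names and data are invented.

```python
import numpy as np


def two_means_1d(values, iters=50):
    """Minimal two-cluster k-means (Lloyd's algorithm) on a 1-D array.

    Centroids start at the min and max, so label 0 is the low-value cluster.
    """
    v = np.asarray(values, dtype=float)
    centroids = np.array([v.min(), v.max()])
    labels = np.zeros(len(v), dtype=int)
    for _ in range(iters):
        labels = np.abs(v[:, None] - centroids[None, :]).argmin(axis=1)
        new = np.array([v[labels == k].mean() if np.any(labels == k) else centroids[k]
                        for k in range(2)])
        if np.allclose(new, centroids):
            break
        centroids = new
    return labels, centroids


def fit_fusion_weights(product_estimates, reference):
    """Cluster regions by error, then fit combination weights on the better cluster.

    product_estimates: (n_regions, n_products) population estimates per region
    reference:         (n_regions,) reference (e.g. census) population per region
    Returns (weights, boolean mask of the regions used for training).
    """
    X = np.asarray(product_estimates, dtype=float)
    y = np.asarray(reference, dtype=float)
    # Mean relative error of the input products in each region.
    rel_err = np.mean(np.abs(X - y[:, None]) / y[:, None], axis=1)
    labels, centroids = two_means_1d(rel_err)
    good = labels == int(np.argmin(centroids))
    # Least-squares weights (no intercept), trained only on well-estimated regions.
    weights, *_ = np.linalg.lstsq(X[good], y[good], rcond=None)
    return weights, good


# Invented data: two products (columns) in six regions (rows).
estimates = [[100, 120], [200, 180], [300, 340], [400, 360], [300, 20], [20, 600]]
reference = [110, 190, 320, 380, 100, 200]
weights, used = fit_fusion_weights(estimates, reference)
fused = np.asarray(estimates, dtype=float) @ weights  # fused estimate for every region
print("weights:", weights.round(3), "training regions:", used)
```

Training only on regions where the inputs already agree with the reference data keeps badly estimated regions from distorting the weights. The fitted weights are then applied everywhere, including regions without reliable reference data.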

In this section, we describe the input data and the data fusion framework used to produce the global gridded population data product.

This section summarizes the five global population data products used to produce the continuous gridded population. Table 1 details the original input population data sources.

GPWv4 is the only dataset that applies area weighting to national census data for each year. A water body mask is applied before the weighting so that no population is allocated to water bodies or to snow- and ice-covered areas 21 . Its first limitation is the assumption that population is evenly distributed within administrative boundaries, which makes it more accurate for smaller input units than for larger ones 22 . Second, it is affected by interpolation, particularly where population changes dramatically over short periods, and this can lead to population underestimation 23 .

GHS-POP population data are produced by binary dasymetric mapping. The population counts come from the GPWv4 UN-adjusted population dataset at the administrative-district level. The ancillary data are a gridded built-up layer in which each cell records the percentage of its area covered by built-up surfaces. 95% of the population data is allocated to grid cells in proportion to built-up density using an area-weighted approach 24 . When an administrative district is smaller than a 250 m grid cell, its entire population is aggregated into a single cell, which can shift the spatial distribution of population into adjacent cells. GHS-POP reallocates population according to built-up density, and the built-up layer does not distinguish residential from non-residential use. As a result, population may be assigned to non-residential built-up areas such as commercial, industrial, and recreational zones 24 .

GRUMP builds on GPWv3 (version 3) to produce improved gridded population data. It redistributes population to urban and rural areas with a binary mapping method, and it separates urban from rural areas mainly with nighttime light data such as DMSP. Because urban areas are delineated from nighttime lights, their population can be overestimated. The ‘blooming’ effect of nighttime lights makes lit areas appear larger than they are, while poorly electrified or unelectrified settlements cannot be detected at all, so their population is underestimated. Moreover, GPWv3 is an older version and less accurate than GPWv4, so GRUMP is also less accurate than GPWv4 in some regions 12 .